The nuts and bolts of SEO for SPAs (Single-Page Applications)

Historically, web developers have used HTML for content, CSS for styling, and JavaScript (JS) for interactive elements. JS enables the addition of features like pop-up dialog boxes and expandable content on web pages. Nowadays, over 98% of all sites use JavaScript due to its ability to modify web content based on user actions.

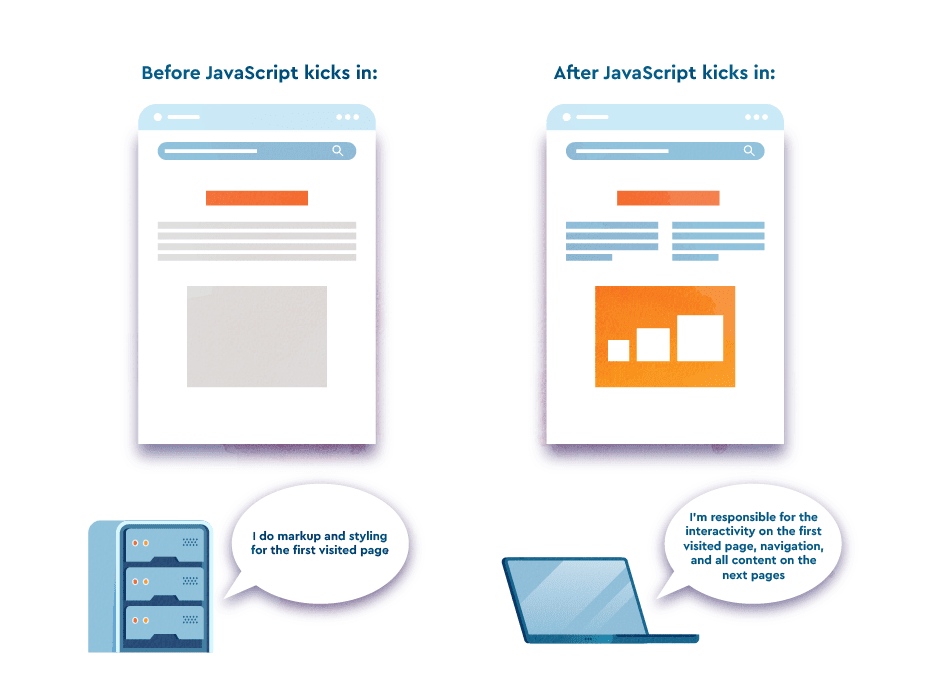

A relatively new trend in incorporating JS into websites is the adoption of single-page applications. Unlike traditional websites, which request their resources (HTML, CSS, JS) from the server each time a page is needed, SPAs only require an initial load and do not continue to burden the server. Instead, the browser handles all the processing.

This leads to faster websites, which is good because studies show that online shoppers expect websites to load within three seconds. The longer the load takes, the fewer customers will stay on the site. Adopting the SPA approach can be a good solution to this problem, but it can also be a disaster for SEO if done incorrectly.

In this post, we’ll discuss how SPAs are made, examine the challenges they present for optimization, and provide guidance on how to do SPA SEO properly. Get SPA SEO right and search engines will be able to understand your SPAs and rank them well.


SPA in a nutshell

A single-page application, or SPA, is a specific JavaScript-based technology for website development that doesn’t require further page loads after the first page view load. React, Angular, and Vue are the most popular JavaScript frameworks used for building SPAs. They mostly differ in terms of supported libraries and APIs, but all three deliver fast client-side rendering.

An SPA greatly enhances site speed by cutting most of the round trips between the server and browser. But search engines are not so thrilled about this JavaScript trick. The issue is that search engines don’t interact with the site the way users do, so content that loads in response to interaction may never become accessible to them. If a search engine doesn’t process the dynamically added content, it is left with a blank page yet to be filled.

End users benefit from SPA technology because they can easily navigate through web pages without having to deal with extra page loads and layout shifts. Because single-page applications cache their resources locally after the initial request, users can continue browsing them even with an unstable connection. Despite the extra SEO effort it demands, the technology’s benefits ensure its enduring presence.

Examples of SPAs

Many high-profile websites are built with single-page application architecture. Examples of popular ones include:

- Google Maps

Google Maps allows users to view maps and find directions. When a user initially visits the site, a single page is loaded, and further interactions are handled dynamically through JavaScript. The user can pan and zoom the map, and the application will update the map view without reloading the page.

- Airbnb

Airbnb is a popular travel booking site that uses a single page design that dynamically updates as users search for accommodations. Users can filter search results and explore various property details without the need to navigate to new pages.

- Facebook

When users log in to Facebook, they are presented with a single page that allows them to interact with posts, photos, and comments, eliminating the need to refresh the page.

- Trello

Trello is a web-based project management tool powered by SPA. On a single page, you can create, manage, and collaborate on projects, cards, and lists.

- Spotify

Spotify is a popular music streaming service. It lets you browse, search, and listen to music on a single page. No need for reloading or switching between pages.

Is a single-page application good for SEO?

Yes, if you implement it wisely.

SPAs provide a seamless and intuitive user experience. They don’t require the browser to reload the entire page when navigating between different sections. Users can enjoy a fast browsing experience. Users are also less likely to be distracted or frustrated by page reloads or interruptions in the browsing experience. So their engagement can be higher.

The SPA approach is also popular among web developers as it provides high-speed operation and rapid development. Developers can apply this technology to create different platform versions based on ready-made code. This speeds up the desktop and mobile application development process, making it more efficient.

While SPAs can offer numerous benefits for users and developers, they also present several challenges for SEO. As search engines traditionally rely on HTML content to crawl and index websites, it can be challenging for them to access and index the content on SPAs that rely heavily on JavaScript. This can result in crawlability and indexability issues.

This approach tends to be a good one for both users and SEO, but you must take the right steps to ensure your pages are easy to crawl and index. With the proper single page app optimization, your SPA website can be just as SEO-friendly as any traditional website.

In the following sections, we’ll go over how to optimize SPAs.

Why it’s hard to optimize SPAs

Before JS became dominant in web development, search engines only crawled and indexed text-based content from HTML. As JS was becoming more and more popular, Google recognized the need to interpret JS resources and understand pages that rely on them. Google’s search crawlers have made significant improvements over the years, but there are still a lot of issues that persist regarding how they perceive and access content on single page applications.

While there is little information available on how other search engines perceive single-page applications, it’s safe to say that none of them are crazy about JavaScript-dependent websites. If you’re targeting search platforms beyond Google, you’re in quite a pickle. A 2017 Moz experiment revealed that only Google and, surprisingly, Ask were able to crawl JavaScript content, while all other search engines remained totally blind to JS.

Currently, no search engine, apart from Google, has made any noteworthy announcements regarding efforts to better understand JS and single-page application websites. But some official recommendations do exist. For example, Bing makes the same suggestions as Google, promoting server-side pre-rendering—a technology that allows bingbot (and other crawlers) to access static HTML as the most complete and comprehensible version of the website.

Crawling issues

HTML, which is easily crawlable by search engines, doesn’t contain much information on an SPA. All it contains is a reference to an external JavaScript file in the src attribute of a <script> tag. The browser runs the script from this file, and the content is then loaded dynamically. If the crawler fails to perform the same operation, it sees an empty page.
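To make the empty-page problem concrete, here is a minimal sketch of what is left for a crawler that never executes JavaScript. The shell markup, the `/bundle.js` path, and the `visibleTextWithoutJs` helper are hypothetical, and the regex-based tag stripping is a simplification rather than how any real crawler parses HTML.

```javascript
// A typical SPA shell: an empty mount point plus a script reference.
// The markup and the /bundle.js path are made up for illustration.
const spaShell = `
<!doctype html>
<html>
  <head><title>My App</title></head>
  <body>
    <div id="root"></div>
    <script src="/bundle.js"></script>
  </body>
</html>`;

// Rough approximation of the text left in <body> when scripts never run.
function visibleTextWithoutJs(html) {
  const body = (html.match(/<body[^>]*>([\s\S]*?)<\/body>/i) || ["", ""])[1];
  return body
    .replace(/<script[\s\S]*?<\/script>/gi, "") // drop scripts and their code
    .replace(/<[^>]+>/g, "")                    // drop the remaining tags
    .trim();
}

console.log(JSON.stringify(visibleTextWithoutJs(spaShell))); // prints ""
```

The same helper returns real text for a page whose content is already present in the HTML, which is the difference the rendering techniques later in this post aim to close.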

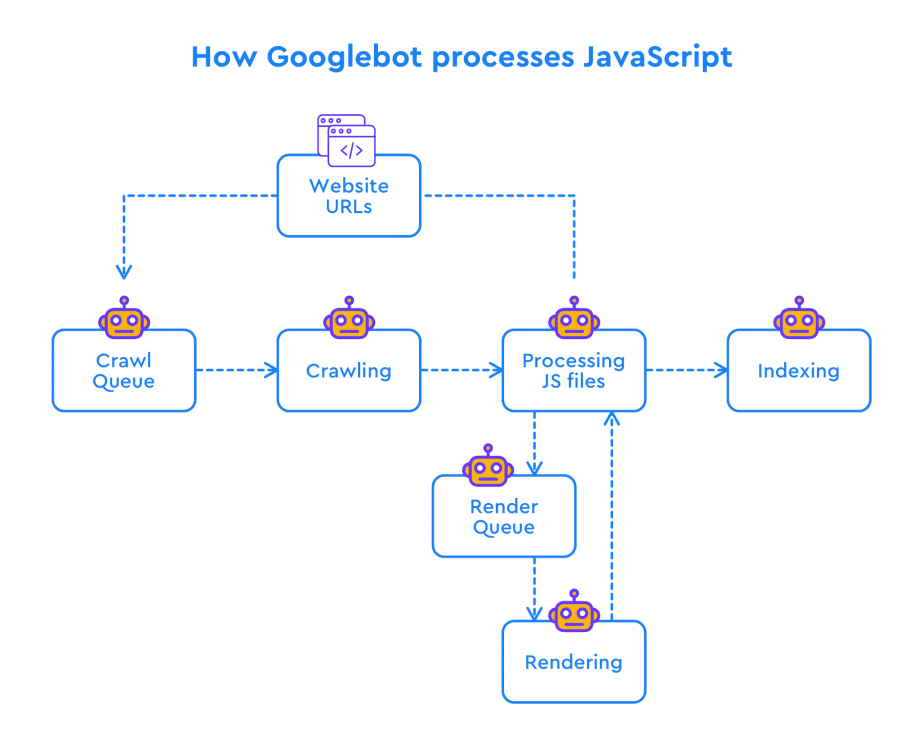

Back in 2014, Google announced that it was improving its ability to understand JS pages, but it also admitted that there were tons of blockers preventing it from indexing JS-rich websites. During the Google I/O ’18 series, Google analysts discussed the concept of two waves of indexing for JavaScript-based sites. This means that Googlebot first indexes the initial HTML and then re-renders the content once it has the necessary resources. Due to the increased processing power and memory required for JavaScript, the cycle of crawling, rendering, and indexing isn’t instantaneous.

Fortunately, in 2019, Google said that they aimed for a median time of 5 seconds for JS-based web pages to go from crawler to renderer. Just as webmasters were becoming accustomed to the two waves of indexing approach, Google’s Martin Splitt said in 2020 that the situation was “more complicated” and that the previous concept no longer held true.

Google continued to upgrade its Googlebot using the latest web technologies, improving its ability to crawl and index websites. As part of these efforts, Google has introduced the concept of an evergreen Googlebot, which operates on the latest Chromium rendering engine (currently version 114).

With Googlebot’s evergreen status, it gains access to numerous new features that are available to modern browsers. This empowers Googlebot to more accurately render and understand the content and structure of modern websites, including single-page applications. This results in website content that can be crawled and indexed better.

The major thing to understand here is that there’s a delay in how Google processes JavaScript on web pages, and all JS content that is loaded on the client side might not be seen as complete, let alone properly indexed. Search engines may discover the page but won’t be able to determine whether the copy on that page is of high-quality or if it corresponds to the search intent.

Problems with error 404

With an SPA, you also lose the traditional logic behind the 404 error page and many other non-200 server status codes. Due to the nature of SPAs, where everything is rendered by the browser, the web server tends to return a 200 HTTP status code to every request. As a result, search engines face difficulty in distinguishing pages that are not valid for indexing.

URL and routing

While SPAs provide an optimized user experience, it can be difficult to create a good SEO strategy around them due to their confusing URL structure and routing. Unlike traditional websites, which have distinct URLs for each page, SPAs typically have only one URL for the entire application and rely on JavaScript to dynamically update the content on the page.

Developers must carefully manage the URLs, making them intuitive and descriptive, ensuring that they accurately reflect the content displayed on the page.

To address these challenges, you can use server-side rendering or pre-rendering, both of which create static versions of the SPA. Another option is to use the History API and its pushState() method. This method allows developers to update URLs without using fragment identifiers while resources are fetched asynchronously, so you can create URLs that accurately reflect the content displayed on the page.
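As a rough illustration of moving away from fragment identifiers, the sketch below converts a hash-based route into a path-based URL and, in a browser, swaps the address bar to the clean form. The `hashUrlToPathUrl` helper is hypothetical, and it assumes the app is served from the site root and that the hash holds a plain path with no query string.

```javascript
// Convert a legacy hash-based route into a path-based URL.
// Assumes the app lives at the site root and the hash is a plain path.
function hashUrlToPathUrl(href) {
  const url = new URL(href);
  if (!url.hash.startsWith("#/")) return href; // not a hash route, leave as-is
  url.pathname = url.hash.slice(1);            // "#/about" becomes "/about"
  url.hash = "";
  return url.toString();
}

// In a browser, replace the current history entry with the clean URL.
// No page reload happens; only the address bar changes.
if (typeof window !== "undefined" && window.location.hash.startsWith("#/")) {
  window.history.replaceState({}, "", hashUrlToPathUrl(window.location.href));
}

console.log(hashUrlToPathUrl("https://example.com/#/about"));
```

Path-based URLs only work if the server returns the application for those paths as well, so a change like this usually goes hand in hand with a server configuration update.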

Tracking issues

Another issue that arises with SPA SEO is related to Google Analytics tracking. For traditional websites, the analytics code is run every time a user loads or reloads a page, accurately counting each view. But when users navigate through different pages on a single-page application, the code is only run once, failing to trigger individual pageviews.

The nature of dynamic content loading prevents GA from getting a server response for each pageview. This is why standard reports in GA4 do not offer the necessary analytics in this scenario. Still, you can overcome this limitation by leveraging GA4’s Enhanced measurement and configuring Google Tag Manager accordingly.

Pagination can also pose challenges for SPA SEO, as search engines may have difficulty crawling and indexing dynamically loaded paginated content. Luckily, there are some methods that you can use to track user activity on a single-page application website.

We’ll cover these methods later. For now, bear in mind that they require additional effort.

How to do SPA SEO

To ensure that your SPA is optimized for both search engines and users, you’ll need to take a strategic approach to on-page optimization. Our complete on-page SEO guide covers the most compelling strategies for on-site optimization.

Also, consider using tools that can help you with this process, such as SE Ranking’s On-Page SEO Checker Tool. With this tool, you can optimize your page content for your target keywords, your page title and description, and other elements.

Now, let’s take a close look at the best practices of SEO for SPA.

Server-side rendering

Server-side rendering (SSR) involves rendering a website on the server and sending it to the browser. This technique allows search bots to crawl all website content, including content generated by JavaScript. While this is a life saver in terms of crawling and indexing, it might slow down the load. One noteworthy aspect of SSR is that it diverges from the natural approach taken by SPAs. SPAs rely mostly on client-side rendering, which contributes to their fast and interactive nature and provides a seamless user experience. Client-side rendering also simplifies the deployment process.
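A minimal sketch of the idea, assuming a Node.js server and a tiny in-memory content store: the HTML for each path is assembled on the server, so a crawler receives the content without running any script. The `pages` object, `renderPage`, `handleRequest`, and the `/bundle.js` path are all hypothetical names; real projects typically rely on a framework rather than hand-built strings.

```javascript
// Hypothetical content store; a real app would query an API or database.
const pages = {
  "/": { title: "Home", body: "Welcome to the shop." },
  "/about": { title: "About", body: "Who we are." },
};

// Escape text before placing it inside HTML.
function escapeHtml(text) {
  return text.replace(/[&<>"]/g, (c) =>
    ({ "&": "&amp;", "<": "&lt;", ">": "&gt;", '"': "&quot;" }[c]));
}

// Build the complete HTML for a path on the server.
function renderPage(path) {
  const page = pages[path];
  if (!page) return { status: 404, html: "<h1>Not found</h1>" };
  const html =
    `<!doctype html><html><head><title>${escapeHtml(page.title)}</title></head>` +
    `<body><div id="root"><h1>${escapeHtml(page.title)}</h1>` +
    `<p>${escapeHtml(page.body)}</p></div>` +
    `<script src="/bundle.js"></script></body></html>`;
  return { status: 200, html };
}

// Request handler in the shape Node's http.createServer expects.
function handleRequest(req, res) {
  const path = new URL(req.url, "http://localhost").pathname;
  const { status, html } = renderPage(path);
  res.writeHead(status, { "Content-Type": "text/html; charset=utf-8" });
  res.end(html);
}

// To serve it: require("node:http").createServer(handleRequest).listen(3000);
console.log(renderPage("/about").html.includes("<h1>About</h1>")); // prints true
```

Because the server knows which paths exist, it can also return a genuine 404 status for unknown URLs, something a purely client-rendered SPA cannot do on its own.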

Isomorphic JS

One possible rendering solution for a single-page application is isomorphic, or “universal” JavaScript. Isomorphic JS plays a major role in generating pages on the server side, alleviating the need for a search crawler to execute and render JS files.

The “magic” of isomorphic JavaScript applications lies in their ability to run on both the server and client side. Users interact with the website as if its content were rendered by the browser when, in fact, they are using the HTML file generated on the server side. There are frameworks that facilitate isomorphic app development for each popular SPA framework. Take Next.js and Gatsby for React as examples. The former generates HTML for each request, while the latter generates a static website and stores HTML in the cloud. Similarly, Nuxt.js for Vue renders JS into HTML on the server and sends the data to the browser.

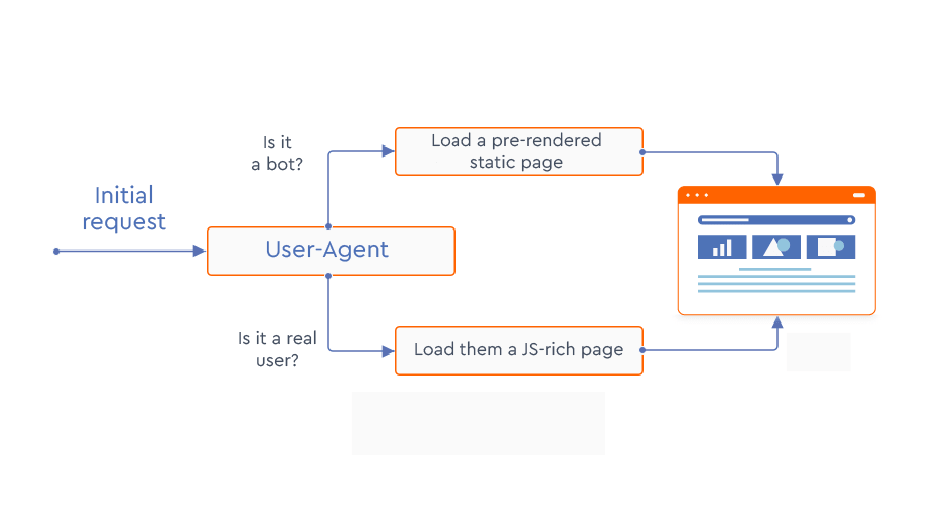

Pre-rendering

Another go-to solution for single page applications is pre-rendering. This involves loading all HTML elements and storing them in server cache, which can then be served to search crawlers. Several services, like Prerender and BromBone, intercept requests made to a website and show different versions of pages to search bots and real users. The cached HTML is shown to the search bots, while the “normal” JS-rich content is shown to real users. Websites with fewer than 250 pages can use Prerender for free, while bigger ones have to pay a monthly fee starting from $200. It’s a straightforward solution: you upload the sitemap file and it does the rest. BromBone doesn’t even require a manual sitemap upload and costs $129 per month.

There are other, more time-consuming methods for serving static HTML to crawlers. One example is using Headless Chrome and the Puppeteer library, which will convert routes to pages into a hierarchical tree of HTML files. But keep in mind that you’ll need to remove bootstrap code and edit your server configuration file to locate the static HTML meant for search bots.
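The serving side of pre-rendering can be sketched as follows. This is an illustrative outline only, not how Prerender or BromBone work internally: the bot pattern is a tiny sample, and `prerenderCache`, `SPA_SHELL`, and `selectResponse` are hypothetical names.

```javascript
// Very small sample of crawler user-agent tokens; real lists are much longer.
const BOT_PATTERN = /googlebot|bingbot|duckduckbot|yandex|baiduspider/i;

function isSearchBot(userAgent) {
  return BOT_PATTERN.test(userAgent || "");
}

// Hypothetical cache of pre-rendered HTML keyed by path.
const prerenderCache = new Map([
  ["/about", "<html><body><h1>About us</h1></body></html>"],
]);

// The JS-driven shell that regular visitors receive.
const SPA_SHELL =
  '<html><body><div id="root"></div><script src="/bundle.js"></script></body></html>';

// Pick the response body: cached static HTML for bots, the JS shell otherwise.
function selectResponse(path, userAgent) {
  if (isSearchBot(userAgent) && prerenderCache.has(path)) {
    return prerenderCache.get(path);
  }
  return SPA_SHELL;
}

console.log(selectResponse("/about", "Mozilla/5.0 (compatible; Googlebot/2.1)"));
```

The cached HTML should carry the same content that users end up seeing once the scripts run, otherwise the two versions drift apart.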

Progressive enhancement with feature detection

Using the feature detection method is one of Google’s strongest recommendations for SPAs. This technique involves progressively enhancing the experience with different code resources. It works by using a simple HTML page as the foundation that is accessible to both crawlers and users. On top of this page, additional features such as CSS and JS are added and enabled or disabled according to browser support.

To implement feature detection, you’ll need to write separate chunks of code to check if each of the required feature APIs is compatible with each browser. Fortunately, there are specific libraries like Modernizr that can help you save time and simplify this process.
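For a sense of what hand-written checks look like without a library, here is a small sketch. The three features tested and the `detectFeatures` and `chooseNavigation` helpers are illustrative choices, not a list recommended by Google or taken from Modernizr.

```javascript
// Each check receives the global object so it can be tested outside a browser.
const featureChecks = {
  intersectionObserver: (g) => typeof g.IntersectionObserver === "function",
  historyApi: (g) => Boolean(g.history && typeof g.history.pushState === "function"),
  fetch: (g) => typeof g.fetch === "function",
};

function detectFeatures(globalObject) {
  const result = {};
  for (const [name, check] of Object.entries(featureChecks)) {
    result[name] = check(globalObject);
  }
  return result;
}

// Enhance only when the needed feature exists; the base HTML keeps working.
function chooseNavigation(features) {
  return features.historyApi ? "client-side routing" : "full page loads";
}

const features = detectFeatures(globalThis);
console.log(chooseNavigation(features));
```

The key design point is the fallback: when a check fails, visitors and crawlers still get working links and readable HTML, just without the enhancement.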

Views as URLs to make them crawlable

When users scroll through an SPA, they pass separate website sections. Technically, an SPA contains only one page (a single index.html file) but visitors feel like they’re browsing multiple pages. When users move through different parts of a single-page application website, the URL changes only in its hash part (for example, http://website.com/#/about, http://website.com/#/contact). The JS file instructs browsers to load certain content based on fragment identifiers (hash changes).

To help search engines perceive different sections of a website as different pages, you need to use distinct URLs with the help of the History API. This is a standardized method in HTML5 for manipulating the browser history. Google Codelabs suggests using this API instead of hash-based routing to help search engines recognize and treat different fragments of content triggered by hash changes as separate pages. The History API allows you to change navigation links and use paths instead of hashes.

Google analyst Martin Splitt gives the same advice—to treat views as URLs by using the history API. He also suggests adding link markup with href attributes, and creating unique titles and description tags for each view (with “a little extra JavaScript”).
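A minimal sketch of path-based routing with the History API, assuming a small hypothetical route table. `resolveView` and `startRouter` are illustrative names, and the render callback here only sets the document title as a placeholder for real view rendering.

```javascript
// Hypothetical route table mapping URL paths to view names.
const routes = {
  "/": "home",
  "/about": "about",
  "/contact": "contact",
};

// Resolve a path to a view; unknown paths fall through to an error view.
function resolveView(path) {
  return Object.prototype.hasOwnProperty.call(routes, path) ? routes[path] : "not-found";
}

// Browser-only wiring: real <a href> links, upgraded to client-side navigation.
function startRouter(render) {
  document.addEventListener("click", (event) => {
    const link = event.target.closest("a[href^='/']");
    if (!link) return;
    event.preventDefault();
    const path = link.getAttribute("href");
    history.pushState({}, "", path); // changes the URL without a reload
    render(resolveView(path));
  });
  // Back and forward buttons fire popstate; re-render for the restored URL.
  window.addEventListener("popstate", () => render(resolveView(location.pathname)));
  render(resolveView(location.pathname));
}

if (typeof document !== "undefined") {
  startRouter((view) => { document.title = view; });
}

console.log(resolveView("/about"), resolveView("/nope"));
```

Because navigation is layered on top of ordinary links, each view keeps a distinct URL that a crawler can request directly.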

Note that this markup advice applies to every link on your website. To make links crawlable by search engines, use the <a> tag with an href attribute instead of relying on the onclick action, because a JavaScript onclick handler can’t be crawled and is pretty much invisible to Google.

So, the primary rule is to make links crawlable. Make sure your links follow Google standards for SPA SEO and that they appear as follows:

<a href="https://yoursite.com">

<a href="/services/category/SEO">

Google may try to parse links formatted differently, but there’s no guarantee it’ll do so or succeed. So avoid links that appear in the following way:

<a routerLink="services/category">

<span href="https://yoursite.com">

<a onclick="goto('https://yoursite.com')">

You want to begin by adding links using the <a> HTML element, which Google understands as a classic link format. Next, make sure that the URL included is a valid and functioning web address, and that it follows the rules of a Uniform Resource Identifier (URI) standard. Otherwise, crawlers won’t be able to properly index and understand the website’s content.
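The good and bad examples above can be turned into a rough audit heuristic. The `isCrawlableLink` function below is a hypothetical helper that mirrors those examples; it is not an official Google check, and the default base URL is a placeholder.

```javascript
// Returns true when an element matches the recommended link format:
// an <a> element whose href resolves to an http(s) URL.
function isCrawlableLink(tagName, attributes, baseUrl = "https://yoursite.com") {
  if (tagName.toLowerCase() !== "a") return false;
  const href = attributes.href;
  if (typeof href !== "string" || href.trim() === "") return false;
  try {
    const url = new URL(href, baseUrl); // resolves relative paths too
    return url.protocol === "http:" || url.protocol === "https:";
  } catch {
    return false; // href could not be parsed as a URL
  }
}

console.log(isCrawlableLink("a", { href: "/services/category/SEO" }));          // true
console.log(isCrawlableLink("a", { routerLink: "services/category" }));         // false
console.log(isCrawlableLink("span", { href: "https://yoursite.com" }));         // false
console.log(isCrawlableLink("a", { onclick: "goto('https://yoursite.com')" })); // false
```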

Views for error pages

With single-page websites, the server has nothing to do with error handling and will always return the 200 status code, which indicates (incorrectly in this case) that everything is okay. But users may sometimes use the wrong URL to access an SPA, so there should be some way to handle error responses. Google recommends creating separate views for each error code (404, 500, etc.) and tweaking the JS file so that it directs browsers to the respective view.

Titles & descriptions for views

Titles and meta descriptions are essential elements for on-page SEO. A well-crafted meta title and description can both improve the website’s visibility in SERPs and increase its click-through rate.

In the case of SPA SEO, managing these meta tags can be challenging because there’s only one HTML file and URL for the entire website. At the same time, duplicate titles and descriptions are one of the most common SEO issues.

How to fix this issue?

Focus on views, which are the HTML fragments in SPAs that users perceive as screens or ‘pages’. It is crucial to create unique views for each section of your single-page website. It’s also important to dynamically update titles and descriptions to reflect the content being displayed within each view.

To set or change the meta description and <title> element in an SPA, developers can use JavaScript.
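One way this could look in practice is sketched below. The view names, titles, and descriptions are sample data, and `metaForView` and `applyMeta` are hypothetical helpers; many frameworks ship their own mechanism for the same job.

```javascript
// Hypothetical per-view metadata; titles and descriptions are examples.
const viewMeta = new Map([
  ["home", { title: "Acme Store", description: "Shop the full Acme catalog." }],
  ["about", { title: "About | Acme Store", description: "The story behind Acme." }],
]);

const fallbackMeta = { title: "Acme Store", description: "" };

function metaForView(view) {
  return viewMeta.get(view) || fallbackMeta;
}

// Browser-only: write the values into <title> and <meta name="description">.
function applyMeta(view) {
  const { title, description } = metaForView(view);
  document.title = title;
  let tag = document.querySelector('meta[name="description"]');
  if (!tag) {
    tag = document.createElement("meta");
    tag.setAttribute("name", "description");
    document.head.appendChild(tag);
  }
  tag.setAttribute("content", description);
}

if (typeof document !== "undefined") applyMeta("about");
console.log(metaForView("about").title);
```

Calling `applyMeta` from the same place that switches views keeps the title and description in step with the content on screen.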

Using robots meta tags

Robots meta tags provide instructions to search engines on how to crawl and index a website’s pages. When implemented correctly, they can ensure that search engines crawl and index the most important parts of the website, while avoiding duplicate content or incorrect page indexing.

For example, using a “nofollow” directive can prevent search engines from following links within a certain view, while a “noindex” directive in the robots meta tag can exclude certain views or sections of the SPA from being indexed.

<meta name="robots" content="noindex, nofollow">

You can also use JavaScript to add a robots meta tag, but if a page has a noindex tag in its robots meta tag, Google won’t render or execute JavaScript on that page. In this case, your attempts to change or remove the noindex tag using JavaScript won’t be effective because Google will never even see that code.

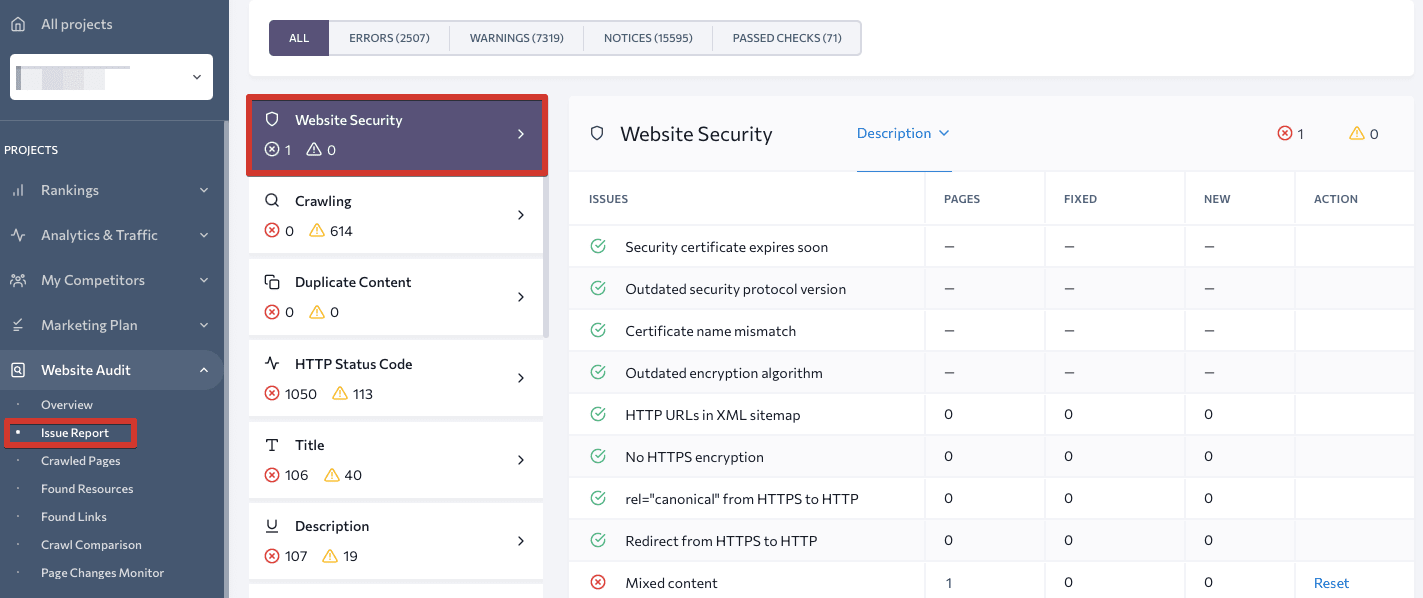

To check robots meta tags issues on your SPA, run a single page app audit with SE Ranking. The audit tool will analyze your website and detect any technical issues that are blocking your website from reaching the top of the SERP.

Avoid soft 404 errors

A soft 404 error occurs when a website returns a status code of 200 (OK) instead of the appropriate 404 (Not Found) for a page that doesn’t exist. In other words, the server is incorrectly telling search engines that the page exists.

Soft 404 errors can be particularly problematic for SPA websites because of the way they are built and the technology they use. Since SPAs rely heavily on JavaScript to dynamically load content, the server may not always be able to accurately identify whether a requested page exists or not. Because of client-side routing, which is typically used in client-side rendered SPAs, it’s often impossible to use meaningful HTTP status codes.

You can avoid soft 404 errors by applying one of the following techniques:

- Use a JavaScript redirect to a URL that triggers a 404 HTTP status code from the server.

- Add a noindex tag to error pages through JavaScript.
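Both techniques can be sketched in a few lines. Everything named here is an assumption for illustration: the `knownPaths` set stands in for the app's route table, and `/not-found` is a URL the server would have to be configured to answer with a real 404 status.

```javascript
const knownPaths = new Set(["/", "/about", "/contact"]); // hypothetical routes
const NOT_FOUND_URL = "/not-found"; // assumed: the server answers this with a 404

// Decide what to do for a path without touching the DOM.
function planForPath(path, strategy = "redirect") {
  if (knownPaths.has(path)) return { action: "render" };
  if (strategy === "redirect" && path !== NOT_FOUND_URL) {
    return { action: "redirect", to: NOT_FOUND_URL };
  }
  return { action: "noindex" };
}

// Browser-only: carry out the plan.
function handleRoute(path, strategy) {
  const plan = planForPath(path, strategy);
  if (plan.action === "redirect") {
    window.location.href = plan.to; // full navigation so the server can send 404
  } else if (plan.action === "noindex") {
    const meta = document.createElement("meta");
    meta.name = "robots";
    meta.content = "noindex";
    document.head.appendChild(meta);
  }
  return plan;
}

if (typeof window !== "undefined") handleRoute(window.location.pathname);
console.log(planForPath("/missing"));
```

The redirect variant hands the decision back to the server, while the noindex variant keeps the visitor on the error view and only tells search engines not to index it.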

Lazily loaded content

Lazy loading refers to the practice of loading content, such as images or videos, only when they are needed, typically as a user scrolls down the page. This technique can improve page speed and experience, especially for SPAs where large amounts of content can be loaded at once. But if applied incorrectly, you can unintentionally hide content from Google.

To ensure that Google indexes and sees all the content on your page, it is essential to take precautions. Make sure that all relevant content is loaded every time it is displayed in the viewport. You can do this by:

- Applying native lazy-loading for images and iframes, implemented using the “loading” attribute.

- Using the IntersectionObserver API, which lets developers detect when an element enters or exits the viewport, together with a polyfill to ensure browser compatibility.

- Resorting to a JavaScript library that provides a set of tools and functions that make it easy to load content only when it enters the viewport.

Whichever approach you choose, make sure it works correctly. Use a Puppeteer script to run local tests, and use the URL inspection tool in Google Search Console to see if all images were loaded.
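As an illustration of the IntersectionObserver option, the sketch below loads each element once it enters the viewport and falls back to loading everything when the API is missing. The `lazyLoad` helper and the `data-src` convention are assumptions made for this example.

```javascript
// Load each element's content when it enters the viewport. If the
// IntersectionObserver API is missing, load everything right away so
// no content stays hidden.
function lazyLoad(elements, load, Observer = globalThis.IntersectionObserver) {
  if (typeof Observer !== "function") {
    elements.forEach((el) => load(el));
    return null;
  }
  const observer = new Observer((entries) => {
    for (const entry of entries) {
      if (!entry.isIntersecting) continue;
      load(entry.target);
      observer.unobserve(entry.target); // each element only needs loading once
    }
  });
  elements.forEach((el) => observer.observe(el));
  return observer;
}

// Browser usage: copy data-src into src for images marked as lazy.
if (typeof document !== "undefined") {
  const images = Array.from(document.querySelectorAll("img[data-src]"));
  lazyLoad(images, (img) => { img.src = img.dataset.src; });
}
```

Loading is tied to viewport visibility rather than to scroll or click events, which matters because a crawler may never perform those actions.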

Social shares and structured data

Social sharing optimization is often overlooked by websites. No matter how insignificant it may look, implementing Twitter Cards and Facebook’s Open Graph will allow for rich sharing across popular social media channels, which is good for your website’s search visibility. If you don’t use these protocols, sharing your link will trigger the preview display of a random, and sometimes irrelevant, visual object.

Using structured data is also extremely useful for making different types of website content readable to crawlers. Schema.org provides options for labeling data types like videos, recipes, products, and so on.

You can also use JavaScript to generate the required structured data for your SPA in the form of JSON-LD and inject it into the page. JSON-LD is a lightweight data format that is easy to generate and parse.
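A minimal sketch of generating and injecting JSON-LD, using the schema.org Product type as an example. The product values are sample data, and `buildProductJsonLd` and `injectJsonLd` are hypothetical helper names.

```javascript
// Build a schema.org Product description. Field values are sample data.
function buildProductJsonLd(product) {
  return {
    "@context": "https://schema.org",
    "@type": "Product",
    name: product.name,
    description: product.description,
    offers: {
      "@type": "Offer",
      price: product.price,
      priceCurrency: product.currency,
    },
  };
}

// Browser-only: inject the data as a JSON-LD script element.
function injectJsonLd(data) {
  const script = document.createElement("script");
  script.type = "application/ld+json";
  script.textContent = JSON.stringify(data);
  document.head.appendChild(script);
}

const jsonLd = buildProductJsonLd({
  name: "Trail Backpack",
  description: "A 30-litre hiking backpack.",
  price: "89.00",
  currency: "USD",
});

if (typeof document !== "undefined") injectJsonLd(jsonLd);
console.log(JSON.stringify(jsonLd, null, 2));
```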

You can conduct a Rich Results Test on Google to discover any currently assigned data types and to enable rich search results for your web pages.

Testing an SPA for SEO

There are several ways to test your SPA website’s SEO. You can use tools like Google Search Console or the Mobile-Friendly Test. You can also check your Google cache or inspect your content in search results. We’ve outlined how to use each of them below.

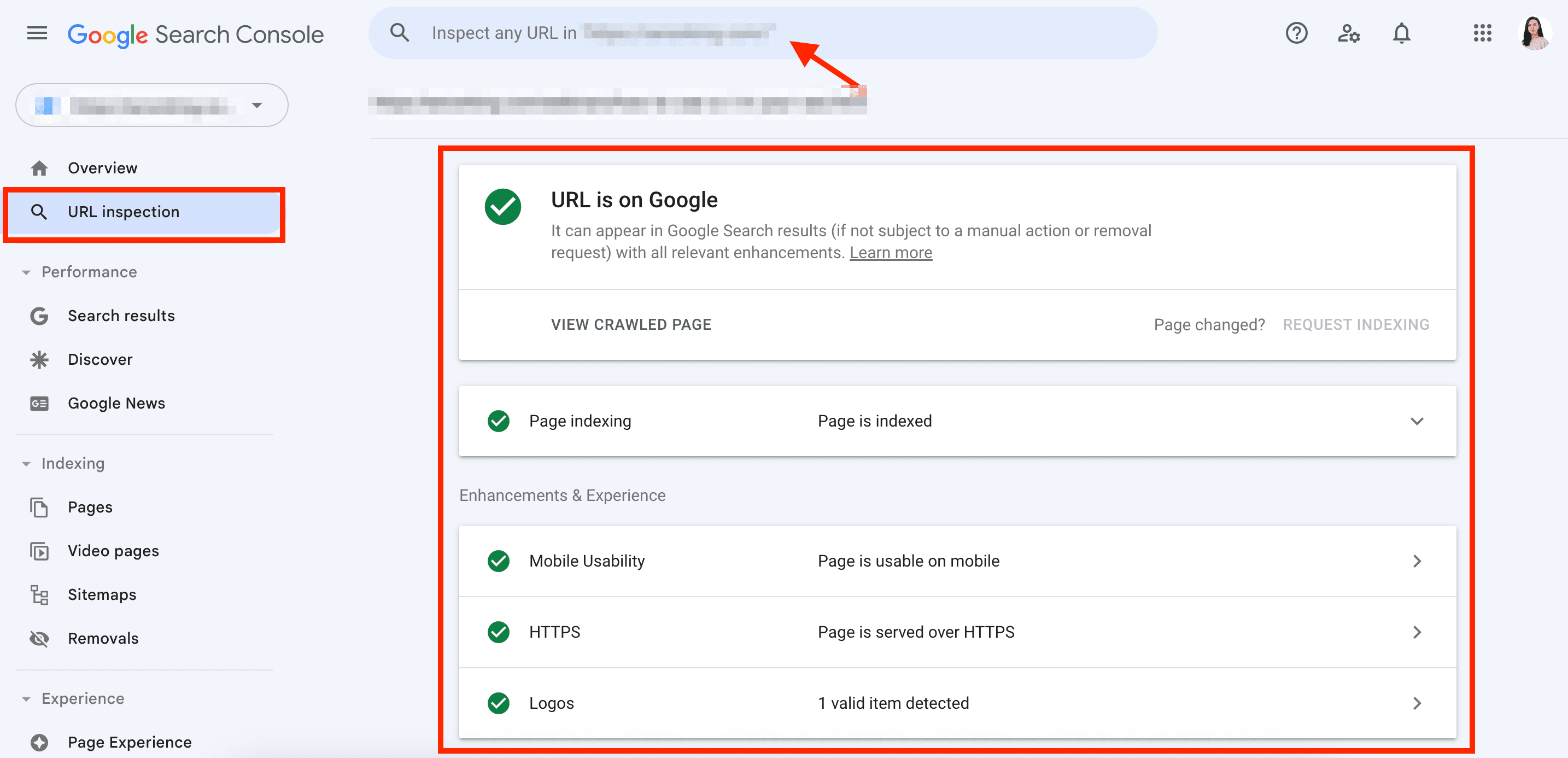

URL inspection in Google Search Console

You can access the essential crawling and indexing information in the URL Inspection section of Google Search Console. It doesn’t give a full preview of how Google sees your page, but it does provide you with basic information, including:

- Whether the search engine can crawl and index your website

- The rendered HTML

- Page resources that can’t be loaded and processed by search engines

You can find out details related to page indexing, mobile usability, HTTPS, and logos by opening the reports.

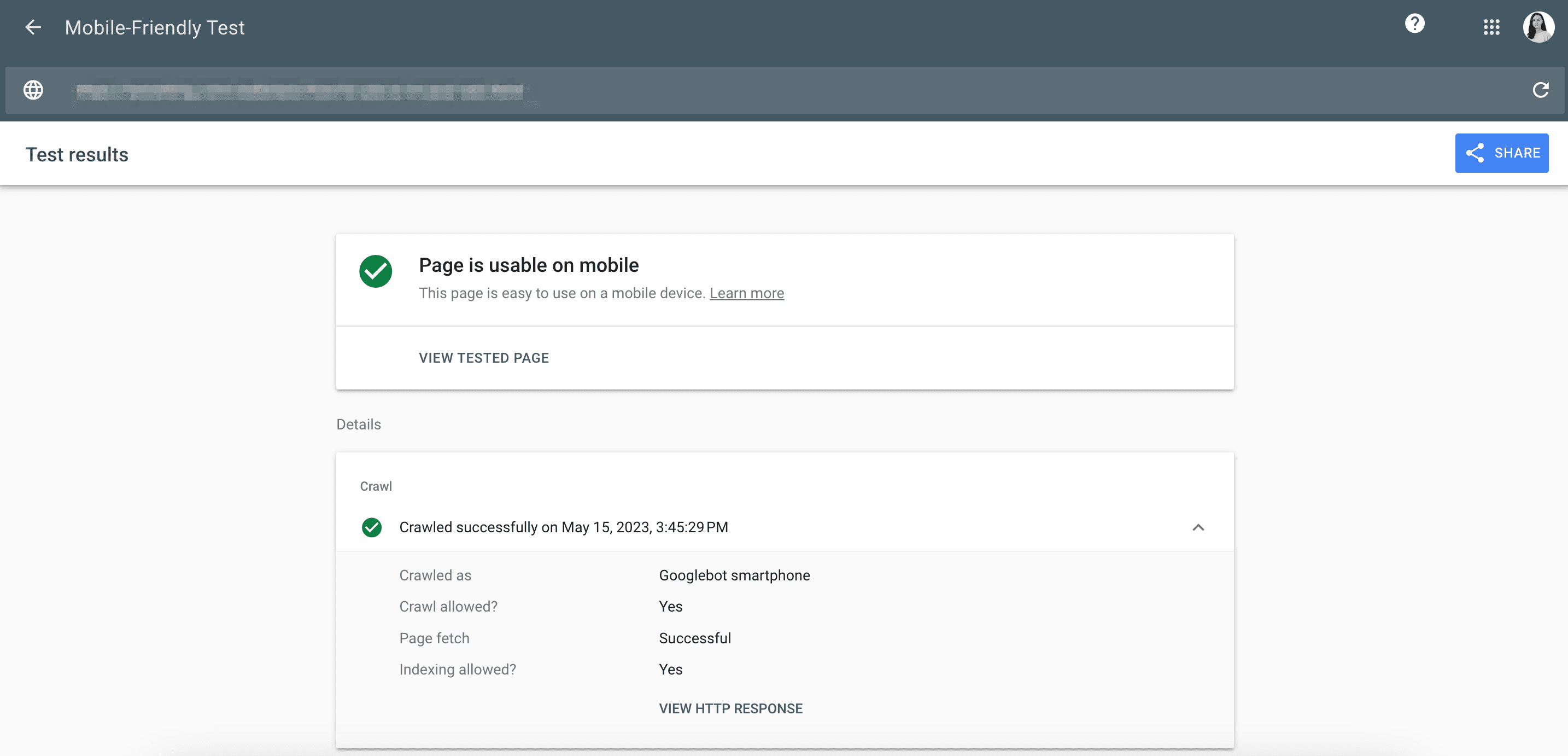

Google’s Mobile-Friendly Test

Google’s Mobile-Friendly Test shows almost the same information as GSC. It also helps to check whether the rendered page is readable on mobile screens.

Read our guide on mobile SEO to get pro tips on how to make your website mobile-friendly.

Plus, Headless Chrome is an excellent tool for testing your SPA and observing how JS will be executed. Unlike traditional browsers, a headless browser doesn’t have a full UI but provides the same environment that real users would experience.

Finally, use tools like BrowserStack to test your SPA on different browsers.

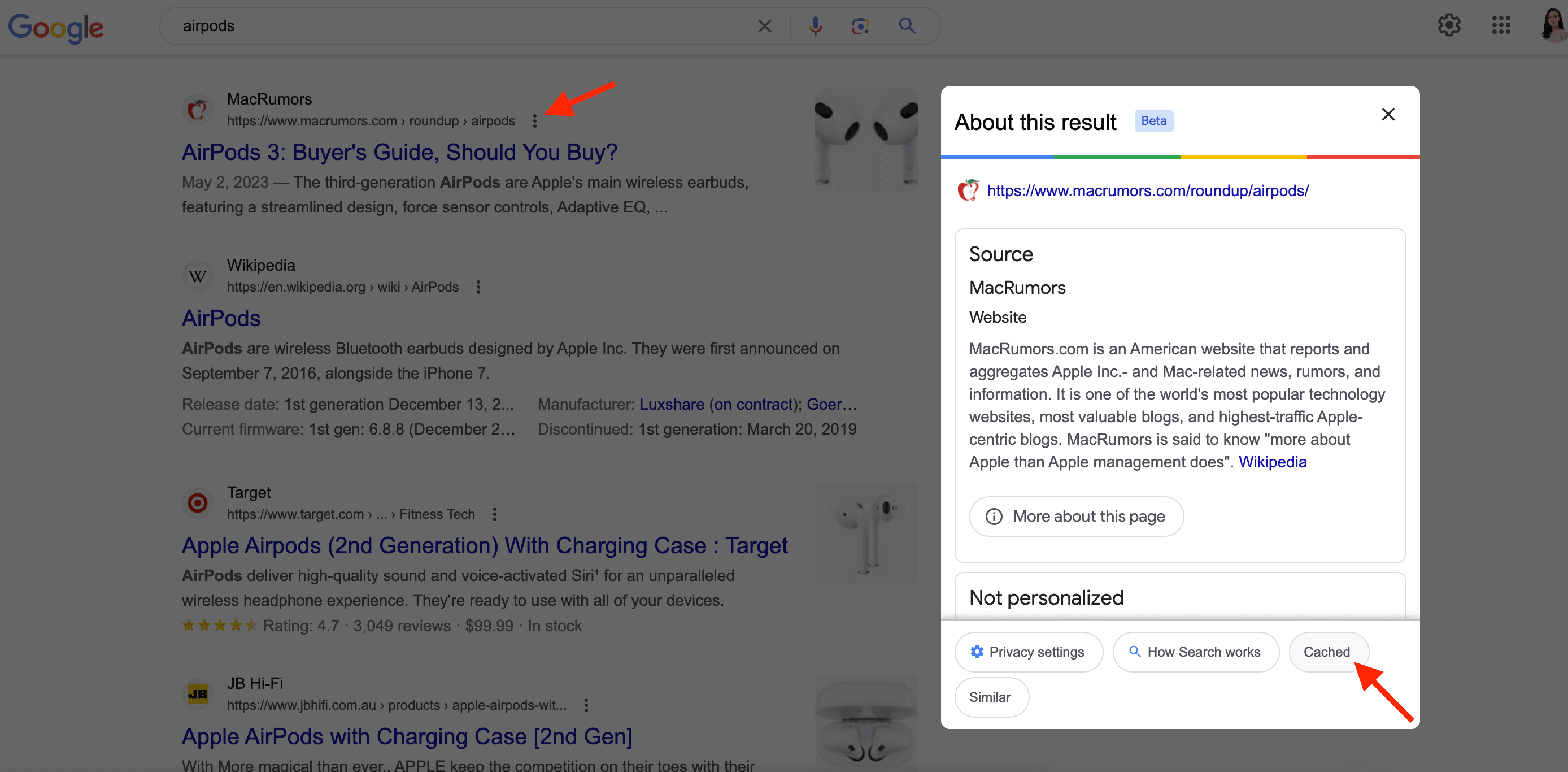

Check your Google cache

Another basic technique for testing your SPA website for SEO is to check the Google cache of your website’s pages. Google cache is a snapshot of a web page taken by Google’s crawler at a specific point in time.

To open the cache:

- Search for the page on Google.

- Click on the icon with three dots next to the search result.

- Choose the Cached option.

Alternatively, you can use the search operator “cache:”, and paste your URL after a colon (without a space), or you could utilize Google Cache Checker, which is our free and simple tool for checking your cached version.

Note! The cached version of your page isn’t always the most up-to-date. Google updates its cache regularly, but there can be a delay between when a page is updated and when the new version appears in the cache. If Google’s cached version of a page is outdated, it may not accurately reflect the page’s current content and structure. That’s why it’s not a good idea to solely rely on Google cache for single page application SEO testing or even for debugging purposes.

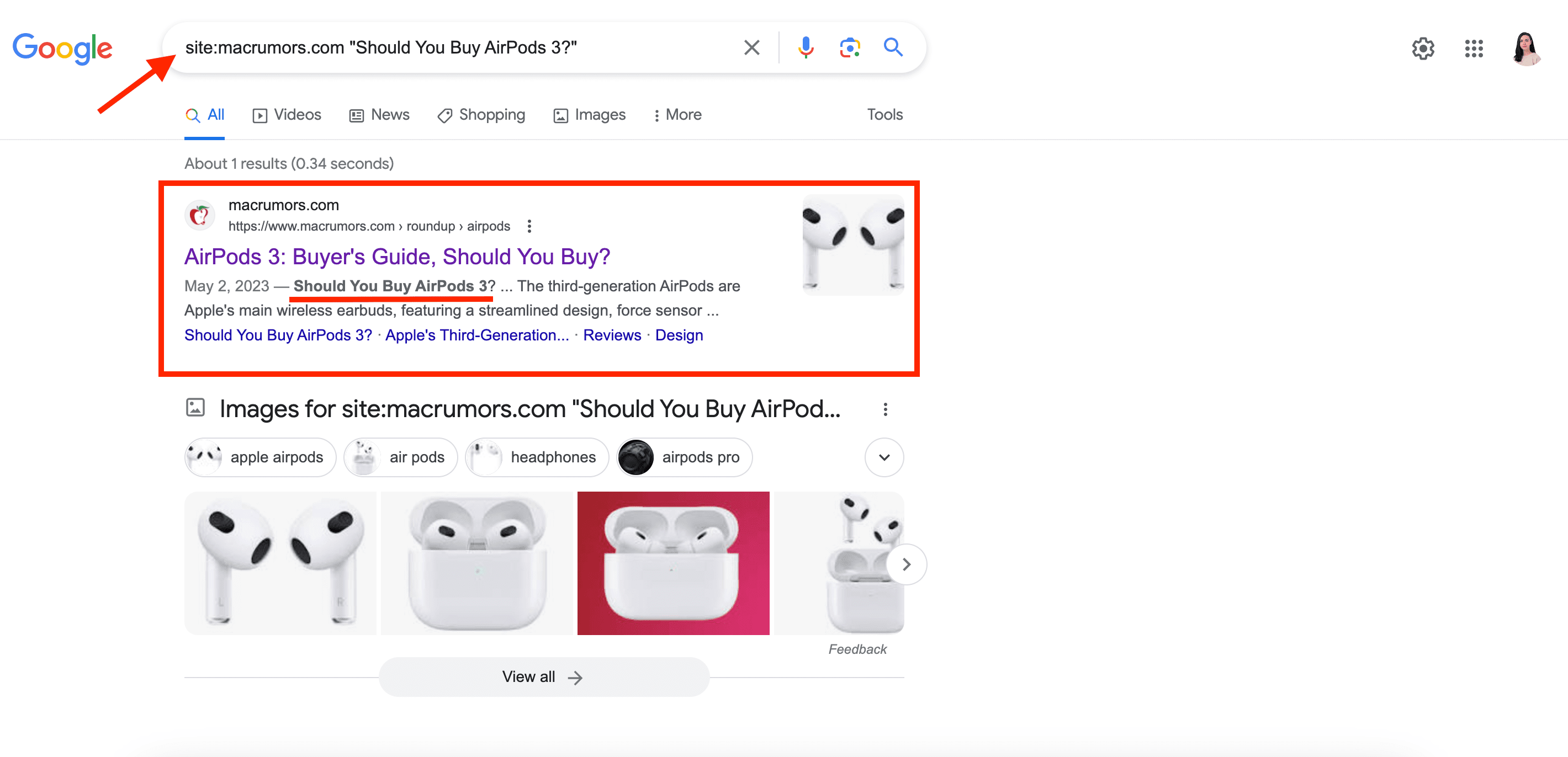

Check the content in the SERP

There are a few ways to check how your SPA appears in SERPs:

- You can check direct quotes of your content in the SERP to see whether the page containing that text is indexed.

- You can use the site: command to check your URL in the SERP.

Finally, you can combine both. Enter site:domain name “content quote”, like in the screenshot below, and if the content is crawled and indexed, you’ll see it in the search results.

There’s no way around basic SEO

Aside from the specific nature of a single page application, most general optimization advice applies to this type of website. Some basic SEO strategies to include involve optimizing for:

- Security. If you haven’t already, protect your website with HTTPS. Otherwise, search engines might sideline your site, and any user data it handles could be exposed. Never cross website security off your to-do list, as it requires regular monitoring. Check your SSL/TLS certificate for critical errors regularly to make sure your website can be safely accessed.

- Content optimization. We’ve talked about specific measures for optimizing content in SPA, such as writing unique title tags and description meta tags for each view, similar to how you would for each page on a multi-page website. But you need to have optimized content before taking the above measures. Your content should be tailored to the right user intents, well-organized, visually appealing, and rich in helpful information. If you haven’t collected a keyword list for the site, it will be challenging to deliver the content your visitors need. Take a look at our guide on keyword research for new insights.

- Link building. Backlinks play a major role in signaling Google about the level of trust other resources have in your website. Because of this, building a backlink profile is a vital part of your site’s SEO. No two backlinks are alike, and each link pointing to your website holds a different value. While some backlinks can significantly boost your rankings, spammy ones can damage your search presence. Consider learning more about backlink quality and following best practices to strengthen your link profile.

- Competitor monitoring. You’ve most likely already conducted research on your competitors during the early stages of your website’s development. However, as with any SEO and marketing tasks, it is important to continually monitor your niche. Thanks to data-rich tools, you can easily monitor rivals’ strategies in organic and paid search. This allows you to evaluate the market landscape, spot fluctuations among major competitors, and draw inspiration from successful keywords or campaigns that already work for similar sites.

Tracking single page applications

Track SPA with GA4

Tracking user behavior on SPA websites can be challenging, but GA4 has the tools to handle it. By using GA4 for SEO, you’ll be able to better understand how users engage with your website, identify areas for improvement, and make data-driven decisions to improve user experience and ultimately drive business success.

If you still haven’t installed Google Analytics, read the guide on GA4 setup to find out how to do it quickly and correctly.

Once you are ready to proceed, follow the next steps:

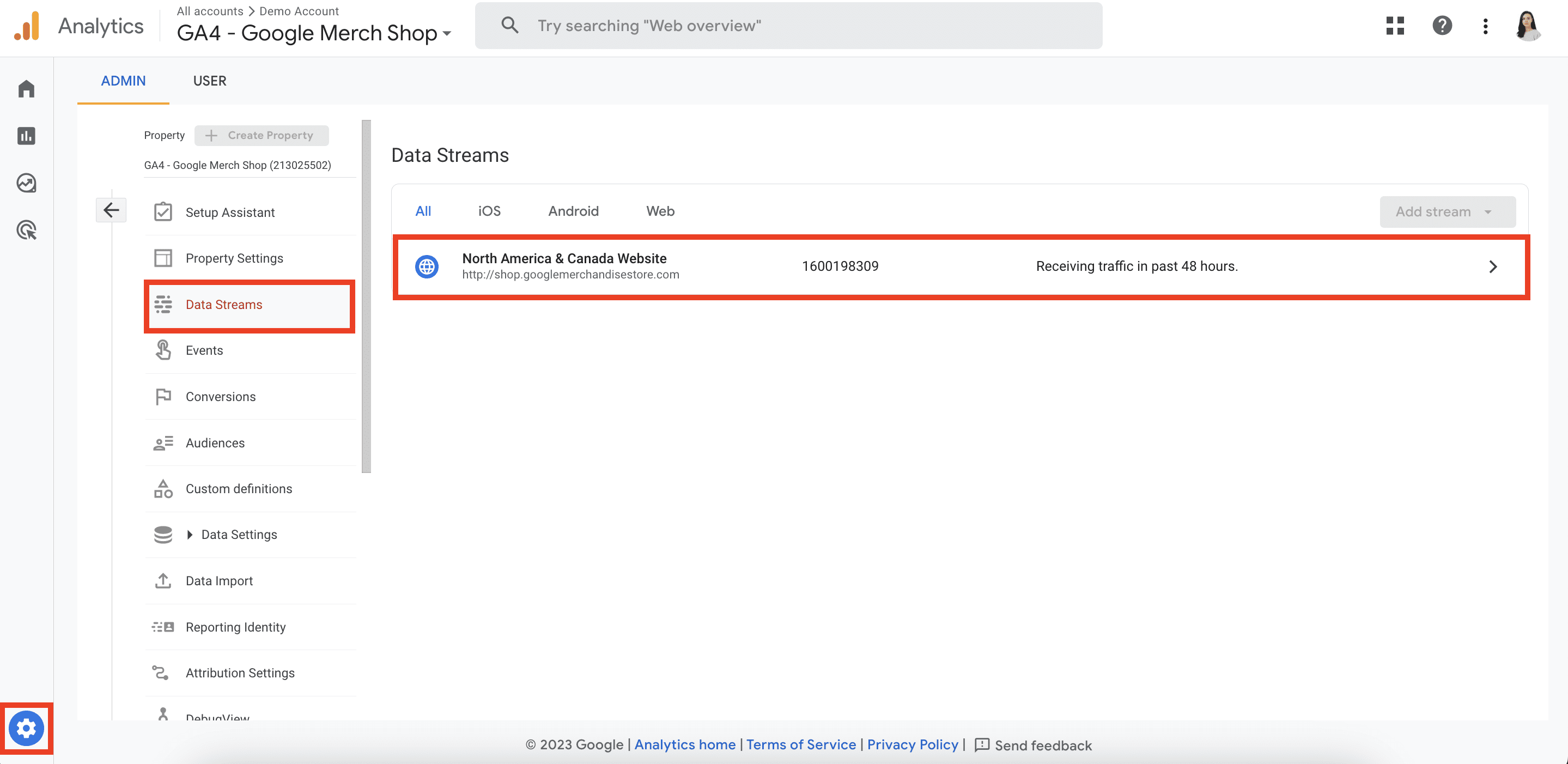

- Go to your GA4 account and open Data Streams in the Admin section. Click on your web data stream.

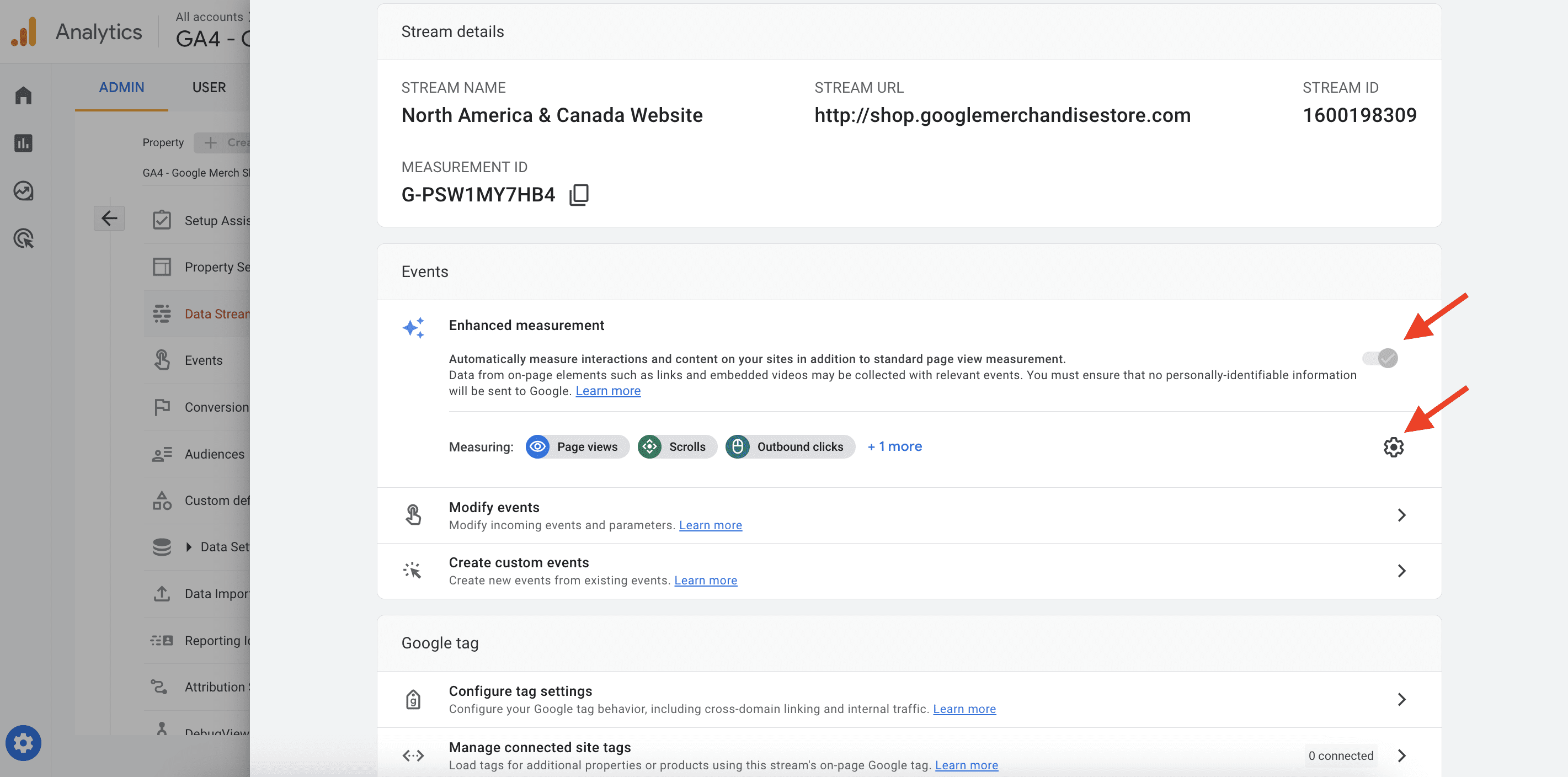

- Make sure that the Enhanced Measurement toggle is enabled. Click the gear icon.

- Open the advanced settings within the Page views section and enable the Page changes setting based on browser history events. Remember to save the changes. It’s also recommended to disable all default tracking options that are unrelated to pageviews, as they might affect accuracy.

- Open Google Tag Manager and enable the Preview and Debug mode.

- Navigate through different pages on your SPA website.

- In the Preview mode, the GTM container will start showing you the History Change events.

- If you click on your GA4 measurement ID next to the GTM container in the preview mode, you should observe multiple Page View events being sent to GA4.

If these steps work, GA4 will be able to track your SPA website. If it doesn’t, you might need to take the following additional steps:

- Implementing the history change trigger in GTM.

- Asking developers to activate a dataLayer.push code.
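A dataLayer.push call for a view change could look roughly like the sketch below. The event name `virtual_page_view` and the field names are assumptions; they need to match whatever custom event trigger and variables are configured in GTM.

```javascript
// Push one event per view change. GTM reads events from the global
// dataLayer array. The event and field names below are assumptions and
// must match the trigger set up in GTM.
function trackVirtualPageview(path, title) {
  globalThis.dataLayer = globalThis.dataLayer || [];
  const event = { event: "virtual_page_view", page_path: path, page_title: title };
  globalThis.dataLayer.push(event);
  return event;
}

trackVirtualPageview("/about", "About us");
console.log(globalThis.dataLayer.length);
```

Developers would typically call a function like this from the router, right after the new view has been rendered and the URL has changed.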

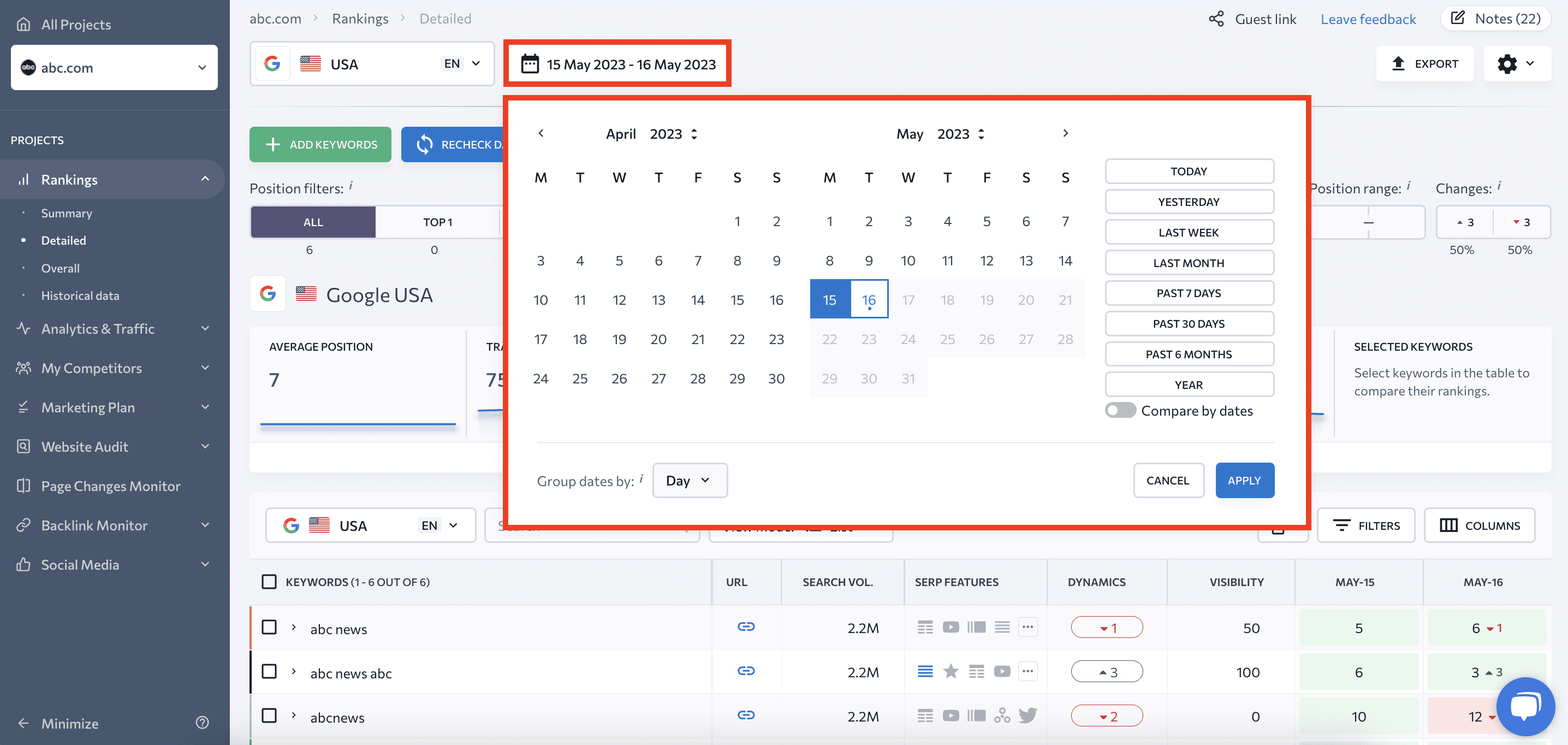

Track SPA with SE Ranking’s Rank Tracker

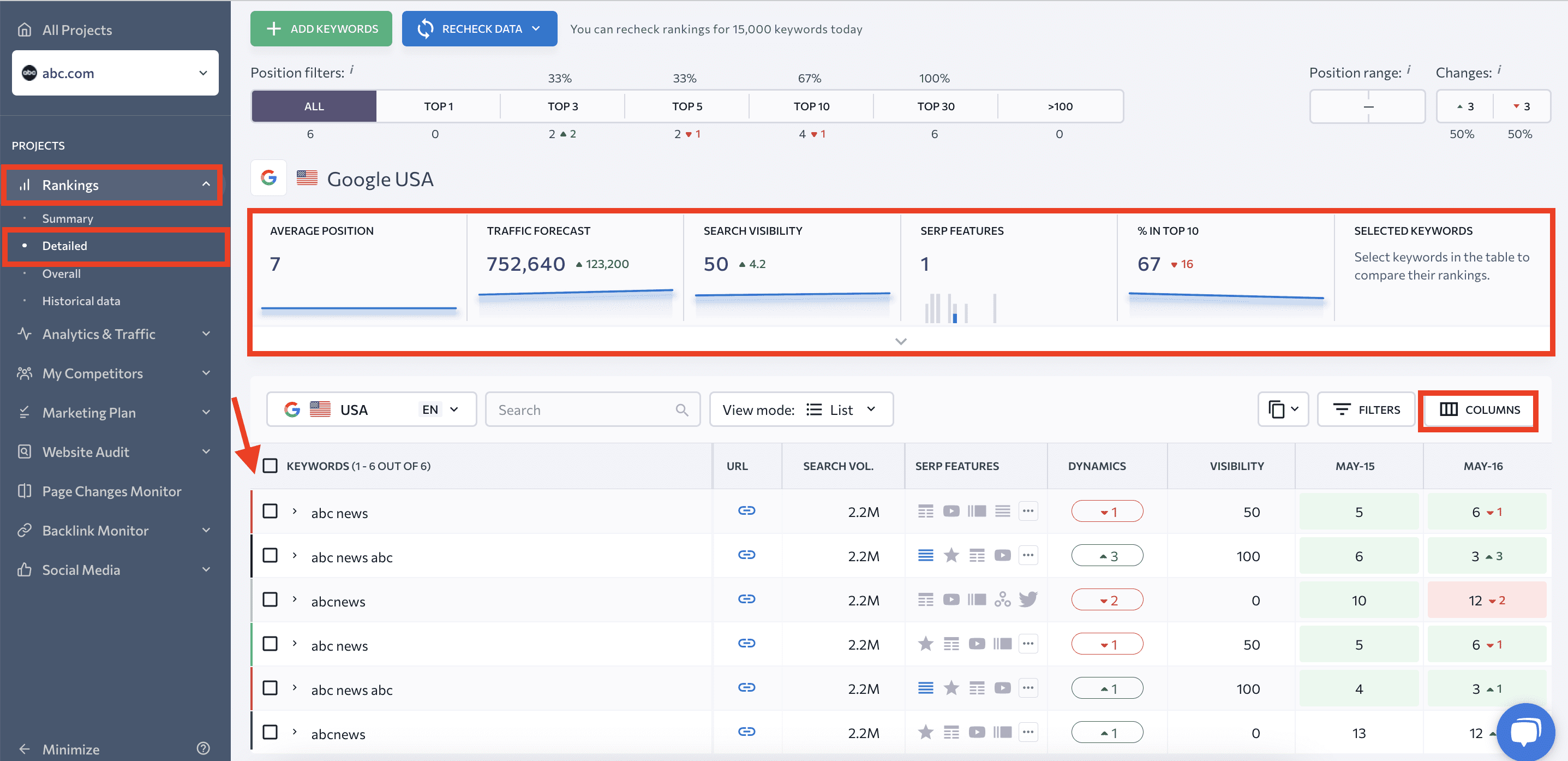

Another comprehensive tracking tool is SE Ranking’s Rank Tracker. This tool allows you to check how your single-page application ranks for the keywords you are targeting, and it can even check rankings across multiple geographical locations, devices, and languages. This tool supports tracking on popular search engines such as Google, Google Mobile, Yahoo!, and Bing.

To start tracking, you need to create a project for your website on the SE Ranking platform, add keywords, choose search engines, and specify competitors.

Once your project is set up, go to the Rankings tab, which consists of several reports:

- Summary

- Detailed

- Overall

- Historical Data

We’ll focus on the default Detailed tab. It will likely be the first report you see after adding your project. At the top of this section, you’ll find your SPA’s:

- Average position

- Traffic forecast

- Search visibility

- SERP features

- % in top 10

- Keyword list

The keyword table displayed beneath these graphs provides information on each keyword that your website ranks for. It includes details such as the target URL, search volume, SERP features, ranking dynamics, and so on. You can customize the table with additional parameters available in the Columns section.

The tool lets you filter your keywords based on your preferred parameters. You can also set target URLs and tags, see ranking data for different dates, and even compare results.

Keyword Rank Tracker provides you with two additional reports:

- Your website ranking data: This includes all search engines you have added to the project, which can be found in a single tab, labeled as “Overall”.

- Historical information: This includes data regarding the changes in your website rankings since the baseline date. Navigate to the Historical Data tab to find this info.

For more information on how to monitor website positions, check out our guide on rank tracking in different search engines.

Single-page application websites done right

Now that you know all the ins and outs of SEO for SPA websites, the next step is to put theory into action. Make your content easily accessible to crawlers and watch as your website shines in the eyes of search engines. While providing visitors with dynamic content loading, blazing speed, and seamless navigation, it’s also important to remember to present a static version to search engines. You’ll also want to make sure that you have a correct sitemap, use distinct URLs instead of fragment identifiers, and label different content types with structured data.

The rise of single-page experiences powered by JavaScript caters to the demands of modern users who crave immediate interaction with web content. To maintain the UX-centered benefits of SPAs while achieving high rankings in search, developers are switching to what Airbnb’s engineer Spike Brehm calls “the hard way”—skillfully balancing the client and server aspects of web development.