Pagination: A Detailed Overview of What It Is and How to Implement It Properly

Whether you are working with an ecommerce website or a blog, how you organize your content is key. Doing it correctly enhances UX and ensures that users and search engines alike can find information easily. SEO pagination can help with this task. In this complete guide, we’ll explain pagination for SEO, share key SEO solutions for paginated content, and provide best practices for implementation.

Key takeaways

- Paginated pages load faster than a single page with all results and reduce backend load, which supports page performance, a Google ranking signal.

- Better navigation through paginated content signals higher content quality by reducing bounce rates.

- There are four methods for handling pagination. Choose one of the following:

- Indexing all paginated pages: Optimize all paginated URLs by making paginated page elements unique, linking paginated URLs to each other, and ensuring they are canonical.

- Indexing the View All page only: Create a page that includes all paginated results and canonicalize the View All page. This signals search engines to prioritize it while allowing crawlers to follow links on non-canonical pages.

- Block indexing of paginated content: Use robots meta tags to prevent indexing of all paginated pages except the first. Optimize the first page for ranking.

- Infinite scrolling: Create unique links for dynamically loaded content to support engagement and sharing.

What is pagination?

Pagination is the numbering of site pages in ascending order. Website pagination comes in handy when you want to split content across pages and show an extensive data set in manageable chunks. Sites with a large assortment and lots of content (e.g., ecommerce websites, news portals and blogs) may need multiple pages for easier navigation, a better user experience, and a smoother buyer’s journey.

Numbering is displayed at the top or bottom of the page and allows users to move from one group of links to another.


How does friendly pagination affect SEO?

Splitting information across several pages increases the website’s usability. It’s also vital to implement pagination correctly, as this determines whether your important content will be indexed. Both the website’s usability and indexing directly affect its search engine visibility.

Let’s take a closer look at these factors.

Website usability

Search engines strive to show the most relevant and informative results at the top of search results and have many criteria for evaluating the website’s usability, as well as its content quality. Pagination affects:

- Behavioral factors

One of the indirect signs of content quality is the time users spend on the site. The more convenient it is for users to move between paginated content, the more time they spend on your site.

- Navigation

Website pagination makes it easier for users to find the content they are looking for. Users immediately understand the site structure and can get to the desired page in a single click.

- Page experience

According to Google, pagination can help you improve page performance (which is a Google Search ranking signal). And here’s why. Paginated pages load faster than all results at once. Plus, you improve backend performance by reducing the volume of content retrieved from databases.

Crawling and indexing

If you want paginated content to appear on SERPs, think about how bots crawl and index pages:

- Unique content

Search engines expect each indexed page to be unique: duplicate content poses indexing problems. Crawlers perceive paginated pages as separate URLs, yet these pages are likely to contain similar or identical content.

- Crawling budget

The search engine bot has an allowance for how many pages it can crawl during a single visit to the site. While Google bots are busy crawling numerous pagination pages, they will not be visiting other, possibly more important, URLs. As a result, important content may be indexed later or not indexed at all.

SEO solutions to paginated content

There are several ways to help search engines understand that your paginated content is not duplicated and to get it to rank well on SERPs.

Index all paginated pages and their content

For this method, you’ll need to optimize all paginated URLs according to search engine guidelines. This means you should make paginated content unique and establish connections between URLs to give clear guidance to crawlers on how you want them to index and display your content.

- Expected result: All paginated URLs are indexed and ranked in search engines. This versatile option will work for both short and long pagination chains.

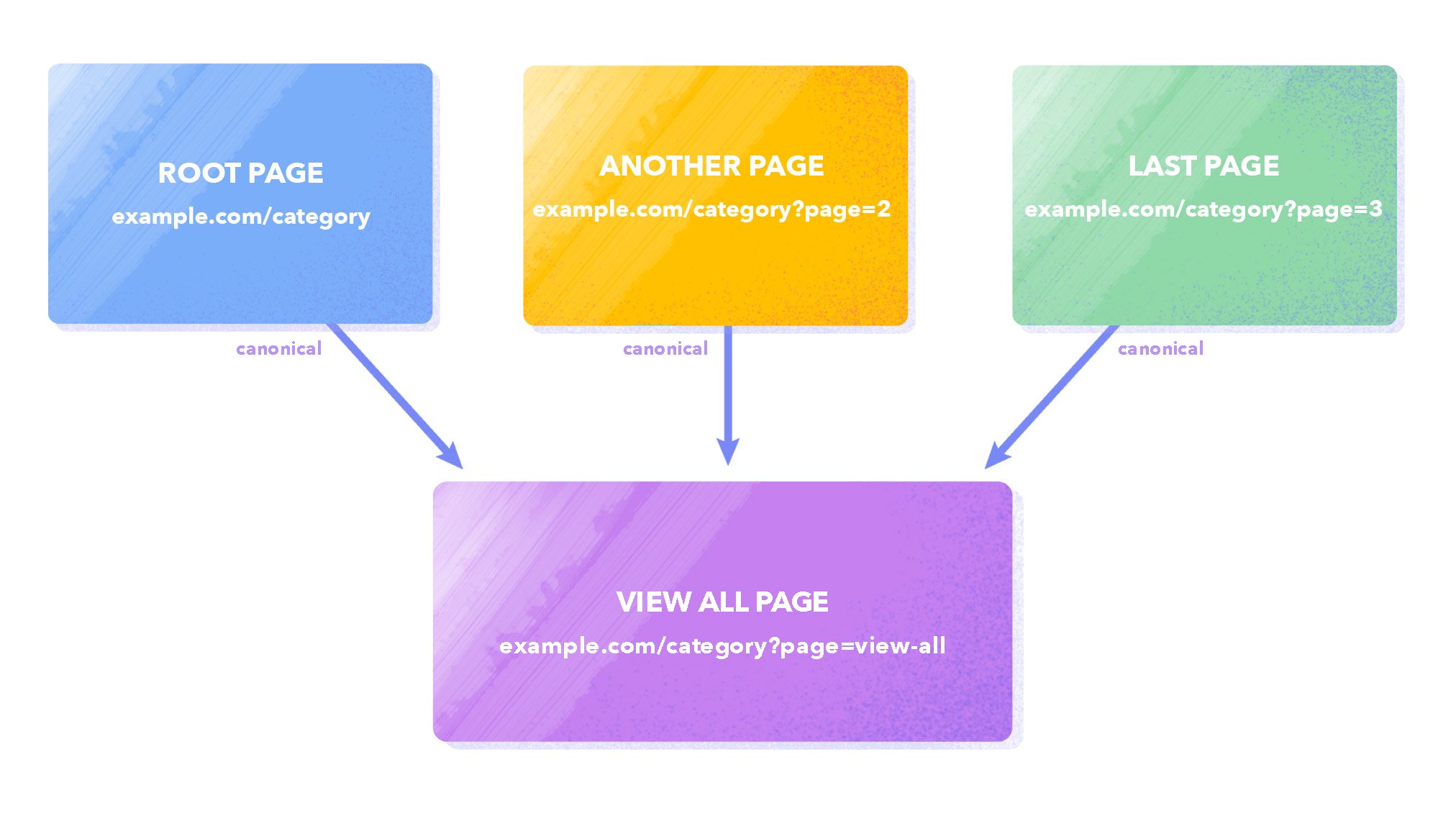

Index the View all page only

Another approach is to canonicalize the View All page (where all products, blog posts, comments, etc. are displayed). To do this, add a canonical link pointing to the View All page to each paginated URL. The canonical link tells search engines which page to prioritize for indexing. At the same time, crawlers can still follow the links on the non-canonical pages (as long as those pages don’t block search engine crawlers). This way, you indicate that non-primary pages such as page=2/3/4 don’t need to be indexed but can be followed.

Here is an example of the code you need to add to each paginated page:

<link href="http://site.com/canonical-page" rel="canonical" />

- Expected result: This method is suitable for small site categories that have, for instance, three or four paginated pages. If there are more pages, this option will not work well since loading a large amount of data on one page can negatively affect its speed.
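As an illustration of the canonical setup described above, here is a minimal Python sketch that produces the same canonical tag for every URL in a paginated series. The function names, the `example.com` URLs, and the `?page=n` URL scheme are assumptions made for the example; adapt them to however your site builds its pages.

```python
from html import escape
from urllib.parse import urlencode


def view_all_canonical_tag(view_all_url):
    """Return a <link rel="canonical"> tag pointing at the View All page."""
    return f'<link rel="canonical" href="{escape(view_all_url, quote=True)}" />'


def tags_for_series(category_url, view_all_url, total_pages):
    """Map every paginated URL (?page=1..N) to the same canonical tag.

    The ?page=n scheme is an assumption; any URL scheme works as long as
    each paginated page carries the tag in its <head>.
    """
    tag = view_all_canonical_tag(view_all_url)
    return {
        f"{category_url}?{urlencode({'page': n})}": tag
        for n in range(1, total_pages + 1)
    }


if __name__ == "__main__":
    series = tags_for_series(
        "https://example.com/shoes", "https://example.com/shoes/view-all", 3
    )
    for url, tag in series.items():
        print(url, "->", tag)
```

Every paginated URL gets an identical tag, which is the point: all of them name the View All page as the preferred version.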

Prevent paginated content from being indexed by search engines

A classic method to solve pagination issues is using a robots noindex tag. The idea is to exclude all paginated URLs from the index except the first one. This saves the crawling budget to let Google index your essential URLs. It is also a simple way to hide duplicate pages.

One option is to restrict access to paginated content by adding a directive to your robots.txt file:

Disallow: *page=
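To check which URLs a pattern like this would cover, the matching logic can be sketched in Python. This is a rough illustration, not a full robots.txt parser: `rule_matches` is a hypothetical helper written for this example, and it assumes the `*` (any sequence of characters) and trailing `$` (end of URL) wildcard semantics described in RFC 9309.

```python
import re


def rule_matches(pattern, path):
    """Check a URL path (including query string) against one robots.txt pattern.

    Assumed semantics: '*' matches any run of characters, a trailing '$'
    anchors the end of the URL, and matching starts at the beginning of
    the path.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape literal parts and join them with '.*' where the pattern had '*'.
    regex = ".*".join(re.escape(part) for part in pattern.split("*"))
    if anchored:
        regex += "$"
    return re.match(regex, path) is not None


if __name__ == "__main__":
    for path in ("/shoes?page=2", "/shoes", "/shoes?homepage=1"):
        print(path, rule_matches("*page=", path))
```

Note that the pattern covers `/shoes?page=2` but also `/shoes?homepage=1`, because any parameter name ending in `page=` matches. A broad wildcard can block more than intended.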

Still, since the robots.txt file is just a set of recommendations for crawlers, you can’t force them to observe any commands. This is why it is better to block paginated pages from indexing with the help of the robots meta tag.

To do this, add <meta name="robots" content="noindex"> to the <head> section of all paginated pages except the root one.

The HTML code will look like this:

<!DOCTYPE html>
<html>
<head>
<meta name="robots" content="noindex">
(…)
</head>
<body>(…)</body>
</html>

- Expected result: This method is suitable for large websites with multiple sections and categories. If you follow this method, you must have a well-optimized XML sitemap. One drawback is that you are likely to have issues with indexing product pages featured on paginated URLs that are closed to Googlebot.

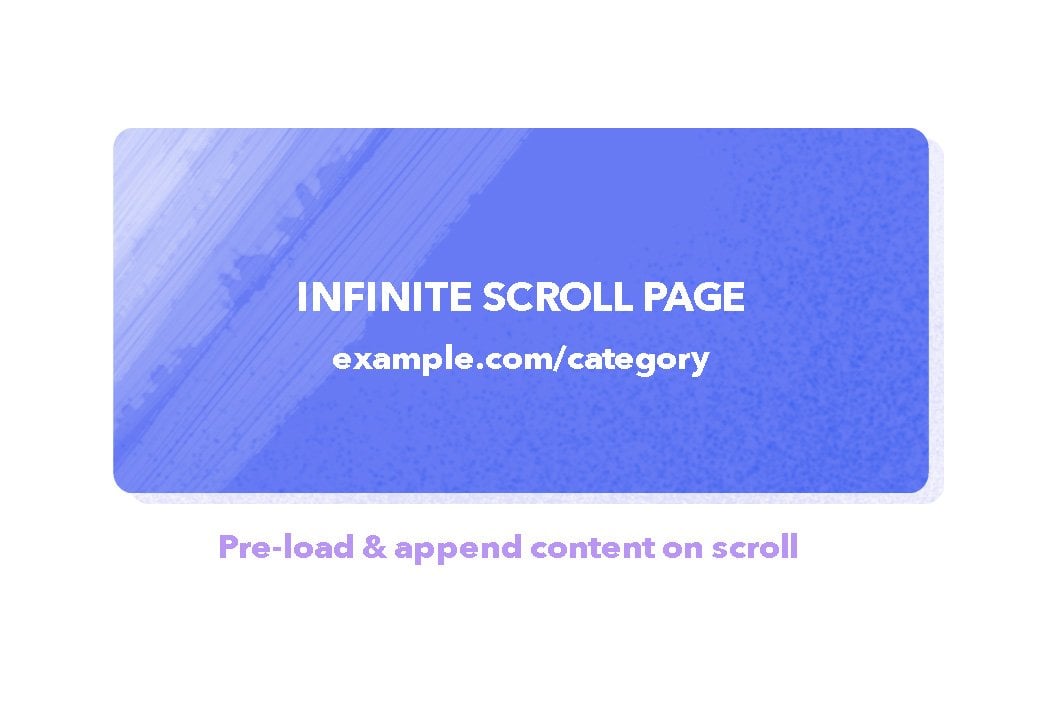

Infinite scrolling

You’ve probably come across endless scrolling of goods on ecommerce websites, where new products are constantly added to the page when you scroll to the bottom of the screen. This type of user experience is called single page content. Some experts prefer the Load more approach. In this method, in contrast to infinite scroll, content is loaded using a button that users can click in order to extend an initial set of displayed results.

Load more and infinite scroll are generally implemented using the Ajax load method (JavaScript).

According to Google recommendations, if you are implementing an infinite scroll and Load more experience, you need to support paginated loading, which assists with user engagement and content sharing. To do this, provide a unique link to each section that users can click, copy, share and load directly. The search engine recommends using the History API to update the URL when the content is loaded dynamically.
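The History API call itself happens in browser JavaScript and is not shown here. What can be sketched independently is the mapping it relies on: which unique URL corresponds to the chunk of results the user is currently viewing. The Python sketch below is only an illustration; the helper names, the `?page=n` scheme, and the fixed page size are assumptions.

```python
from urllib.parse import urlencode


def page_for_item(item_index, page_size):
    """1-based page number that contains the item at 0-based item_index."""
    return item_index // page_size + 1


def url_for_item(base_url, item_index, page_size):
    """Unique, directly loadable URL for the chunk containing the given item.

    This is the URL a client could place in the address bar once the user
    has scrolled to that item.
    """
    page = page_for_item(item_index, page_size)
    return f"{base_url}?{urlencode({'page': page})}"


if __name__ == "__main__":
    # With 24 items per chunk, item 30 belongs to the second chunk.
    print(url_for_item("https://example.com/shoes", 30, 24))
```

The server should return the same chunk when that URL is requested directly, so a shared or bookmarked link lands on the content the user was looking at.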

- Expected result: As website content automatically loads upon scroll, it keeps the visitor on the site for longer. But there are several disadvantages. First, the user can’t bookmark a particular page to return and explore it later. Second, infinite scroll can make the footer inaccessible as new results are continually loaded in, constantly pushing down the footer. Third, the scroll bar does not show the actual browsing progress and may cause scrolling fatigue.

Common pagination mistakes and how to detect them

Now, let’s talk about pagination issues, which can be detected with special tools.

1. Issues with implementing canonical tags.

As we said above, canonical links point bots to the priority URLs for indexing. The rel="canonical" attribute is placed within the <head> section of pages and defines the main version for duplicate and similar pages. In some cases, the canonical link is placed on the same page it leads to, increasing the likelihood of this URL being indexed.

If the canonical links aren’t set up correctly, then the crawler may ignore directives for the priority URL.

2. Combining a canonical URL with a noindex robots meta tag.

Never combine the noindex tag with rel=canonical, as they send contradictory signals to Google. While rel=canonical tells the search engine which URL is preferred and consolidates all signals on that page, noindex simply tells the crawler not to index the page. Of the two, noindex is the stronger signal for Google.

If you want the URL not to be indexed and still point to the canonical page, use a 301 redirect.

3. Blocking access to the page with robots.txt and using canonical tag simultaneously.

We described a similar mistake above: some specialists block access to non-canonical pages in robots.txt.

User-agent: *
Disallow: /

But you should not do so. Otherwise, the bot won’t be able to crawl the page and won’t consider the added canonical tag. This means the crawler will not understand which page is canonical.
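The second and third mistakes can be spotted programmatically. Below is a minimal Python sketch that uses the standard library `html.parser` module to read the canonical link and robots meta tag from a page and flag conflicting combinations. The class and function names, the message wording, and the `blocked_in_robots_txt` flag (which you would have to determine separately) are all assumptions made for this example.

```python
from html.parser import HTMLParser


class HeadSignals(HTMLParser):
    """Collect the canonical URL and robots directives from an HTML document."""

    def __init__(self):
        super().__init__()
        self.canonical = None
        self.robots = set()

    def handle_starttag(self, tag, attrs):
        a = {k: (v or "") for k, v in attrs}
        if tag == "link" and a.get("rel", "").lower() == "canonical":
            self.canonical = a.get("href")
        elif tag == "meta" and a.get("name", "").lower() == "robots":
            directives = a.get("content", "").split(",")
            self.robots.update(d.strip().lower() for d in directives)


def find_conflicts(html, page_url, blocked_in_robots_txt=False):
    """Return a list of human-readable conflicts found on one page."""
    parser = HeadSignals()
    parser.feed(html)
    problems = []
    points_elsewhere = parser.canonical and parser.canonical != page_url
    if "noindex" in parser.robots and points_elsewhere:
        problems.append("noindex combined with a canonical pointing to another URL")
    if blocked_in_robots_txt and parser.canonical:
        problems.append("canonical tag on a page that robots.txt blocks from crawling")
    return problems


if __name__ == "__main__":
    page = (
        '<html><head><meta name="robots" content="noindex, follow">'
        '<link rel="canonical" href="https://example.com/shoes" /></head></html>'
    )
    print(find_conflicts(page, "https://example.com/shoes?page=2"))
```

The URL comparison here is a plain string match, so a real audit would normalize URLs (trailing slashes, protocol, parameter order) before comparing them.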

Tools for finding SEO pagination issues on a site

Webmaster tools can quickly detect issues related to site optimization, including page pagination.

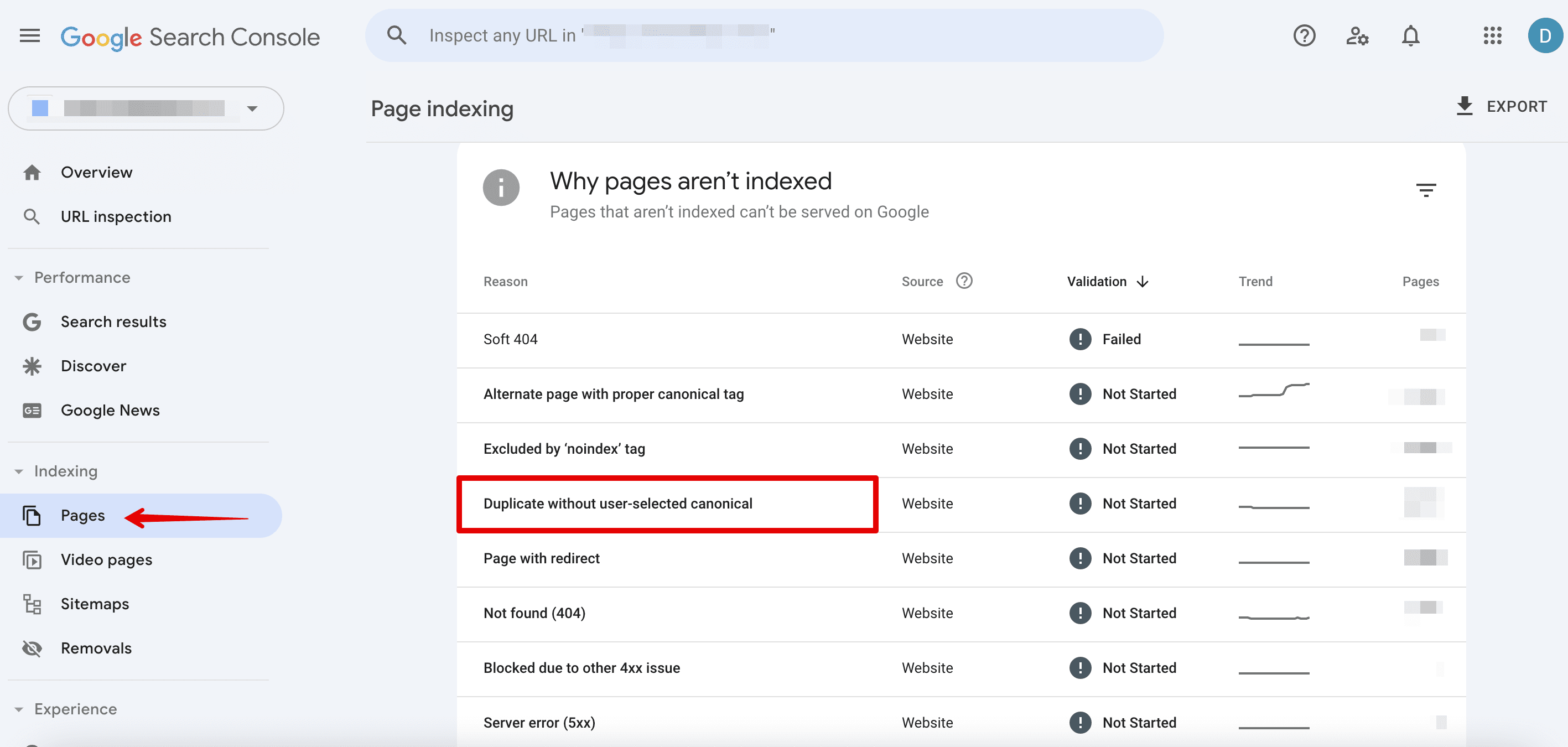

Google Search Console

The Non-indexed tab of the Pages section displays all the non-indexed URLs. Here, you can see which site pages have been identified by the search engine as duplicates.

It is worth paying attention to the following reports:

- Duplicate without user-selected canonical

- Duplicate, Google chose different canonical than user

There, you’ll see data on problems with implementing canonical tags. It means Google has not been able to determine which page is the original/canonical version. It could also mean that the priority URL chosen by the webmaster does not match the URL recommended by Google.

SEO tools for in-depth website auditing

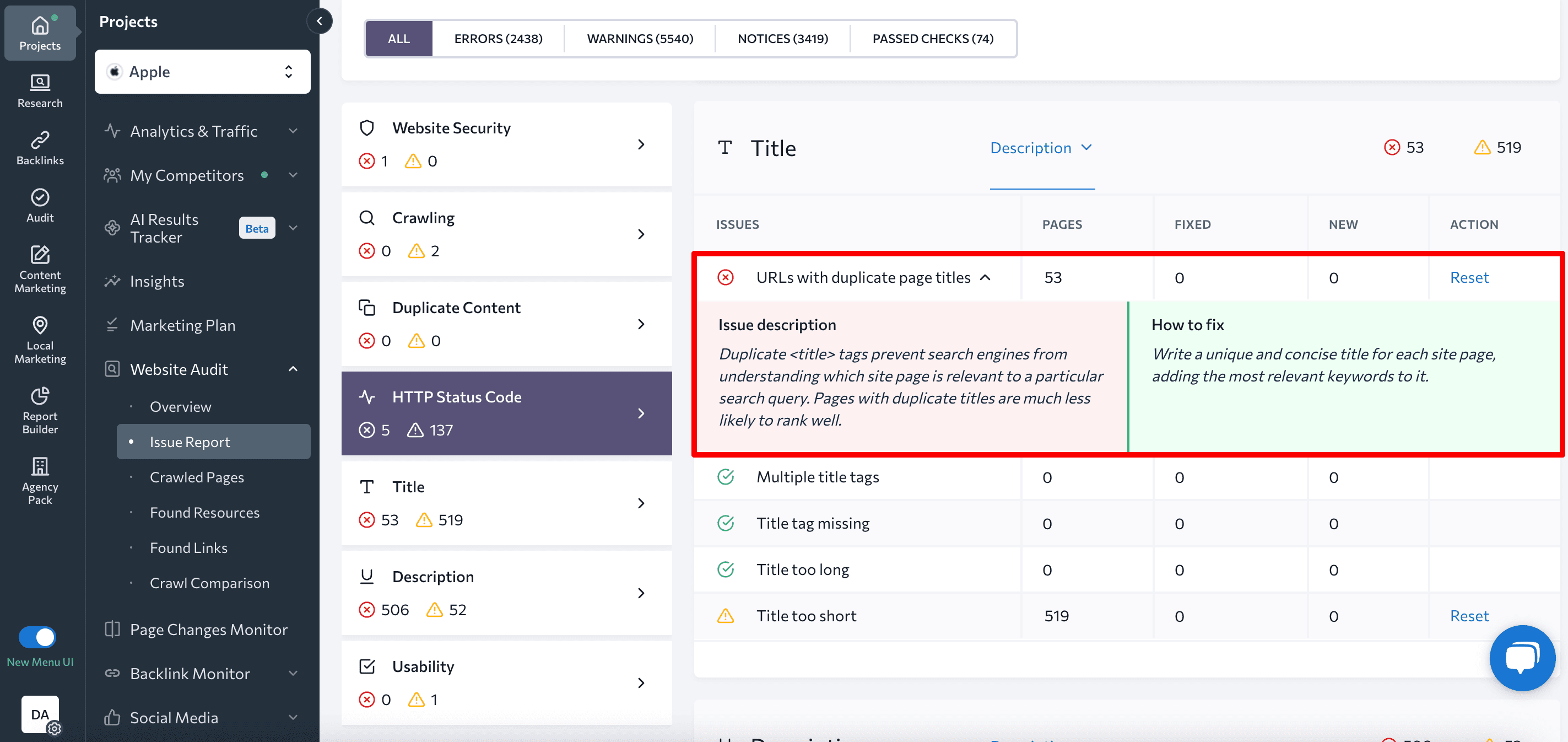

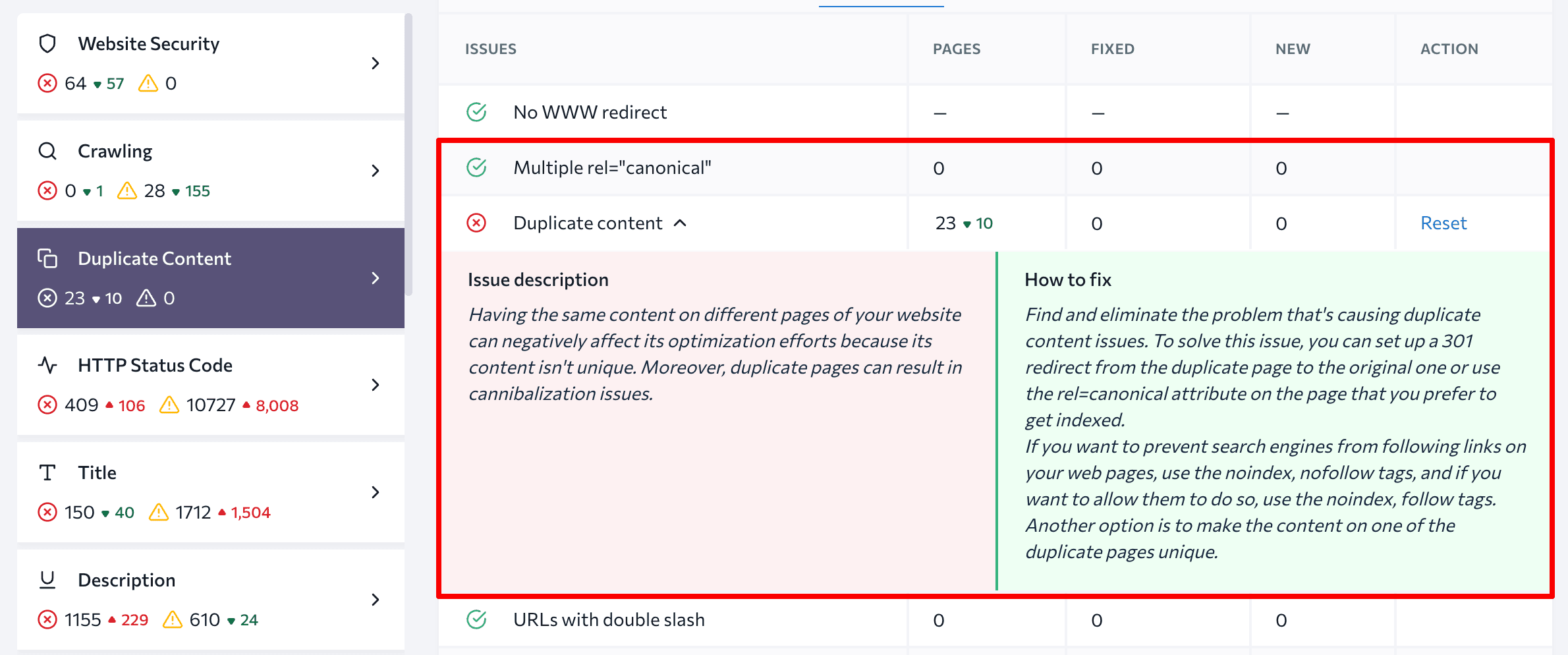

Special tools can help you perform comprehensive site checks for all technical parameters. SE Ranking allows you to conduct a website SEO audit and analyze more than 120 parameters. It also provides tips on how to address issues that impact your site’s performance.

This tool helps identify all pagination issues, including duplicate content and canonical URLs.

Additionally, the Website Audit tool will point out any title, description and H1 duplicates, which can be an issue with paginated URLs.

How do you optimize paginated content?

Let’s break down how to set up SEO pagination depending on your chosen approach.

Goal 1: Index all paginated pages

Having duplicated titles, descriptions, and H1s for paginated URLs is not a big problem. This is common practice. Still, if you choose to index all your paginated URLs, it is better to make every pagination component unique.

How to set up SEO pagination:

1. Give each page a unique URL.

If you want Google to treat URLs that are in a paginated sequence as distinct pages, use URL nesting on the principle of url/n or include a ?page=n query parameter, where n is the page number in the sequence.

Don’t use URL fragment identifiers (the text after a # in a URL) for page numbers since Google ignores them and does not recognize the text following the character. If Googlebot sees such a URL, it may not follow the link, thinking it has already retrieved the page.

2. Link your paginated URLs to each other.

To ensure search engines understand the relationship between paginated content, include links from each page to the following page using <a href> tags. Also, remember to add a link on every page in a group that goes back to the first page. This will signal to the search engine which page is primary in the chain.

In the past, Google used the HTML link elements <link rel="next" href="…"> and <link rel="prev" href="…"> to identify the relationship between component URLs in a paginated series. Google no longer uses these tags, although other search engines may still use them.

3. Ensure that your pages are canonical.

To make each paginated URL canonical, you should specify the rel="canonical" attribute in the <head> tag of each page and add a link pointing to that page (self-referencing rel=canonical link tag method).

4. Do On-Page SEO

To prevent any warnings in Google Search Console or any other tool, try deoptimizing paginated page H1 tags and adding useful text and a category image (with an optimized file name and alt tag) to the root page.

To perform a complete on-page SEO audit and identify the issues keeping you from reaching the top of the SERP, use SE Ranking’s SEO Page Checker. With this tool, you’ll get actionable insights on how to improve individual pages and outrank your competitors based on 94 parameters that impact search rankings.
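Steps 1 to 3 above can be sketched together in a few lines of Python. This is a minimal sketch under stated assumptions: the `?page=n` scheme is one of the two URL options mentioned in step 1, and the function names, link labels, and `example.com` URLs are made up for the example.

```python
from urllib.parse import urlencode


def page_url(base_url, n):
    """URL of page n in the series, using a ?page=n query parameter."""
    return f"{base_url}?{urlencode({'page': n})}"


def render_page_links(base_url, n, total_pages):
    """Return (canonical_tag, nav_links) for page n of total_pages."""
    # Step 3: self-referencing canonical, each page names itself.
    canonical_tag = f'<link rel="canonical" href="{page_url(base_url, n)}" />'
    nav_links = []
    # Step 2: every page after the first links back to the first page...
    if n > 1:
        nav_links.append(f'<a href="{page_url(base_url, 1)}">First</a>')
    # ...and every page except the last links to the following page.
    if n < total_pages:
        nav_links.append(f'<a href="{page_url(base_url, n + 1)}">Next</a>')
    return canonical_tag, nav_links


if __name__ == "__main__":
    tag, links = render_page_links("https://example.com/blog", 2, 3)
    print(tag)
    print("\n".join(links))
```

The links are plain `<a href>` elements on purpose, since those are what the steps above ask for; page numbers never go into a `#` fragment.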

Goal 2: Only index the View all page

This strategy will help you effectively optimize your page with all the paginated content (where all results are displayed) so that it can rank high for necessary keywords.

How to set up SEO pagination:

1. Create a page that includes all the paginated results.

There can be several such pages depending on the number of website sections and categories for which pagination is done.

2. Specify the View all page as canonical.

Every paginated page’s <head> tag must contain the rel="canonical" attribute directing the crawler to the priority page for indexing.

3. Improve the View all page loading speed.

Optimized site speed not only enhances the user experience but can also help to boost your search engine rankings. Identify what is slowing your View all page down using Google’s PageSpeed Insights. Then, minimize any negative factors affecting speed.

Goal 3: Prevent paginated URLs from indexing

You need to instruct crawlers on how to index website pages properly. Only paginated content should be closed from indexing. All product pages and other results divided into clusters must be visible to search engine bots.

How to set up SEO pagination:

1. Exclude all paginated pages from indexing except the first one.

Avoid using the robots.txt file for this. It is better to apply the following method:

- Block indexing with the robots meta tag.

You should add the meta tag <meta name="robots" content="noindex, follow"> to the <head> section of every paginated page except the first one. This combination of directives prevents lists of pages from being indexed while allowing crawlers to follow the links held by these pages.

2. Optimize the first paginated page.

Since this page should be indexed, prepare it for ranking, paying special attention to content and tags.
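In a page template, the rule from step 1 comes down to a single condition on the page number. Here is a minimal Python sketch; the function name is hypothetical, and how the page number reaches your template depends on your platform.

```python
def robots_meta(page_number):
    """Robots meta tag for a paginated page; the first page stays indexable."""
    if page_number <= 1:
        # No tag on the first page: default crawling and indexing applies.
        return ""
    # Later pages: keep them out of the index but let crawlers follow links.
    return '<meta name="robots" content="noindex, follow">'


if __name__ == "__main__":
    for n in (1, 2, 3):
        print(n, repr(robots_meta(n)))
```

The returned string goes into the `<head>` of the page being rendered.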

Final thoughts

So, what does pagination mean? Pagination is when you split up content into numbered pages, which improves website usability. If you do it right, important content will show up where it should.

There are several ways to implement pagination on a website:

- Indexing all the paginated pages

- Indexing the View All page only

- Preventing all paginated pages from being indexed, except for the first one

Special tools can help you detect pagination issues and check if you did it right. You can try, for instance, the Pages section of the Google Search Console or SE Ranking’s Website Audit for a more detailed analysis.