33+ technical SEO issues that affect most websites

Wise people learn from other people’s SEO mistakes.

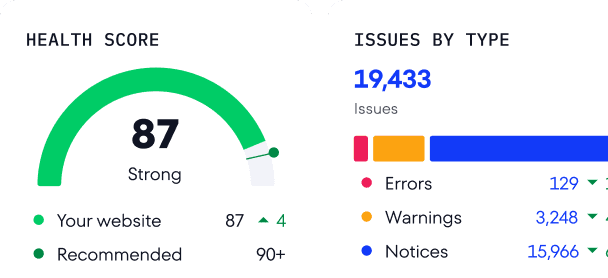

To make it easier to find the top errors made by many sites, we analyzed 418,125 unique site audits from our Website Audit tool over the past year. This allowed us to detect common SEO issues and order them by prevalence and severity.

We included a section at the end that discusses less critical but commonly occurring technical SEO errors that deserve your attention. We strongly encourage you to read to the end.

-

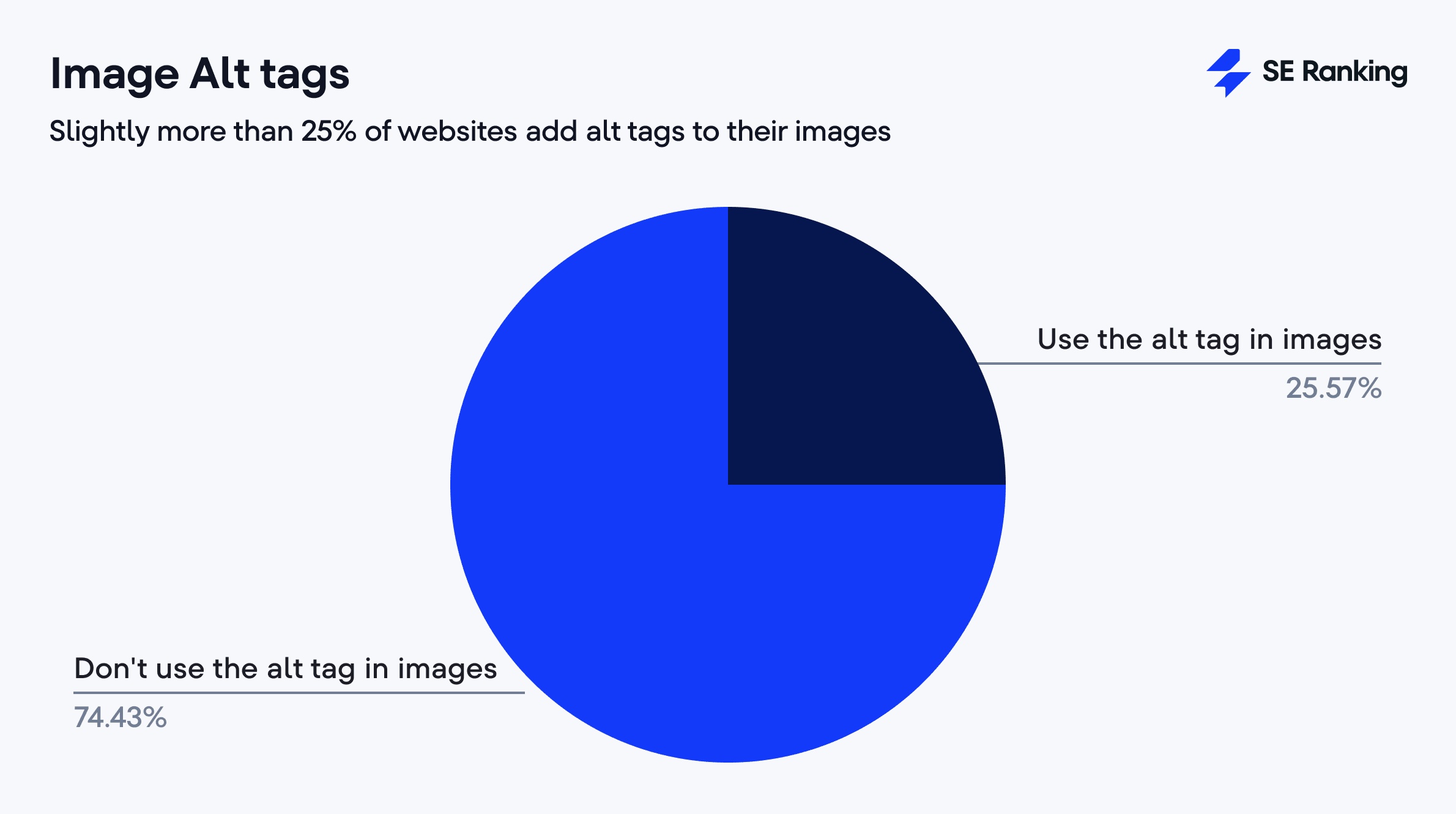

Alt text:

74.43% of websites have images that lack descriptions.

-

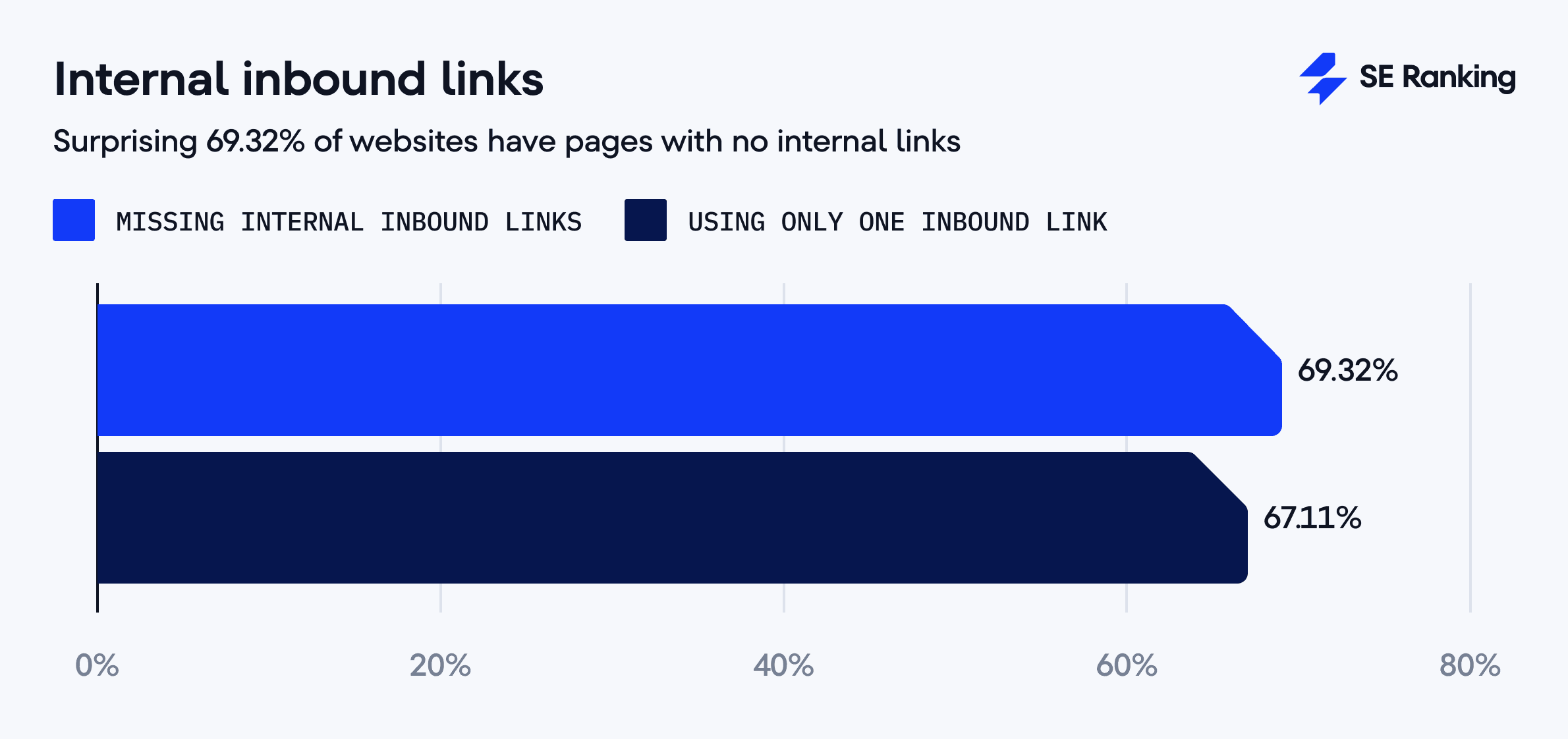

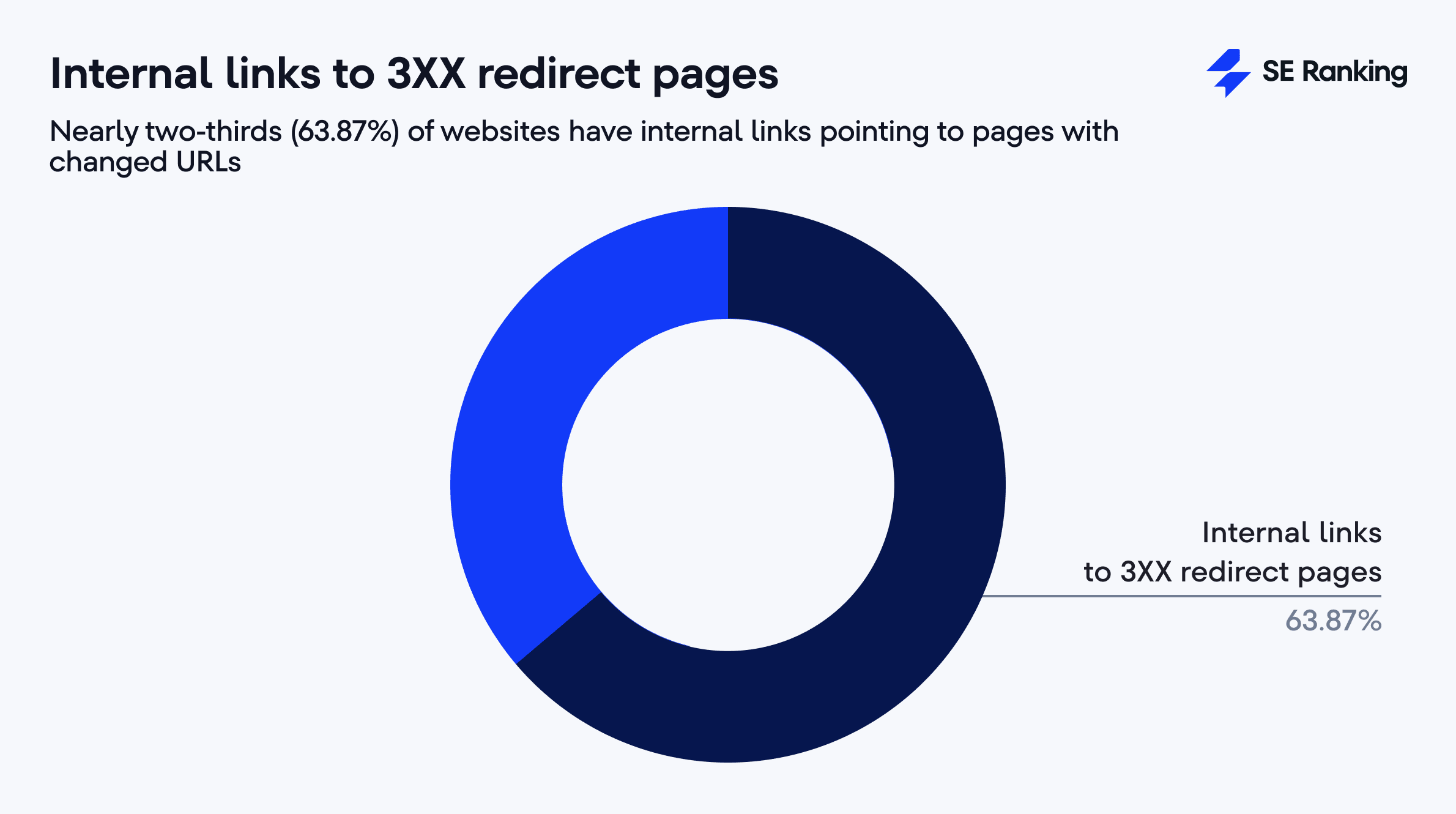

Internal linking issues:

69.32% have no inbound links, 68.09% lack descriptive anchors, 67.11% only have one inbound link, and 63.87% redirect to other pages.

-

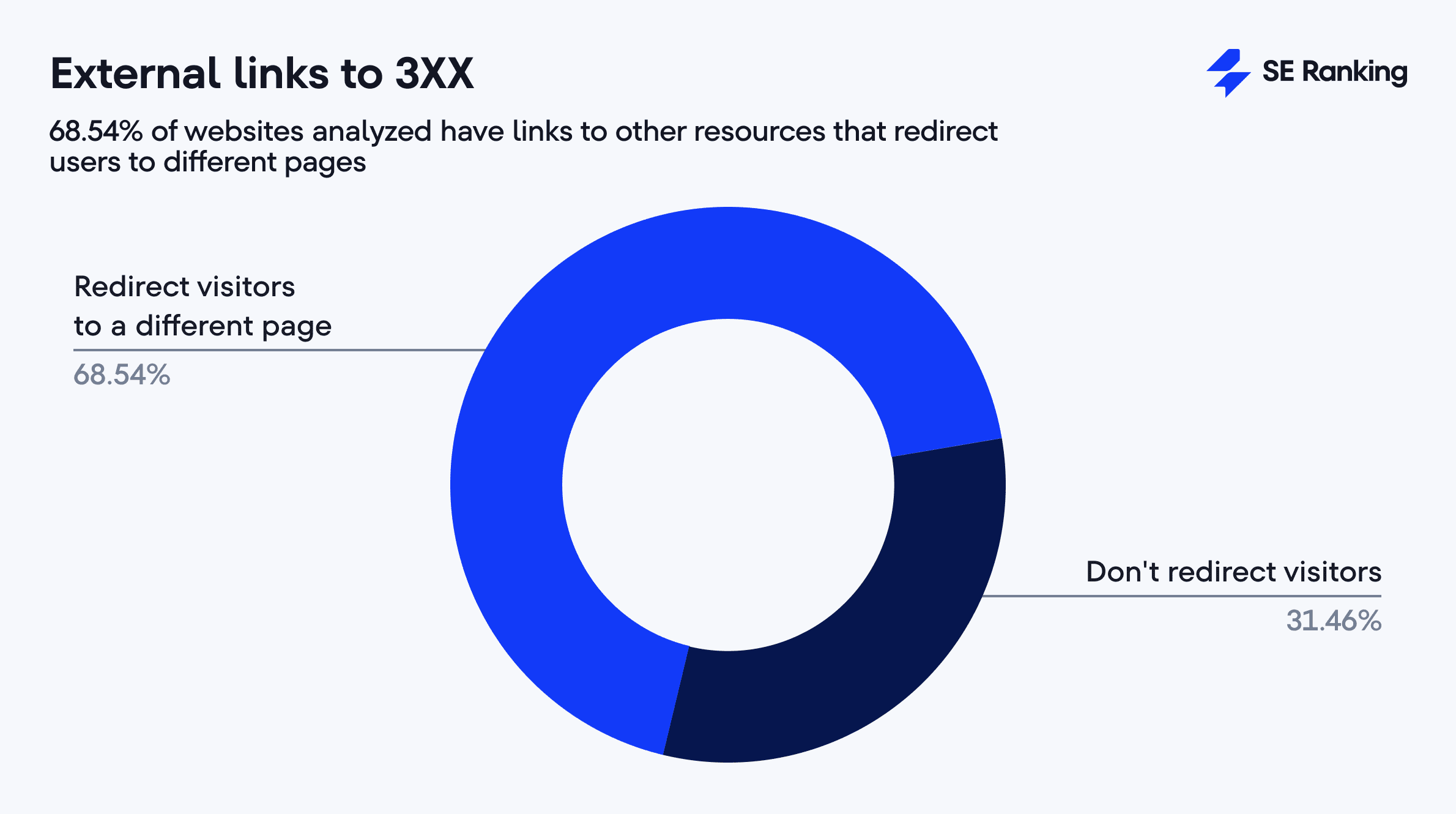

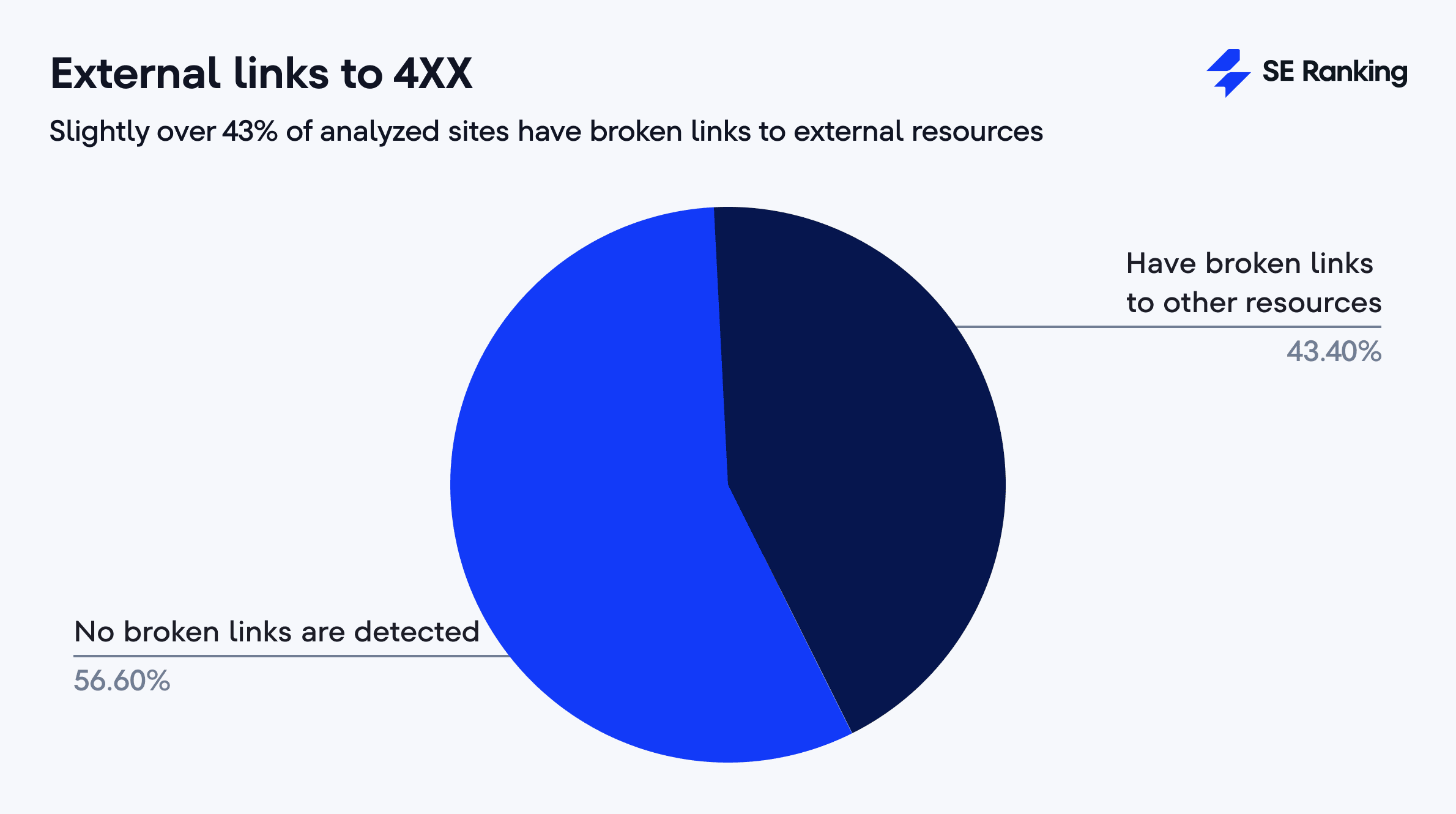

External link issues:

68.54% redirect to other pages, 54.58% lack descriptive anchors, 43.40% lead to broken pages.

-

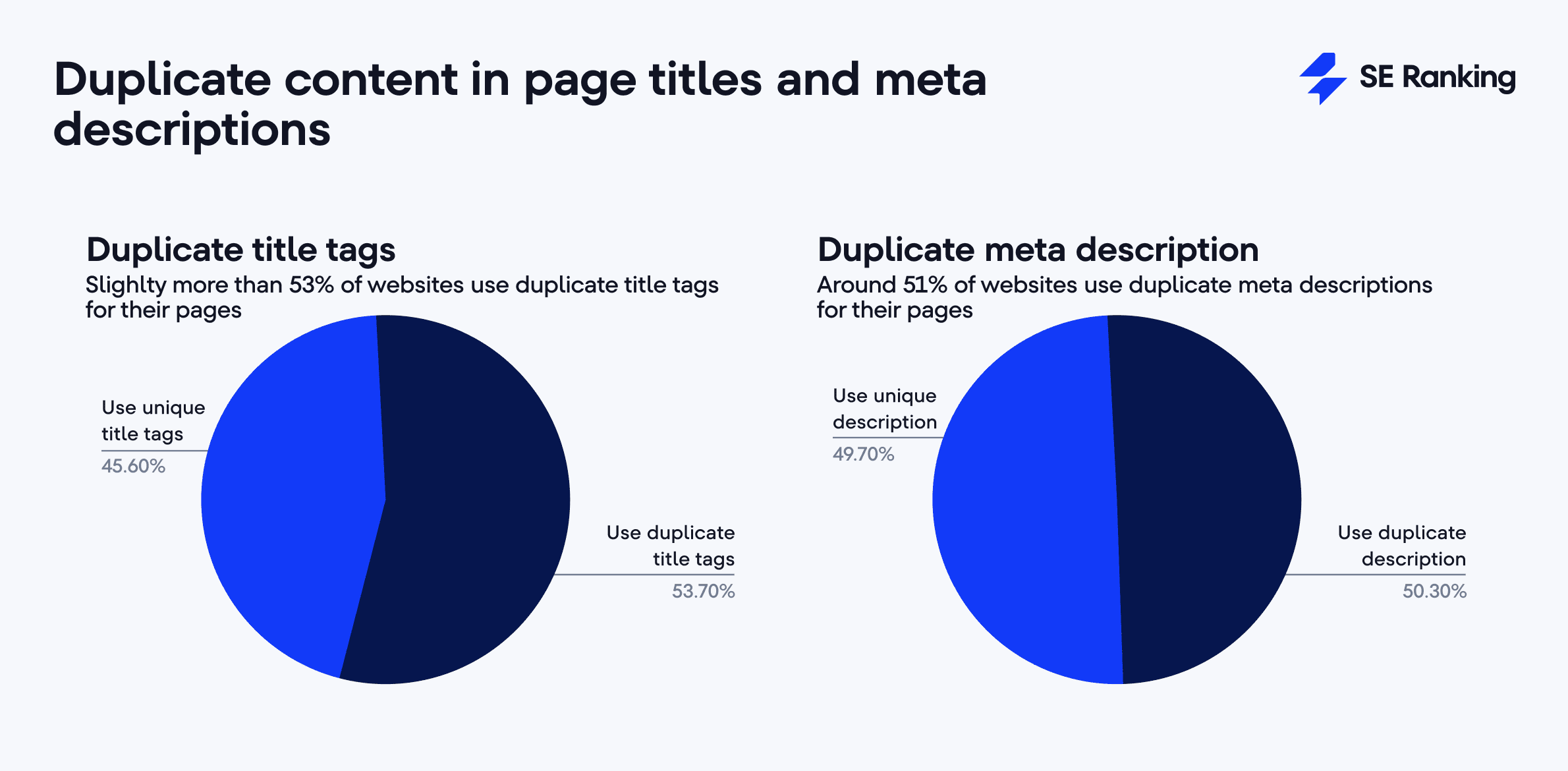

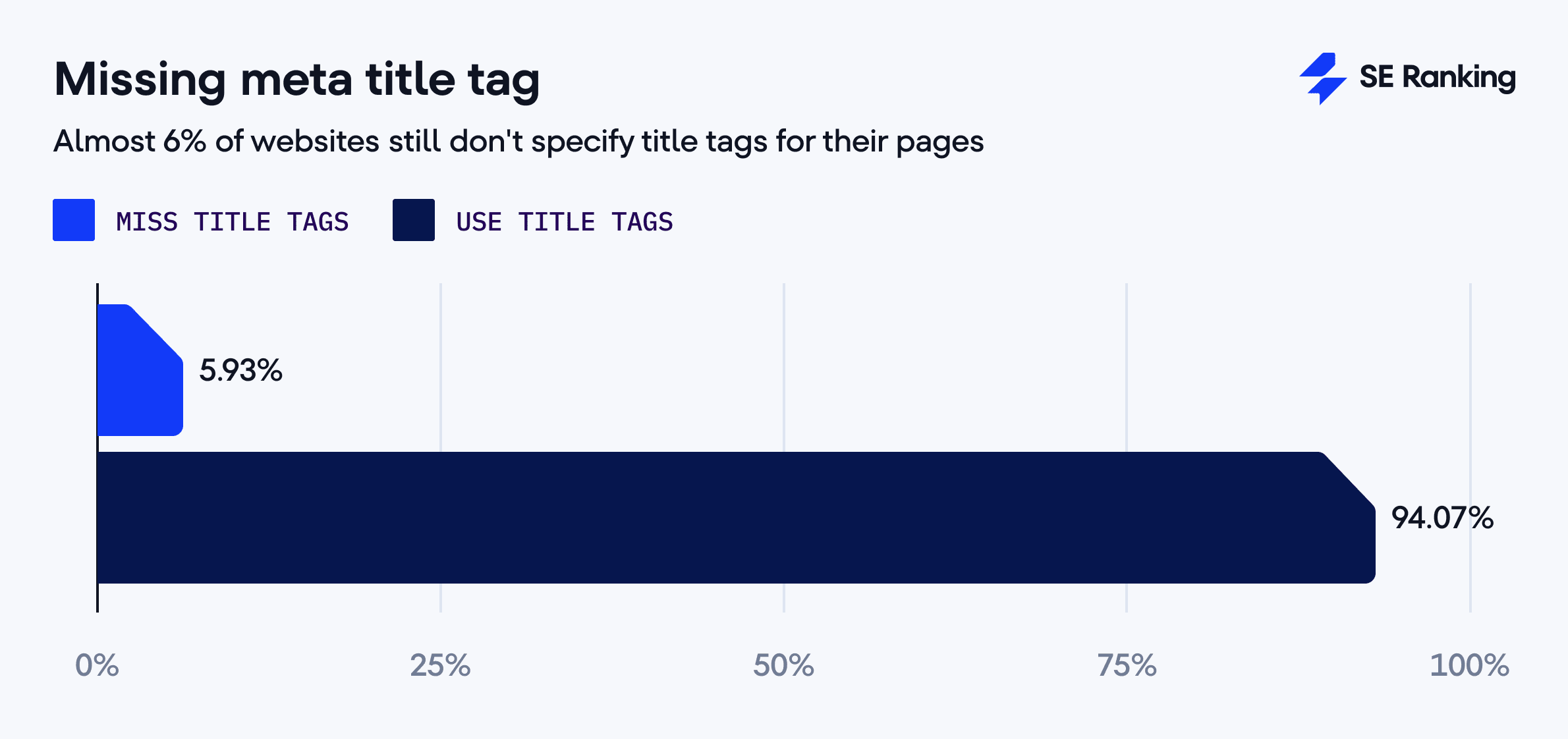

Title issues:

67.52% too long, 53.69% duplicate, 49.36% too short, 5.93% missing.

-

Meta description issues:

63.53% too long, 65.38% missing, 50.31% duplicate.

-

3XX HTTP status codes:

67.42% use redirects that hurt performance.

-

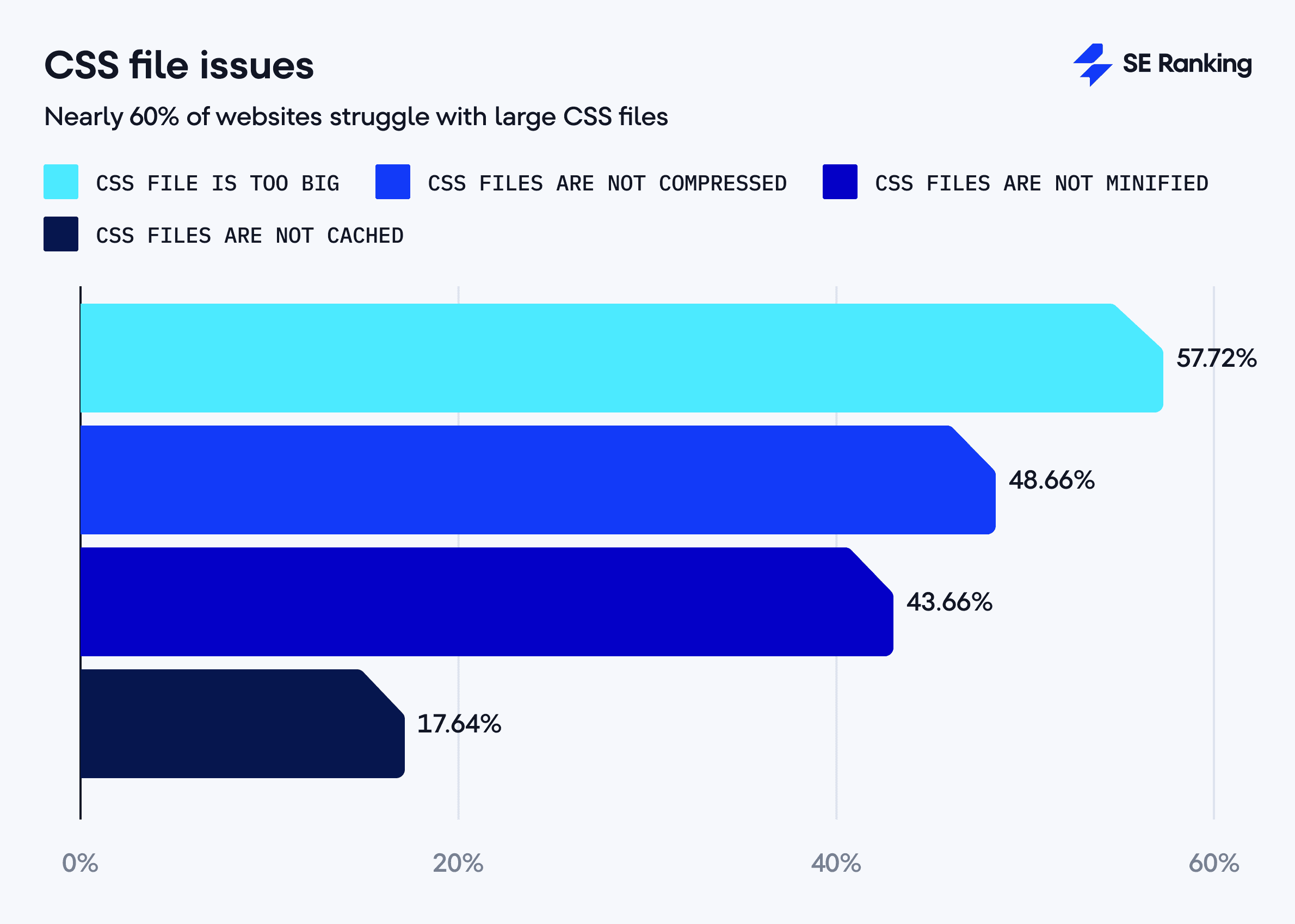

CSS files:

57.72% too large, 48.66% uncompressed, 43.66% not minified, 17.64% not cached.

-

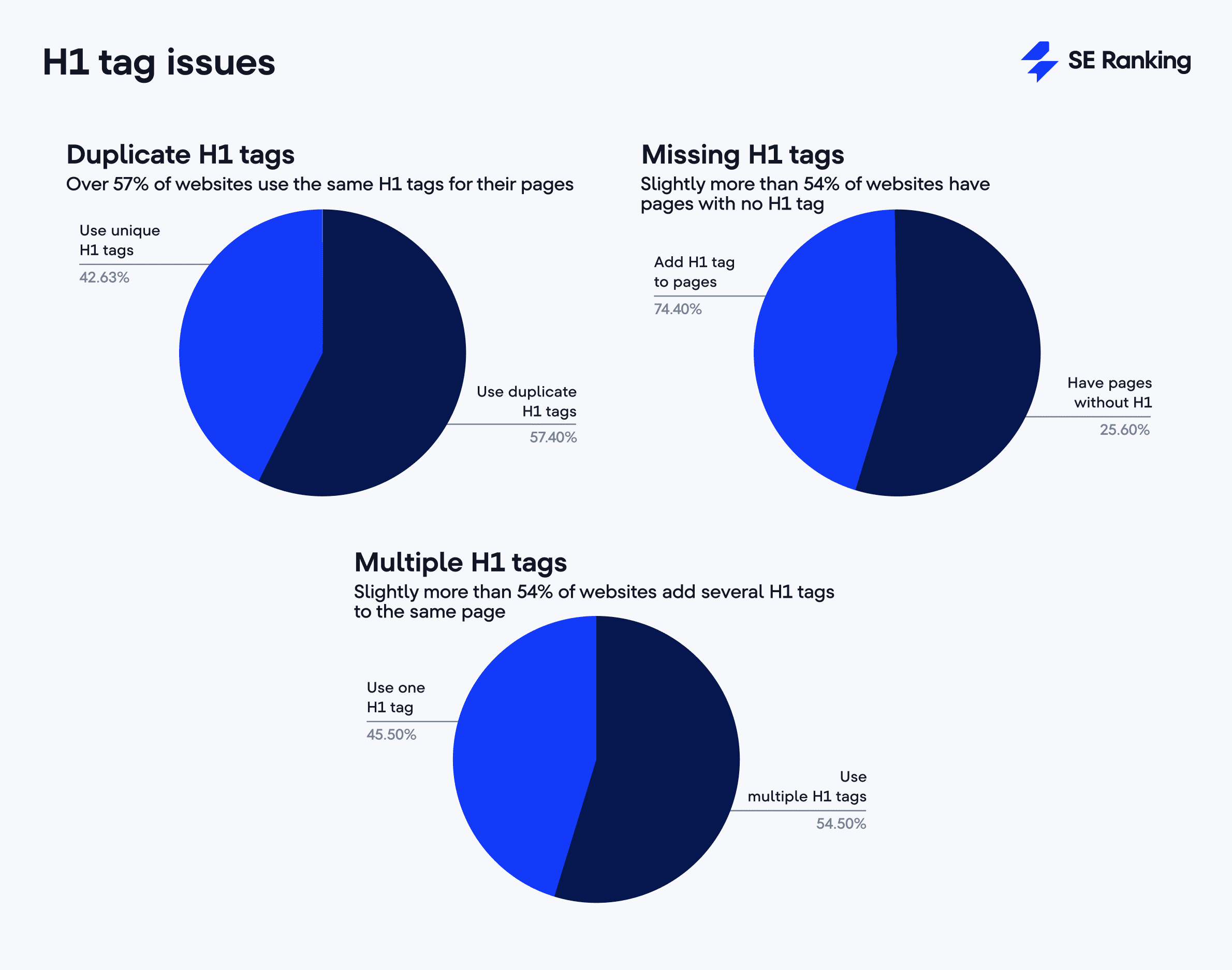

Headings:

57.37% duplicate H1s, 54.67% missing H1s, 54.52% multiple H1s.

-

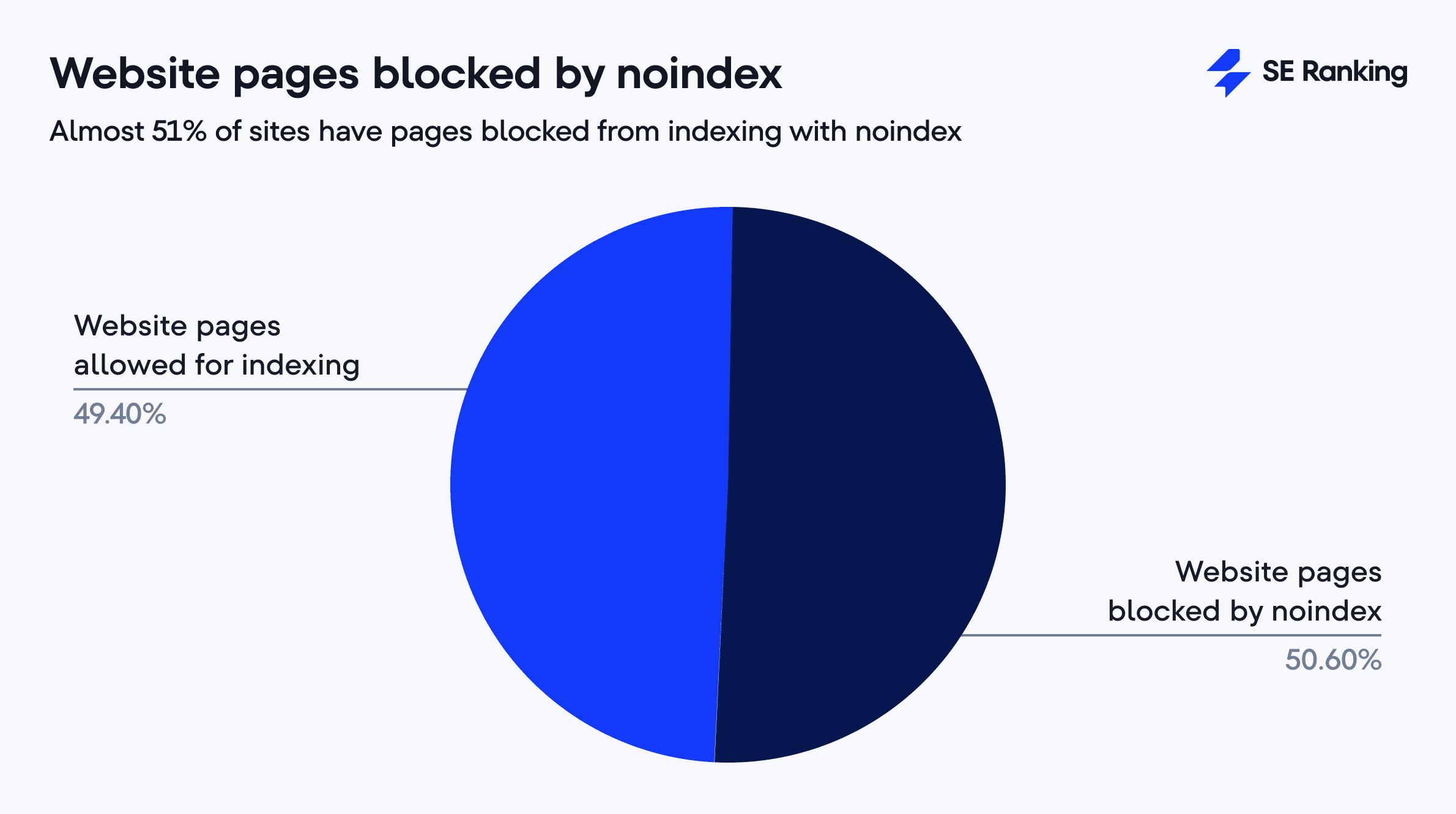

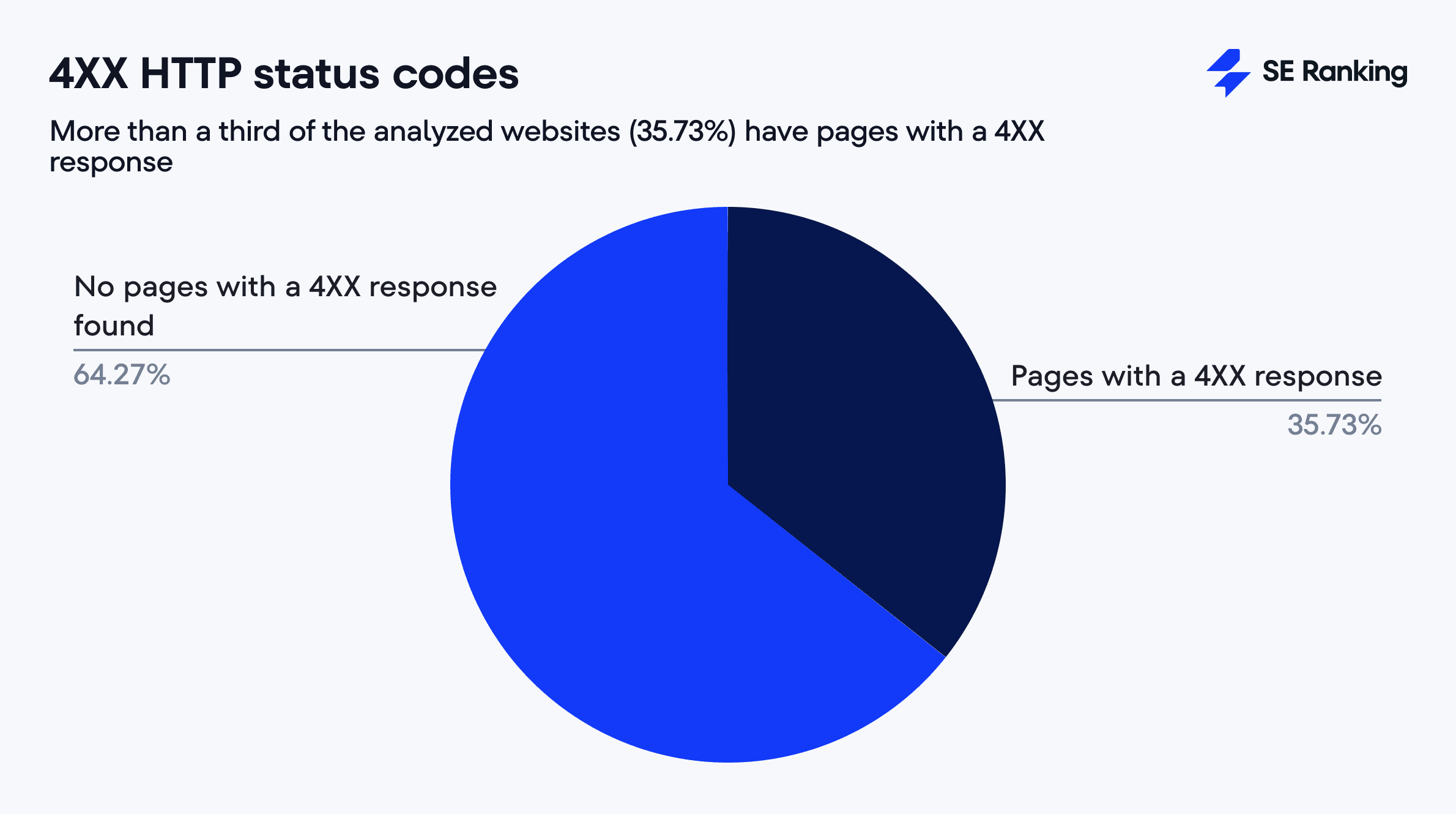

Crawling & indexing:

50.58% blocked by noindex, 35.73% have pages that return 4XX status codes.

-

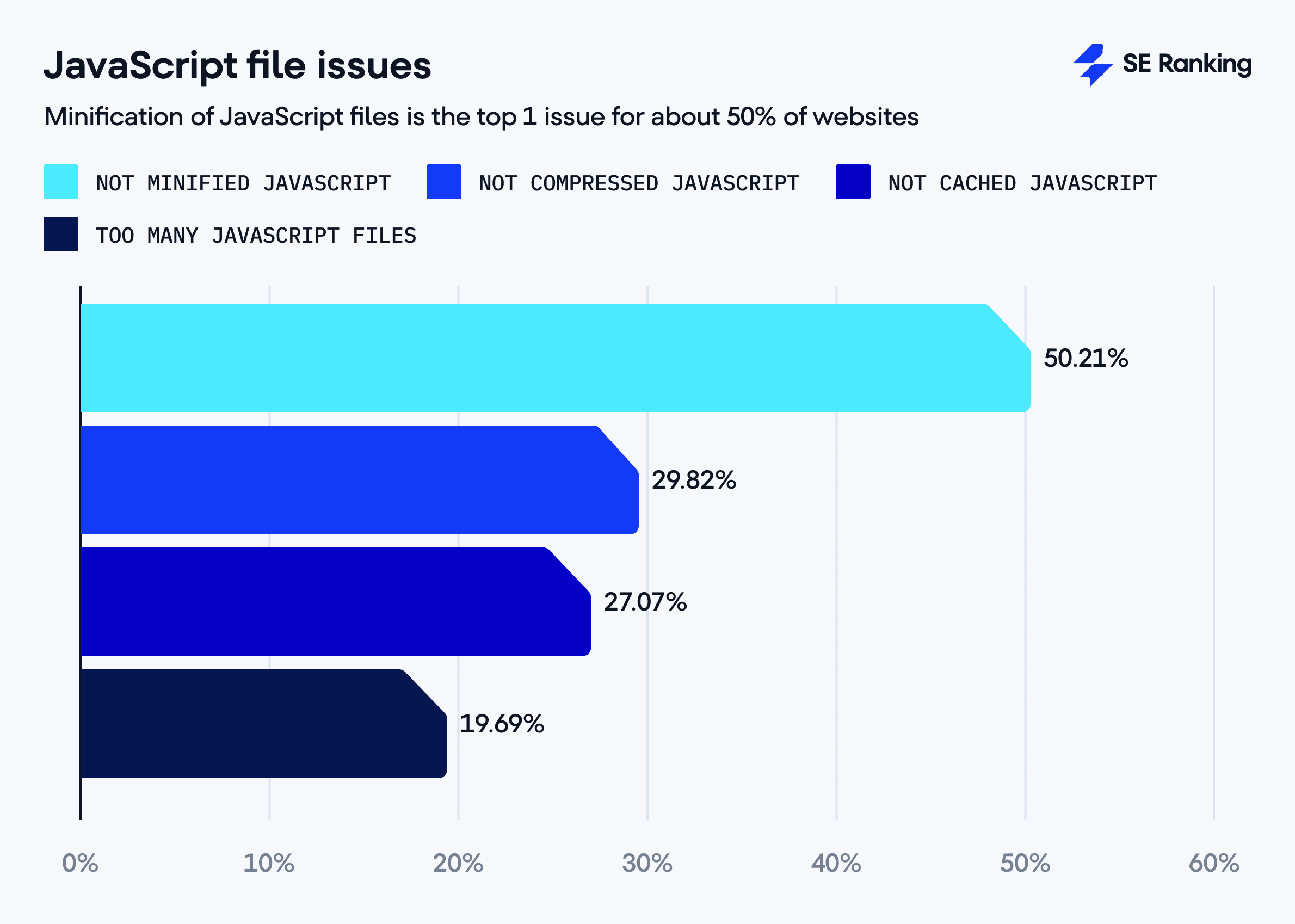

JavaScript files:

50.21% not minified, 29.82% uncompressed, 27.07% not cached, 19.69% too many, 6.84% contain broken external files, and 7.16% fail to load properly.

-

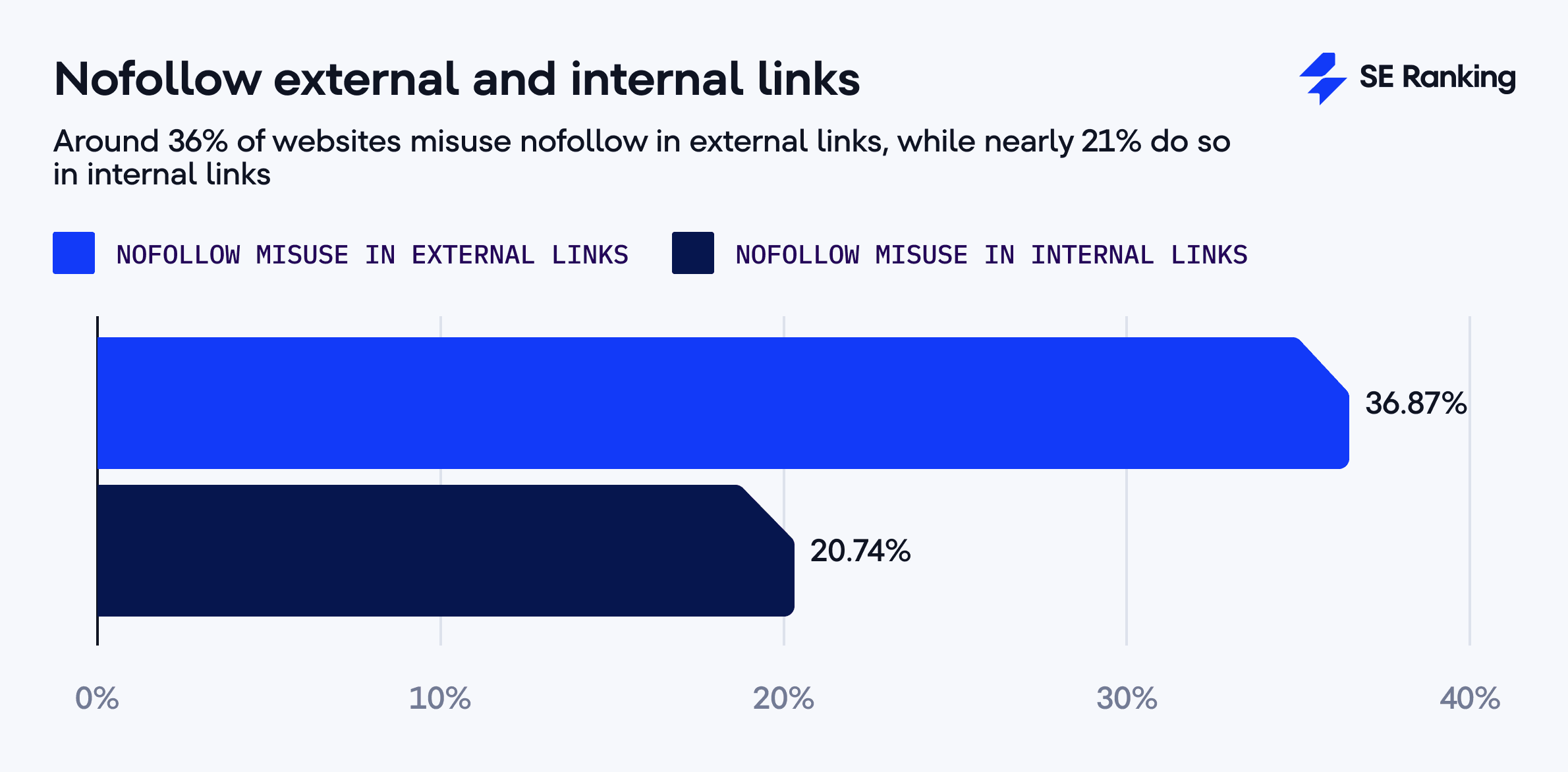

Links with nofollow attributes:

36.87% have external nofollow links, and 20.74% have internal nofollow links that hurt the natural flow of link juice.

-

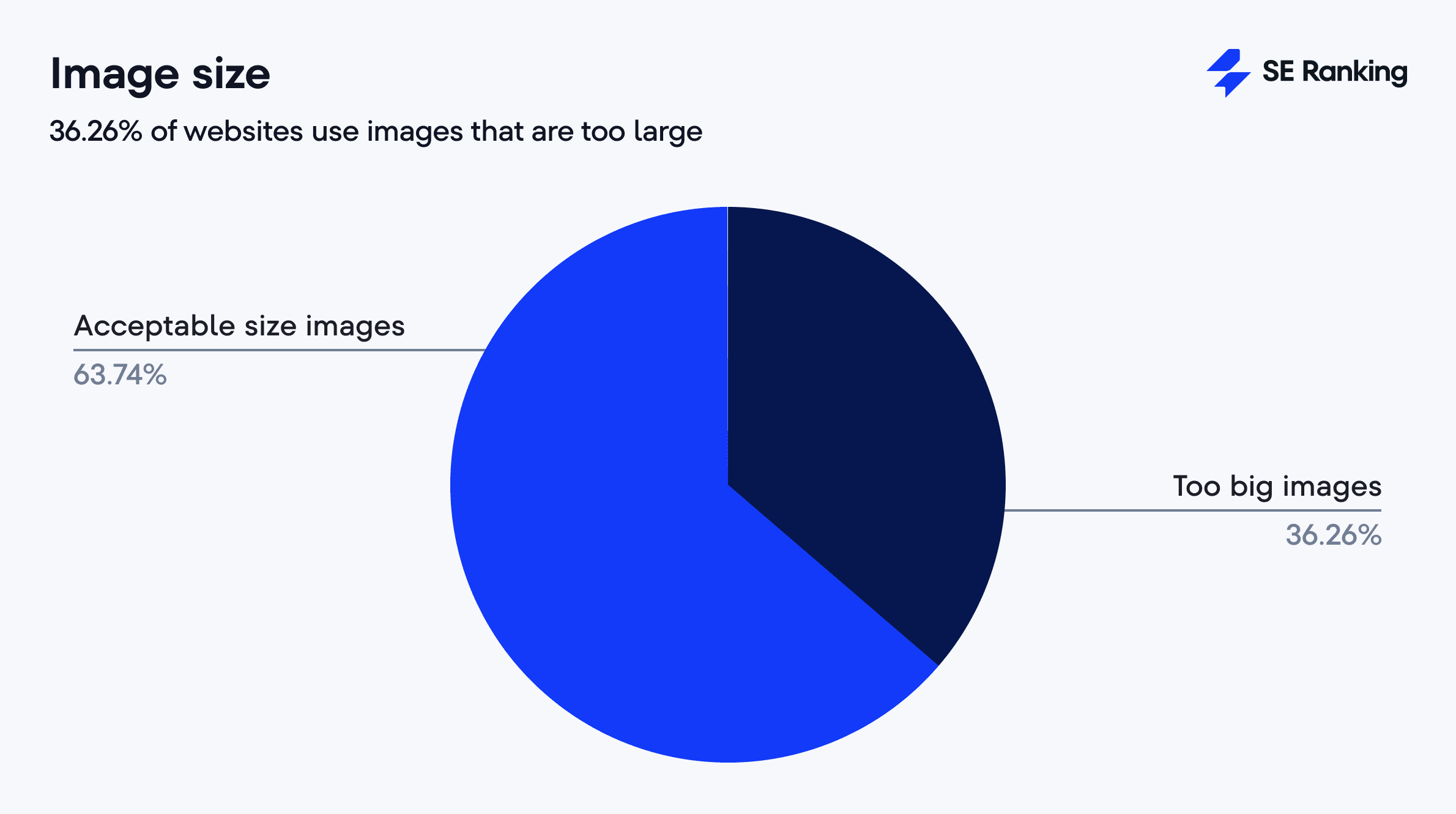

Image size issues:

36.26% oversized, increasing load time.

-

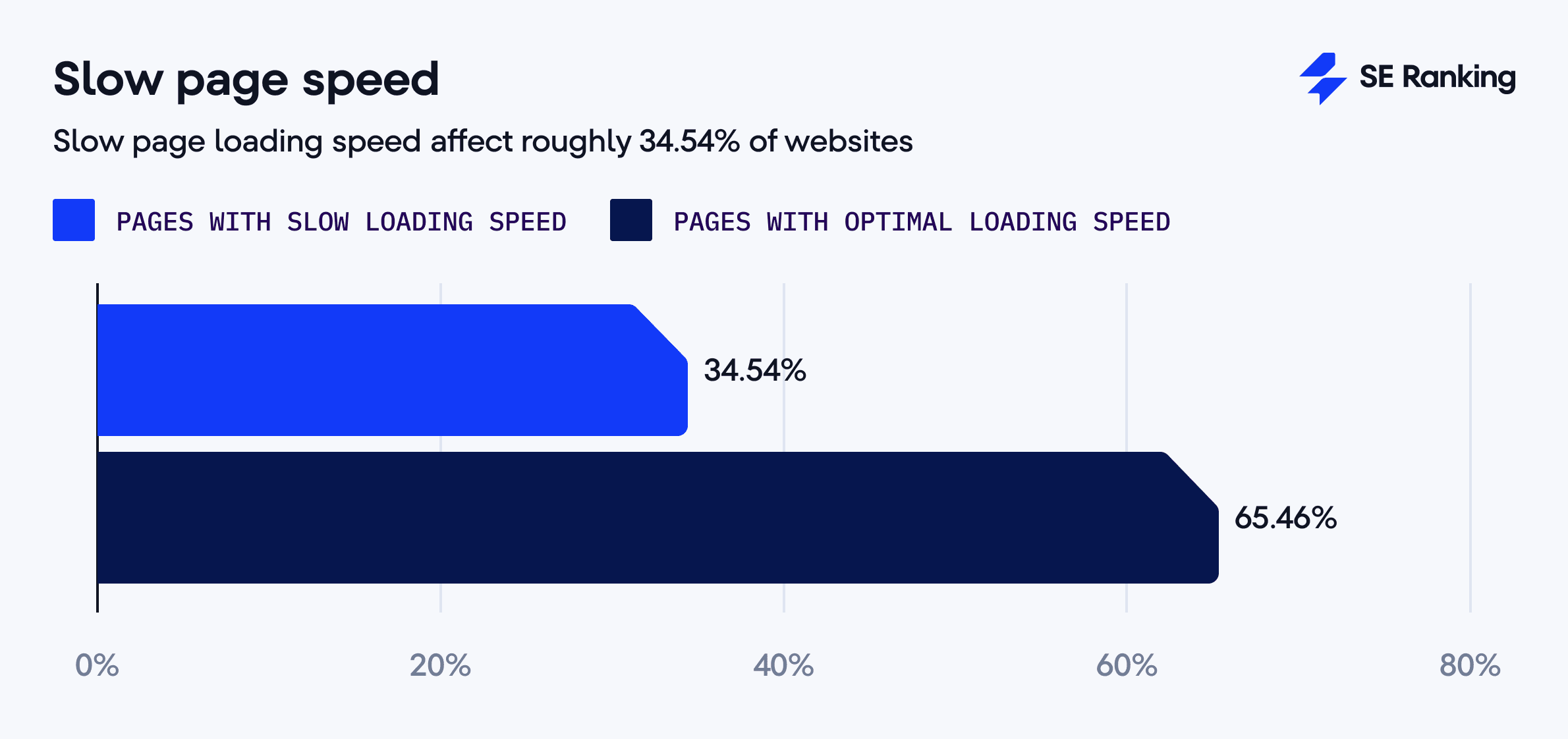

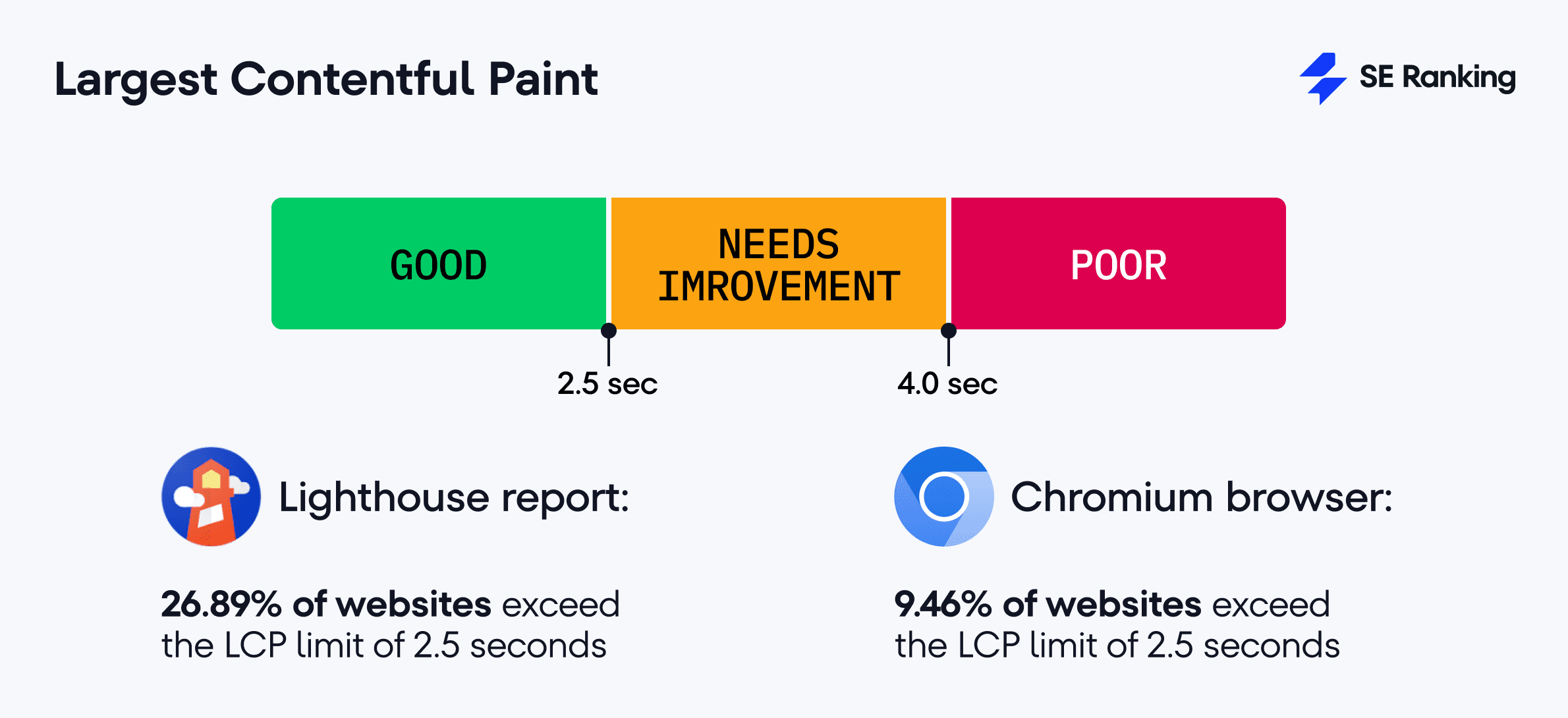

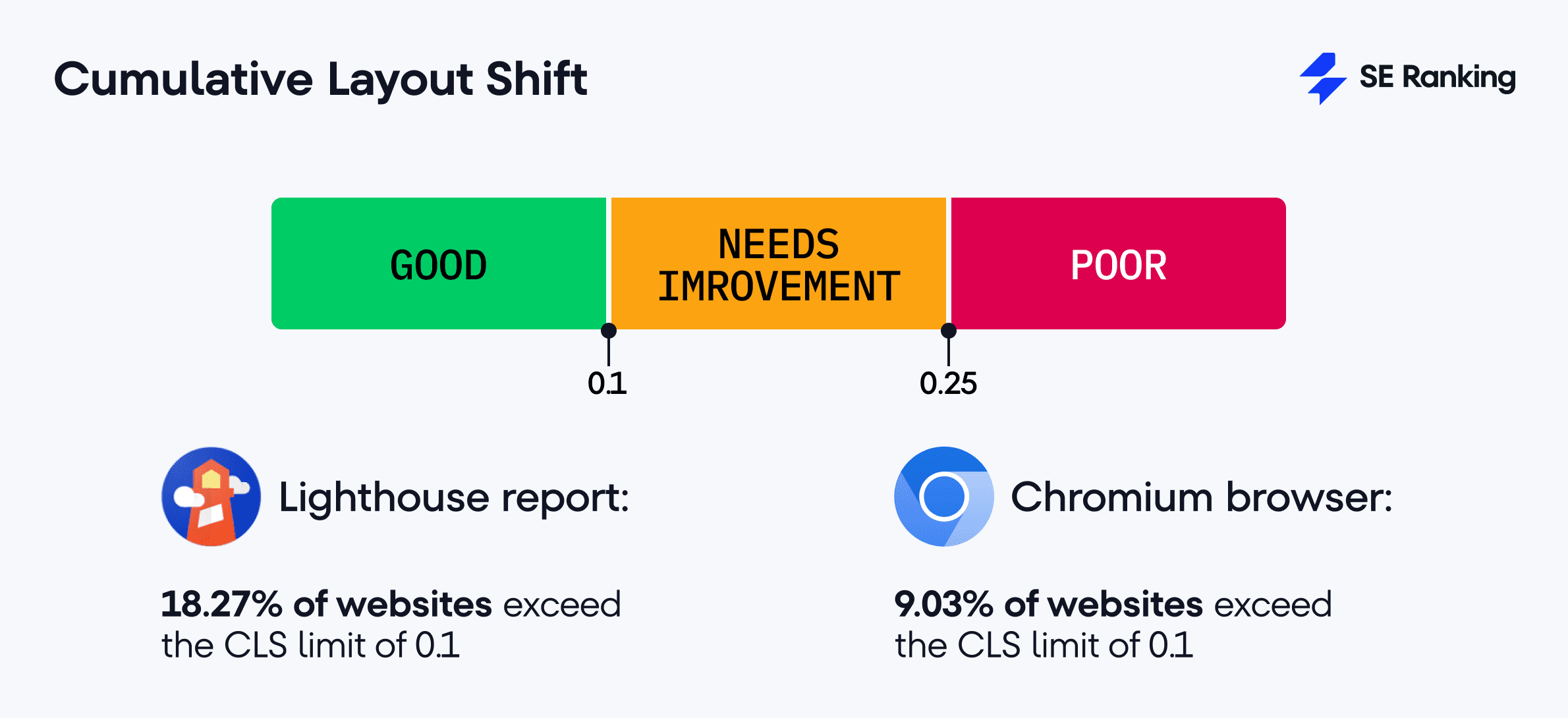

Speed and performance issues:

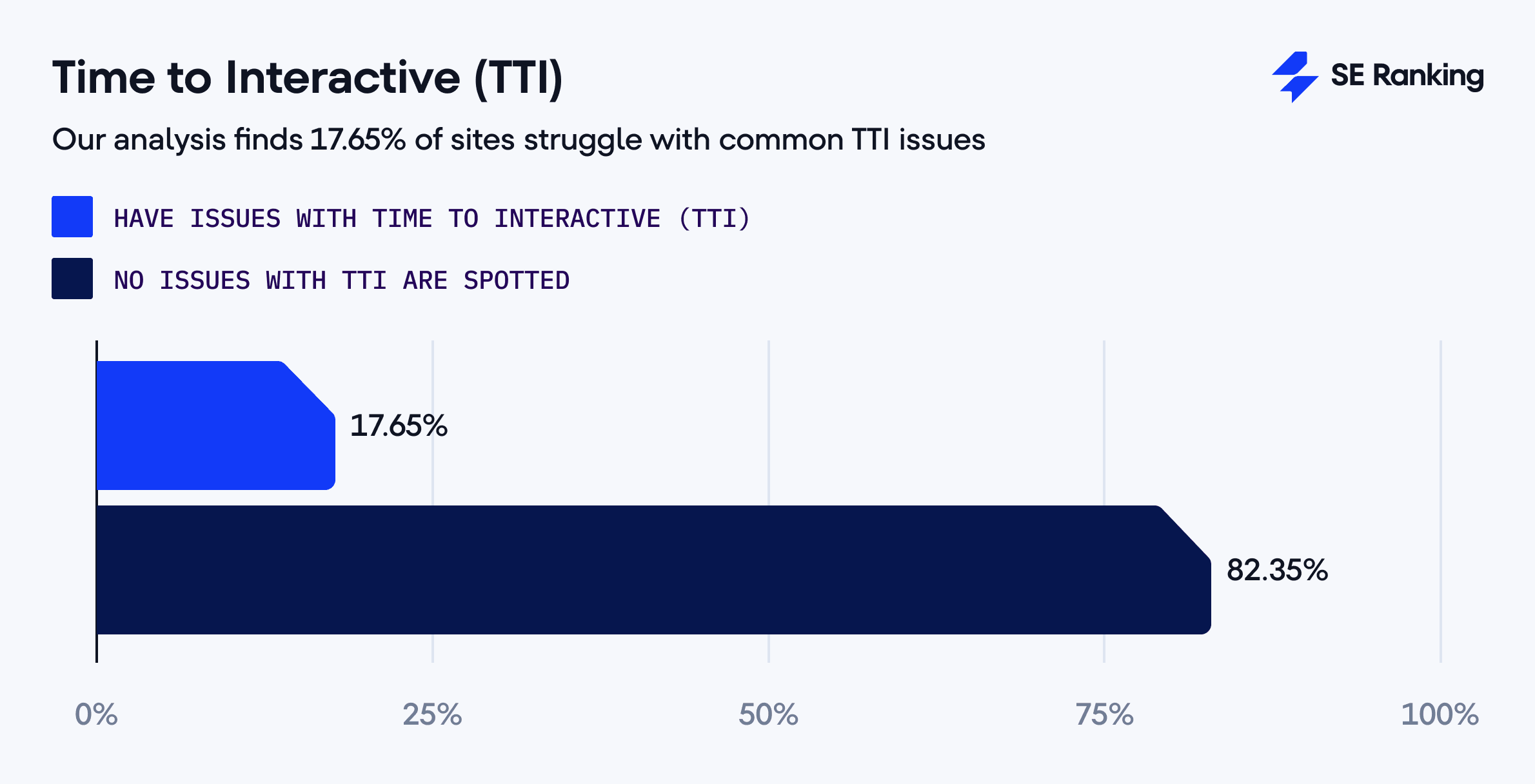

34.54% slow loading, 26.89% high LCP in lab environment and 9.46% in real-world conditions, 18.27% high CLS in lab environment and 9.03% in real-world conditions, 17.65% have long TTI.

-

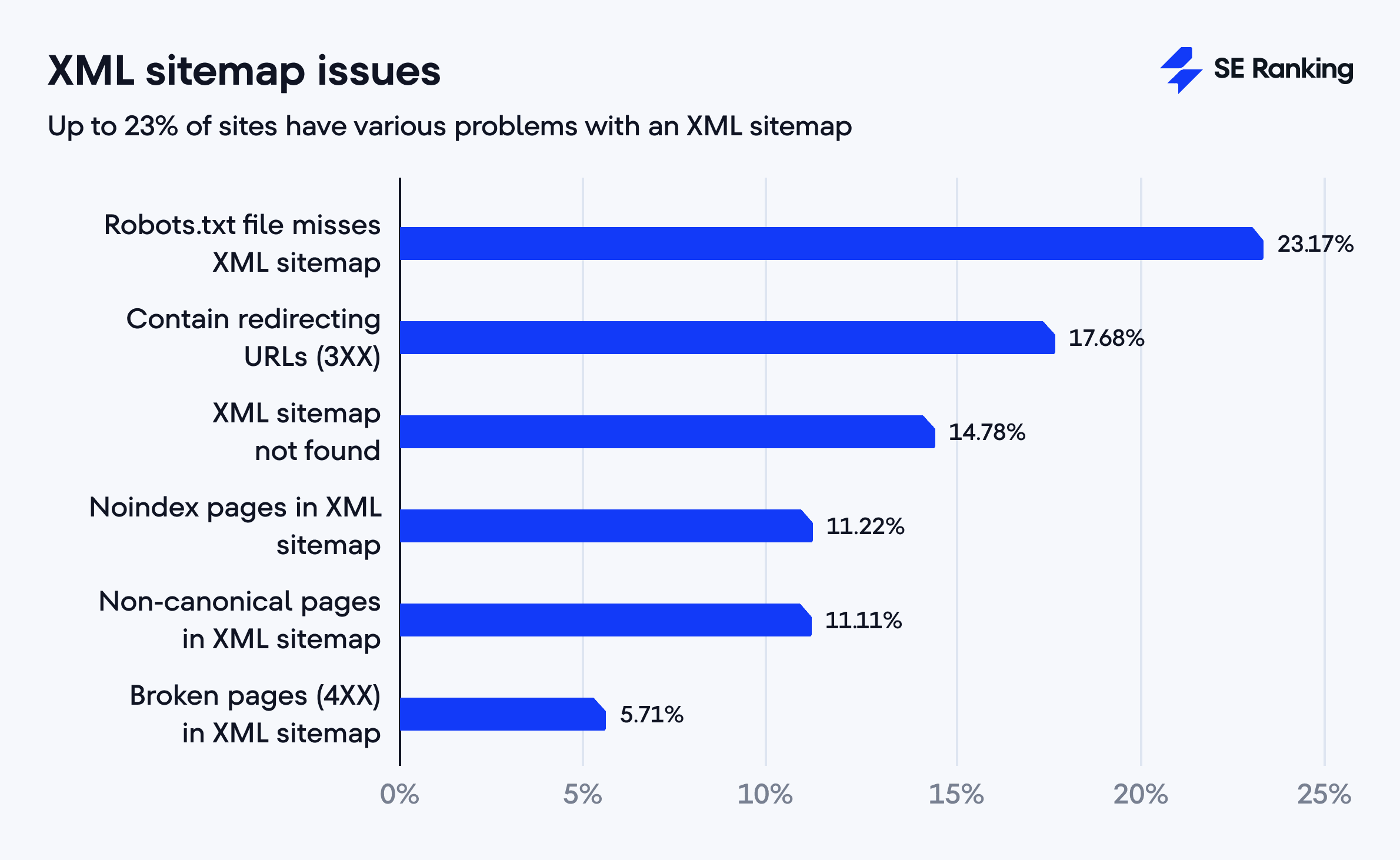

XML sitemaps:

23.17% are missing from robots.txt, 17.68% have redirects, 14.78% are missing entirely, 11.22% list noindex pages, 11.11% contain non-canonical URLs, and 5.71% include 4XX pages.

-

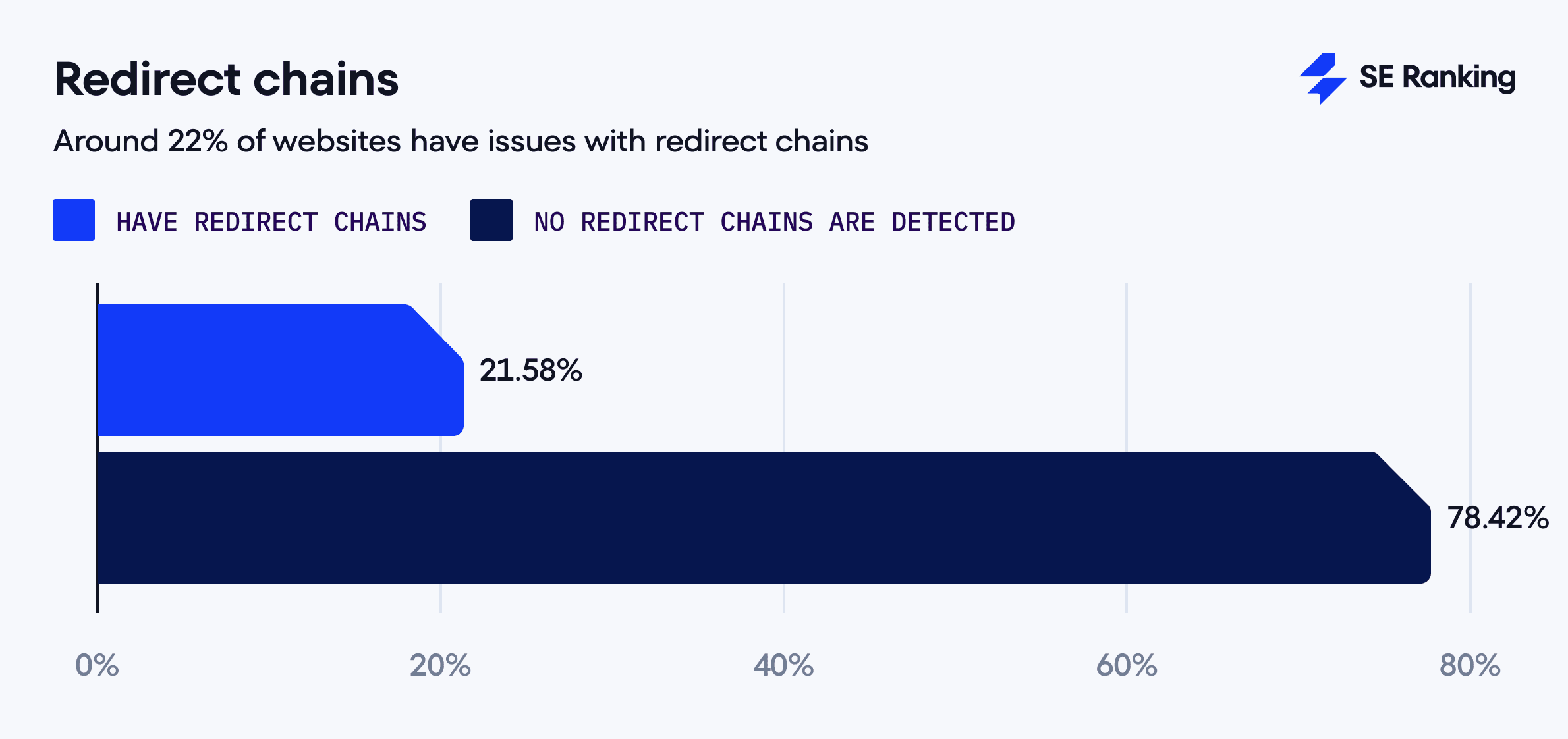

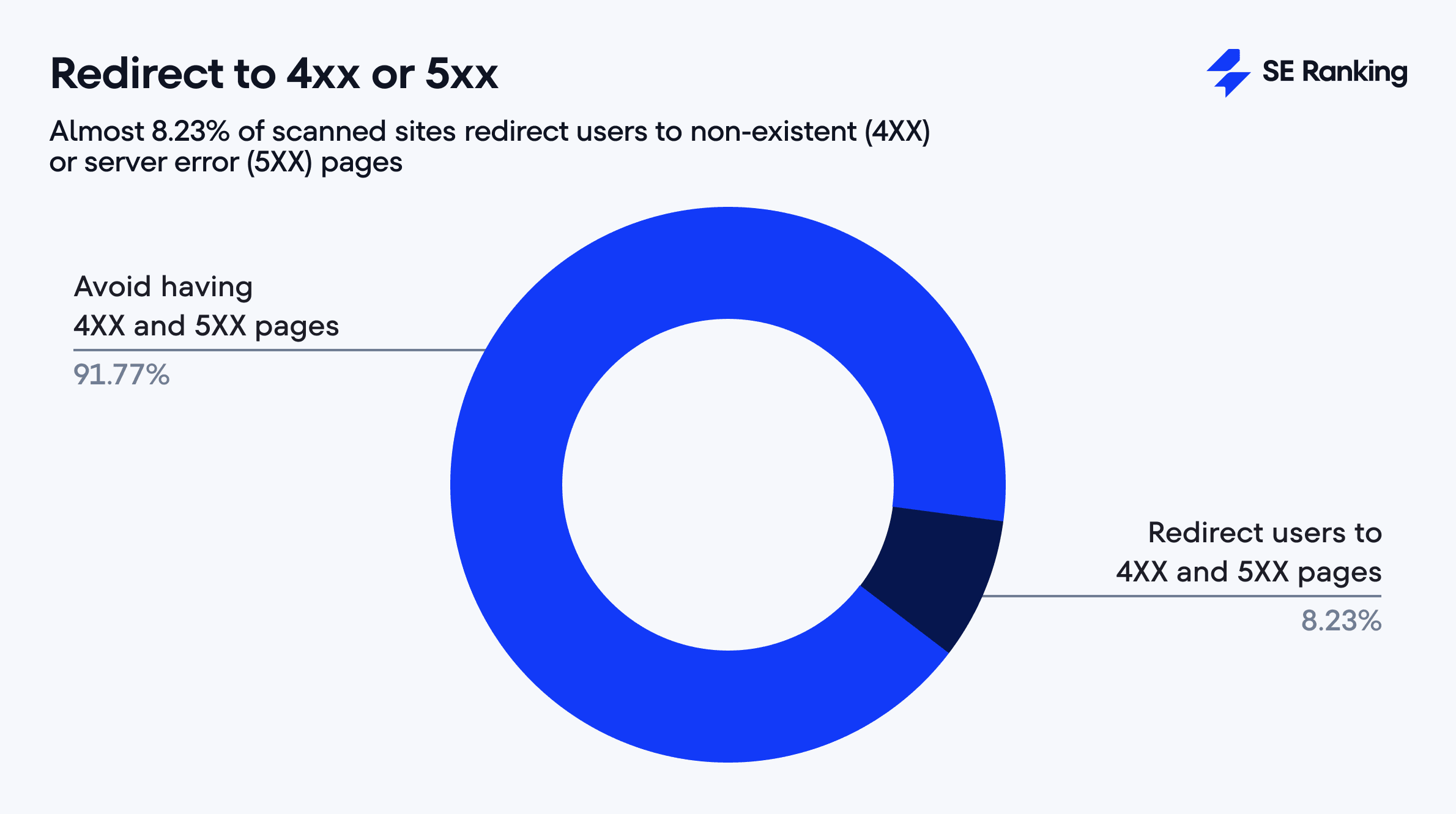

Redirect issues:

21.58% create chains wasting crawl budget, 8.23% lead to error pages.

-

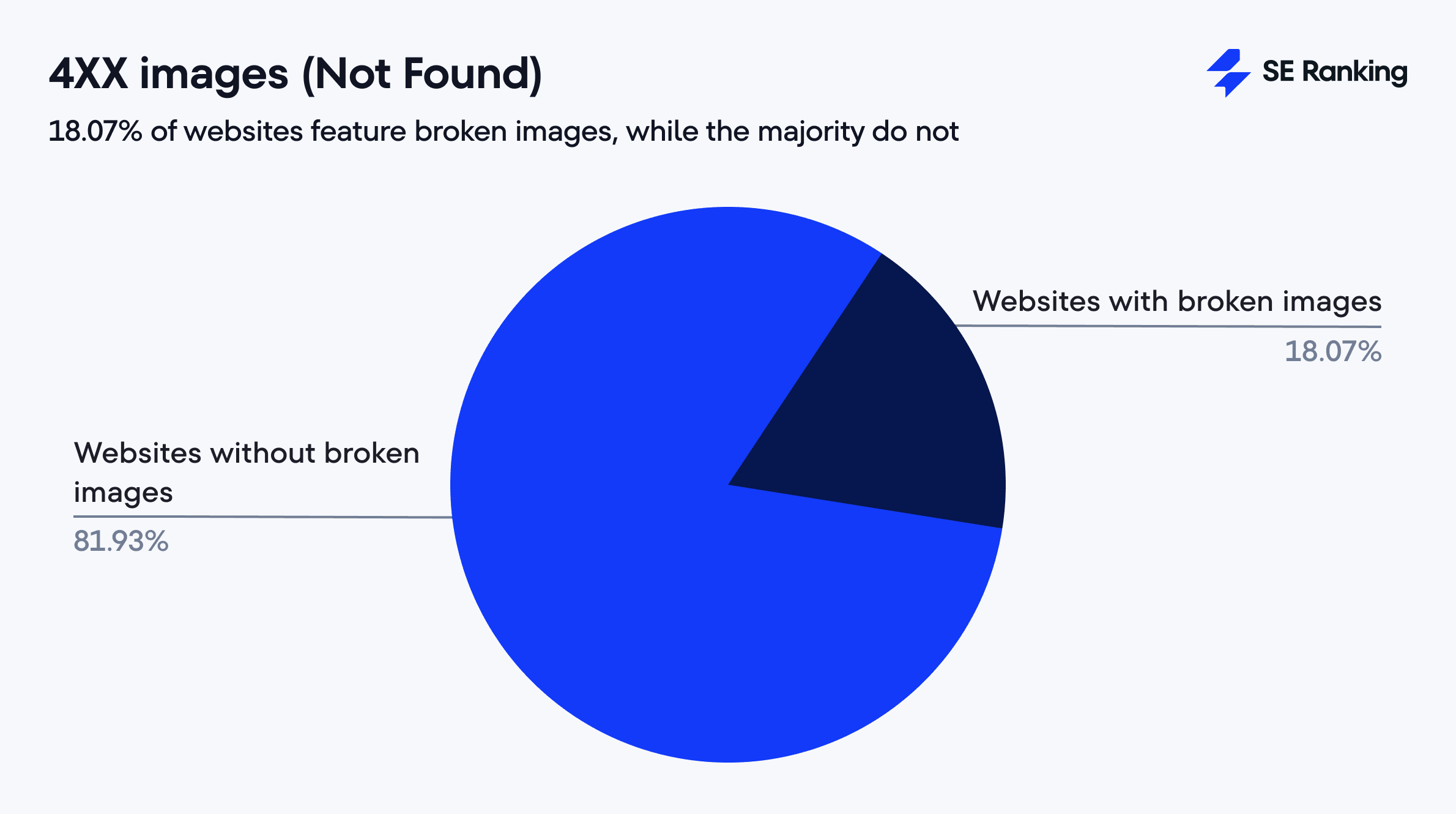

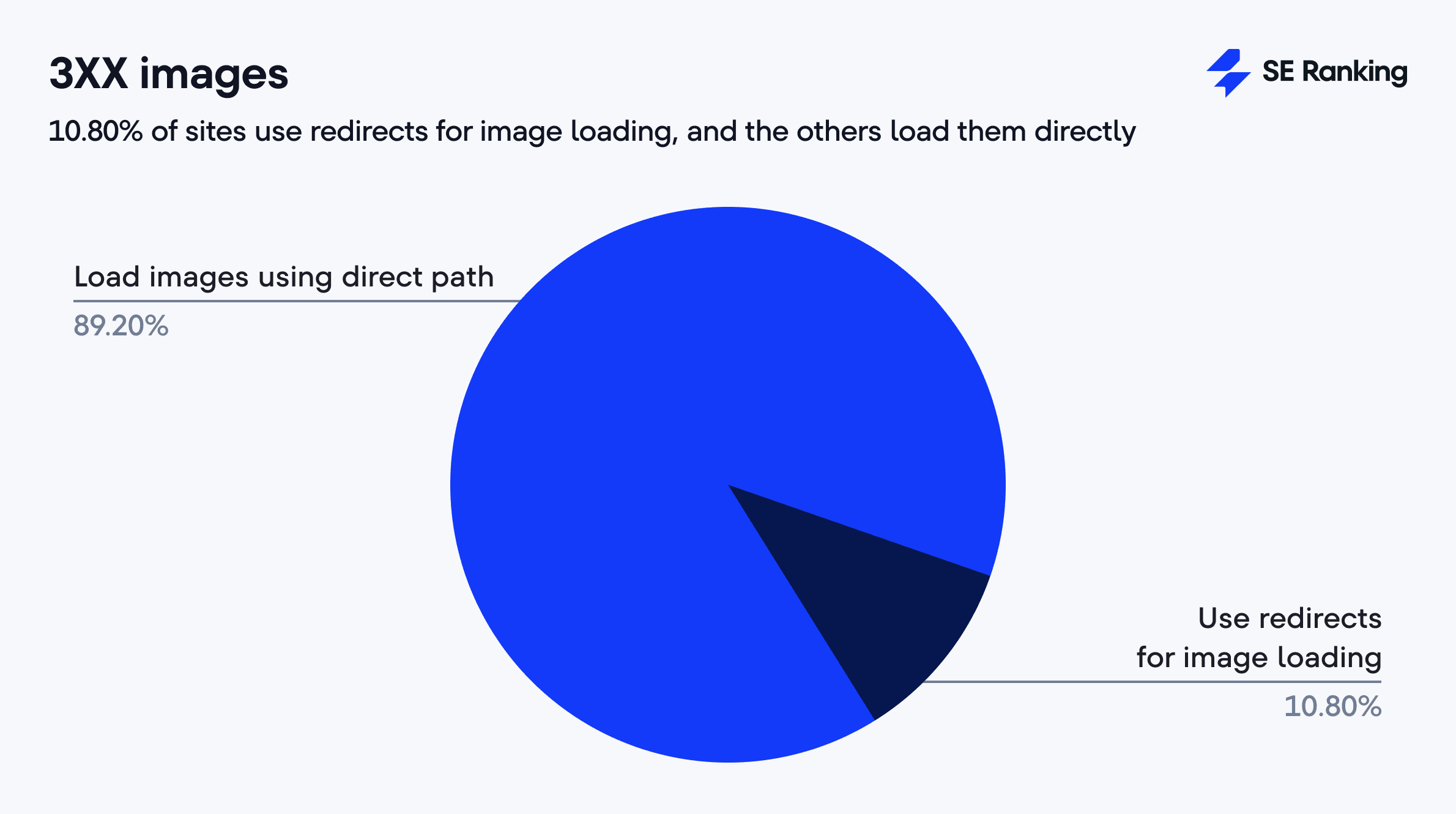

Images:

18.07% have images that return 4XX errors, 10.80% load images through redirects.

-

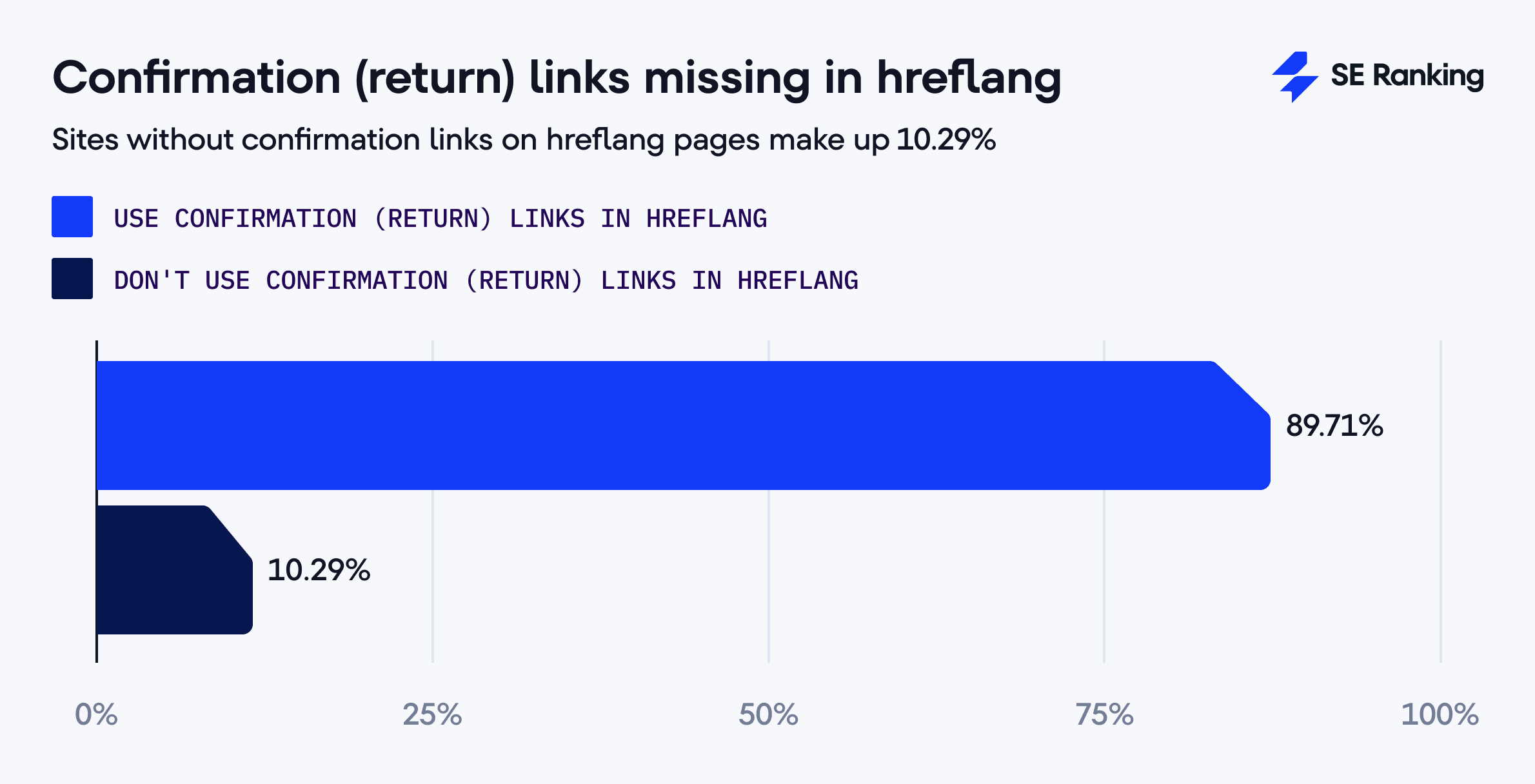

Localization issues:

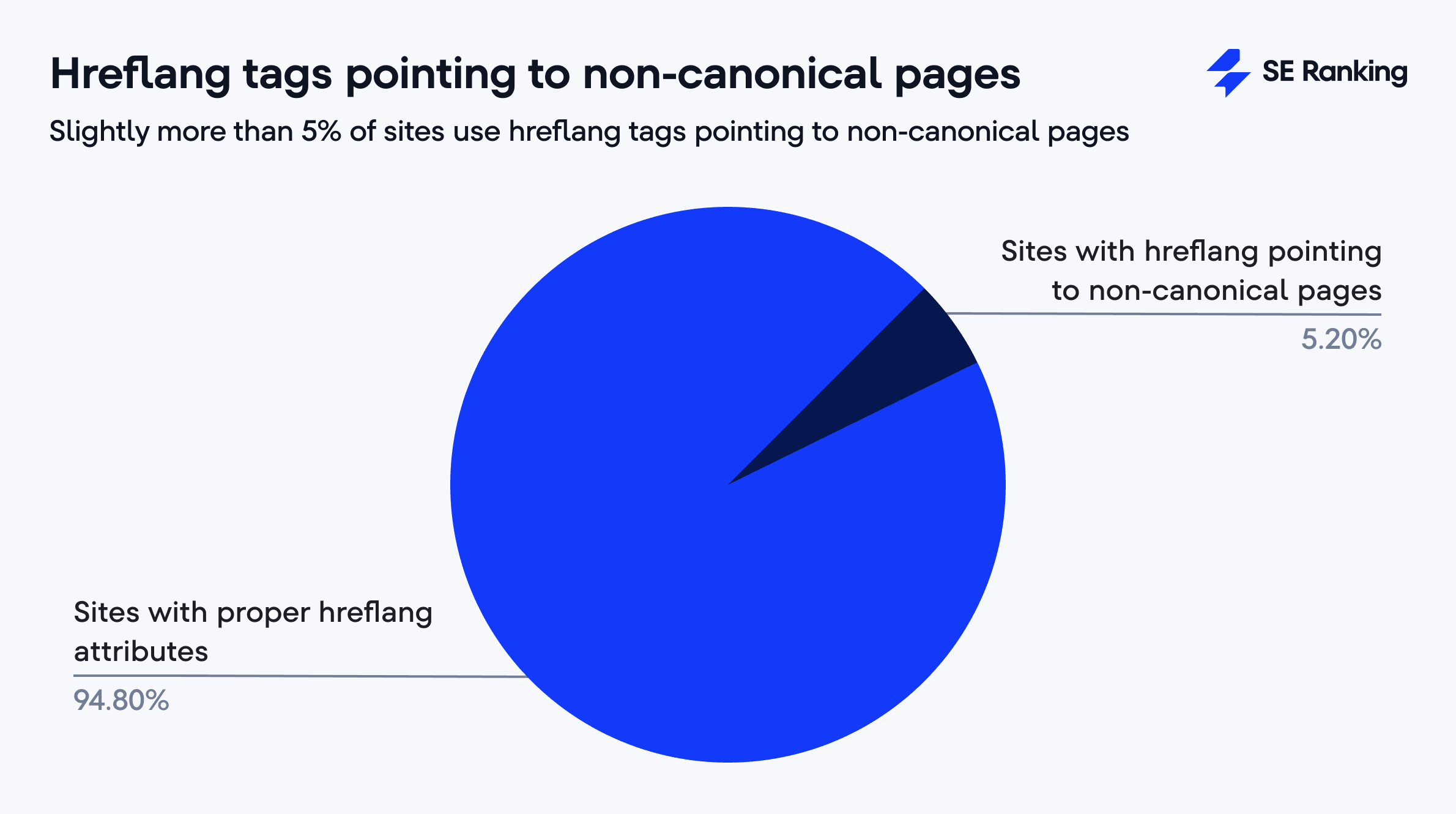

10.29% have missing return links, and 5.24% have hreflang tags pointing to non-canonicals.

-

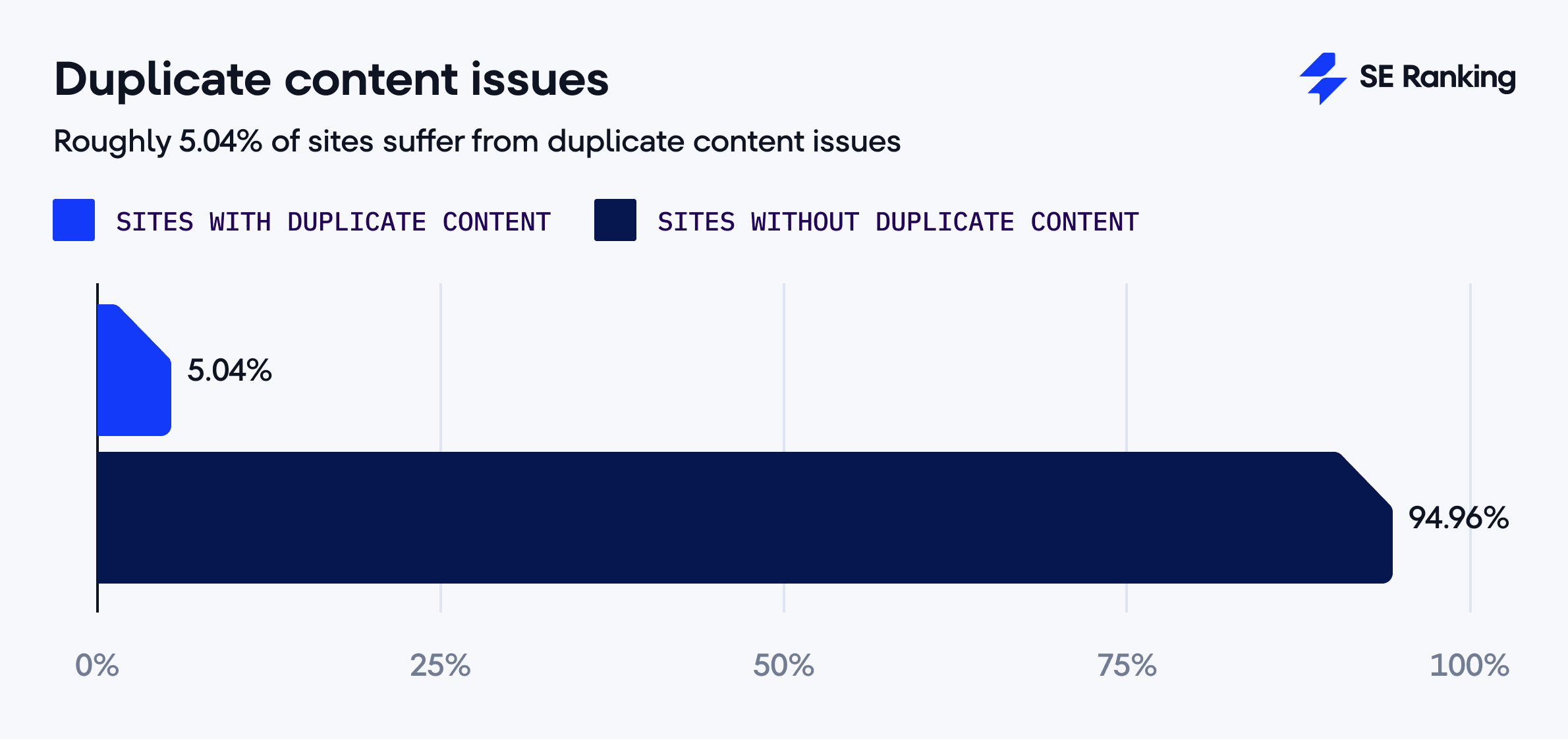

Duplicate content issues:

5.04% of sites show identical content across different pages.

Disclaimer: The percentages show the share of unique website reports we found each issue on. We arranged technical issues in descending order based on occurrence frequency and their severity across all audits. We grouped issues with similar solutions to help you see common to-do steps and how to fix them.

1. Missing alt text

The most common technical problem spotted during our site analysis relates to images. 74.43% of websites have images with missing alt attributes.

The alt text (alternative text) is an HTML attribute designed to explain the meaning of images to search robots and make them more accessible to users. It’s among the main image optimization elements, and helps search engines like Google better understand and rank your picture.

Alt attributes for images also come in handy when (for whatever reason) the picture doesn’t load up. They can also help individuals with impaired vision.

How to approach: Run website audits regularly to identify images with missing alt text. If you want to make the most out of your images, add helpful, informative, and contextual alt text to them. You can also use keywords but avoid keyword stuffing as it causes a negative experience for your users and signals spam to search bots.
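If you want a quick check between full audits, the idea can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not how any particular audit tool works; the names `MissingAltFinder` and `find_images_without_alt` are made up for this example. Note that an empty alt is sometimes intentional for purely decorative images, so treat the output as a list to review rather than a list of errors.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collects the src of every <img> with a missing or empty alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        # Flag images with no alt attribute at all, or only whitespace in it.
        if alt is None or not alt.strip():
            self.missing.append(attributes.get("src", ""))


def find_images_without_alt(html):
    """Return the src values of images that need a description."""
    finder = MissingAltFinder()
    finder.feed(html)
    return finder.missing
```

Feed it the HTML of a page and it returns the image paths that still need alt text.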

2. One or missing inbound internal links

7 out of 10 analyzed websites (69.32%) have pages with no inbound internal links. The problem goes even further: 67.11% of websites have pages with only one link connecting them to other pages.

This is a major issue because inbound internal links enable users and search bots to navigate your website. Links structure the website content hierarchy and help distribute link juice.

Some of your pages may not have internal links—for example, a promo landing page. This page might only exist for the duration of the sale and only enable access via a link posted on social media or a newsletter. Beyond exceptional cases like this, it doesn’t make sense from an SEO perspective to have isolated pages. Search engines can’t find them while scanning your website, and users can’t visit them when browsing your site.

How to approach: If you have isolated pages on your website, determine each page’s value. If it’s worth the effort, revise your website structure and figure out the best way to build relevant links to this page from your site’s other sections and pages. By ‘the best way,’ we mean the most convenient way for users and Google to reach the page.

3. External links to 3XX

68.54% of websites analyzed have links to other resources that redirect users to other pages (not the ones you initially linked out to). If this new page is thematically related to the old one, or the transition is just as logical, there’s no problem. But if the link leads visitors to a page that doesn’t contain the necessary information, it can negatively impact your site’s user experience and SEO.

How to approach: Get in the habit of checking your link structure regularly and determining if you need to replace or delete the link. Another option is to use SE Ranking’s Webpage Monitor tool to track these changes automatically. The tool monitors your external links and alerts you if anything changes, and if broken links need fixing.

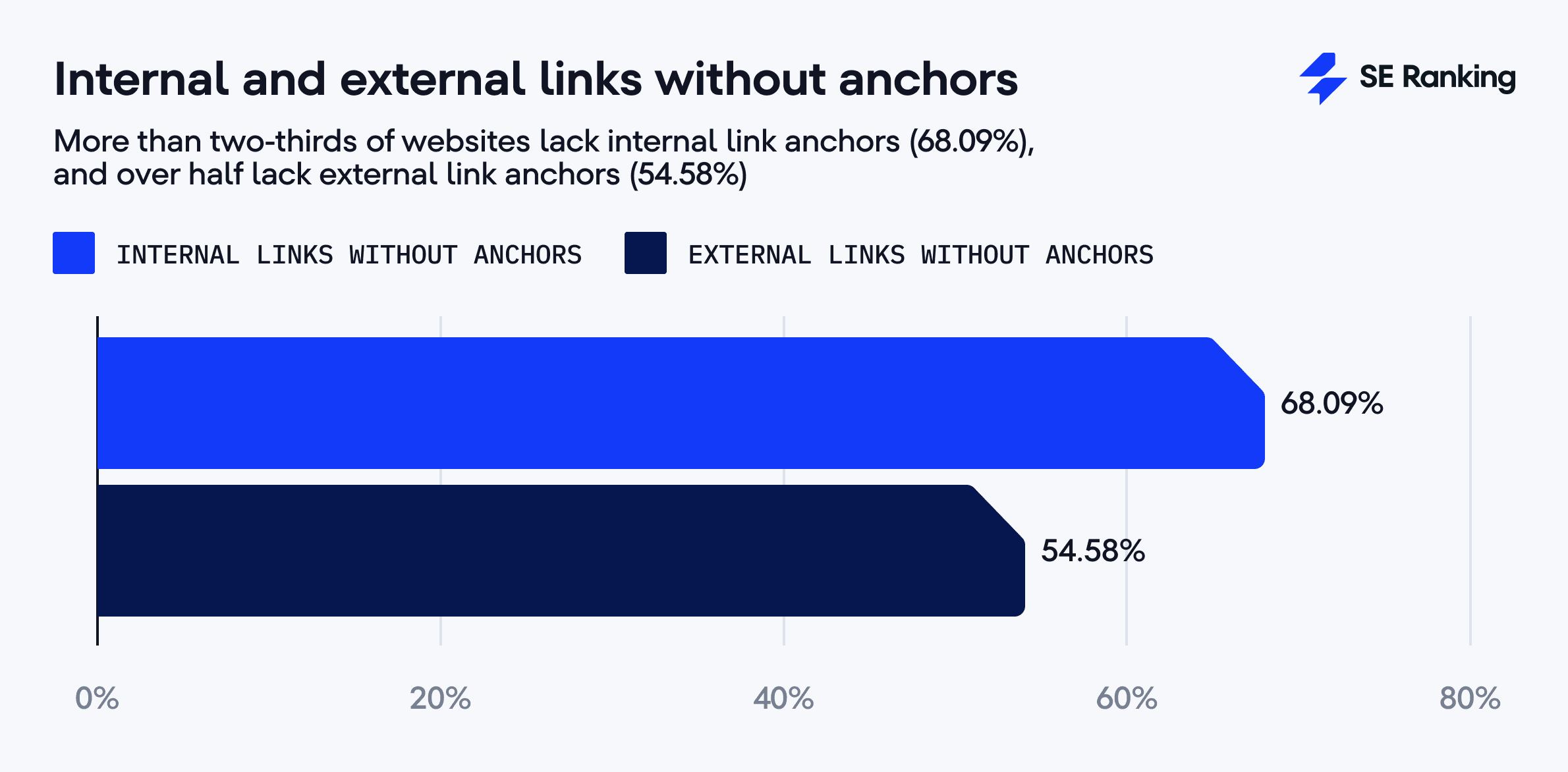

4. Internal and external links without anchors

Two more widespread issues include internal and external links without anchors attached. This happens to 68.09% and 54.58% of websites, respectively. It occurs when internal or external links have empty or “naked” anchors, meaning they display a raw URL. These links make the site harder to navigate while making it more difficult for search engines to understand what the page in the link is about. This hurts user experience and detracts from search engine optimization.

How to approach: Go through the links flagged in the report. Add clear, descriptive anchor texts where needed. Keep the text looking natural, concise, relevant to the target page, and straightforward. You can also use the Keyword Research Tool to find terms for your anchor texts.
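To illustrate what “empty” and “naked” anchors look like in practice, here is a rough Python sketch that scans a page’s HTML for both. It uses only the standard library, and `AnchorTextAuditor` and `audit_anchors` are hypothetical names chosen for this example. It only looks at visible text, so a link that wraps an image with good alt text would still be reported as empty; review the results by hand.

```python
from html.parser import HTMLParser


class AnchorTextAuditor(HTMLParser):
    """Flags <a href> links whose visible text is empty or just a raw URL."""

    def __init__(self):
        super().__init__()
        self.flagged = []   # (href, reason) pairs
        self._href = None   # href of the link currently being read
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag != "a" or self._href is None:
            return
        text = "".join(self._text).strip()
        if not text:
            self.flagged.append((self._href, "empty anchor"))
        elif text.startswith(("http://", "https://", "www.")):
            self.flagged.append((self._href, "naked URL"))
        self._href = None


def audit_anchors(html):
    """Return (href, reason) pairs for links that need descriptive anchor text."""
    auditor = AnchorTextAuditor()
    auditor.feed(html)
    return auditor.flagged
```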

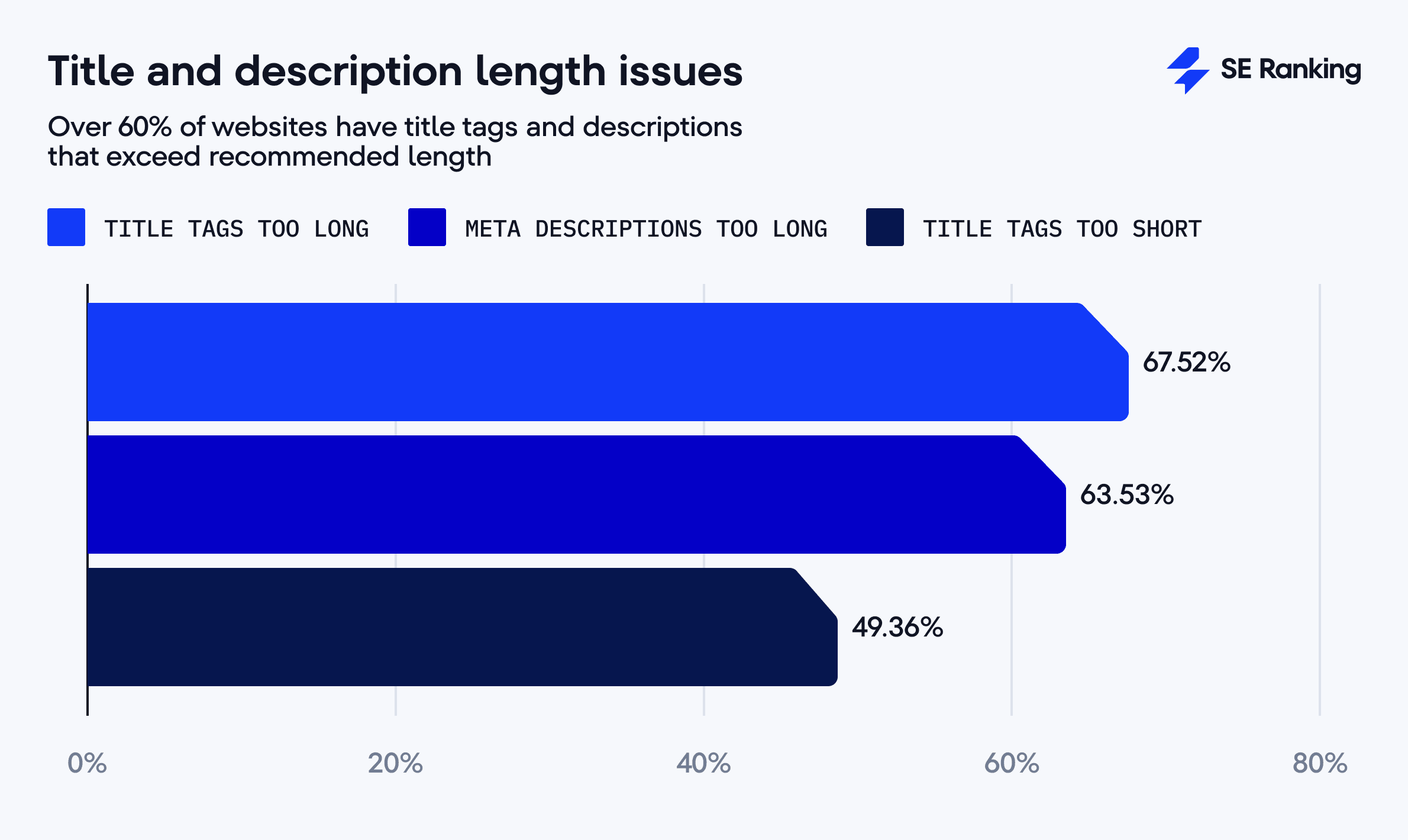

5. Title and description length issues

Meta tags are critical on-page SEO elements. They tell search engines and users important information about the web page. They also help search engines better understand how to present pages in SERPs.

One of the most common SEO issues involves meta title and description lengths. 67.52% of the websites we scanned have overly long title tags, 63.53% have lengthy descriptions, and 49.36% have titles that are too short.

This SEO mistake is two-sided:

- A too-short title can’t fully describe your page.

- A too-long title and description can get cut by the search engine in the snippet.

How to approach: The general recommendation is to keep your page titles between 20-65 characters and page descriptions under 158 characters. You can also use our Title and Meta Description Checker to review character limits and get a preview of their appearance in mobile or desktop SERPs.
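The length guideline above is easy to turn into a small check. The sketch below is only an illustration: the function name `check_meta_lengths` is invented for this example, and the limits are simply the character counts recommended in this section.

```python
TITLE_MIN, TITLE_MAX = 20, 65   # recommended title range, in characters
DESCRIPTION_MAX = 158           # recommended description limit, in characters


def check_meta_lengths(title, description):
    """Return a list of length problems for one page's title and description."""
    issues = []
    if len(title) < TITLE_MIN:
        issues.append("title too short")
    elif len(title) > TITLE_MAX:
        issues.append("title too long")
    if len(description) > DESCRIPTION_MAX:
        issues.append("description too long")
    return issues
```

An empty list means both values fall within the recommended limits.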

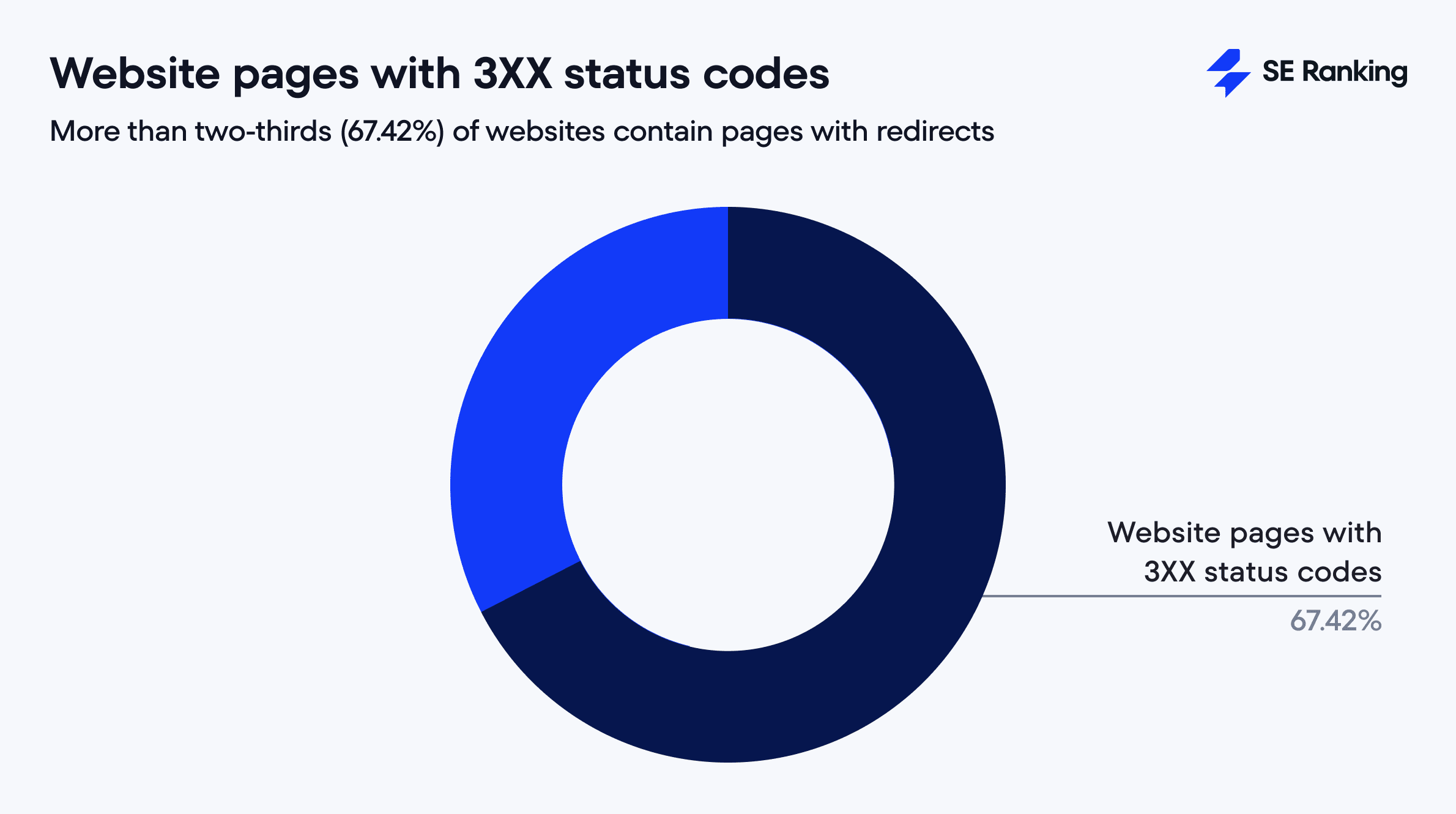

6. 3XX HTTP status code

Our analysis shows that 67.42% of the websites contained pages with 3XX status codes. The 3XX status code means pages have redirects. It’s okay to have redirects, but not when they are set up incorrectly or point to the wrong place.

How to approach: We recommend handling redirects thoughtfully. Use them when consolidating duplicate pages, removing content, and in similar scenarios. But try to prevent them from leading to broken pages or creating endless loops and redirect chains.

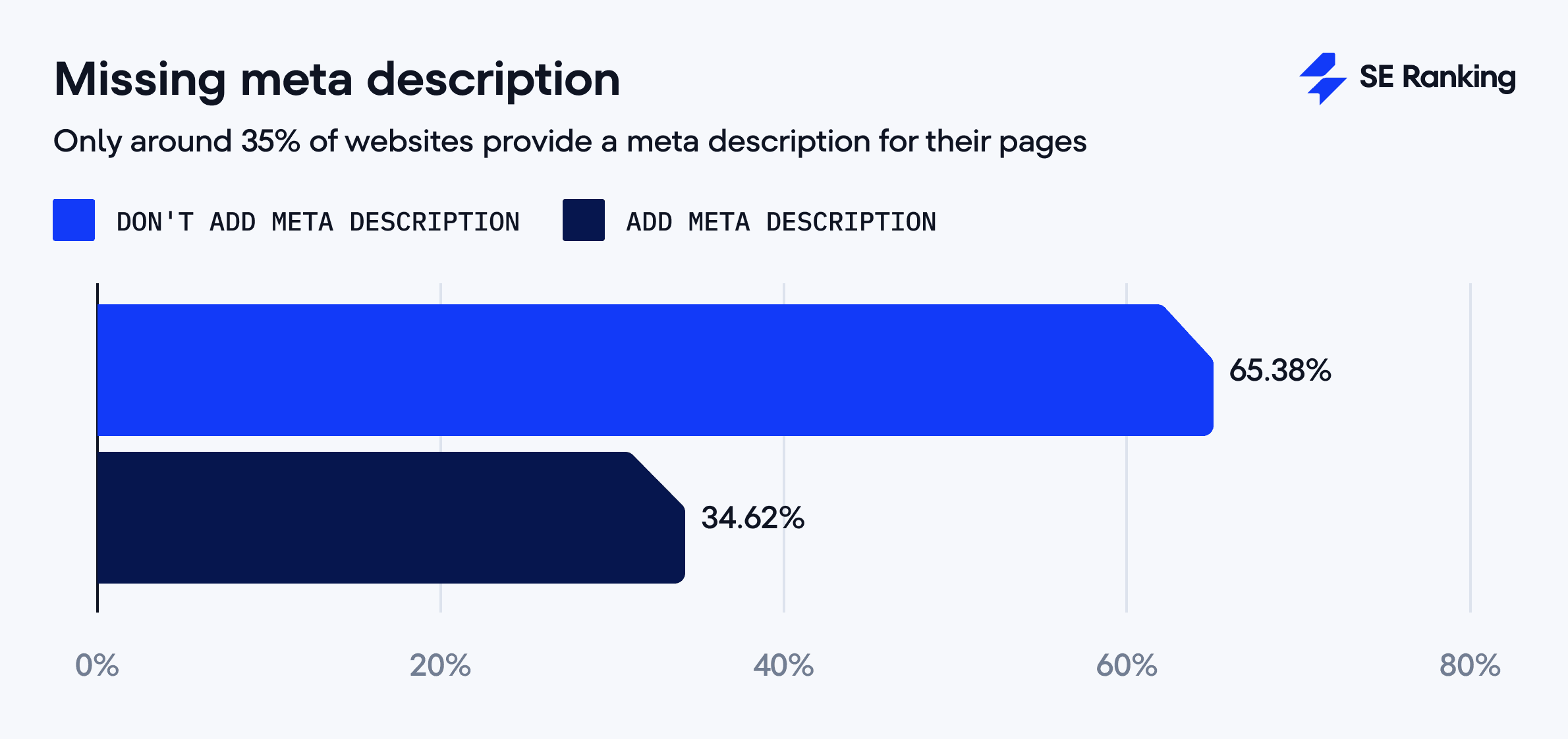

7. Missing description

This issue occurs on 65.38% of websites. But why is it so critical, given that Google doesn’t confirm or refute that meta descriptions affect search rankings?

The answer is that Google can still use them for search result snippets. If you don’t specify a page description, Google will use the available content on that page to generate a description.

How to approach: SE Ranking’s Website Audit tool is really useful here. Just go to the Issues Report and review the Meta Tags section. You’ll see the number of pages where a description should be added, and by clicking on that number, you’ll get the list of pages. Add unique descriptions to each page, explaining what your page is about to people and search engines.

8. Internal links to 3XX redirect pages

This problem, occurring in 63.87% of analyzed websites, is similar to its external links counterpart, but happens on your site. The negative effect for both cases is the same. If the destination page isn’t relevant, it hurts the user experience, which can be a bad signal for Google. It also wastes your crawl budget, which can prevent important new content from being crawled and reduce the budget for future crawls.

How to approach: Go through the internal links highlighted in the report and update them with correct, active URLs. Keeping your internal links up-to-date helps users and search engines navigate your site.

9. CSS file issues

The CSS file is responsible for the visual design of page elements. Unoptimized CSS files can slow page loading, hurting user experience and search engine rankings.

Here is how SEO issues are distributed in this category:

- CSS file too large: 57.72%

- Uncompressed CSS files: 48.66%

- CSS files not minified: 43.66%

- Uncached CSS files: 17.64%

How to approach: Compress and minify CSS files to reduce their size and improve page load time. Also enable caching to store CSS file copies. The next time the user visits that page, the browser will serve them the saved copy instead of sending additional server requests.
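Whether a CSS file is compressed and cacheable shows up in its HTTP response headers. As a rough sketch, the function below inspects a dictionary of headers that you have already fetched with the HTTP client of your choice. `audit_asset_headers` and `COMPRESSED_ENCODINGS` are illustrative names, and the check is deliberately simple: it treats any `Cache-Control` value other than `no-store` as cacheable.

```python
COMPRESSED_ENCODINGS = {"gzip", "br", "deflate", "zstd"}


def audit_asset_headers(headers):
    """Return delivery problems found in one asset's response headers."""
    # HTTP header names are case-insensitive, so normalise them first.
    normalized = {name.lower(): value.lower() for name, value in headers.items()}
    issues = []
    if normalized.get("content-encoding") not in COMPRESSED_ENCODINGS:
        issues.append("not compressed")
    cache_control = normalized.get("cache-control", "")
    if not cache_control or "no-store" in cache_control:
        issues.append("not cached")
    return issues
```

The same check applies to JavaScript files, since they are delivered the same way.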

10. H1 tag issues

HTML heading tags help people and search engines quickly understand page content. They outline the structure of the content and can affect page rankings.

The H1 tag is the top-level page heading. It briefly describes page content and helps readers determine if the page has what they need. Many related common issues can arise:

- Duplicate content in H1

57.37% of websites have pages with duplicate H1 tags. Like other duplicate items, repeat H1 headings make it more challenging for search engines to determine which site page to display in search results for search queries. Duplicate H1s can also cause several of your pages to compete for the same keyword. This results in page cannibalization, which makes duplicated H1 tags one of the most problematic SEO issues.

- Missing H1 tag

54.67% of websites have pages with no H1 tag. The H1 tag is the most important heading on the page and usually serves as the title for that content (don’t mix it up with the title tag!). Without it, the page is less reader-friendly and more difficult for Google crawlers to scan.

- Multiple H1 tags

54.52% of websites use multiple H1 tags on their pages. Given that Google’s John Mueller said you can use H1 tags on a page as often as you want, it is technically not an issue.

However, it’s still worth double-checking pages that have multiple H1 headings. Multiple H1s can do more good than harm for users, as long as they don’t destroy usability or look spammy.

How to approach: Apply the same approach as with meta tag errors. Run a website audit to identify pages with duplicate, missing, or multiple H1 headings and consider page structure improvements where needed.

11. Duplicate content in page titles and meta descriptions

Slightly more than half of the analyzed websites have duplicate title tags (53.69%) and duplicate descriptions (50.31%).

Having multiple pages with duplicate titles and descriptions can confuse search engines, making it difficult to determine which page is the primary option for search queries. These pages are less likely to rank well, so try to make their titles and descriptions unique.

How to approach: First, ensure there are no pages with duplicate intent. If there are, handle the duplicates accordingly. Then, update the remaining pages’ titles. The Issue report will help you identify pages with duplicate titles and descriptions. All you need to do is rephrase duplicate content using your target keywords. If you’re struggling, use the AI Writer feature in SE Ranking’s Content Marketing Module to generate new titles and descriptions from scratch. It can also generate different types of quality content and is a quick way to build SEO-friendly content.

12. Blocked by noindex

We found that 50.58% of sites block some of their pages from search indexing by adding the noindex directive to the page’s <head> section. Search bots will drop these pages and won’t display them in the SERPs. While it’s okay to block some pages from indexing (like pages with filtered results for ecommerce sites, pages with personal user data, or checkout pages), it’s not okay if it causes search engines to ignore your important pages. Of all the issues outlined in this article, indexing issues are the most critical.

How to approach: First, review all your noindexed pages to confirm each tag is being used intentionally. Make sure you didn’t accidentally block any pages you want to have appear in search results. It’s also crucial to check that noindexed pages aren’t blocked by robots.txt. A robots.txt block prevents search engines from seeing the noindex directive altogether, so if other sites link to these pages, they might still appear in search results.
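The robots.txt conflict described above can be checked with Python’s standard `urllib.robotparser` module. This is a minimal sketch, assuming you already have the robots.txt contents and a list of URLs you know carry a noindex directive; `hidden_noindex_pages` is a hypothetical helper name.

```python
from urllib.robotparser import RobotFileParser


def hidden_noindex_pages(robots_txt, noindexed_urls, user_agent="Googlebot"):
    """Return noindexed URLs that robots.txt also blocks from crawling.

    For these URLs the crawler never fetches the page, so it never
    sees the noindex directive.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in noindexed_urls if not parser.can_fetch(user_agent, url)]
```

Any URL this returns should either be unblocked in robots.txt (so the noindex can be read) or have its noindex reconsidered.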

13. JavaScript file issues

JavaScript adds dynamism and interactivity to your website. JavaScript file-related issues are almost the same as with CSS–they are “heavy” and affect page loading.

Here are the issues you should pay attention to and their occurrence rate:

- Not minified JavaScript: 50.21%

- Not compressed JavaScript: 29.82%

- Not cached JavaScript: 27.07%

- Too many JavaScript files: 19.69%

Another SEO mistake that we didn’t observe in the CSS category is using too many JavaScript files. Since the browser sends a separate request for each of these files, loading the page can take longer. The consequences of this issue are obvious: pages with poor UX, higher bounce rates, and possibly lower rankings.

How to approach: Since JavaScript files can be just as heavy as CSS files, you should compress and minify them. Also, if you have a JS-based website, enable JS (client) rendering in SE Ranking’s Website Audit tool. This can help you audit JS websites effectively and capture any related issues.

14. External links to 4XX

43.40% of analyzed sites have broken links to other resources. When following a link, the user expects to see the next page but instead lands on a non-existent page. This is a poor user experience.

How to approach: Review all external links flagged in your audit and either remove or replace broken ones. Every outbound link should lead to a live page (returning a 200 OK response code).

15. Nofollow external and internal links

Our analysis shows that nofollow attributes are present in both external (detected in 36.87% of websites) and internal (20.74%) links. For external links, using nofollow prevents link juice from passing to other sites. This is sometimes intentional but generally misapplied. But the larger issue here is with internal nofollow links, which can disrupt your site’s natural link flow and create indexing challenges.

How to approach: For internal links, only use nofollow attributes when necessary. To control how Googlebot crawls your website, use the Disallow rule in the robots.txt file instead. For external links, apply nofollow in specific cases. Use precise attributes like rel="sponsored" for paid links or rel="ugc" for user-generated content.
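As an illustration of how internal nofollow links can be picked out, the sketch below takes a page URL plus a list of `(href, rel)` pairs already extracted from that page. The function name `internal_nofollow_links` is invented for this example, and “internal” here simply means “same host as the page”, which is an assumption that may not fit sites spread across several subdomains.

```python
from urllib.parse import urljoin, urlsplit


def internal_nofollow_links(page_url, links):
    """Return absolute URLs of same-host links that carry rel="nofollow".

    `links` is a list of (href, rel) pairs; rel may be None when the
    attribute is absent.
    """
    site_host = urlsplit(page_url).netloc.lower()
    flagged = []
    for href, rel in links:
        absolute = urljoin(page_url, href)  # resolve relative hrefs
        is_internal = urlsplit(absolute).netloc.lower() == site_host
        rel_values = (rel or "").lower().split()
        if is_internal and "nofollow" in rel_values:
            flagged.append(absolute)
    return flagged
```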

16. Image is too big

Images are often too resource-heavy, which can cause website speed issues that hurt your SEO and UX.

Our inspection showed that this problem occurs with 36.26% of websites.

The image file size can affect page speed. The chain of logic here is simple: the bigger the size, the longer it takes to load, the longer the user has to wait, the worse the user experience is, and the lower the page’s position in search results.

How to approach: One way to reduce the image file size is to compress it while maintaining the image’s quality. The ideal compression level depends on the image’s format and dimensions, but you should keep files under 100 KB whenever possible.

17. 4XX HTTP status codes

More than a third of the analyzed websites (35.73%) have pages with a 4XX response.

In most cases, the 4XX response is a 404 (Not Found) error. Having pages with the 404 error isn’t that terrible in itself. However, you should take care of every internal link to such pages, because linking to dead pages provides a poor user experience. In addition, it drains your crawl budget.

How to approach: You can delete internal links to 404 pages and/or set up a 301 redirect. You should also get rid of any backlinks to them.

18. Slow page speed

34.54% of websites have pages that load slowly. These websites are likely to notice negative behavioral signals. For example, users might go to other sites instead of waiting for the page to load.

Page load speed is a Google ranking factor. It’s a staple of Core Web Vitals.

How to approach: Optimize your HTML code to prevent slow page speeds. If your pages still load slowly after optimizing their code, check your server performance. You may need to upgrade to a faster web server.

19. Largest Contentful Paint

The Largest Contentful Paint (LCP) measures the primary content’s loading speed. It looks at how fast the largest element in the viewport (an image, text block, video, etc.) loads and becomes visible to the user. LCP is measured in real-world conditions based on data from the Chromium browser and in a lab environment based on data from the Lighthouse report.

Although you should generally strive to load the largest elements in under 2.5 seconds, our research shows that it’s not always a realistic goal for some websites.

- 26.89% of websites have LCP higher than 2.5 seconds when measured in a lab environment.

- 9.46% of websites have LCP higher than 2.5 seconds when measured in real-world conditions.

How to approach: To improve performance, use preloading on pages with static content, optimize the top-of-page code, reduce image file sizes, and get rid of render-blocking JavaScript and CSS.

20. XML sitemap issues

An XML sitemap is a file with a list of a website’s URLs. It helps search engines like Google spot the most important pages on your website, even isolated pages, or pages without external links.

And although it plays a vital role in crawling, some XML-related issues still occur.

- 23.17% of websites don’t include a link to their XML sitemap in the robots.txt file. However, including one is ideal because it can help Google determine your sitemap’s location.

- 17.68% of websites have sitemaps containing redirecting URLs (3XX). This wastes the crawl budget and forces search engines to follow redirects to find the actual content.

- 14.78% of websites have no XML sitemap. This can hinder crawling effectiveness. Without a sitemap, it can be more difficult for search bots to find and access isolated pages of the site.

- 11.22% of websites have pages that feature the noindex meta tag in their XML sitemaps. This is confusing to Google. Your XML sitemap should only include URLs that you want search engines to crawl and index.

- 11.11% of websites show non-canonical pages in XML sitemaps. Your sitemap should only include canonical URLs to avoid confusing search engines about which version to index.

- 5.71% of websites contain broken pages (4XX) in XML sitemaps. Including non-existent pages misleads search engines and wastes the crawl budget.

How to approach:

- Create an XML sitemap and add it to your website. Then, send the link with its location to search engines. You can also create separate XML sitemaps for URLs, images, videos, news, and mobile content. Add your XML sitemap file link to the robots.txt file.

- Replace redirecting URLs in the XML sitemap with their destination URLs. If the destination URL is already listed, remove the URLs with 3XX redirects from the sitemap.

- Remove pages with the noindex meta tag from your XML sitemap, or remove the noindex tag from these pages. The decision depends on your goals.

- Make sure your XML sitemap only contains canonical URLs.

- Take out any URLs with 4xx errors from your XML sitemap. Ensure it only includes URLs that return a 200 OK response.
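The cleanup steps above can be sketched as a small script. It assumes you already have crawl data for every URL in the sitemap; the `crawl_results` structure and the function names `sitemap_urls` and `urls_to_fix` are hypothetical and exist only for this example. The XML namespace is the standard one from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Extract every <loc> URL from a standard XML sitemap string."""
    root = ET.fromstring(sitemap_xml)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]


def urls_to_fix(sitemap_xml, crawl_results):
    """Map each problematic sitemap URL to the reason it should be fixed.

    crawl_results maps URL -> (status_code, is_noindex, canonical_url).
    """
    problems = {}
    for url in sitemap_urls(sitemap_xml):
        status, is_noindex, canonical = crawl_results[url]
        if status != 200:
            problems[url] = f"returns {status}"
        elif is_noindex:
            problems[url] = "noindex"
        elif canonical != url:
            problems[url] = "non-canonical"
    return problems
```

Every URL left out of the result returns 200 OK, is indexable, and is its own canonical, which is what the sitemap should contain.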

21. Redirect chain

Hardly any site can operate without redirects, but if they aren’t implemented correctly, they can hurt your SEO. Redirect chains, which our tool detected in 21.58% of the websites in our study (i.e., redirects from one page to the second, third, fourth, and so on) can waste your crawl budget and create unnecessary load on the server. Remember that the longer the chain, the longer users must wait for the page to load.

How to approach: Remove unnecessary steps in the chain by directly redirecting each URL in the chain to the final target.
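To make the fix concrete, here is a minimal sketch that flattens a chain. It assumes your redirects are available as a simple source-to-target mapping (for example, exported from your server config); `resolve_final_target` and `flatten_redirects` are illustrative names, and the `max_hops` limit is an arbitrary safety value.

```python
def resolve_final_target(url, redirects, max_hops=10):
    """Follow `url` through the redirect map and return (final_url, hops)."""
    seen = {url}
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen or hops > max_hops:
            raise ValueError("redirect loop or overly long chain")
        seen.add(url)
    return url, hops


def flatten_redirects(redirects):
    """Point every source URL straight at its final destination."""
    return {source: resolve_final_target(source, redirects)[0] for source in redirects}
```

With a chain such as `/a` to `/b` to `/c` to `/d`, the flattened map sends `/a`, `/b`, and `/c` directly to `/d`, so no visitor or crawler needs more than one hop.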

22. Cumulative Layout Shift

The Cumulative Layout Shift (CLS) measures how visually stable your web page is while it loads. It scores how much visible elements shift unexpectedly, for example when an image loads later than other page elements and pushes them out of place. It’s also measured in real-world and lab environments. The goal here is to maintain a CLS of 0.1 or less.

Our research shows that:

- 18.27% of websites exceed this value in a lab environment.

- 9.03% of websites exceed this value in real-world conditions.

How to approach: One possible solution to this issue is to set size attributes (width and height) for images and videos. This helps the browser reserve space for them before they load, so the layout doesn’t shift when they appear.

23. 4XX images (Not Found)

Pages aren’t the only elements that can return 4XX HTTP errors. Images and other files can return them too. We noticed that 18.07% of websites contain broken images. And beyond the fact that broken images hurt user experience, Google can’t index them.

How to approach: Replace the URLs of all the broken images with working ones, or remove the links to the broken images from your website.

24. Time to Interactive (TTI)

Our analysis shows that 17.65% of sites have common issues around TTI. This metric measures the time it takes for a page to become fully interactive after loading. Long TTI frustrates users who try to interact with elements that aren’t ready to respond.

How to approach: Optimize your JavaScript code by removing unnecessary JavaScript libraries and splitting code into smaller bundles. Load only the portions necessary first.

25. 3XX images

We found that 10.80% of websites use redirects to load their images. This means browsers and search engines have to send an additional HTTP request to download the image instead of fetching it directly. Every redirect adds loading time, and delays add up quickly when you have tons of files like this on your website.

How to approach: Specify the direct path to the image files instead of redirects. If you’re using images from external sources and can’t avoid redirects, make sure the image served at the new URL is still relevant to your content. SE Ranking’s Website Audit spots these image redirects so you can fix them and improve your site speed.

26. Confirmation (return) links missing in hreflang

Hreflang tags are important if you’re running a multilingual website. They tell search engines which language version to show to visitors from particular regions. Our analysis shows 10.29% of sites neglecting to use confirmation (return) links on hreflang pages.

When using hreflang to indicate a page’s language or regional versions, all versions must link back to each other. So if Page A links to Page B, Page B must link back to Page A. Otherwise, search engines may ignore or misinterpret these attributes, and hurt your local SEO.

How to approach: Use the same set of URLs with rel="alternate" and hreflang values on all language or regional versions of your pages. This keeps each version properly linked back to all other versions.
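The “Page A links to Page B, so Page B must link back” rule is straightforward to verify once you have each page’s hreflang targets. The sketch below assumes that data is already collected in a dictionary; `missing_return_links` is a hypothetical name used only here.

```python
def missing_return_links(hreflang_map):
    """Find hreflang links that are not confirmed by a return link.

    hreflang_map maps each page URL to the set of alternate URLs it
    declares. Returns (page, alternate) pairs where `page` points to
    `alternate` but `alternate` does not point back to `page`.
    """
    missing = []
    for page, alternates in hreflang_map.items():
        for alternate in sorted(alternates):
            if alternate == page:
                continue  # a self-reference needs no confirmation
            if page not in hreflang_map.get(alternate, set()):
                missing.append((page, alternate))
    return missing
```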

27. Redirect to 4xx or 5xx

8.23% of scanned sites redirect users to pages that don’t exist (4XX) or have server errors (5XX). This means leading visitors to a dead end and causing search engines to hit a wall. This prevents search engines from crawling them and frustrates users, making them more likely to leave your site.

How to approach: For 4XX errors (like 404s), update the target redirect page address so that it points to relevant pages. If you’re seeing 5XX server errors, check your server logs. These errors might be temporary due to maintenance, but if they persist, you’ll need to identify and fix the underlying server problem.

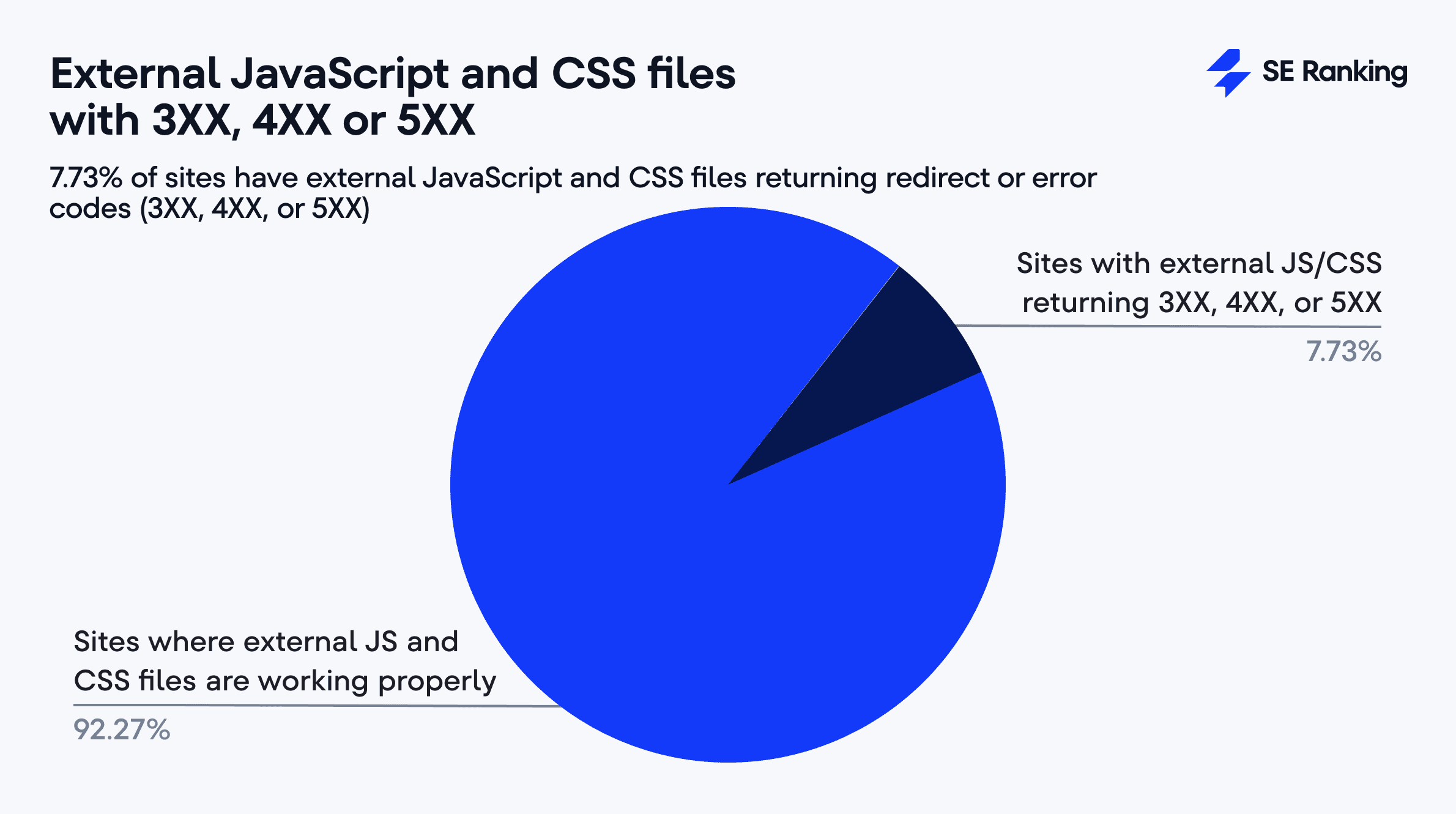

28. External JavaScript and CSS files with 3XX, 4XX or 5XX

We found that 7.73% of sites have external JavaScript and CSS files that return redirect or error codes (3XX, 4XX, or 5XX). While this might seem like a minor technical issue, broken JS code on your website can lower its rankings. This is because it results in improperly displayed pages that search engines can’t index.

How to approach: Check all external JavaScript and CSS files to ensure they’re loading correctly. Use SE Ranking’s Website Audit to identify problematic external JS and CSS files and either fix their paths or host the files directly on your server for better control.

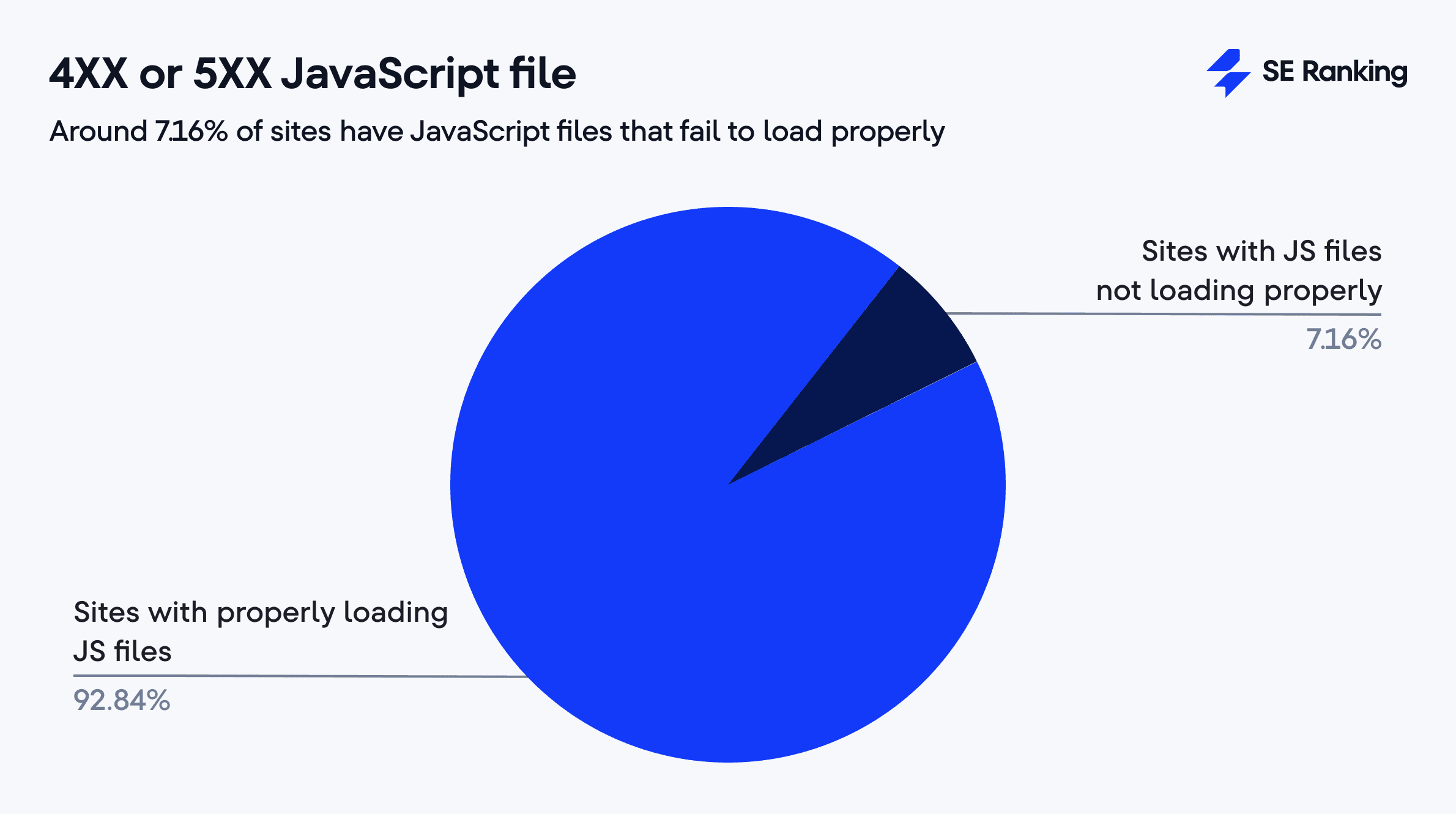

29. 4XX or 5XX JavaScript file

About 7.16% of sites have improperly loaded JavaScript files. When JavaScript breaks, your pages don’t respond properly to user actions, resulting in buttons that don’t click and forms that don’t submit. As a result, users can’t fully interact with the page. Search engines also struggle to understand these pages.

How to approach: Verify that the paths to your JavaScript files are correct, and ensure your server is responding as expected.

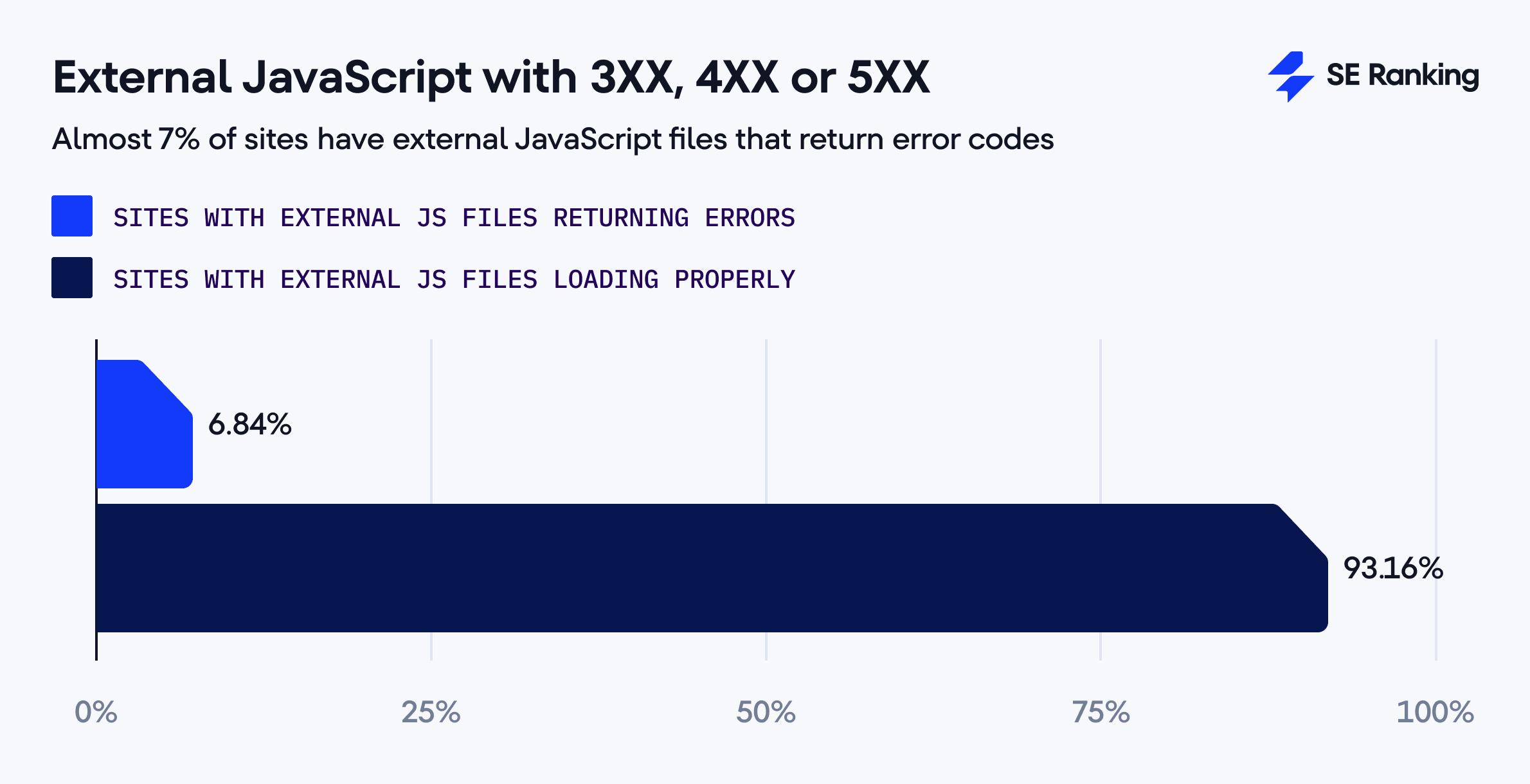

30. External JavaScript with 3XX, 4XX or 5XX

About 6.84% of sites have external JavaScript files that return redirect or error codes, even though these files must load correctly for the page to function. Broken JS code on your pages hurts user experience and tanks your rankings. It causes your pages to appear improperly in the browser. Not only that, search engines still index them even if important parts of content are missing.

How to approach: Verify all external JavaScript files are accessible and return proper response codes.

31. Missing title tag

A rare but still existing issue (5.93%) is the full exclusion of the title tag. This prevents you from being able to tell search engines and users what your page is about. Google will try to solve this issue by using your page content to create its own title. And it may not always match your goals or your keywords.

How to approach: Add unique, relevant titles to your pages based on your target keywords. You can identify these pages with the help of website audit tools. After that you can write titles yourself or use content tools.

32. Hreflang to non-canonical

One in twenty sites (5.20%) risk confusing search engines by pointing the site’s hreflang tags to non-canonical pages. The set of rel="alternate" hreflang="x" values in these instances tells search engines “show this local version of the page,” but the rel="canonical" attribute says “another page is more important than this one”. This confuses search engines about which instruction to follow.

How to approach: Send clear signals to search engines: change your hreflang attributes by pointing the URL to canonical (main) versions of your pages. If you find conflicts, you can either update the hreflang so that it points to the canonical version, or change the canonical attribute if the URL that the hreflang attribute points to is canonical. Both the hreflang and rel=”canonical” attributes should agree on the primary version. If the page doesn’t have alternative versions, point the canonical attribute to the page itself.
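A conflict of this kind can be detected by comparing each hreflang target with the canonical URL declared on that target page. The following is a rough sketch under the assumption that both pieces of data have already been crawled; `hreflang_canonical_conflicts` is an invented name, and pages with no canonical tag are treated as self-canonical.

```python
def hreflang_canonical_conflicts(hreflang_targets, canonicals):
    """Return hreflang targets whose declared canonical is a different URL.

    hreflang_targets is an iterable of URLs referenced in hreflang tags;
    canonicals maps a URL to the canonical URL declared on that page.
    """
    conflicts = {}
    for target in hreflang_targets:
        canonical = canonicals.get(target, target)  # no tag: self-canonical
        if canonical != target:
            conflicts[target] = canonical
    return conflicts
```

Each entry in the result shows an hreflang target on the left and the URL the two signals should agree on (one way or the other) on the right.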

33. Duplicate content

About 5.04% of sites have duplicate content issues. Though the number may appear low, this is a serious issue. When you have the same content on different pages, search engines can’t figure out which version to index and display in search results. It causes your pages to compete with each other, which leads to cannibalization issues.

How to approach:

- Set up 301 redirects to send users from duplicate pages to the original version.

- Add rel=canonical tags to tell search engines which page is the preferred version.

- Use noindex, nofollow tags to keep duplicate pages out of the index and stop search engines from following their links, or use noindex, follow tags to keep them out of the index while still allowing their links to be followed.

- Make content unique by rewriting duplicate pages or adding fresh information to them.

- Check for technical issues that may cause unintended duplication (URL parameters, pagination, etc.)
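Before choosing one of the fixes above, you need to know which pages are duplicates. One simple way to find exact duplicates is to hash each page’s normalized text and group matching hashes, as sketched below. The names `content_fingerprint` and `find_duplicate_groups` are made up for this example, and the approach only catches identical text (ignoring case and whitespace), not near-duplicates.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text):
    """Hash page text after collapsing whitespace and lowercasing."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicate_groups(pages):
    """Group URLs whose body text is identical.

    pages maps URL -> extracted body text. Returns a list of URL lists,
    one per group containing more than one page.
    """
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [urls for urls in groups.values() if len(urls) > 1]
```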

Other technical SEO issues to avoid on your website

After solving the SEO issues described above, your chances of reaching the top of the SERP will be much higher.

Fixing the following technical SEO issues, which appeared in around 15.37% of scanned reports, will further strengthen your site’s performance.

302, 303, 307 temporary redirects

Temporary redirects send your users and search bots to different pages. These redirects tell users (and search engines) that the pages they are trying to get to are temporarily unavailable. They can’t transfer link juice from the old page to the new one. Given their temporary nature, it’s best to avoid using them for extended periods.

Missing redirect between www and non-www

Many websites are reachable at both the www and non-www versions of their address, which means two versions of the same page exist. This can lead to duplicate content problems that lower page rankings. It’s better to set up redirects to the main version.
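The redirect itself is normally configured on the web server, but the underlying rule is simple enough to sketch in Python. In this illustration, `preferred_url` and `needs_redirect` are hypothetical helpers, and the choice of the non-www version as the default is just an assumption; either version works as long as you pick one.

```python
from urllib.parse import urlsplit, urlunsplit


def preferred_url(url, use_www=False):
    """Rewrite a URL so its host matches the preferred www/non-www version."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    bare = host[4:] if host.startswith("www.") else host
    netloc = f"www.{bare}" if use_www else bare
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, parts.fragment))


def needs_redirect(url, use_www=False):
    """True when the URL is not already on the preferred host."""
    return preferred_url(url, use_www) != url
```

A request for which `needs_redirect` is true should receive a 301 redirect to the URL returned by `preferred_url`.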

The H1 tag is empty

Your H1 tag is the top-level page heading. Users see it first when they land on your page. Search engines also use H1 to understand what your page is about. It is the second most important tag after the title, so it needs clear, relevant text to communicate the topic of your content effectively. While some pages might have multiple H1s, it’s much less convenient for users if you have empty ones. Plus, you’re missing an opportunity to give search engines an extra signal about your page’s relevance.

Fixed width value in viewport meta tag

When your viewport meta tag doesn’t include the device-width value, your pages can’t properly adapt to different screen sizes. This downgrades user satisfaction. Users might even end up seeing a zoomed-out version of your desktop site instead of a properly scaled mobile view. To fix this, set the width in your viewport meta tag to device-width (for example, content="width=device-width, initial-scale=1") to keep your site working well on all devices.
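A quick way to spot this issue is to read the viewport meta tag and check its width setting. The sketch below uses Python’s standard HTML parser; `ViewportFinder` and `viewport_issue` are names invented for this example, and the check covers only the width value discussed here.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Remembers the content of the page's viewport meta tag, if any."""

    def __init__(self):
        super().__init__()
        self.content = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "viewport":
            self.content = attributes.get("content") or ""


def viewport_issue(html):
    """Return a description of the viewport problem, or None if it looks fine."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.content is None:
        return "missing viewport meta tag"
    settings = {}
    for part in finder.content.split(","):
        key, _, value = part.partition("=")
        settings[key.strip().lower()] = value.strip().lower()
    if settings.get("width") != "device-width":
        return "viewport width is not device-width"
    return None
```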

Blocked by nofollow

A nofollow directive in the head section of the page’s HTML code prevents search engines from following any links on that page. While this might be ideal in some cases, double-check that it is intentional. Also, make sure your robots.txt isn’t blocking these pages. If it is, search engines won’t see your nofollow instruction, which could lead to inappropriate crawling.

Blocked by robots.txt

Robots.txt provides crawling recommendations. If it blocks your page, search bots can’t access its content. It’s similar to how the noindex directive works, with one caveat. If other pages or resources link to the page blocked by the robots.txt, bots can still index it. Make sure it only blocks unnecessary or private parts of your site, not essential pages that you intend to have indexed.

Uncompressed content

When your page’s response header doesn’t include Content-Encoding, your pages are being served without compression. Uncompressed pages mean larger file sizes, which slow loading times. Slow-loading pages frustrate users and hurt your search engine rankings, as page speed is a key ranking factor. Enable compression on your server so that responses include the Content-Encoding header and your pages load faster.

Total Blocking Time (TBT)

Total Blocking Time measures how long users are unable to interact with your page because it’s busy processing scripts. A high TBT means visitors face delays when trying to click, type, or scroll. This typically occurs due to large or heavy scripts. Splitting these scripts into smaller chunks and loading them incrementally can help reduce these delays.

X-default hreflang attribute missing

When running a multilingual site, you need a backup page for visitors whose language isn’t covered by any of your language versions. The x-default hreflang tag serves this purpose: it tells search engines which version to show when there’s no match for a visitor’s language preference. While the x-default tag is optional, it gives users from different regions a better experience.

Summing it up

Remember that the biggest errors in SEO are always the ones you don’t know about. Combat this by auditing your website regularly. This helps you keep even minor health problems in check while also preventing them from becoming bigger issues later on.

Beyond technical factors, content quality plays a pivotal role in SEO performance. Google’s Page Quality Guidelines highlight the importance of E-E-A-T—Experience, Expertise, Authoritativeness, and Trustworthiness—in evaluating web pages. Ensuring your content aligns with these principles can significantly impact your site’s search rankings. For a comprehensive understanding, refer to our article about Page Quality Rating Guidelines.

By the way, you can check any website’s SEO and see how well-optimized it is for both search engines and users with our SEO Analyzer tool.