Taking control: Removing your content from AI Overviews [and switching them off]

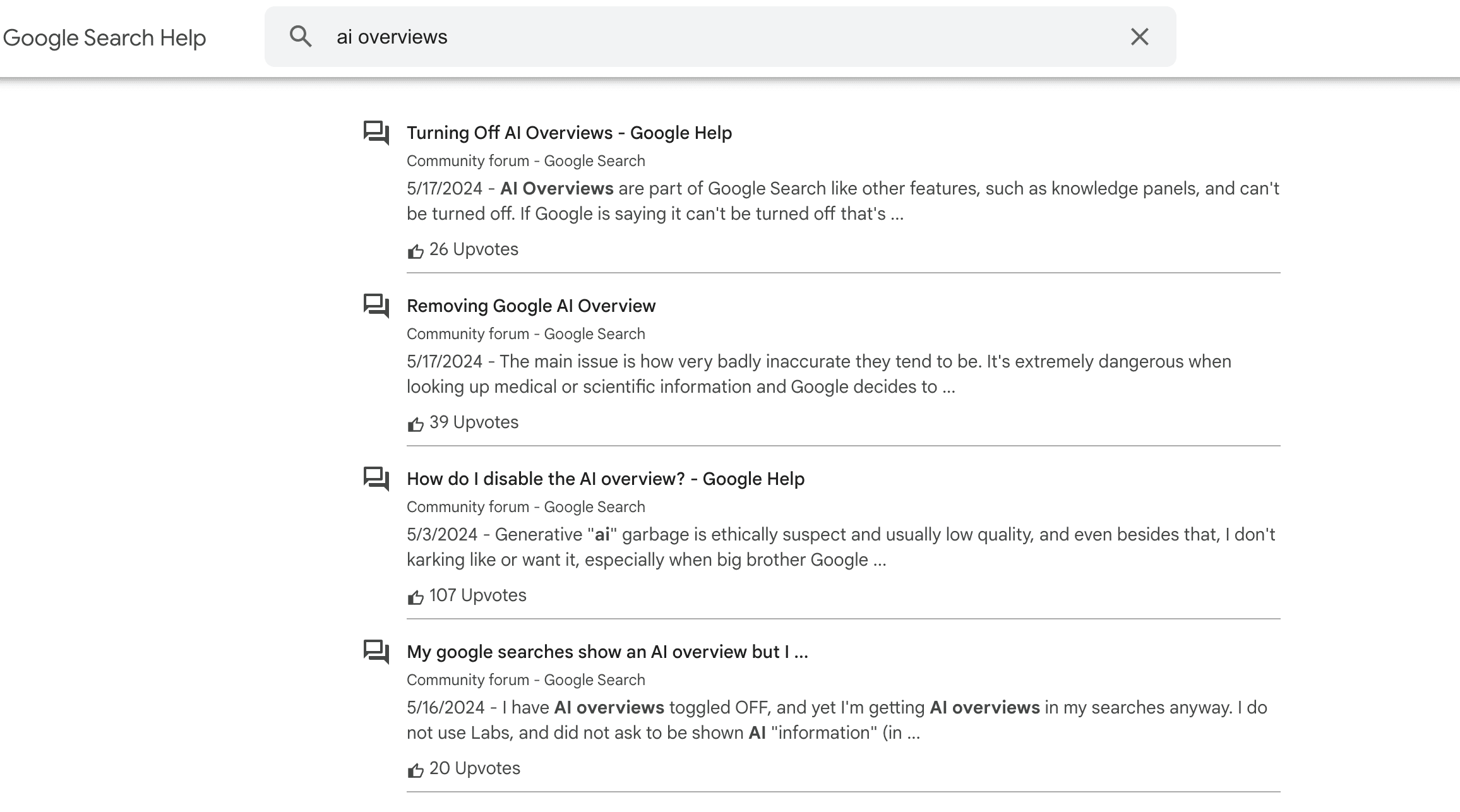

Google recently announced that AI Overviews (formerly SGE) went live in search results in the US. While the search engine claims that users are satisfied with the quality of these AI-generated responses, frequent complaints about the feature’s poor accuracy, lack of usefulness, and content scraping tell a different story.

It’s no surprise that website owners and content creators are trying to figure out how to avoid this new Google feature. Some are dissatisfied with AI Overviews and want to switch them off on SERPs; others are looking for ways to keep their content out of AI-generated answers and protect it from plagiarism. A search for “AI Overviews” on the Google Search Community forum turns up many threads where users ask exactly these questions.

Let’s explore how to handle each scenario.

How to stop your website from appearing in AI Overviews

As stated in Google’s Privacy Policy, the search engine “may collect information that’s publicly available online or from other public sources to help train Google’s AI models.” This means that Google can use any of your published content to generate AI Overviews.

The only way to completely opt out of having your website’s content used for AI-powered answers is to remove your site from Google’s index. But wait. This action also removes your website from search! Pretty drastic, right?

While we’ll still explain how to do it, we’ll also share some less radical techniques in this post. They can help you partially keep your content out of Google’s AI-generated answers without tanking your search visibility.

Use preview controls

Google ultimately decides what to include in search snippets and how to display them. However, you can use preview control directives to tell Google how you want your snippets to appear or if you want them shown at all.

You can use the following directives to limit or remove your content from AI Overviews:

- data-nosnippet: This HTML attribute prevents specific pieces of your content from appearing in the search snippet.

You can add it to span, div, or section elements, but make sure your HTML is valid and all tags are closed. Here’s an example:

<p>This text can be shown in a snippet
<span data-nosnippet>This text should not be shown in a snippet</span>.</p>

- nosnippet: This directive removes the text snippet for a URL from the search results entirely. Unlike data-nosnippet, it isn’t an HTML attribute but a page-level rule.

You have two options for implementing the nosnippet directive:

As a meta tag:

<meta name="googlebot" content="nosnippet">

As an X-Robots-Tag HTTP header:

X-Robots-Tag: googlebot: nosnippet

Note the “googlebot” user agent in both examples. If you use name="robots" instead (or omit the user agent in the header), the directive applies to all search engines that support it.
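To double-check that these preview controls are actually in your pages, you could scan the HTML. The sketch below uses Python’s standard html.parser module; the class name PreviewControlAudit and the audit() helper are hypothetical. It only covers the two points from this section: data-nosnippet placed on span, div, or section elements, and a nosnippet rule in a googlebot (or robots) meta tag. It doesn’t validate your HTML or read X-Robots-Tag headers.

```python
from html.parser import HTMLParser

# Elements this section lists as valid hosts for data-nosnippet.
ALLOWED_NOSNIPPET_TAGS = {"span", "div", "section"}


class PreviewControlAudit(HTMLParser):
    """Collects preview-control signals from one HTML document (illustrative sketch)."""

    def __init__(self) -> None:
        super().__init__()
        self.googlebot_nosnippet = False   # True if a googlebot/robots meta tag says nosnippet
        self.nosnippet_tags: list[str] = []  # every tag carrying data-nosnippet, in order
        self.misplaced: list[str] = []       # data-nosnippet tags outside the allowed set

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names; valueless attributes have value None.
        attr_map = {name.lower(): (value or "") for name, value in attrs}
        if "data-nosnippet" in attr_map:
            self.nosnippet_tags.append(tag)
            if tag not in ALLOWED_NOSNIPPET_TAGS:
                self.misplaced.append(tag)
        if tag == "meta":
            name = attr_map.get("name", "").lower()
            directives = {d.strip().lower() for d in attr_map.get("content", "").split(",")}
            # "robots" applies to all crawlers, Googlebot included.
            if name in {"googlebot", "robots"} and "nosnippet" in directives:
                self.googlebot_nosnippet = True


def audit(html: str) -> PreviewControlAudit:
    """Parse an HTML string and return the collected findings."""
    parser = PreviewControlAudit()
    parser.feed(html)
    parser.close()
    return parser


if __name__ == "__main__":
    page = ('<meta name="googlebot" content="nosnippet">'
            '<p>Shown <span data-nosnippet>hidden</span> <a data-nosnippet href="#">x</a></p>')
    result = audit(page)
    print(result.googlebot_nosnippet, result.nosnippet_tags, result.misplaced)
```

In the sample page, the anchor tag would be flagged as misplaced because data-nosnippet isn’t meant for a elements.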

We’ve already covered these directives on our blog. For more information about them, read our guide on robots meta tags and X-Robots-Tag.

Unfortunately, even if your snippet disappears from search results after using these methods, your content can still resurface in AI Overviews. Glenn Gabe highlighted an instance where the link to his post reappeared in an AI Overview about a week after implementing the nosnippet attribute.

He reported this to Google. In response, John Mueller said that the content/links would eventually disappear, but it may take time for Google to process.

Trapped – Part 2 🙂 -> Based on my case study, and finding my content BACK in the AI Overview (even after using nosnippet), @johnmu checked with the team working on AI Overviews. They explained that my link should be removed from the AI Overview over time and that aspect just…

— Glenn Gabe (@glenngabe) May 22, 2024

This suggests that while preview controls can reduce how often your content appears in AI Overviews, there are no guarantees. If you’re looking for a surefire way to protect your site and don’t mind taking more drastic measures, consider restricting crawling or noindexing your content entirely.

But think twice before doing this.

Disallow crawling for Googlebot

Use Disallow directives in robots.txt to close either your entire website or specific folders from crawling. If Google can’t crawl a page, it can’t read (and therefore can’t index) its content, although the bare URL can still be indexed if other pages link to it. Note that these directives are recommendations to search engines, meaning bots can choose not to obey them. Also, the Disallow directive has no control over content that has already been crawled and indexed: previously crawled pages will remain in the search index and can appear in AI Overviews.

Instructions for the website level:

User-agent: Googlebot
Disallow: /

Instructions for the folder level:

User-agent: Googlebot
Disallow: /folder-name/

Specify the search engine you want to block (user-agent) and carefully choose which parts of your site to exclude from crawling, so you don’t inadvertently block important ones.
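To sanity-check rules like these before deploying them, you can run them through Python’s standard urllib.robotparser module. This is a minimal sketch: the /private-folder/ path and example.com URLs are placeholders, and Python’s parser is simpler than Google’s (it matches plain path prefixes and doesn’t implement Google’s * and $ wildcards), so treat the result as a quick check rather than a guarantee of how Googlebot will behave.

```python
from urllib.robotparser import RobotFileParser

# Placeholder rules mirroring the folder-level example; "/private-folder/" is hypothetical.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private-folder/
"""


def can_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in (
        "https://example.com/blog/post",
        "https://example.com/private-folder/report",
    ):
        verdict = "allowed" if can_crawl(ROBOTS_TXT, "Googlebot", url) else "blocked"
        print(url, verdict)
```

Running it should report the blog post as allowed and the /private-folder/ page as blocked for Googlebot, while other crawlers remain unaffected because the group names only Googlebot. Also keep each User-agent line directly above its Disallow lines: Python’s parser treats a blank line right after User-agent as the end of that group and silently drops the rule that follows.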

Check our post on robots.txt to get detailed instructions on using this file and setting directives.

Noindex for Google

The most drastic method for removing your content from Google’s AI Overviews is to noindex your site or pages. However, this approach will also remove your content from traditional Google Search, Discover, and News results.

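To close specific pages from indexing, use the robots meta tag or the X-Robots-Tag HTTP header. It works the same way as the preview controls above, but with the noindex directive instead. Keep in mind that Google has to crawl a page to see its noindex directive, so don’t block that same page in robots.txt.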

As a meta tag:

<meta name="googlebot" content="noindex">

As an X-Robots-Tag HTTP header:

X-Robots-Tag: googlebot: noindex
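The header variant is handy for files where you can’t add a meta tag, such as PDFs. It’s usually configured in your web server, but as a minimal, framework-free sketch, here’s Python WSGI middleware that adds X-Robots-Tag: googlebot: noindex to every response under one path prefix. The /drafts/ prefix and the function names are hypothetical placeholders.

```python
from wsgiref.simple_server import make_server

NOINDEX_PREFIX = "/drafts/"  # hypothetical section to keep out of Google's index


def add_noindex_header(app, prefix=NOINDEX_PREFIX):
    """Wrap a WSGI app so responses under `prefix` carry X-Robots-Tag: googlebot: noindex."""
    def middleware(environ, start_response):
        path = environ.get("PATH_INFO", "")

        def patched_start_response(status, headers, exc_info=None):
            if path.startswith(prefix):
                # Append the header without mutating the app's original list.
                headers = list(headers) + [("X-Robots-Tag", "googlebot: noindex")]
            return start_response(status, headers, exc_info)

        return app(environ, patched_start_response)
    return middleware


def site(environ, start_response):
    """Stand-in for your real application."""
    start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
    return [b"<p>Hello</p>"]


application = add_noindex_header(site)

if __name__ == "__main__":
    # Local test server only; serve a real site through a production WSGI server.
    with make_server("127.0.0.1", 8000, application) as server:
        server.serve_forever()
```

A request for /drafts/anything gets the noindex header, while the rest of the site is served unchanged.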

Whatever approach you choose, remember that it can take time for Google to fully process your directives and for you to see the results. The timeframe depends on how frequently Google recrawls your pages. You can also request that Google recrawl them. However, until that happens, your content can still appear in AI Overviews.

Note on Google-Extended!

Blocking the Google-Extended user agent in robots.txt won’t prevent your content from appearing in AI Overviews. This user agent token was initially introduced to control the use of content for training Google’s AI tools, but Google later clarified that it only affects Gemini Apps and Vertex AI, not Search (including AI Overviews).
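Python’s urllib.robotparser can illustrate the robots.txt side of this: a group applies only to the user agents it names, so a rule for Google-Extended says nothing about Googlebot, which is what Search relies on. A minimal sketch with a placeholder URL; it only models robots.txt matching, not how Google actually uses the Google-Extended token internally.

```python
from urllib.robotparser import RobotFileParser

# Blocks only the Google-Extended token; no group targets Googlebot.
ROBOTS_TXT = """\
User-agent: Google-Extended
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://example.com/article"  # placeholder URL
print("Google-Extended:", parser.can_fetch("Google-Extended", url))  # → False
print("Googlebot:", parser.can_fetch("Googlebot", url))  # → True
```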

How to stop AI bots from scraping your content

Website owners are also increasingly concerned about AI bots scraping their content. This can potentially devalue their original work and reduce traffic to source websites.

Some companies use web scrapers openly and honestly. For instance, OpenAI and Google have agreements with Reddit to use Reddit users’ posts to train their AI systems. But others aren’t as transparent, so site owners are searching for ways to protect their content.

One method is through the robots.txt file, which allows you to block specific AI bots. For instance, to prevent OpenAI’s ChatGPT from accessing your site, add the following lines to your robots.txt:

User-agent: ChatGPT-User
Disallow: /

However, many AI bots don’t follow these recommendations and continue scraping sites’ content.

Services like Cloudflare address this issue. Cloudflare has developed a feature designed to block all AI bots, even those that claim to adhere to scraping protocols. This helps keep your original content exclusive and protected against unauthorized scraping.
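Cloudflare’s feature works at the network level and isn’t something you can reproduce in a few lines. Still, the underlying idea, refusing requests from known AI bots instead of asking them to comply, can be sketched on your own server. Below is a minimal WSGI middleware in Python that answers 403 Forbidden to user agents on a blocklist. The blocklist (BLOCKED_AGENT_TOKENS) and the function names are hypothetical; only ChatGPT-User comes from the robots.txt example above. Because user-agent strings are easy to fake, this stops only bots that identify themselves honestly, including those that simply ignore robots.txt.

```python
from wsgiref.simple_server import make_server

# Hypothetical blocklist: case-insensitive substrings matched against the User-Agent
# header. ChatGPT-User comes from the robots.txt example; extend as needed.
BLOCKED_AGENT_TOKENS = ("chatgpt-user",)


def block_listed_bots(app):
    """Wrap a WSGI app and answer 403 Forbidden to blocklisted user agents."""
    def middleware(environ, start_response):
        user_agent = environ.get("HTTP_USER_AGENT", "").lower()
        if any(token in user_agent for token in BLOCKED_AGENT_TOKENS):
            start_response("403 Forbidden", [("Content-Type", "text/plain; charset=utf-8")])
            return [b"Forbidden"]
        # Everyone else gets the normal site.
        return app(environ, start_response)
    return middleware


def site(environ, start_response):
    """Stand-in for your real application."""
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"Hello"]


application = block_listed_bots(site)

if __name__ == "__main__":
    # Local test server only.
    with make_server("127.0.0.1", 8000, application) as server:
        server.serve_forever()
```

Unlike a robots.txt rule, this is enforced by your server, so a self-identified bot is refused whether or not it chooses to obey.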

How to turn off AI Overviews

Will Google offer its users a way to hide AI Overviews? Even though Bing let you deactivate Copilot and Google previously offered an option to disable SGE in Search Labs, it’s doubtful that the trend will continue with AI Overviews.

Let’s see what options you have as of now:

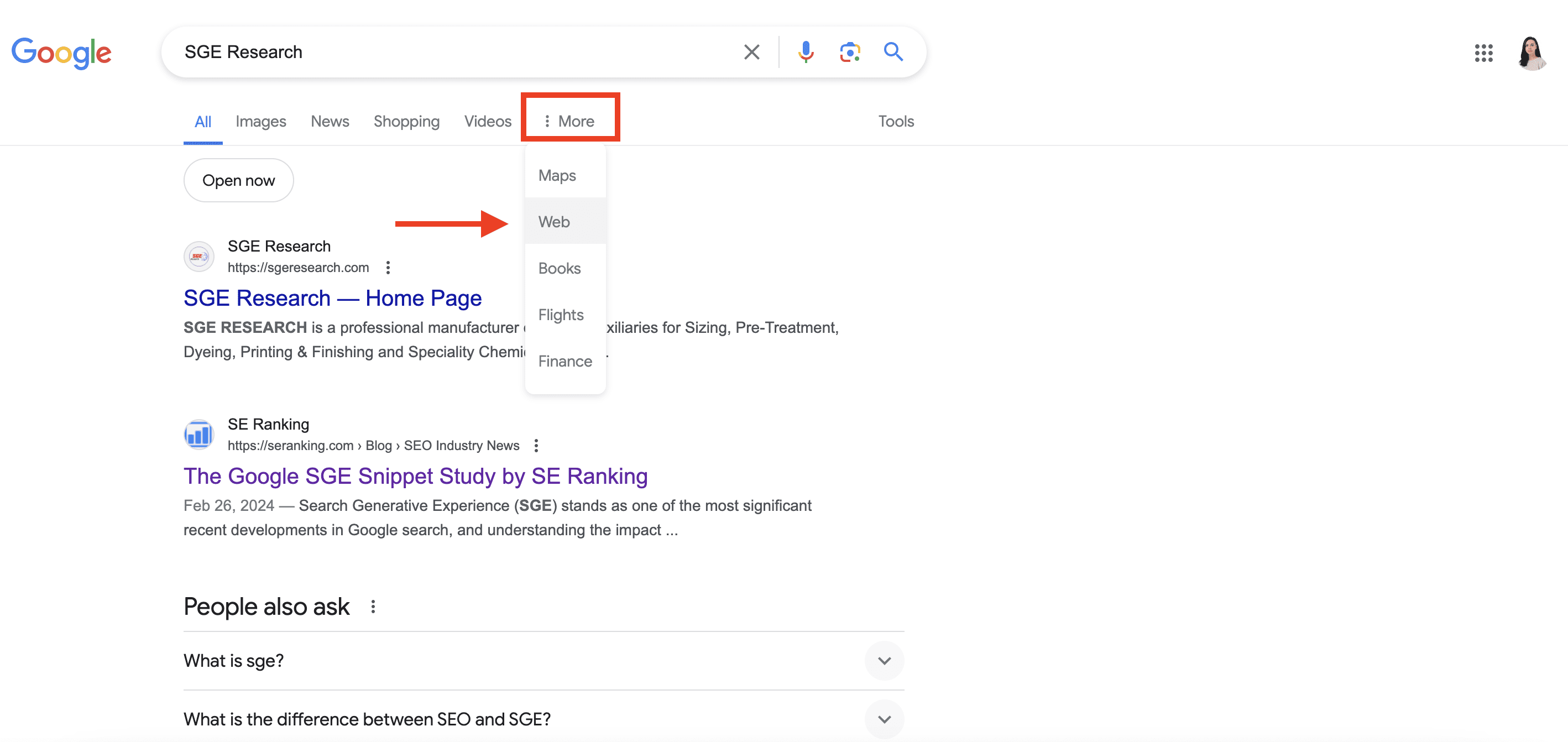

“Web” filter

To hide AI-generated answers, as well as some other elements (knowledge panels, featured snippets, etc.), on Google Search results, use the ‘Web’ filter located just below the search bar. In some cases, it may be hidden under the More button. This way, you’ll see only traditional blue links without any other SERP features.
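If you’d rather land on the Web filter directly, you can build the search URL yourself. This sketch assumes the udm=14 query parameter, which is undocumented but at the time of writing opens Google’s Web filter; Google could change or drop it at any time. The function name is hypothetical.

```python
from urllib.parse import urlencode


def web_only_search_url(query: str) -> str:
    """Build a Google search URL that opens the "Web" results filter.

    Assumption: udm=14 is an undocumented parameter that currently selects
    the Web filter. Google may change or remove it.
    """
    return "https://www.google.com/search?" + urlencode({"q": query, "udm": "14"})


print(web_only_search_url("ai overviews"))
# → https://www.google.com/search?q=ai+overviews&udm=14
```

To make this your default, most browsers (Chrome included) let you add a custom site search whose URL uses %s as the query placeholder, for example https://www.google.com/search?q=%s&udm=14.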

Chrome extensions

As another option to hide AI-generated answers from your SERPs, you can find browser extensions like Hide AI Overviews and Bye Bye, Google AI on the Chrome Web Store.

We don’t recommend using extensions to hide AI Overviews. Treat them as a fallback to consider only if turning off AI Overviews is non-negotiable for you.

Keep in mind that hiding AI Overviews won’t stop Google from generating them or using your website’s content. Google will still create these AI-generated answers behind the scenes. They just won’t be visible in your SERPs.

Bottom line

Unfortunately, your options for keeping your content out of Google’s new AI Overviews are few, and each has its limits. There is no perfect solution.

You can control your snippets, but this won’t stop Google AI from seeing and using your content. Going nuclear with a full noindex keeps you out of AI Overviews but also removes you from traditional search results. If you just want to hide AI Overviews from your own search results, unofficial browser extensions can help. However, this treats the symptom, not the cause.

The approach you take, if any, is ultimately up to you. It depends on your priorities surrounding content “safety” from AI versus discoverability.