Review platforms lost up to 92% of their organic traffic in two years, yet they still rank among the top AI Overview citations for commercial queries

To be mentioned in AI answers as a recommendation, you obviously need a strong website with clear, structured information. But your own site isn’t enough. LLMs also look at external sources when forming their responses. And among these, review platforms often play a role.

And now G2 has made things even more interesting by acquiring Capterra, Software Advice, and GetApp from Gartner. This gives G2 even more influence over which products show up in AI answers.

To understand exactly how visible review platforms are in AI Overviews, we analyzed 30,000 commercial keywords (i.e., those used to evaluate products/services) and tracked how 23 major review platforms appeared in AI answers.

Here’s a closer look at what we discovered.

-

Review platforms appear in roughly one-third of AI Overviews.

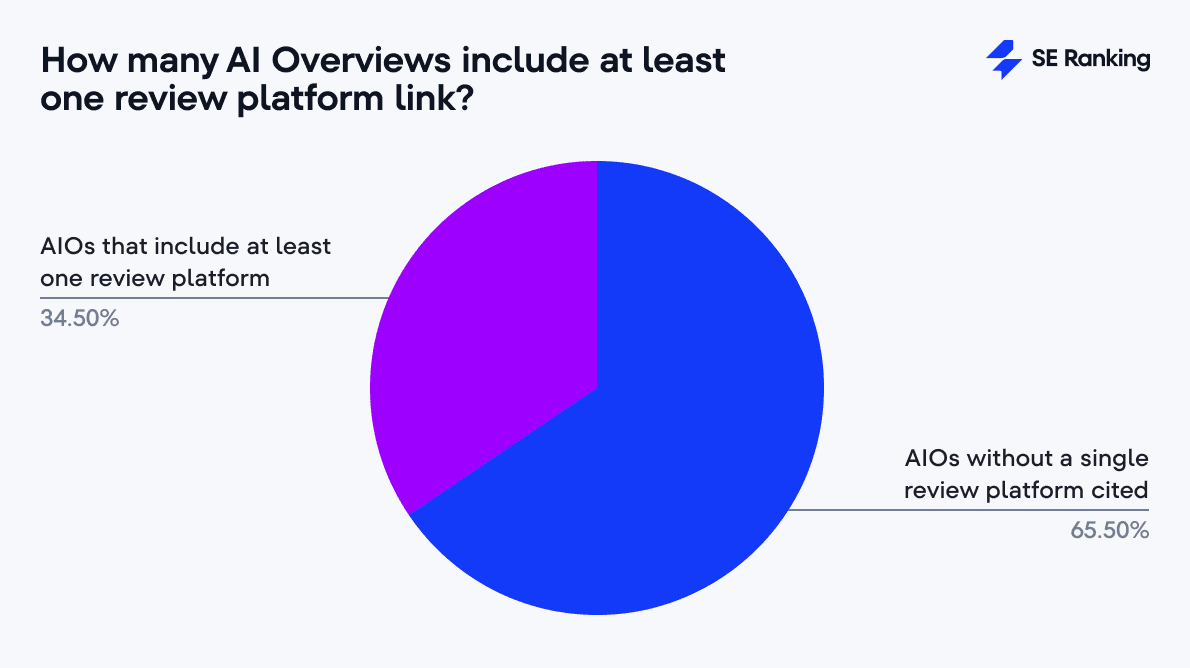

Out of all AI Overview (AIO) responses, 34.5% cited at least one review platform. The other two-thirds of AIOs relied on other types of sources (such as vendor websites, e-commerce platforms, corporate blogs, and social media platforms).

-

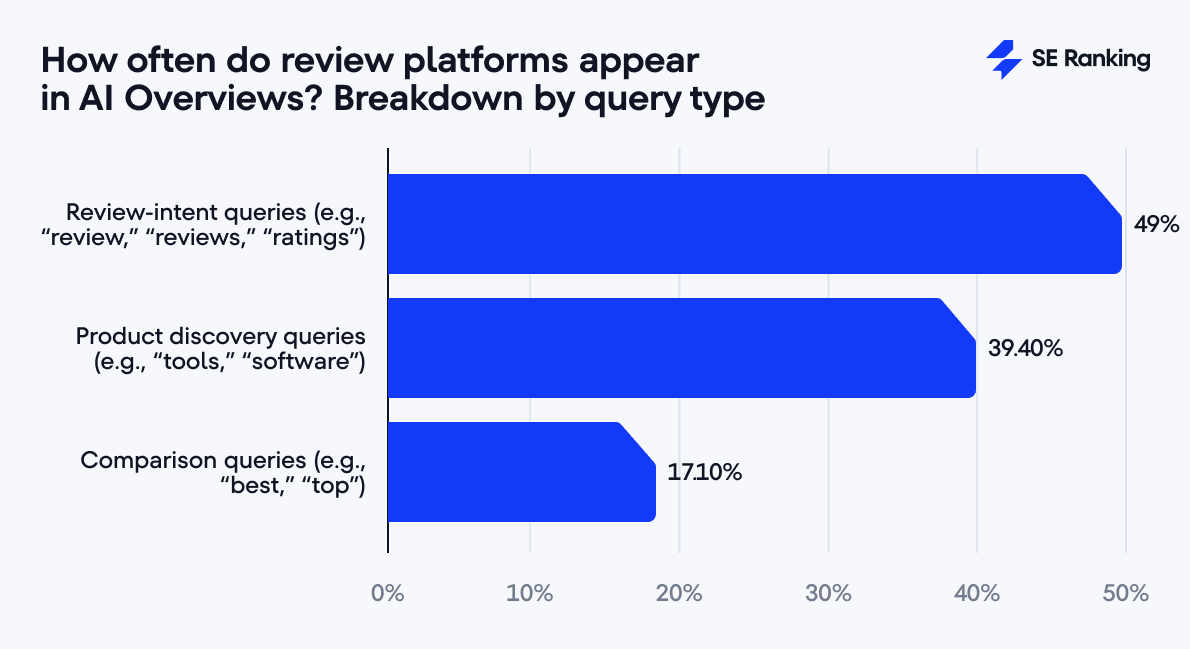

Query wording strongly affects the likelihood of review platforms appearing in AI Overviews.

Explicit review searches (those including terms like “review” or “rating”) have the highest share: 49% of these AI Overviews include at least one link to a review platform. In contrast, list-style queries with “best” or “top” trigger review platforms in only 17.1% of Overviews (roughly a third as often).

-

A small group of review platforms dominates citations in AI Overviews.

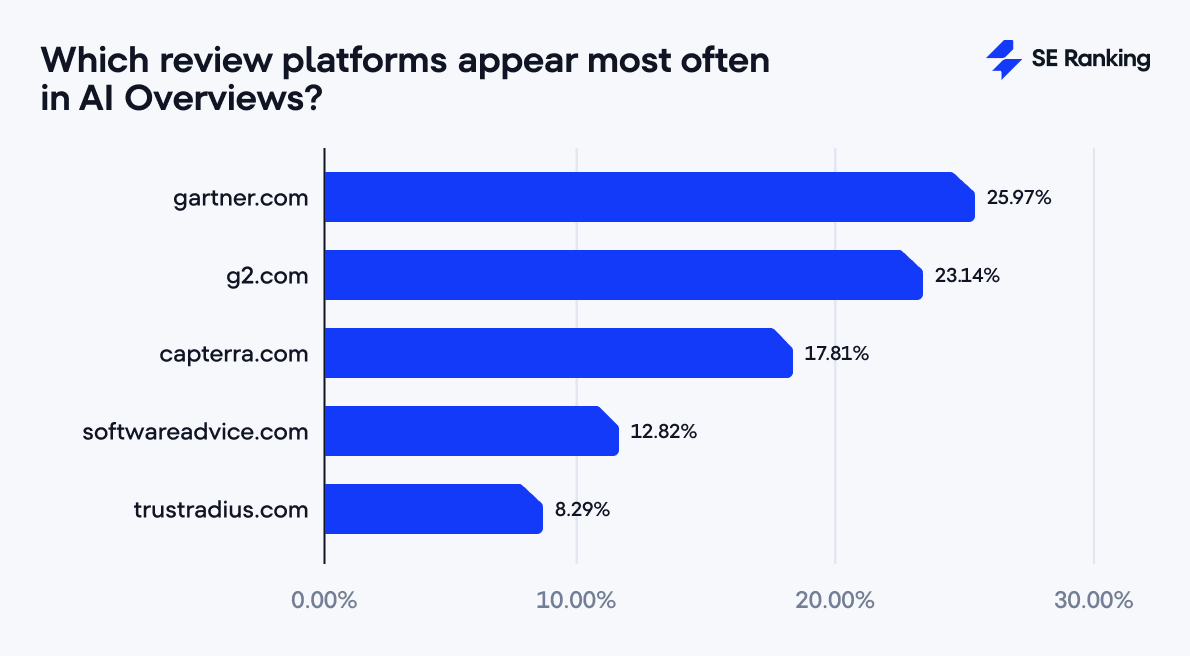

Gartner Peer Insights, G2, Capterra, Software Advice, and TrustRadius account for 88% of all review platform links. The leaders are separated by only a few percentage points, which suggests that Google treats them as roughly equivalent sources.

-

Beyond the top five, other review platforms have minimal visibility.

Platforms like GetApp and Clutch appear occasionally (2.51% and 2.38%, respectively), while others such as Product Hunt, SiteJabber, and PeerSpot are nearly invisible. Three platforms (AlternativeTo, SaaSHub, and FinancesOnline) were not cited at all.

-

There is no clear correlation between AI Overview citations and organic traffic retention.

Even platforms with the highest share of AI citations lost most of their organic traffic between early 2024 and the end of 2025. Declines ranged from 76.5% for Gartner Peer Insights to 92.2% for TrustRadius.

-

Review platforms are a small fraction of total links but earn disproportionate trust in AI Overviews.

They represent just 8.5% of all links, yet three of the top five cited domains are review sites (Gartner Peer Insights, G2, and Capterra). This suggests that Google treats the top review platforms as authoritative sources despite their limited overall presence.

-

When AI Overviews include review platforms, they often include more than one link.

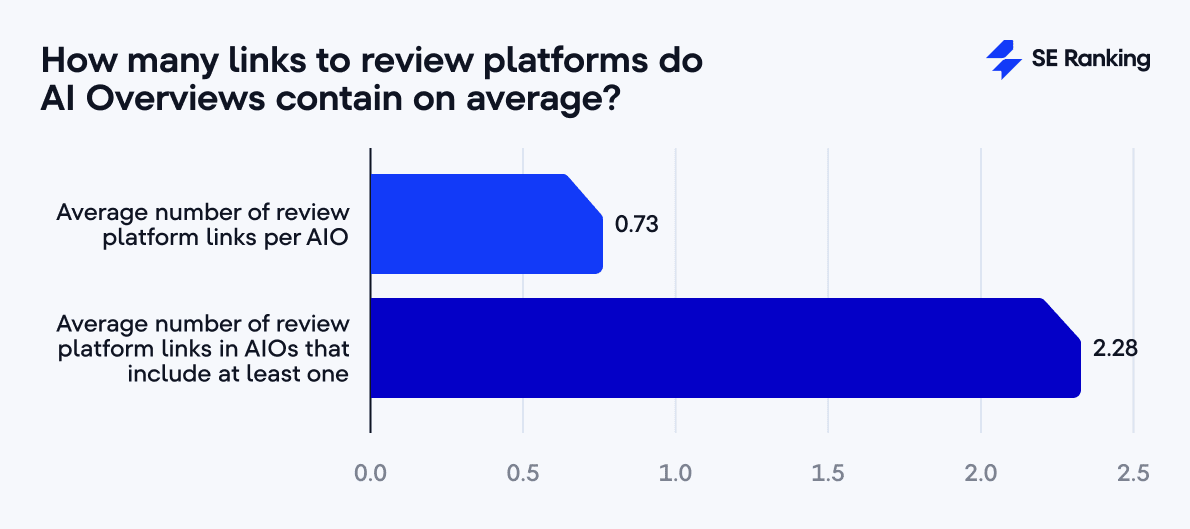

In nearly 40% of these cases, multiple distinct review platforms appear together, and AI Overviews that cite review platforms include an average of 2.28 review platform links. This suggests that Google often prefers to compare perspectives rather than rely on a single review platform.

-

Textual mentions of review platforms without links are rare.

Only 4.3% of AI Overview responses mention a review platform by name in the text without linking to it. When this does happen, the most likely explanation is that Google is referencing the platform for credibility alone.

Overall presence of review platforms in AI Overviews

You might think that Google’s AI would lean heavily on well-known review sites like Gartner Peer Insights, G2, and Capterra whenever it answers questions about products. After all, these are the sites we’ve relied on for years to check user experiences, ratings, and expert insights before buying.

But the reality is a bit different.

We analyzed AI Overviews for 22,729 queries, expecting review platforms to appear in most of them. Instead, only 34.5% of AI Overviews cited at least one review platform.

Put another way: roughly every third AI Overview pulls information from a review site. That’s significant, but it also means two-thirds of AIOs rely entirely on other types of sources.

This suggests that Google’s AI is fairly selective. It doesn’t just pile on the “obvious” sources. It appears to weigh trustworthiness, authority, and structured information, and the big review platforms aren’t always the first stop.

Now, let’s look beyond mere presence and examine the actual contribution of review platforms when they do appear.

The average AI Overview includes 9.29 total links, yet review platforms contribute only 0.73 links per response. That’s a tiny fraction, right? But here’s the catch: when a review platform does show up, it often appears multiple times.

In AI Overviews that include review sites, there are 2.28 review platform links per response on average. This counts every link, even when multiple links point back to the same review site.

So, an AI Overview might link to G2 to summarize user ratings, then to Capterra for feature comparisons, and finally to TrustRadius for pros and cons. Or it could pull all this information from the same platform. Which approach it takes likely depends on factors like the quality, completeness, and structure of the available sources.
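The two averages above are easy to confuse: 0.73 divides review-platform links by every AI Overview, while 2.28 divides them only by the Overviews that cite at least one review platform. The minimal Python sketch below illustrates that difference on a small hypothetical dataset (the domain strings and link lists are invented for illustration, and matching links by exact domain is a simplifying assumption, not the study's documented method).

```python
# Hypothetical toy data: each AI Overview is a list of cited domains.
# These values are illustrative only, not the study's dataset.
REVIEW_PLATFORMS = {"g2.com", "capterra.com", "gartner.com", "trustradius.com"}

overviews = [
    ["youtube.com", "reddit.com", "g2.com", "g2.com", "capterra.com"],
    ["vendor.example", "reddit.com"],
    ["gartner.com", "vendor.example"],
    ["vendor.example", "blog.example", "youtube.com"],
]

def review_links(domains):
    """Count every review-platform link, including repeats of the same site."""
    return sum(1 for d in domains if d in REVIEW_PLATFORMS)

counts = [review_links(o) for o in overviews]

# Average over ALL AI Overviews (the "links per response" style of metric).
avg_all = sum(counts) / len(counts)

# Average over only the AI Overviews that cite at least one review platform.
with_review = [c for c in counts if c > 0]
avg_conditional = sum(with_review) / len(with_review)

# Share of AI Overviews citing at least one review platform.
coverage = len(with_review) / len(counts)

print(f"coverage={coverage:.1%} avg_all={avg_all:.2f} avg_conditional={avg_conditional:.2f}")
```

Because the conditional average ignores Overviews with zero review links, it is always at least as large as the overall average.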

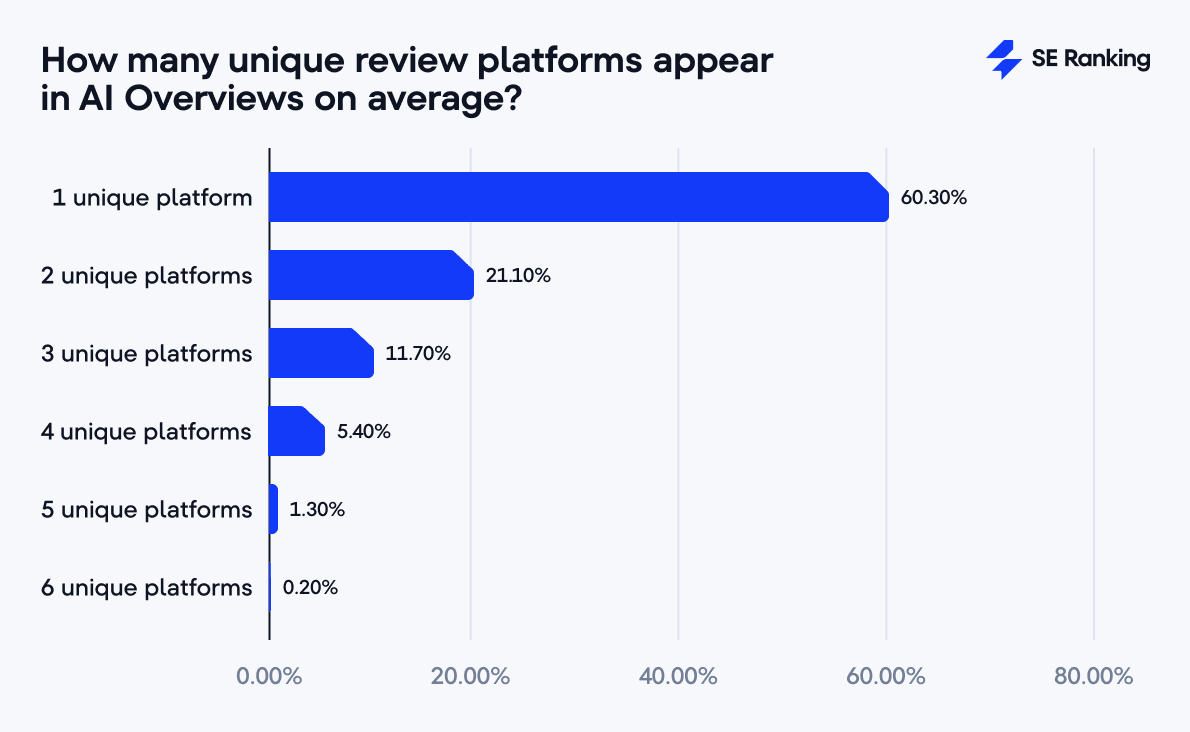

Now here’s where it gets interesting. If we stop counting total links and instead look at unique review platforms, the pattern changes.

Most AI Overviews stick to a small number of unique review sites:

- 60.3% link to only one review platform

- 21.1% link to two

- 11.7% link to three

The most we saw was seven different review platforms in a single response (and that only happened once).
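Counting unique platforms instead of total links only requires deduplicating each response's review-platform links before counting. A minimal sketch on hypothetical data (the link lists below are invented, not taken from the study):

```python
from collections import Counter

# Hypothetical review-platform links per AI Overview (only AIOs that cite
# at least one review platform). Illustrative data, not the study's.
review_links_per_aio = [
    ["G2", "G2", "Capterra"],
    ["Gartner Peer Insights"],
    ["G2", "G2"],
    ["Capterra", "TrustRadius", "Software Advice"],
    ["Gartner Peer Insights", "Gartner Peer Insights"],
]

# Total links counts repeats; unique platforms deduplicates with set().
total_links = [len(links) for links in review_links_per_aio]
unique_platforms = [len(set(links)) for links in review_links_per_aio]

# Distribution: how many AIOs link to 1, 2, 3, ... distinct review platforms.
distribution = Counter(unique_platforms)
n = len(review_links_per_aio)
for k in sorted(distribution):
    print(f"{k} unique platform(s): {distribution[k] / n:.1%}")

# Share of AIOs that cite two or more distinct review platforms.
multi_share = sum(1 for u in unique_platforms if u > 1) / n
print(f"AIOs with 2+ distinct platforms: {multi_share:.1%}")
```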

What does this tell us?

AI Overviews tend to lean on the same review platforms multiple times, rather than pulling in lots of different ones.

However, in nearly 40% of cases, they do include multiple unique review platforms, which gives users access to a wider range of perspectives.

Platform comparison: Gartner Peer Insights, G2, Capterra, and more

Our research shows that when Google’s AI pulls review platforms into AI Overviews, it doesn’t wander far. It relies heavily on a small group of trusted sources.

Specifically, five platforms dominate citations:

- Gartner Peer Insights – 26.0% of all review-platform links

- G2 – 23.1%

- Capterra – 17.8%

- Software Advice – 12.8%

- TrustRadius – 8.3%

Together, these five platforms account for 88% of all review platform citations in AI Overviews. That’s nearly nine out of every ten review platform links pointing back to the same handful of names.

What’s interesting is that the gap between them isn’t massive. Gartner Peer Insights leads G2 by only about three percentage points, and G2 is about five points ahead of Capterra. This suggests that Google sees several of these platforms as roughly comparable in authority (a kind of “tier one” circle of review credibility).
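As a quick arithmetic check, summing the five shares listed above reproduces the 88% figure, and the pairwise gaps show how close the leaders are. This sketch uses only the percentages reported in this study:

```python
# Shares of all review-platform links, as reported in the study (percent).
shares = {
    "Gartner Peer Insights": 26.0,
    "G2": 23.1,
    "Capterra": 17.8,
    "Software Advice": 12.8,
    "TrustRadius": 8.3,
}

# Round to one decimal to absorb floating-point noise in the sum.
top_five_total = round(sum(shares.values()), 1)
print(f"Top five combined: {top_five_total}%")  # Top five combined: 88.0%

# Gap between each platform and the next one down, in percentage points.
ranked = sorted(shares.items(), key=lambda kv: kv[1], reverse=True)
gaps = [(a[0], b[0], round(a[1] - b[1], 1)) for a, b in zip(ranked, ranked[1:])]
for leader, follower, gap in gaps:
    print(f"{leader} leads {follower} by {gap} pp")
```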

After the top five, however, visibility drops off sharply. Platforms like GetApp and Clutch appear occasionally, likely in cases where their content is uniquely relevant:

- Clutch, for example, is often cited for consulting firms, B2B services, or software with a strong agency or implementation component. If the AI is summarizing reviews of enterprise software providers rather than off-the-shelf SaaS, Clutch often becomes more valuable.

- GetApp might appear when pricing or feature comparisons are particularly well-documented for smaller tools.

Other platforms, such as Product Hunt, SiteJabber, or PeerSpot, are nearly invisible in AI Overviews. Their content may be less structured, less authoritative, or more community-driven than Google’s AI prefers. Some, like AlternativeTo, SaaSHub, and FinancesOnline, didn’t appear at all in our dataset.

Which keywords/prompts are most likely to trigger AI Overviews with review platforms?

For this part of the research, we wanted to see how much the wording of a query/prompt impacts the sources AI Overviews rely on, particularly when it comes to review platforms.

To understand this, we took 30,000 keywords and split them into three equal groups, each representing a different kind of search intent.

- The first group focused on explicit review searches, using terms like “review,” “reviews,” and “ratings.”

- The second group covered list-style searches, built around words like “best” and “top.”

- The third group included product discovery queries, such as searches for “tools” or “software,” without any direct reference to reviews.

In short, we wanted to see whether AI Overviews stick with official company sites and blogs when users don’t ask for reviews, or if large review platforms still find their way into the answers anyway.
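One way to assign keywords to these three groups is simple term matching. The sketch below is an assumption for illustration: the study doesn't publish its exact term lists or how it handled queries matching more than one group, so the term sets and the priority order here are hypothetical.

```python
import re

# Trigger terms based on the study's description of each group; the exact
# lists and matching rules the study used are not published (assumption).
INTENT_TERMS = {
    "review": {"review", "reviews", "rating", "ratings"},
    "list": {"best", "top"},
    "general": {"software", "tool", "tools"},
}
# Hypothetical tie-breaking: a query containing both "best" and "reviews"
# is treated as a review query because "review" is checked first.
PRIORITY = ["review", "list", "general"]

def classify(keyword: str) -> str:
    """Assign a keyword to the first intent group whose terms it contains."""
    tokens = set(re.findall(r"[a-z0-9]+", keyword.lower()))
    for intent in PRIORITY:
        if tokens & INTENT_TERMS[intent]:
            return intent
    return "other"

examples = [
    "asana reviews",
    "best project management tools",
    "email marketing software",
    "crm software for small businesses",
    "top crm",
]
for kw in examples:
    print(f"{kw!r} -> {classify(kw)}")
```

Matching whole tokens rather than substrings avoids false hits such as "top" inside "laptop".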

A little spoiler: “best” and “top” searches (like “best project management tools”) might sound like perfect use cases for review platforms, but in reality, AI Overviews usually look elsewhere for such cases (mainly review blogs, media sites, and ranking-style aggregators).

Explicit “review” searches

No surprises here. When a user explicitly includes words like “review,” “reviews,” or “rating,” AI Overviews are most likely to cite a review platform.

- 49% of these AIOs include review platforms

- Review platforms make up 16% of all cited sources

- AIOs that cite review platforms include 3.17 review platform links on average

It’s a classic case of “ask and you shall receive.” If the user signals that they want reviews, AI leans heavily on sites built to provide exactly that.

General “software” and “tools” searches

Even without explicit review intent, review platforms appear surprisingly often for queries that include words like “software” or “tools” (e.g., “email marketing software,” “project management tools,” “CRM software for small businesses”):

- 39.4% of AIOs still reference review platforms

- Review platforms account for 5.7% of sources

This is probably because these platforms provide structured info like pricing, features, and pros/cons (all things Google’s AI can summarize efficiently).

“Best” and “top” searches

For queries like “best project management tools,” you’d think review platforms would dominate. But no:

- Only 17.1% of AIOs include review platforms

- Review platforms account for just 2.7% of sources

Instead, AI Overviews favor blogs and ranking-style content. This was a surprising finding, and it suggests that “best of” content carries its own kind of authority in AI systems.

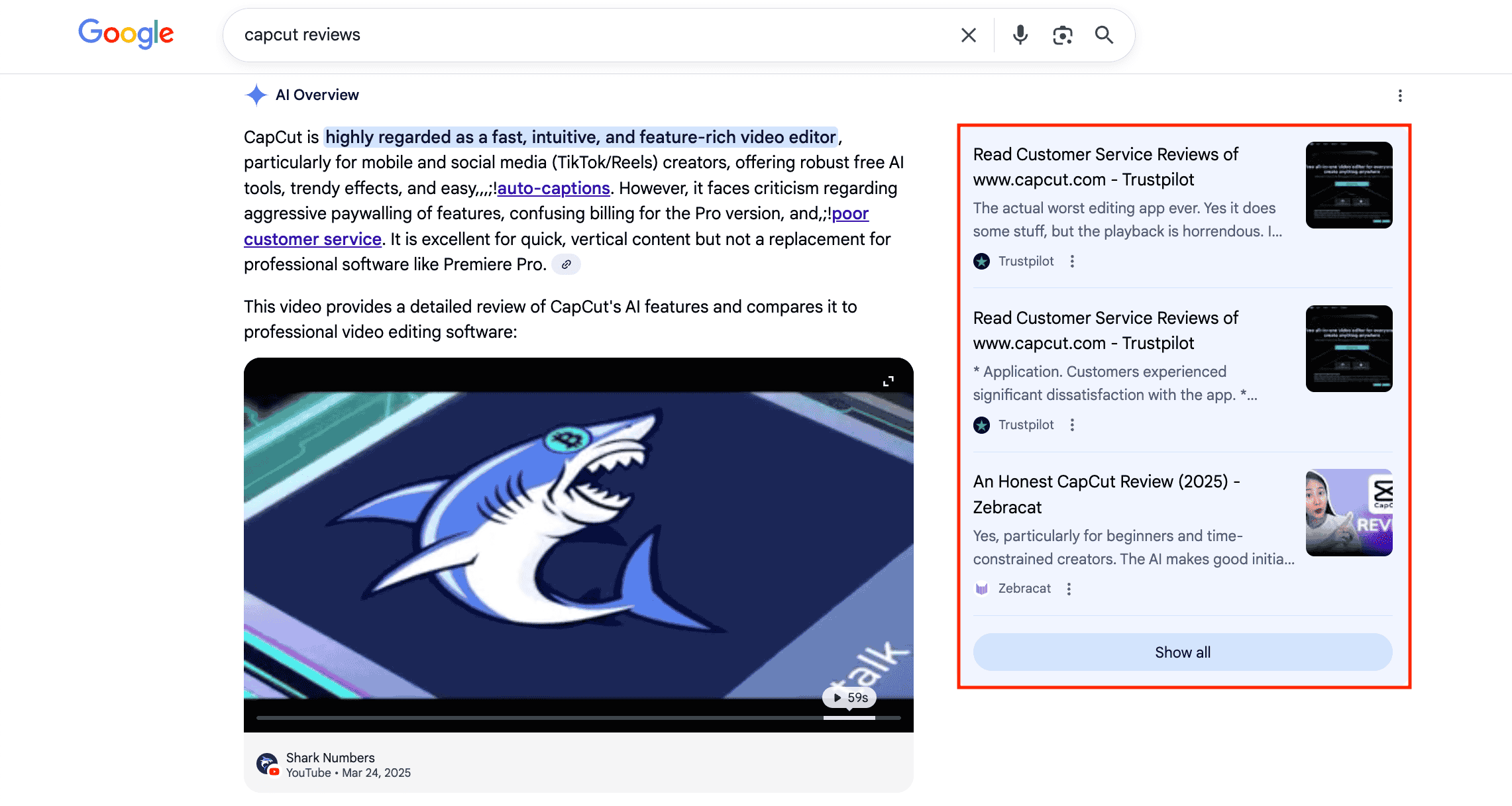

Where do review platforms appear in AI Overviews?

Google can present links in AI Overviews in a few distinct ways, and each placement has a different purpose and level of visibility.

Broadly speaking, review platforms can appear in two main ways:

- As links in the sources block on the right-hand side of the AI Overview (or at the bottom). These are the citations Google lists to show where its information comes from. Their main drawback is visibility: usually only the first three sources are immediately prominent, so the rest don’t always get attention.

- Directly within the AI text itself, either as clickable links (inline citations) or just as a textual mention without a link.

Each of these placements matters differently. Links in the sources block establish general credibility, while inline links highlight specific, relevant content (or signal contextual authority).

Let’s break down each case to see not just if review platforms appear, but how prominently they feature in AI Overviews.

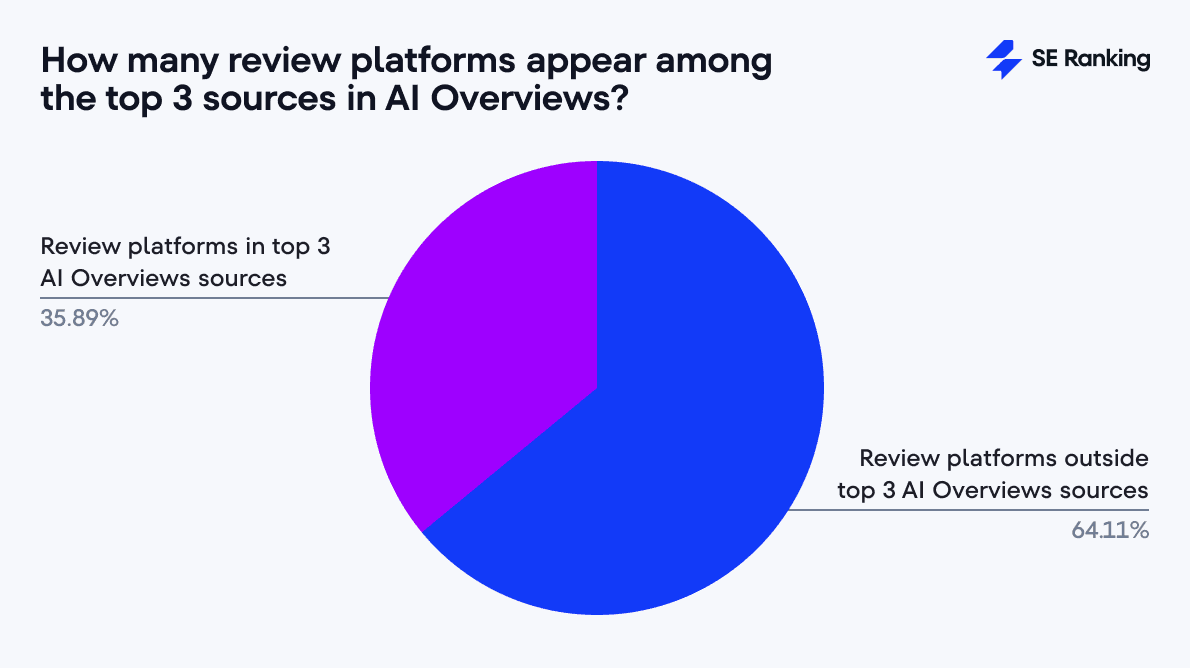

Links in the sources block

First things first, most review platform citations appear in the sources block of AI Overviews.

However, there’s an important nuance: when Google AI includes review platform citations in the sources block, only about 35.9% of links appear in the top three positions.

That means the majority of these citations are buried further down the list. Users need to scroll or expand the sources section to see them, which can reduce visibility and influence.

Even among the most cited platforms (Gartner Peer Insights, G2, Capterra, and TrustRadius), the median position is around 5, and the share of appearances in the top three ranges from 33% for TrustRadius to 37% for Capterra.

This shows an interesting dynamic: being heavily cited doesn’t guarantee top placement in AI Overviews. For users, this means that even well-known, highly credible platforms can be “buried” in the sources block.
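Both metrics in this section, the share of links in the top three positions and the median position, can be computed from a list of citation positions. A minimal sketch with invented positions for a single platform (not the study's data):

```python
from statistics import median

# Hypothetical 1-based positions of one platform's links in the sources
# block across several AI Overviews (illustrative, not the study's data).
positions = [2, 5, 7, 1, 5, 9, 4, 3, 6, 5, 8]

# Share of links that land in the highly visible top three slots.
top_three_share = sum(1 for p in positions if p <= 3) / len(positions)

# Median position is robust to a few links buried far down the list.
median_position = median(positions)

print(f"top-3 share: {top_three_share:.1%}, median position: {median_position}")
```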

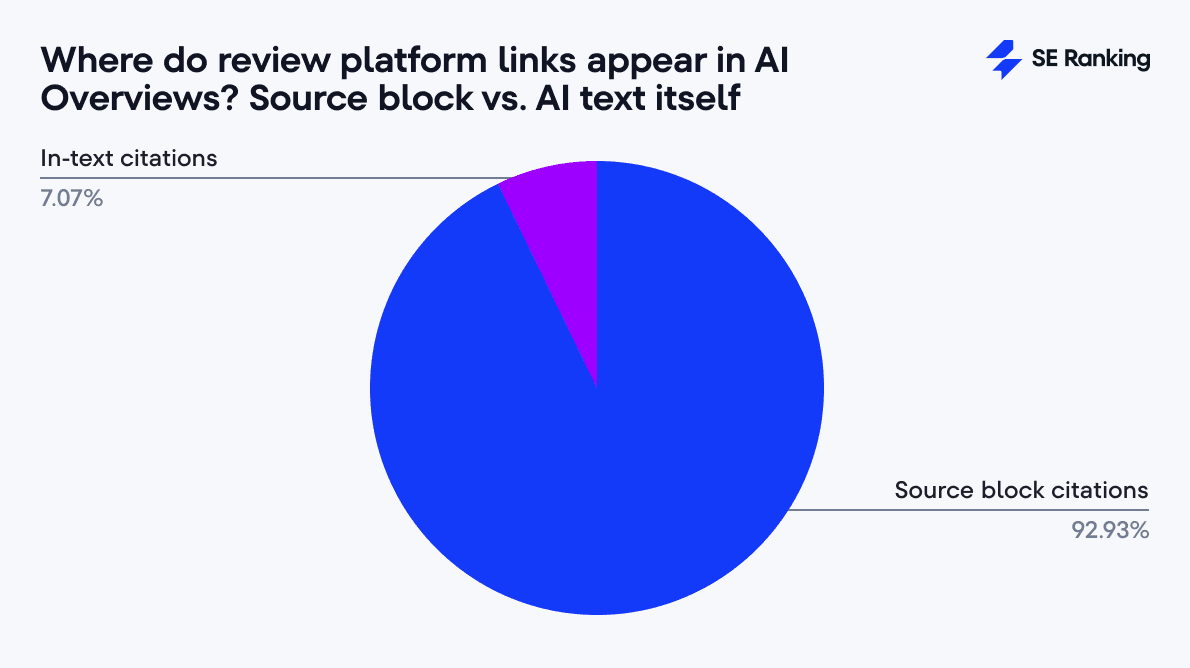

Inline citations in the AI text

Within the AI-generated text itself, review platforms are far less prominent.

Out of 17,916 detected links to review platforms, only about 7% appear directly in the text as inline links. The vast majority (roughly 93%) remain in the sources block on the right. This suggests that Google generally reserves inline links for content that is especially relevant or unique.

Interestingly, smaller platforms sometimes get more visibility inline than the big players:

- Clutch has 21.6% of its links inline

- Yelp has 15.8% inline

- Meanwhile, Gartner Peer Insights has only 6.2%, G2 8.1%, and Capterra around 6%

This creates an intriguing paradox.

The platforms that are most frequently cited in the sources block are less often linked directly in the text, while smaller platforms get more inline attention.

A likely explanation is that Google uses top platforms as general reference sources, while smaller platforms are highlighted when their content is particularly relevant to a specific point in the response.
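The inline share per platform is simply that platform's inline links divided by all of its links. A minimal sketch with hypothetical link records (the counts are invented and won't reproduce the percentages above):

```python
from collections import defaultdict

# Hypothetical link records: (platform, placement), where placement is
# "inline" (linked inside the AI text) or "block" (sources list).
links = [
    ("Clutch", "inline"), ("Clutch", "block"), ("Clutch", "block"),
    ("G2", "block"), ("G2", "block"), ("G2", "inline"), ("G2", "block"),
    ("Capterra", "block"), ("Capterra", "block"),
]

totals = defaultdict(int)
inline = defaultdict(int)
for platform, placement in links:
    totals[platform] += 1
    if placement == "inline":
        inline[platform] += 1

# Per-platform inline share, printed from highest to lowest.
inline_share = {p: inline[p] / totals[p] for p in totals}
for platform, share in sorted(inline_share.items(), key=lambda kv: -kv[1]):
    print(f"{platform}: {share:.1%} inline")

# Inline share across all review-platform links combined.
overall = sum(inline.values()) / sum(totals.values())
print(f"overall: {overall:.1%} inline")
```

A platform with few links overall can post a high inline share from just a handful of inline citations, which is one reason to read these percentages alongside raw counts.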

Textual mentions without links

Review platforms can also be referenced without any link at all, simply by mentioning the platform’s name. Still, this is a fairly rare scenario.

Only 4.3% of AI Overviews contain textual mentions of review platforms, which is much lower than the 34.5% of AIOs that include links to these platforms in the dedicated sources block on the right.

In other words, review platforms are roughly eight times more likely to be cited in the sources block than simply mentioned by name in the AI response text.

When these mentions do occur, there are typically multiple per response, averaging 2.1 mentions in a single AI Overview.

So, it seems that Google sometimes references platforms purely for contextual authority, without providing a link. For example, a phrase like “According to Yelp reviews…” adds credibility even when no URL is included.

How do review platforms compare with other domain types in AI Overviews?

To put review platforms in context, we compared them to other sources (including both block and inline citations) in AI Overviews for the same set of keywords.

For this, we first classified roughly 211,000 links into four categories:

- Vendor, e-commerce, and other sites – official software pages, corporate blogs, e-commerce platforms, and documentation.

- Social and community platforms – Reddit, YouTube, Quora, Medium, LinkedIn, Stack Exchange, etc.

- Review platforms – Gartner Peer Insights, G2, Capterra, TrustRadius, and others.

- Media outlets and popular blogs – tech publications like PCMag, TechRadar, CNET, and general news media like Forbes, TechCrunch, The Verge.

Next, we compared the share and coverage of each category. The results are summarized in the table below:

| Category | Number of links | Share of total | Coverage in AIOs |
|---|---|---|---|
| Vendor, e-commerce, and other sites | 153,044 | 72.5% | 99.1% |
| Social and community platforms | 28,346 | 13.4% | 60.1% |
| Review platforms | 17,916 | 8.5% | 34.6% |
| Media outlets and popular blogs | 11,653 | 5.5% | 28.5% |
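Assuming the table rows follow the order of the category list above (the review-platform row matches the 17,916 links reported earlier), the share-of-total column can be recomputed from the link counts alone. The four counts sum to 210,959:

```python
# Link counts per source category, from the study's table; the row-to-
# category mapping assumes the table follows the category list order.
link_counts = {
    "Vendor, e-commerce, and other sites": 153_044,
    "Social and community platforms": 28_346,
    "Review platforms": 17_916,
    "Media outlets and popular blogs": 11_653,
}

total = sum(link_counts.values())
# Share of all classified links, in percent, rounded to one decimal.
shares = {cat: round(100 * n / total, 1) for cat, n in link_counts.items()}

print(f"Total links: {total:,}")
for cat, share in shares.items():
    print(f"{cat}: {share}%")
```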

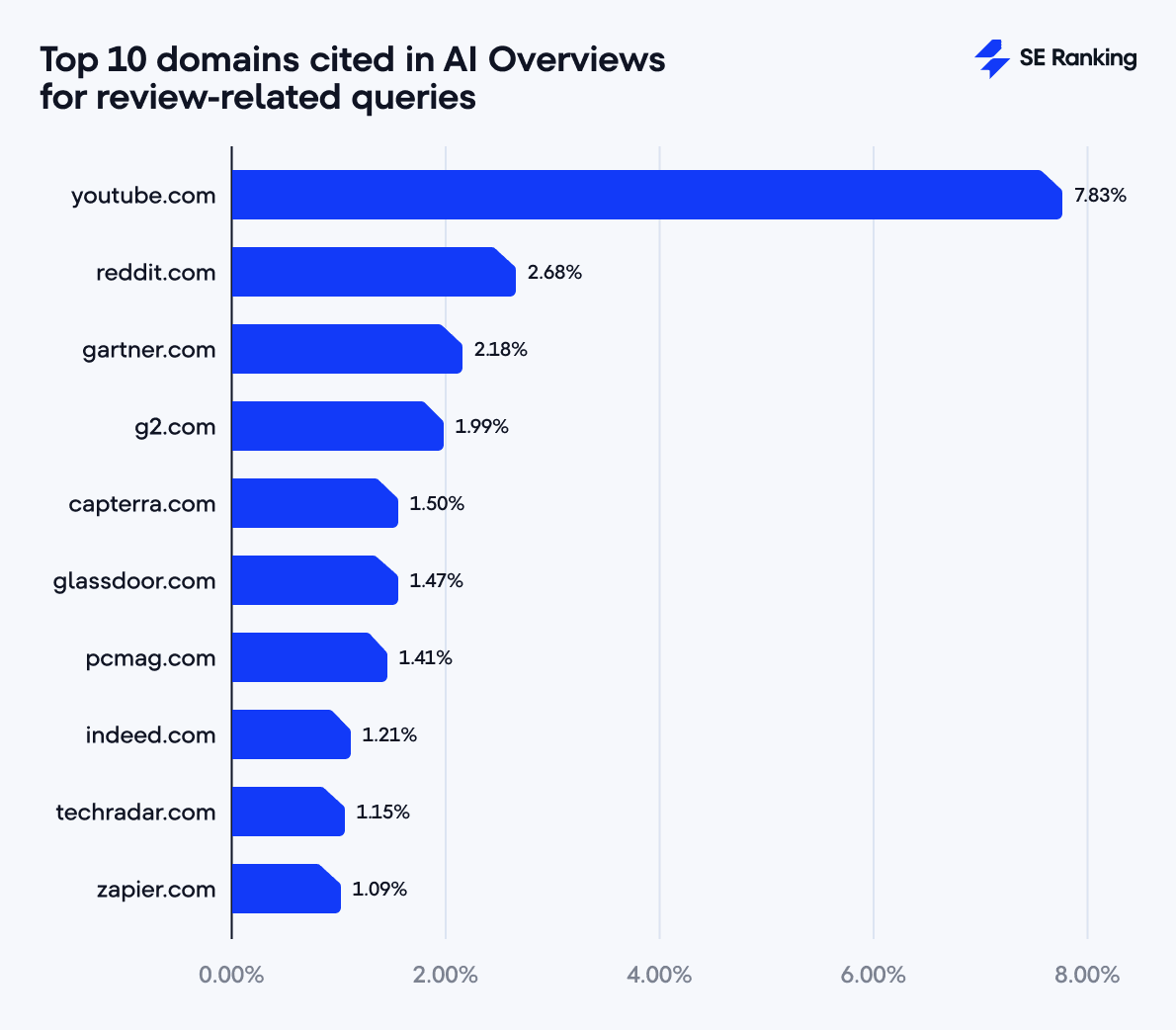

When we look at the top 10 most cited domains, review platforms hold a strong position:

Insights:

- Review platforms overall represent only 8.5% of all links, but three of the top five most cited domains are review sites (right after YouTube and Reddit).

- This suggests that while review platforms are a minority in quantity, Google treats the top platforms as highly authoritative.

Where are review platforms more visible: AI Overviews vs. organic search?

To understand whether being cited in AI Overviews makes a difference for review platforms, we also analyzed US organic search traffic for 23 review platforms from 2024 through 2025. This is basically the period from when AI Overviews first appeared to the point when they became widely adopted.

So, let’s go through our research findings step by step.

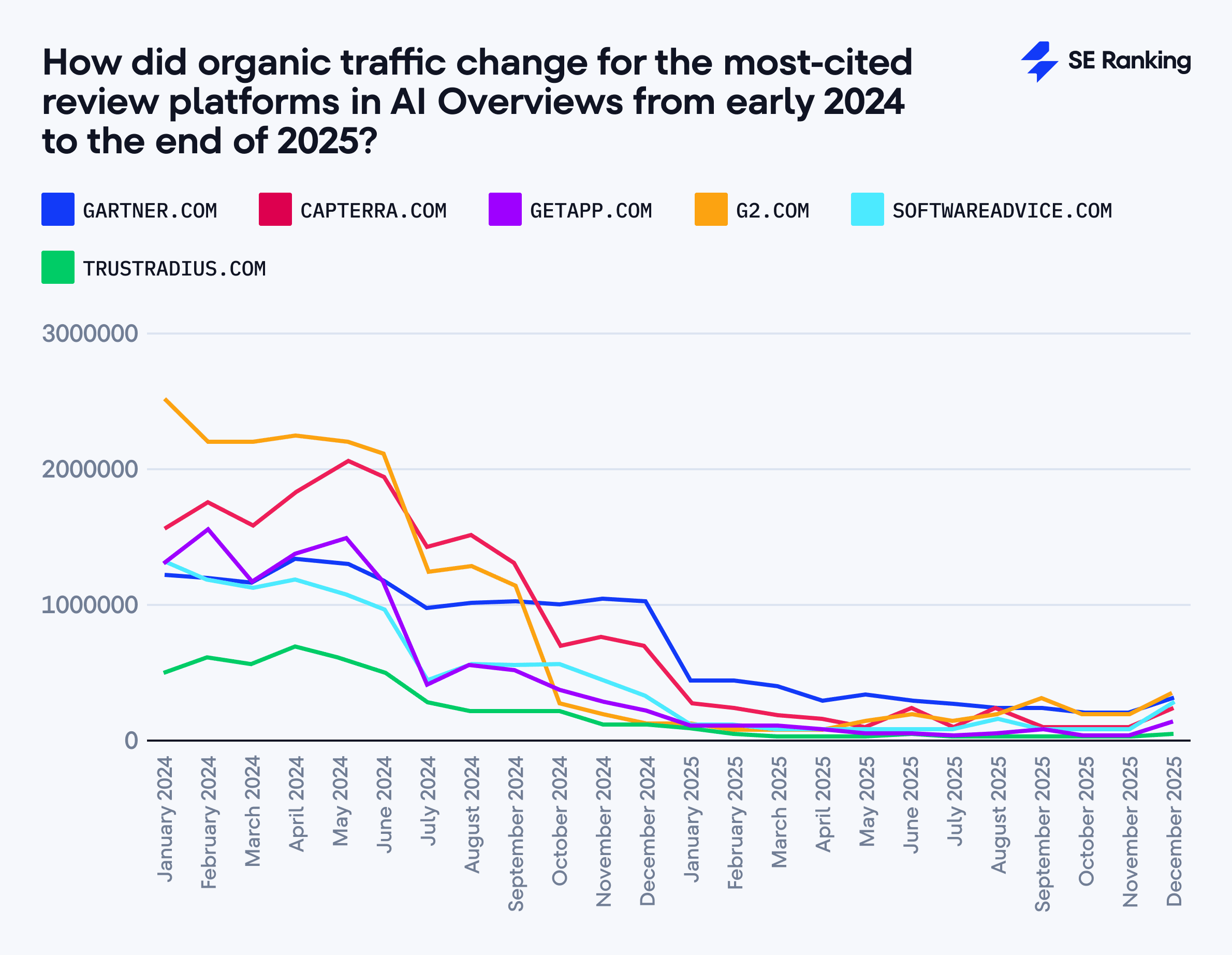

To begin with, almost all review platforms saw a sharp traffic decline in 2025, following smaller declines throughout 2024.

Even the five platforms most cited in AI Overviews (Gartner Peer Insights, G2, Capterra, Software Advice, and TrustRadius) all saw dramatic organic traffic declines:

- G2: From ~2.56M visits in January 2024 to ~397K in December 2025, an 84.5% drop. The worst month-over-month decline occurred in January 2025 (over 70%).

- Gartner Peer Insights: Starting at ~1.29M visits, it ended 2025 at ~304K, losing 76.5% of traffic. Even though its percentage drop was the smallest among the top five, the absolute loss was enormous.

- Capterra: Fell from ~1.63M to just ~179K visits, an 89% decrease, with a staggering 60% single-month drop at the start of 2025.

- Software Advice: Dropped 86.5% overall, from ~1.27M to ~172K visits.

- TrustRadius: Suffered the harshest decline, falling 92.2%, ending 2025 with less than 8% of its early 2024 traffic.

Even platforms with a moderate AI Overview presence experienced huge declines:

- GetApp: -89% (from 1.36M → 149K)

- Clutch: -87% (from 1.78M → 231K)
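The percentage declines above follow directly from the start and end traffic figures. This sketch recomputes them from the rounded values quoted in the text; because the inputs are rounded, a result can differ from the reported figure by about a tenth of a point (Gartner Peer Insights comes out at 76.4% rather than 76.5%).

```python
# Approximate starting and ending traffic figures quoted in the text
# (rounded, e.g. "~2.56M" is entered as 2,560,000).
traffic = {
    "G2": (2_560_000, 397_000),
    "Gartner Peer Insights": (1_290_000, 304_000),
    "Capterra": (1_630_000, 179_000),
    "Software Advice": (1_270_000, 172_000),
    "GetApp": (1_360_000, 149_000),
    "Clutch": (1_780_000, 231_000),
}

def pct_decline(start: int, end: int) -> float:
    """Percentage drop from start to end: (start - end) / start * 100."""
    return 100 * (start - end) / start

for platform, (start, end) in traffic.items():
    print(f"{platform}: -{pct_decline(start, end):.1f}%")
```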

If you’re wondering whether your platform (or others in your niche) is experiencing the same declines, SE Ranking’s Competitive Research Tool can help you spot those organic visibility trends. It also lets you track keyword performance, search volumes, CPC, new and lost keywords, and even country-specific search results.

Yelp presents an interesting example.

Although it appeared in AI Overviews only 165 times (less than 1% of citations), it received 300 brand mentions (accounting for 14.56% of all review platform mentions and ranking third in that metric).

Yet even this recognition didn’t help its traffic. After peaking at about 867 million visits in November 2024, it fell to roughly 122 million by December 2025 (a 77% drop).

This shows that even being frequently mentioned by name in AI Overviews doesn’t protect a platform from broader drops in search traffic.

For a fast, simple look at your traffic patterns, you can check it out for free and get an instant feel for how things are moving.

What does this mean for review platforms?

Yes, organic traffic to review platforms is falling. Drastically. Gartner Peer Insights dropped 76.5%, G2 84.5%, Capterra 89%, and TrustRadius over 92% between early 2024 and the end of 2025. And the trend isn’t likely to reverse anytime soon. Zero-click searches are on the rise, and users increasingly get their answers directly from AI Overviews without visiting the original site.

But traffic alone doesn’t tell the full story. Review platforms are still being cited extensively by AI systems. In our dataset, Gartner Peer Insights, G2, and Capterra remain in the top five most cited sources overall. LLMs continue to rely on them for structured product info, user reviews, pricing, feature comparisons, and even pros/cons summaries.

In practice, this means that review platforms still affect purchasing decisions even when fewer users click through.

What does this mean for SEOs?

Previously, SEOs focused almost entirely on optimizing their own websites. That’s no longer enough. Review platforms now influence visibility in AI search, even though users don’t always visit those platforms directly.

As a result, declining organic traffic to review sites doesn’t mean they’ve lost value. They’re still being used, but increasingly through AI systems rather than traditional searches.

So, what should businesses and SEOs do in this transitional period?

- Maintain listings on major platforms. Gartner Peer Insights, G2, and Capterra still dominate AI citations. If your product isn’t listed (or the listing is weak), AI Overviews will likely pull from competitors instead. So, make sure your listings are complete, accurate, and structured (features, pricing, reviews, and comparisons all matter).

- Prepare for new metrics. Traditional SEO metrics like traffic and impressions may no longer tell the full story. Track brand mentions, AI citations, and visibility as new indicators of influence.

All in all, review platforms remain essential for product visibility. The way users interact with them is changing, but being present, structured, and AI-ready helps your product stay visible (even in a world of zero-click search).

Research methodology

To understand how review platforms appear in AI Overviews, we ran an analysis using 30,000 keywords. We split them evenly into three categories of intent:

- Review-focused queries – keywords that included terms like review, reviews, ratings, and so on.

- Listing-style queries – keywords with phrases like best or top.

- General software queries – terms like tools or software without any explicit review intent.

This research was a one-time check, capturing the state of AI Overviews in the US on December 1, 2025. Out of the 30,000 keywords, AI Overviews appeared for 22,729 queries, which became the foundation for our deeper analysis.

We examined 23 major review platforms, including Gartner Peer Insights, Capterra, GetApp, G2, Software Advice, TrustRadius, SourceForge, Slashdot, SiteJabber, Clutch, GoodFirms, FeaturedCustomers, PeerSpot, Serchen, Product Hunt, AlternativeTo, Crozdesk, SaaSHub, TechnologyAdvice, FinancesOnline, Business Software, SoftwareSuggest, and Yelp.

To get a clear picture, we looked at three types of presence in AI Overviews:

- Block citations – links are listed in a separate Sources section on the right (or at the bottom) of the AI Overview.

- Inline citations – links embedded directly in the text as clickable references.

- Textual mentions – cases where the platform’s name is referenced in the AI Overview text itself (for example, “according to G2” or “Capterra reviews show”), even if no link is provided.

This approach let us see not just whether platforms were cited, but how Google chooses to present them to users (as background sources, highlighted references, or just name-dropped for context).
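Given an AI Overview's text, its inline links, and its sources-block links, the three presence types can be detected with domain and name matching. The sketch below is hypothetical: the platform-to-domain mapping, the `presence` helper, and the matching rules (exact domain match, case-sensitive whole-word name match) are assumptions, not the study's published method.

```python
import re
from urllib.parse import urlparse

# Hypothetical mapping of platform names to domains (assumption).
PLATFORMS = {
    "G2": "g2.com",
    "Capterra": "capterra.com",
    "Yelp": "yelp.com",
}

def domain(url: str) -> str:
    """Return the URL's host in lowercase, without a leading 'www.'."""
    host = urlparse(url).netloc.lower()
    return host[4:] if host.startswith("www.") else host

def presence(text: str, inline_urls: list[str], block_urls: list[str]) -> dict:
    """Return, per platform, which presence types occur in one AI Overview."""
    result = {}
    for name, dom in PLATFORMS.items():
        types = set()
        if any(domain(u) == dom for u in block_urls):
            types.add("block")
        if any(domain(u) == dom for u in inline_urls):
            types.add("inline")
        # Name appears in the AI text, whether or not it is also linked.
        if re.search(rf"\b{re.escape(name)}\b", text):
            types.add("textual")
        if types:
            result[name] = types
    return result

aio_text = "According to Yelp reviews, the shop is popular; G2 users praise support."
print(presence(
    aio_text,
    inline_urls=["https://www.g2.com/products/x/reviews"],
    block_urls=["https://www.capterra.com/p/123/x/", "https://example.com/blog"],
))
```

In this example, Yelp would count as a textual mention without a link, since it appears only by name.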

Disclaimer: While this study provides a detailed snapshot of AI Overviews at a specific point in time, other interpretations or analyses are possible. Different keyword sets, timeframes, or methodological choices could lead to slightly different insights.

Final thoughts

Our research shows that review platforms show up in AI Overviews enough to matter, but they rarely dominate.

Google usually leans on a handful of big names like Gartner Peer Insights, G2, and Capterra, while smaller players get a spotlight only when their content is particularly relevant.

Still, AI search is changing fast, and LLMs are evolving all the time. We’ll keep tracking these shifts, so stay tuned!