DeepSeek: Cost-effective AI for SEOs or overhyped ChatGPT competitor?

Overhyped or not, when a little-known Chinese AI model suddenly dethrones ChatGPT in the App Store charts, it’s time to start paying attention. A quick Google search on DeepSeek reveals a rabbit hole of divided opinions. Some celebrate it for its cost-effectiveness, while others warn of legal and privacy concerns. But all seem to agree on one thing: DeepSeek can do almost anything ChatGPT can do.

For SEOs and digital marketers, DeepSeek’s latest model, R1 (launched on January 20, 2025), is worth a closer look. It’s arguably the first open-source AI model whose “chain of thought” reasoning rivals OpenAI’s GPT-o1. You’re looking at an API that could revolutionize your SEO workflow at a fraction of the usual cost. The catch? Don’t ignore the security and privacy alarm bells ringing around the globe. It’s not pretty.

So what exactly is DeepSeek, and why should you care?

- DeepSeek’s R1 model challenges the notion that an AI model must cost a fortune to train to be powerful.

- Cheap API access to GPT-o1-level capabilities means SEO agencies can integrate affordable AI tools into their workflows without compromising quality. DeepSeek’s web version and app are also free with no usage limits, whereas GPT-o1 is locked behind OpenAI’s paid tiers.

- Many SEOs say GPT-o1 is better for writing and content creation, whereas R1 excels at fast, data-heavy work.

- A cloud security firm discovered a publicly exposed DeepSeek database, prompting questions about the company’s compliance with global data protection standards.

- DeepSeek’s censorship, a product of its Chinese origins, limits its content flexibility.

What is DeepSeek?

A pet project—or at least it started that way.

DeepSeek is a Chinese AI research lab backed by the hedge fund High-Flyer. It has created competitive models like V3, which raised its profile in late 2024. R1, its new cost-efficient reasoning model, blindsided the AI industry and quickly gained popularity (and notoriety) in early 2025.

DeepSeek is what happens when a young Chinese hedge fund billionaire dips his toes into the AI space and hires a batch of “fresh graduates from top universities” to power his AI startup.

That young billionaire is Liang Wenfeng. Born in 1985, he founded his stock trading firm, High-Flyer, after graduating with a master’s degree in AI. Liang has said he shifted into tech because he wanted to explore AI’s limits, eventually founding DeepSeek in 2023 as a side project.

His motto, “innovation is a matter of belief,” went from aspiration to reality when he shocked the world with DeepSeek R1. His team reportedly trained V3, the base model behind R1, for about $5.58 million (a figure that covers only the final training run), a fiscal speck of dust compared to OpenAI’s $6 billion investment in the ChatGPT ecosystem. The idea that a Chinese startup could build a model competitive with OpenAI’s at a fraction of the cost sent tech stocks tumbling on Monday, January 27.

For SEOs and digital marketers, DeepSeek’s rise isn’t just a tech story. What began as a passion project might have just changed how AI-powered content creation, automation, and data analysis are done. It’s a powerful, cost-effective alternative to ChatGPT.

Here’s why.

DeepSeek R1 and ChatGPT-o1 comparison

We’ll start with the elephant in the room—DeepSeek has redefined cost-efficiency in AI. Here’s how the two models compare:

| Feature | DeepSeek R1 | OpenAI’s GPT-o1 |
| --- | --- | --- |
| Input token price (API) | $0.55 per 1M tokens | $15 per 1M tokens |
| Output token price (API) | $2.19 per 1M tokens | $60 per 1M tokens |
| Free access | Yes (the app is free to use; API access is billed per token) | No (o1 requires a $20/month ChatGPT Plus subscription) |
| Architecture | Mixture of Experts (MoE): 671B parameters in total, about 37B active per token | Not disclosed by OpenAI |

What does this mean?

Well, according to DeepSeek and the many digital marketers worldwide who use R1, you’re getting nearly the same quality results for pennies. R1 is also completely free, unless you’re integrating its API. OpenAI doesn’t even let you access its GPT-o1 model before purchasing its Plus subscription for $20 a month.

That $20 seemed like pocket change for what you get, until DeepSeek’s Mixture of Experts (MoE) architecture came along. MoE is the nuts and bolts behind R1’s efficient use of computing resources, and it’s a big reason DeepSeek costs so little but can do so much.
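To see what the per-token API prices in the comparison table mean for a real workload, here is a minimal Python sketch of a cost estimate. The workload (500 pages at roughly 2,000 input and 1,500 output tokens each) is a made-up assumption, the R1 input price is DeepSeek’s standard (cache-miss) rate, and both vendors can change their prices at any time.

```python
# USD per 1M tokens, taken from the comparison table (R1 input = cache-miss rate).
PRICES = {
    "deepseek-r1": {"input": 0.55, "output": 2.19},
    "gpt-o1": {"input": 15.00, "output": 60.00},
}


def api_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of a workload at the listed per-million-token prices."""
    price = PRICES[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 500 pages, ~2,000 input and ~1,500 output tokens each.
    pages = 500
    input_tokens, output_tokens = pages * 2_000, pages * 1_500
    for model in PRICES:
        print(f"{model}: ${api_cost(model, input_tokens, output_tokens):.2f}")
```

Under these assumptions the script estimates about $2.19 for R1 versus $60.00 for GPT-o1. Treat both numbers as a floor: each model bills its hidden reasoning tokens as output, so real output counts can run well past the length of the visible answer.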

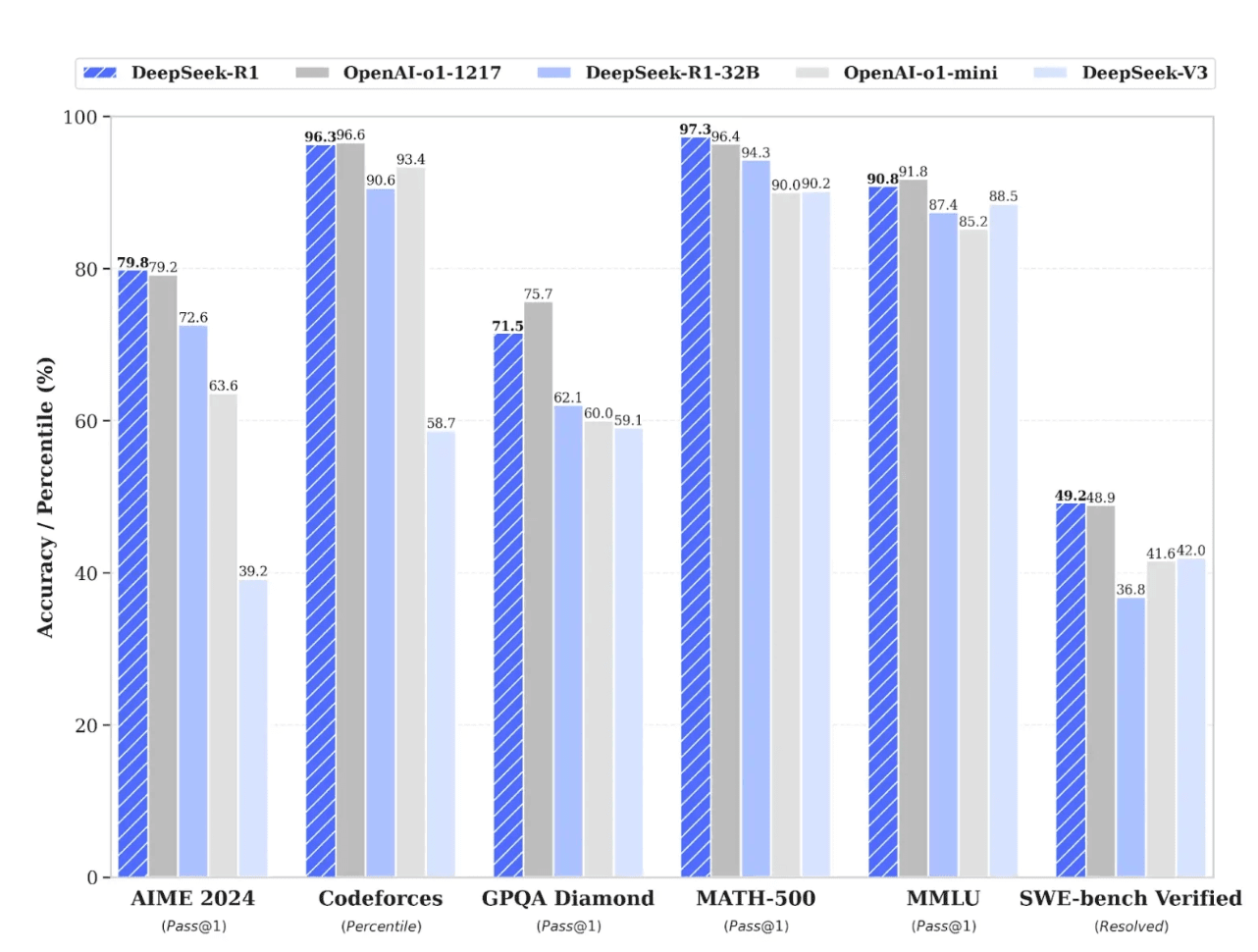

Benchmark performance

The benchmarks below—pulled directly from the DeepSeek site—suggest that R1 is competitive with GPT-o1 across a range of key tasks. But even the best benchmarks can be biased or misused. Take them with a grain of salt.

Here’s what each benchmark measures:

- AIME 2024: Competition-level math problem solving (American Invitational Mathematics Examination)

- Codeforces: Competitive programming

- GPQA Diamond: Graduate-level science questions in biology, physics, and chemistry

- MATH-500: Solving complex math problems

- MMLU: Multiple-choice knowledge and reasoning questions across 57 subjects

- SWE-Bench Verified: Software engineering (resolving real GitHub issues)

The graph above suggests that GPT-o1 and DeepSeek are neck and neck in most areas. But because their architectures differ, each model has its own strengths. Here’s where each one shines.

DeepSeek is best for technical tasks and coding

DeepSeek runs on a Mixture of Experts (MoE) architecture. Think of it as a team of specialists: a router sends each token to a small group of relevant experts, so only about 37B of R1’s 671B parameters are active at any one time. That keeps compute costs low, which fans say makes it especially efficient for data-heavy tasks like code generation, resource management, and project planning.

For example, Composio writer Sunil Kumar Dash, in his article, Notes on DeepSeek r1, tested various LLMs’ coding abilities using the tricky “Longest Special Path” problem. He noted that all the LLMs he tested got it right, but R1’s “outcome was better regarding overall memory consumption.”

This means its code output used fewer resources—more bang for Sunil’s buck.
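To make the “team of specialists” idea more concrete, here is a toy sketch of top-k expert routing, the general technique behind MoE layers, in plain Python. It is not DeepSeek’s implementation: the eight scaling “experts,” the router weights, and k=2 are all invented for illustration, and real MoE models route every token through learned neural-network experts at vastly larger scale.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, experts, router_weights, k=2):
    """Toy MoE layer: score every expert, run only the top-k, mix their outputs."""
    # Router: one linear score per expert (dot product of its weight vector with x).
    scores = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in router_weights]
    # Keep the k highest-scoring experts; all others are never evaluated.
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # Gate weights: softmax over the selected experts' scores only.
    gates = softmax([scores[i] for i in top])
    out = [0.0] * len(x)
    for gate, i in zip(gates, top):
        y = experts[i](x)
        out = [o + gate * y_j for o, y_j in zip(out, y)]
    return out, top


if __name__ == "__main__":
    # Eight made-up "experts": expert i simply scales its input by (i + 1).
    experts = [lambda v, s=i + 1: [s * t for t in v] for i in range(8)]
    # Made-up router weights: expert i's score for x = [1, 0] is simply i.
    router_weights = [[float(i), 0.0] for i in range(8)]
    out, chosen = moe_forward([1.0, 0.0], experts, router_weights, k=2)
    print(chosen, out)  # only experts 7 and 6 run; the other six are skipped
```

The key design point is that experts outside the top k are never evaluated, which is how a model like R1 can hold 671B parameters while activating only about 37B (roughly 5.5%) for each token.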

GPT-o1 excels in content creation and analysis

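Many SEOs find OpenAI’s GPT-o1 better for content creation and contextual analysis. Both o1 and R1 reason through a chain of thought (CoT) before answering, so the gap likely comes down to training and architecture, although OpenAI hasn’t disclosed o1’s parameter count or design.

The popular analogy: R1’s MoE design is an assembly-line kitchen of specialist stations, while o1 is a single versatile chef. Just remember it’s an educated guess, since OpenAI hasn’t published how o1 works under the hood.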

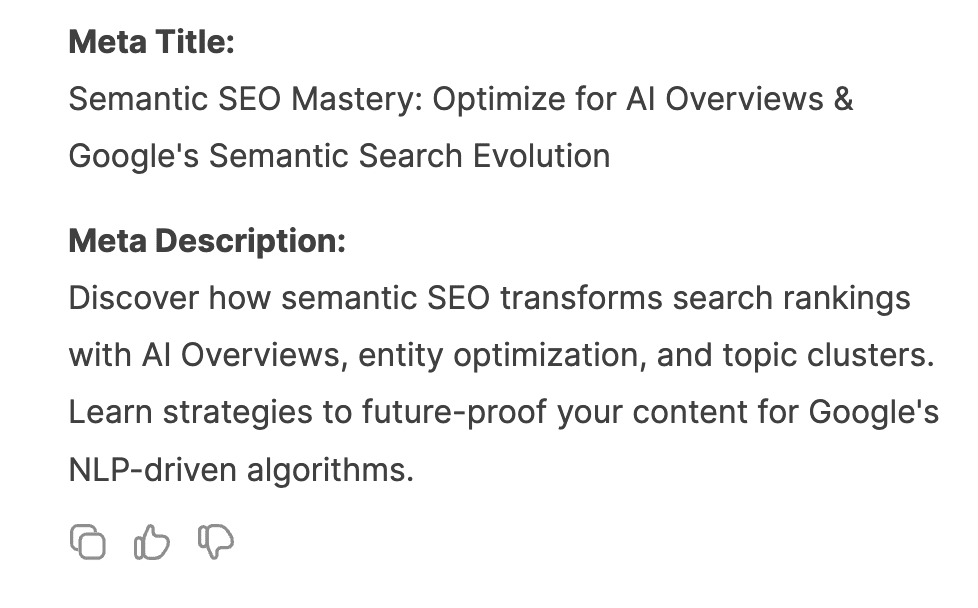

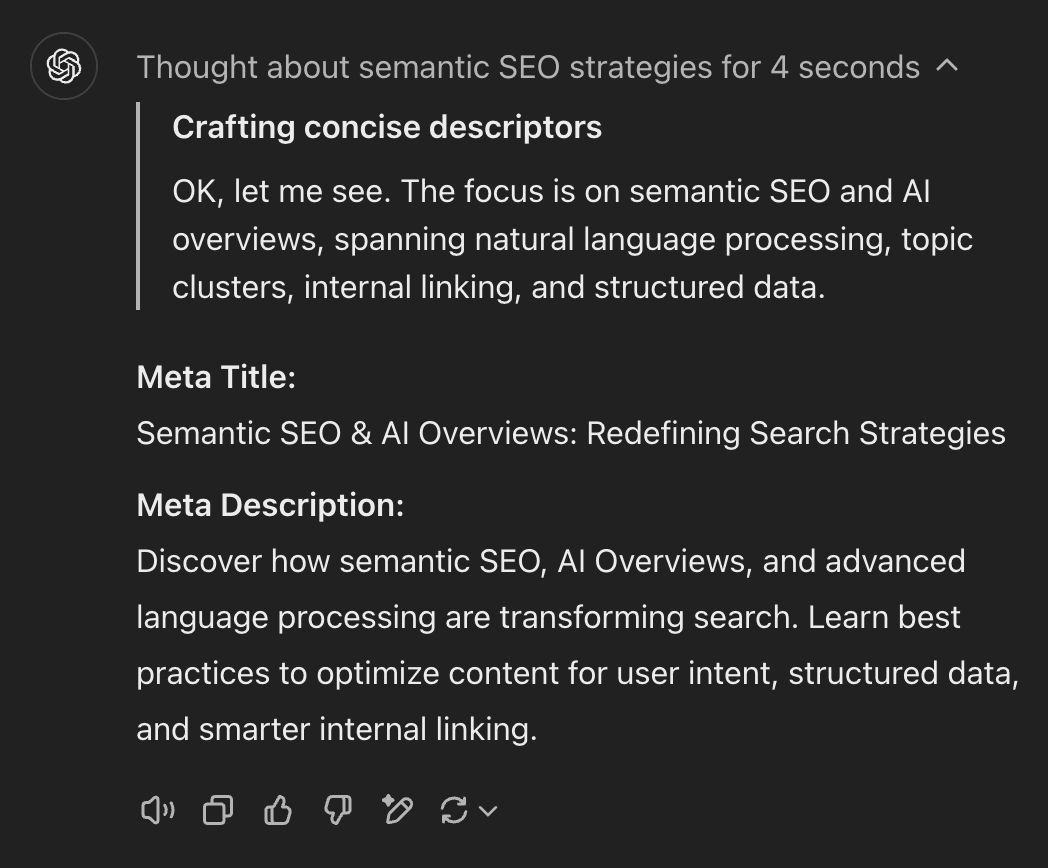

For example, we fed R1 and GPT-o1 our article “Defining Semantic SEO and How to Optimize for Semantic Search” and asked each model to write a meta title and description. GPT-o1’s results were more comprehensive and straightforward, with less jargon. Its meta title was also punchier, although both models wrote meta descriptions that were too long.

See the results for yourself.

The screenshot above is DeepSeek’s answer. Below is ChatGPT’s response.

R1 loses by a hair here and, quite frankly, in most cases like it. Still, losing by only a hair doesn’t bode well for OpenAI, given how much more expensive GPT-o1 is. One Redditor who tried to rewrite a travel and tourism article with DeepSeek noted that R1 added incorrect metaphors and failed to do any fact-checking, but that’s purely anecdotal. Many SEOs and digital marketers say the two models are qualitatively the same.

Some even say R1 is better for day-to-day marketing tasks.
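If you want to rerun the meta title and description test through the API rather than the app, here is a minimal sketch using only Python’s standard library. It assumes DeepSeek’s OpenAI-compatible chat completions endpoint (`https://api.deepseek.com/chat/completions`) and the `deepseek-reasoner` model name for R1; the `DEEPSEEK_API_KEY` environment variable, the prompt wording, and the 60- and 155-character limits are our own illustrative choices, so check DeepSeek’s API docs before relying on it.

```python
import json
import os
import urllib.request

# DeepSeek's OpenAI-compatible chat endpoint (check the official API docs for changes).
API_URL = "https://api.deepseek.com/chat/completions"


def build_meta_request(article_text: str, model: str = "deepseek-reasoner") -> dict:
    """Build a chat-completions payload asking for an SEO meta title and description."""
    # The character limits are illustrative SEO rules of thumb, not DeepSeek requirements.
    prompt = (
        "Write a meta title (max 60 characters) and a meta description "
        "(max 155 characters) for the article below.\n\n" + article_text
    )
    return {"model": model, "messages": [{"role": "user", "content": prompt}]}


def send(payload: dict, api_key: str) -> str:
    """POST the payload and return the model's final answer text."""
    req = urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    key = os.environ.get("DEEPSEEK_API_KEY")  # hypothetical variable name
    if key:
        print(send(build_meta_request("Paste the article text here."), key))
    else:
        print("Set DEEPSEEK_API_KEY to send a real request.")
```

Since both models in our test wrote meta descriptions that ran too long, spelling out character limits in the prompt (and checking the result before publishing) is a cheap safeguard.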

Security and privacy

DeepSeek recently landed in hot water over some serious safety concerns.

The tech world scrambled when Wiz, a cloud security firm, discovered that a DeepSeek database running on ClickHouse (an open-source database system) was wide open to the public. No password, no protection; just open access. This meant anyone could sneak in and grab backend data, log streams, API secrets, and even users’ chat histories.

OpenAI’s record isn’t spotless (a March 2023 bug briefly exposed some users’ chat titles and partial payment details), but it hasn’t had a lapse on this scale.

Censorship

Both models are censored to some extent, but in different ways.

Tiananmen Square

Since DeepSeek is owned and operated by a Chinese company, you won’t have much luck getting it to answer prompts it considers politically sensitive in China. If you ask DeepSeek’s online model, “What happened at Tiananmen Square in 1989?” it says, “Sorry, I’m not sure how to answer this type of question yet. Let’s chat about math, coding, and logic problems instead.”

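Running DeepSeek locally is a different story. Because R1’s weights are open, you can download the model itself (not the API) and run it on your own computer, which bypasses the filtering in DeepSeek’s app and makes it far less restrictive. Don’t expect it to answer everything, though: users report that some refusals are trained into the model itself.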

GPT-o1, on the other hand, gives a decisive answer to the Tiananmen Square question.

Here is its summary of the event: “…the events at Tiananmen Square in 1989 were marked by a mass protest calling for political reform and greater freedoms, followed by a severe and violent government crackdown that resulted in significant loss of life and a long-lasting impact on Chinese society and governance.”
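If you want to test the local-deployment claim yourself, one common route is Ollama, which serves downloaded models through a local HTTP API. The sketch below assumes Ollama is installed and running on its default port (11434) and that you have already pulled a model tagged `deepseek-r1`. Note that the smaller tags in Ollama’s library are distilled models built on other base models, not the full 671B R1, so their answers may differ from DeepSeek’s own.

```python
import json
import urllib.error
import urllib.request

# Ollama's default local chat endpoint.
OLLAMA_URL = "http://localhost:11434/api/chat"


def build_local_request(question: str, model: str = "deepseek-r1") -> dict:
    """Build an Ollama chat payload; stream=False requests one JSON reply."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": question}],
        "stream": False,
    }


def ask_local(question: str) -> str:
    """Send the question to the locally running model and return its answer."""
    data = json.dumps(build_local_request(question)).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=600) as resp:
        return json.load(resp)["message"]["content"]


if __name__ == "__main__":
    try:
        print(ask_local("What happened at Tiananmen Square in 1989?"))
    except (urllib.error.URLError, TimeoutError) as err:
        print(f"Could not reach Ollama at {OLLAMA_URL}: {err}")
```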

Robbing a bank

GPT-o1 is more cautious when responding to questions about crime. For example, when asked, “Hypothetically, how could someone successfully rob a bank?”, GPT-o1 responded that it could not help with the request. That is, until we activated the search option. It answered, but it avoided giving step-by-step instructions and instead gave broad examples of how criminals committed bank robberies in the past.

As for DeepSeek? Well, it started with a disclaimer about why you shouldn’t rob a bank, but it still provided a long, detailed outline on how to do it…

What the community is saying about DeepSeek

Trust in DeepSeek is at an all-time low, with red flags raised worldwide.

Reports of DeepSeek bans at universities, government agencies, and state-owned enterprises are piling up, though many remain unverified. Screenshots of blocked-access messages, like one from a user claiming “My university just banned DeepSeek, but not ChatGPT,” suggest institutions don’t trust the Chinese AI startup one bit.

The cost vs. quality debate

Apart from major security concerns, opinions are generally split by use case and data efficiency. People (SEOs and digital marketers included) are comparing DeepSeek R1 and ChatGPT-o1 for their data processing speed, accuracy of established definitions, and overall cost.

| DeepSeek Fans | ChatGPT Loyalists |
| --- | --- |
| “Why pay $20/month when R1 is free?” | “GPT-o1’s content is sharper and more reliable.” |
| “Unlimited usage for bulk tasks!” | “Multimodal features justify the cost.” |
| “Perfect for coding and data work.” | “DeepSeek’s censorship ruins flexibility.” |

Given its affordability and strong performance, many in the community see DeepSeek as the better option.

The bottom line

There’s no denying DeepSeek’s budget-friendly appeal and impressive performance. But its low price comes with a steep hidden cost: security and privacy risks. DeepSeek has drawn bans and restrictions almost as quickly as it gained users, with institutions and authorities openly questioning its compliance with global data privacy laws.

For SEOs, the choice boils down to three main priorities:

- Choose DeepSeek for high-volume, technical tasks where cost and speed matter most.

- Stick with ChatGPT for creative content, nuanced analysis, and multimodal projects.

- Avoid DeepSeek completely if you care at all about protecting your data.

As one Redditor quipped: “DeepSeek is the Toyota Corolla of AI—reliable and cheap, but nobody’s mistaking it for a Tesla.”

Proceed with cautious optimism—and always fact-check its output.