ChatGPT is twice as likely to lead users to broken links as Google’s AI Overviews

We’ve all been there: clicking a ChatGPT link only to find a 404 page staring back at you.

Since ChatGPT’s public release, the SEO and developer communities have been openly skeptical of its ability to cite real, working URLs. Early feedback was brutal. Users didn’t just report a few broken links; some reported that every link was broken.

One Reddit user summed it up perfectly back in 2023:

“Why is it that when I ask ChatGPT to refer me to articles… they always come back with a 404 error? Every single one. It has never worked once.”

Similar complaints appeared on OpenAI’s own developer forums. One user tested the URLs in a ChatGPT answer that pointed to major publishers (BBC, CNN, Reuters, The Guardian), only to find that they led to non-existent pages.

At the time, the conclusion seemed obvious: ChatGPT was hallucinating URLs at scale.

Fast-forward to 2025, and the conversation has shifted. Instead of asking “Is this link broken?”, the more interesting question is:

Has this problem actually improved, or did it just get quieter?

We decided to answer that question with data.


404 errors are the primary broken-link problem in ChatGPT citations.

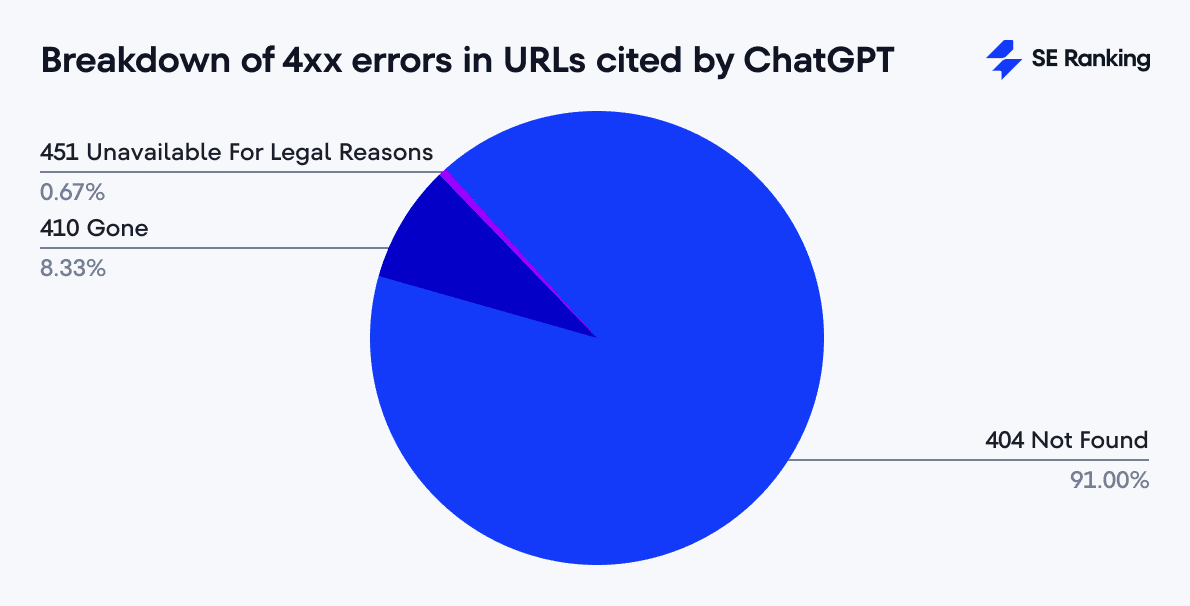

1.34% of URLs cited by ChatGPT have client-side errors (4xx). Of those errors, most (91%) are 404 Not Found.


ChatGPT sends users to broken pages more often than any of Google’s systems.

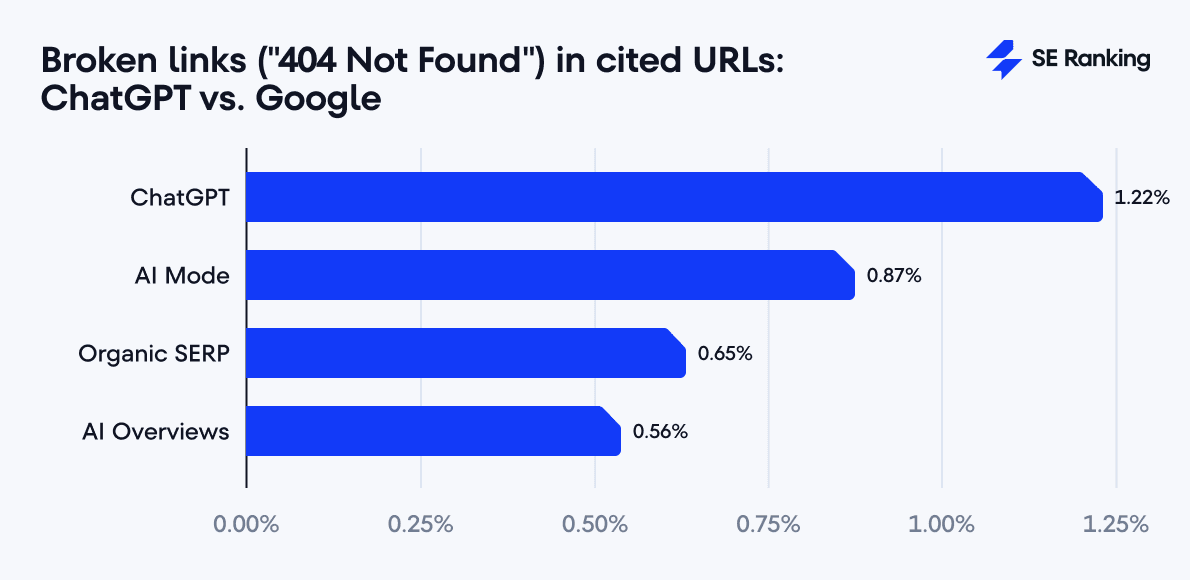

1.22% of ChatGPT citations return 404 errors. Among Google systems, AI Mode has the highest share of broken pages at 0.87%, followed by regular organic SERP at 0.65%, and AI Overviews at 0.56%.


Other 4xx errors exist, but they are not what users typically experience as “broken links.”

Codes like 410 Gone (8.33% of client errors) and 451 Unavailable for Legal Reasons (0.67%) are rare and usually reflect intentional content removal or legal restrictions (not hallucinated or incorrect URLs).


Google’s systems are roughly 3–7 times more likely than ChatGPT to send users to pages through redirects.

Only 0.79% of URLs cited by ChatGPT are redirects, compared with 5.75% in Google’s organic search, 5.45% in AI Mode, and 2.85% in AI Overviews. Notably, the redirects ChatGPT cites are typically permanent (301/308), while Google’s organic results often include temporary ones (302/307) as well.

Why hallucinated URLs are more than just annoying

Hallucinated (or simply broken) links aren’t just a minor inconvenience. In the context of AI search, they create very real downstream problems:

- Users lose trust in the answer itself

- Research workflows break

- Commercial intent gets interrupted

- Phantom URLs begin accumulating backlinks and traffic

In March 2025, our Head of SEO, Anastasia Kotsiubynska, noticed something unusual in Google Analytics: ChatGPT was sending traffic to URLs that didn’t exist on our site.

Not outdated pages. Not misconfigured redirects. Completely fabricated URLs.

Over three months, ChatGPT sent traffic to around 70 URLs that returned 404 errors.

- Most of these URLs had 1–3 visits, but some received 20+ sessions.

- Around 20 of those non-existent URLs even had backlinks, meaning other sites were linking to pages that don’t exist.

Shortly after, SEO specialist Dan Hinckley expanded this analysis to a much larger dataset (over 18,000 landing pages receiving ChatGPT traffic), and his findings highlighted a persistent problem:

- 3.35% of visits from ChatGPT landed on 404 pages.

At a small scale, that sounds manageable. On a global scale, it isn’t.

In July 2025, OpenAI told Axios that ChatGPT now handles 2.5 billion requests per day.

Even if only a tiny fraction of those interactions include cited links (and only a small percentage of those links break), that still adds up to millions of broken link experiences every day. At that scale, “edge cases” stop being edge cases and turn into a measurable source of friction, lost trust, and missed opportunities for both users and businesses.
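To see how quickly a small error rate compounds, here is a back-of-the-envelope sketch. Only the 2.5 billion daily requests and the 1.22% 404 rate come from the figures in this article; the share of responses that lead to a link click and the clicks per response are hypothetical placeholders, so swap in your own assumptions.

```python
# Back-of-the-envelope estimate of daily clicks that land on 404 pages.
# DAILY_REQUESTS and RATE_404 come from the article; share_with_links and
# clicks_per_response are hypothetical assumptions, not measured values.

DAILY_REQUESTS = 2_500_000_000  # reported to Axios, July 2025
RATE_404 = 0.0122               # share of ChatGPT-cited URLs returning 404


def daily_broken_clicks(share_with_links: float, clicks_per_response: float) -> float:
    """Expected number of link clicks per day that end on a 404 page."""
    link_clicks = DAILY_REQUESTS * share_with_links * clicks_per_response
    return link_clicks * RATE_404


# Hypothetical scenarios: 1%, 5%, and 10% of responses lead to one link click.
for share in (0.01, 0.05, 0.10):
    estimate = daily_broken_clicks(share, clicks_per_response=1.0)
    print(f"{share:.0%} of responses with a clicked link -> ~{estimate:,.0f} 404 clicks/day")
```

With these placeholder inputs, the estimate ranges from about 305,000 to about 3 million broken-link clicks per day; the point is the order of magnitude, not a precise count.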

Google’s John Mueller also publicly weighed in on the issue. He predicted that hallucinated links might increase in the short term as AI adoption grows, before gradually declining as systems become better grounded in real URLs.

Which brings us back to the core question:

Has the number of broken links in AI search actually gone down?

Broken links (4xx) in ChatGPT responses

In practice, when ChatGPT sends users to a broken page, it’s almost always the same: 404 Not Found.

But let’s first break down how many working URLs ChatGPT actually delivers, before focusing on where and how things go wrong.

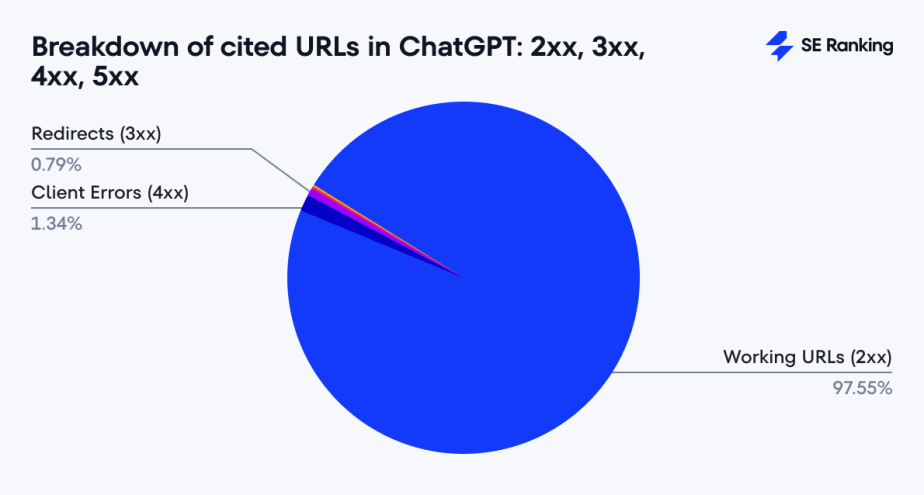

We analyzed 145,463 URLs cited by ChatGPT and found that the vast majority (97.55%) returned a 200 status code, meaning the pages loaded successfully.

- The next most common category was client-side errors (4xx codes), which accounted for 1.34% of URLs. These errors indicate that the page can’t be accessed by the user, usually because it doesn’t exist or access is blocked.

- Redirects (3xx codes) made up 0.79%, meaning a small portion of links required an extra step to reach their final destination.

- Server-side errors (5xx codes) were rare, accounting for 0.16% of cases.

Of the URLs that returned client errors (4xx), 91% were “404 Not Found”, accounting for 1.22% of all URLs.

In other words, when ChatGPT gets a link wrong, it’s usually not because the page is blocked, restricted, or temporarily unavailable. It’s because the page simply doesn’t exist.
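These percentages follow directly from raw status-code counts. Here is a minimal sketch of that aggregation, assuming the check results are available as a mapping from status code to number of URLs; the function names are illustrative, not part of the tooling used in this study.

```python
from collections import Counter


def status_class(code: int) -> str:
    """Map an HTTP status code to its class, e.g. 404 -> '4xx'."""
    return f"{code // 100}xx"


def client_error_breakdown(codes: Counter, total_urls: int) -> dict:
    """Summarize 4xx responses as shares of all URLs and of client errors."""
    client = {c: n for c, n in codes.items() if status_class(c) == "4xx"}
    client_total = sum(client.values())
    return {
        "client_error_rate": 100 * client_total / total_urls,
        "rate_404": 100 * client.get(404, 0) / total_urls,
        "share_of_client_errors": {
            c: 100 * n / client_total for c, n in sorted(client.items())
        },
    }


# 4xx counts for ChatGPT-cited URLs, taken from the figures in this study.
chatgpt_4xx = Counter({404: 1_770, 410: 162, 451: 13})
summary = client_error_breakdown(chatgpt_4xx, total_urls=145_463)

print(f"Client errors: {summary['client_error_rate']:.2f}% of URLs")
print(f"404s: {summary['rate_404']:.2f}% of URLs")
for code, share in summary["share_of_client_errors"].items():
    print(f"  {code}: {share:.2f}% of client errors")
```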

Digging deeper into all client errors (the 4xx codes), we saw a few interesting patterns:

- 410 “Gone”: 162 cases (8.33% of client errors). Unlike 404, 410 is intentional: webmasters return this code deliberately when content has been permanently removed.

- 451 “Unavailable for Legal Reasons”: 13 cases (0.67% of client errors). This code appears when content is blocked due to legal restrictions or court orders. Rare, but it shows that ChatGPT sometimes cites content that was deliberately restricted.

Together, these codes account for just under 9% of client-side errors among URLs cited by ChatGPT. They provide context, but they’re not what users mean when they say “ChatGPT sent me to a broken link.”

That experience is almost always a 404 page.

How Google’s AI and organic search compare

We ran the same analysis across Google’s AI Overviews (AIO), AI Mode (AIM), and traditional organic search (SERP). Here’s how all analyzed systems compare in terms of 404 rate within cited URLs:

- ChatGPT: 1.22%

- AI Mode: 0.87%

- Organic SERP: 0.65%

- AI Overviews: 0.56%

Based on our findings, URLs cited by ChatGPT show the highest 404 rate (1.22%) among the analyzed systems. At the same time, over 97% of ChatGPT’s cited URLs load successfully.

That doesn’t mean the problem is solved. But it does mean it has fundamentally changed in scale and severity.

In contrast, 404 errors are observed less often among URLs cited by Google’s systems overall. AI Overviews have the lowest 404 rate (0.56%), while AI Mode shows the highest rate among Google’s systems (0.87%). Even so, both remain below ChatGPT’s 1.22%.

Here’s a more complete breakdown of client-side errors in ChatGPT compared with Google’s organic search results and AI answers:

| Client error | ChatGPT | AI Overviews | AI Mode | Organic SERP |
|---|---|---|---|---|
| Total client errors (share of all URLs) | 1,945 (1.34%) | 2,787 (0.63%) | 4,876 (1.00%) | 3,204 (0.76%) |
| 404 Not Found | 1,770 (91%) | 2,447 (87.8%) | 4,199 (86.12%) | 2,729 (85.17%) |
| 410 Gone | 162 (8.33%) | 72 (2.58%) | 269 (5.52%) | – |
| 451 Unavailable For Legal Reasons | 13 (0.67%) | 186 (6.67%) | 243 (4.98%) | 305 (9.52%) |
| 418 I’m a Teapot | – | 82 (2.94%) | – | 170 (5.31%) |

Percentages for individual status codes are shares of each system’s total client errors.
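The 404 rates quoted earlier, and the “twice as likely” comparison with AI Overviews, can be reproduced from each system’s 404 count and the final dataset sizes listed in the methodology section. A short sketch:

```python
# 404 counts per system (from the client-error breakdown) and final URL counts
# after excluding 403 responses (from the methodology section).
NOT_FOUND = {"ChatGPT": 1_770, "AI Overviews": 2_447, "AI Mode": 4_199, "Organic SERP": 2_729}
TOTAL_URLS = {"ChatGPT": 145_463, "AI Overviews": 438_903, "AI Mode": 485_400, "Organic SERP": 422_814}

# 404 rate as a percentage of all cited URLs for each system.
rates = {system: 100 * NOT_FOUND[system] / TOTAL_URLS[system] for system in NOT_FOUND}

for system, rate in sorted(rates.items(), key=lambda item: item[1], reverse=True):
    print(f"{system:<13} {rate:.2f}% of cited URLs return 404")

ratio = rates["ChatGPT"] / rates["AI Overviews"]
print(f"ChatGPT vs. AI Overviews: {ratio:.1f}x")
```

The ratio comes out at roughly 2.2x, which is where the “twice as likely” framing comes from.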

Some interesting observations:

- 404 remains the most common client error among cited URLs. Across URLs cited by both ChatGPT and Google, the majority of observed 4xx responses correspond to missing pages.

- Other 4xx codes give extra context. For example, 410 and 451 usually mean a page was intentionally removed or restricted for legal reasons. 451 in particular shows up more often in URLs cited by Google (4.98–9.52% of client errors vs. 0.67% for ChatGPT), while 410 is actually more common among URLs cited by ChatGPT (8.33%). These differences likely reflect the underlying URL sets rather than explicit handling of such statuses.

- URLs cited by ChatGPT show slightly fewer types of rare codes. For example, 418 (“I’m a teapot”) responses appeared in URLs cited by AI Overviews and organic search but not in those cited by ChatGPT. However, these responses are likely artifacts of our verification process: some servers returned anti-bot status codes to our requests while still serving normal 200 responses to Googlebot.

Other errors in ChatGPT responses: Redirects (3xx) and server errors (5xx)

While most discussions around link quality focus on 404s and other client-side errors, there’s another category of issues that can affect user experience: redirects (3xx codes) and server errors (5xx codes). These issues might not be as obvious as broken links, but they still create friction for users.

Redirects (3xx)

Redirects are often overlooked because the page eventually loads, but that “eventually” comes at a cost. Each redirect adds:

- An additional server request

- Extra latency

- More points of potential failure

On mobile connections or slower networks, these small delays can become especially noticeable, frustrating users and potentially hurting engagement.

Here’s how redirect rates compare between URLs cited by ChatGPT and those cited by Google:

In other words, users who follow a URL cited by one of Google’s systems are roughly 3–7 times more likely to pass through a redirect than users who follow a URL cited by ChatGPT (2.85–5.75% vs. 0.79%).
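The “3–7 times” range comes straight from the redirect shares reported in this study. Dividing each Google system’s share by ChatGPT’s gives:

```python
# Share of cited URLs that returned a 3xx redirect, as reported in this study (%).
REDIRECT_SHARE = {"ChatGPT": 0.79, "Organic SERP": 5.75, "AI Mode": 5.45, "AI Overviews": 2.85}

baseline = REDIRECT_SHARE["ChatGPT"]

# How many times more often each Google system cites a redirecting URL.
multipliers = {
    system: share / baseline
    for system, share in REDIRECT_SHARE.items()
    if system != "ChatGPT"
}

for system, factor in multipliers.items():
    print(f"{system}: {factor:.1f}x ChatGPT's redirect rate")
```

That works out to roughly 3.6x for AI Overviews and 6.9x to 7.3x for AI Mode and organic search.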

Even more interesting is the type of redirects:

- Among cited sources, ChatGPT often includes URLs with permanent redirects (301/308), which indicate the page has moved permanently and is structurally stable.

- By contrast, redirects in Google’s organic results are dominated by temporary ones (302/307).

This suggests that ChatGPT tends to cite URLs that appear structurally “final” (even if it occasionally points users to the wrong path). From a practical standpoint, fewer redirects mean faster load times, less friction, and a smoother user journey.
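If you want to audit your own URLs the same way, the permanent/temporary distinction comes down to the status code. A minimal sketch, assuming you already have each URL’s status code; the grouping follows standard HTTP semantics, where 301 and 308 are permanent and 302, 303, and 307 are temporary (303 isn’t discussed above, but it is also a temporary redirect). The function names are illustrative.

```python
from collections import Counter

PERMANENT = {301, 308}
TEMPORARY = {302, 303, 307}
REDIRECT_CODES = PERMANENT | TEMPORARY


def redirect_type(code: int) -> str:
    """Label a status code as a permanent redirect, temporary redirect, or neither."""
    if code in PERMANENT:
        return "permanent"
    if code in TEMPORARY:
        return "temporary"
    return "not a redirect"


def redirect_mix(codes: list[int]) -> Counter:
    """Count permanent vs. temporary redirects among a list of status codes."""
    return Counter(redirect_type(c) for c in codes if c in REDIRECT_CODES)


# Hypothetical status codes for a handful of checked URLs.
print(redirect_mix([200, 301, 302, 307, 308, 404]))
```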

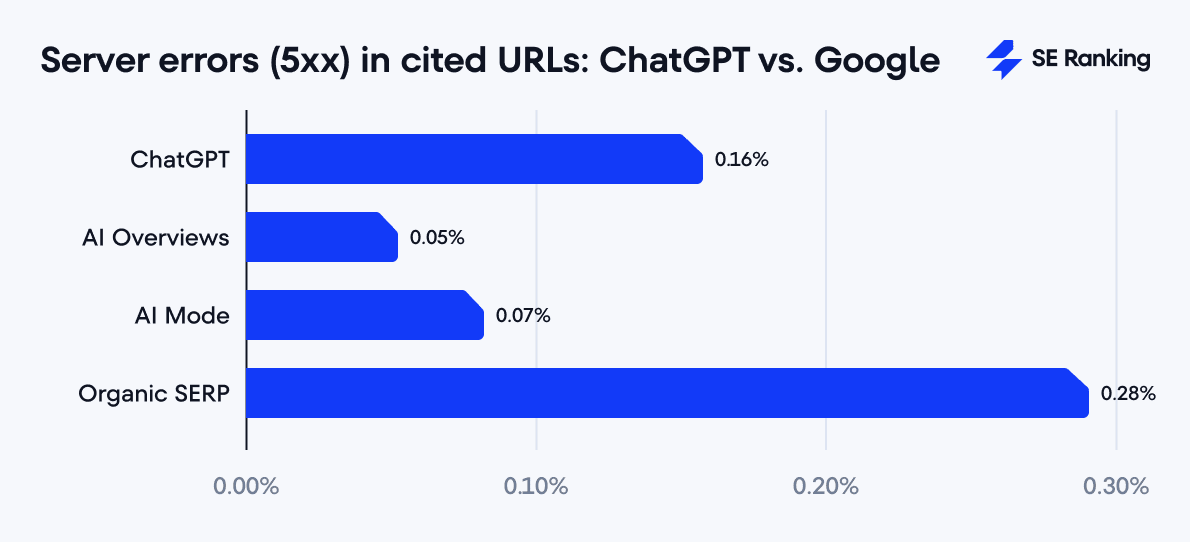

Server errors (5xx)

Server errors (5xx codes) represent the most serious failures, where the website itself cannot process the request. Here’s the breakdown of URLs cited across our analyzed systems:

Most of these errors were Cloudflare-related, including timeouts, SSL handshake failures, and gateway issues. Interestingly, organic search showed the highest server error rate, possibly because of a lag between indexing and validation (e.g., some sites may have been accessible at crawl time but became unavailable by the time we conducted our checks).
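Grouping 5xx responses by failure mode makes patterns like these easier to spot. A minimal sketch: the 52x codes are Cloudflare-specific (for example, 522 is a connection timeout and 525 a failed SSL handshake), 502 to 504 are standard HTTP codes, and the category names are hypothetical labels rather than any official taxonomy.

```python
from collections import Counter

# Illustrative grouping of 5xx codes by failure mode. 52x codes are
# Cloudflare-specific; 502-504 are standard HTTP codes. Category names are
# hypothetical labels for reporting, not official terminology.
FAILURE_MODES = {
    "timeout": {504, 522, 524},
    "tls/ssl": {525, 526},
    "gateway/origin": {502, 520, 521, 523},
    "unavailable": {503},
}


def failure_mode(code: int) -> str:
    """Return a coarse failure category for a 5xx status code."""
    if not 500 <= code <= 599:
        raise ValueError(f"{code} is not a server error")
    for mode, codes in FAILURE_MODES.items():
        if code in codes:
            return mode
    return "other server error"


# Hypothetical sample of observed server errors.
observed = [522, 525, 502, 500, 524]
print(Counter(failure_mode(c) for c in observed))
```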

Even though server errors are rare across all systems, they highlight the importance of web stability and reliability. A broken server or timeout is far worse than a redirect. It’s a complete dead end.

Research methodology

To understand how AI systems cite web content and the prevalence of broken or inaccessible URLs, we conducted a comparative analysis of ChatGPT and Google’s search and AI systems.

For ChatGPT, we analyzed 100,000 prompts, focusing on long-tail keyword questions. From the responses, we extracted all URLs cited by the model. This produced a dataset of 159,949 unique URLs.

To provide a comparative benchmark, we also collected data from 100,000 keywords in the same categories across three Google systems:

- Organic Search Results (SERP)

- AI Overviews (AIO)

- AI Mode (AIM)

Then, we checked each unique URL across all four systems for its HTTP status code:

- 2xx: Page loads successfully

- 3xx: Redirects

- 4xx: Client-side errors (e.g., 404 Not Found, 410 Gone)

- 5xx: Server-side errors
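A status check like this can be sketched with Python’s standard library. This is a minimal illustration, not the tooling used in this study: it sends a single GET request, does not follow redirects (so 3xx codes are recorded as-is rather than resolved), and uses a hypothetical User-Agent string. Real-world checks also need retries, rate limiting, and handling for timeouts and TLS failures, which raise exceptions here.

```python
import http.client
from urllib.parse import urlsplit

USER_AGENT = "link-status-checker/0.1"  # hypothetical identifier


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for url without following redirects."""
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.hostname, parts.port, timeout=timeout)
    elif parts.scheme == "http":
        conn = http.client.HTTPConnection(parts.hostname, parts.port, timeout=timeout)
    else:
        raise ValueError(f"unsupported scheme: {parts.scheme!r}")
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    try:
        conn.request("GET", path, headers={"User-Agent": USER_AGENT})
        return conn.getresponse().status
    finally:
        conn.close()


def status_bucket(code: int) -> str:
    """Group a status code into the classes used in this study."""
    classes = {2: "2xx success", 3: "3xx redirect", 4: "4xx client error", 5: "5xx server error"}
    return classes.get(code // 100, "other")
```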

Some URLs returned a 403 (“access forbidden”) status, preventing us from verifying their actual content. This affected:

- ChatGPT: 14,486 URLs (9.06%)

- AIO: 64,099 URLs (12.74%)

- AIM: 66,752 URLs (12.09%)

- SERP: 81,359 URLs (16.14%)

As a result, we excluded all 403 responses from further analysis, leaving a final dataset of:

- ChatGPT: 145,463 URLs

- AIO: 438,903 URLs

- AIM: 485,400 URLs

- SERP: 422,814 URLs
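The exclusion step is simple arithmetic, and it is easy to sanity-check: subtracting a system’s 403 count from its total gives the final dataset size, and dividing the 403 count by the total gives the excluded share. A sketch using the ChatGPT figures (159,949 unique URLs, 14,486 of them 403); the helper `drop_forbidden` is hypothetical and assumes check results are stored as (url, status) pairs.

```python
def excluded_share(total: int, forbidden: int) -> tuple[int, float]:
    """Return the remaining URL count and the excluded 403 share (%)."""
    return total - forbidden, 100 * forbidden / total


def drop_forbidden(results: list[tuple[str, int]]) -> list[tuple[str, int]]:
    """Remove URLs whose status check returned 403 Forbidden."""
    return [(url, status) for url, status in results if status != 403]


remaining, share = excluded_share(total=159_949, forbidden=14_486)
print(f"ChatGPT: {remaining:,} URLs kept, {share:.2f}% excluded as 403")
```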

Disclaimer: This analysis is based on the latest version of ChatGPT available at the time of data collection and provides a contemporary snapshot of AI citation behavior. The reported HTTP status codes (2xx, 3xx, 4xx, 5xx) reflect the state of URLs at the time of checking; because web content is dynamic, these codes may change over time. Results may differ in prior or future versions of ChatGPT.

Conclusion

So, has ChatGPT fixed its hallucinated links problem?

Not entirely. But it’s no longer the crisis it once was.

Even though ChatGPT now gets most links right, 404s remain its biggest problem. That small 1–2% can still add up to a lot of frustration, lost traffic, and broken workflows, especially at scale.

The takeaway is simple: basic tech SEO still matters. Keep your site free of broken pages, manage redirects properly, and make sure your URLs are consistent. On top of that, start keeping an eye on how your pages are cited in ChatGPT and other AI tools.
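One lightweight way to start monitoring is to scan your own analytics or server-log exports for ChatGPT-referred visits that landed on error pages. The sketch below assumes a hypothetical export where each row has a landing URL, the HTTP status served, and a traffic-source string. It treats any source containing “chatgpt” as ChatGPT traffic; links from ChatGPT often carry `utm_source=chatgpt.com`, but check how these referrals actually appear in your own data.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Visit:
    """One row of a hypothetical analytics or server-log export."""
    landing_url: str
    status: int
    source: str


def chatgpt_404s(visits: list[Visit]) -> Counter:
    """Count ChatGPT-referred visits per landing URL that returned 404."""
    return Counter(
        v.landing_url
        for v in visits
        if v.status == 404 and "chatgpt" in v.source.lower()
    )


# Hypothetical sample rows.
visits = [
    Visit("/blog/real-post", 200, "chatgpt.com"),
    Visit("/blog/made-up-post", 404, "chatgpt.com"),
    Visit("/blog/made-up-post", 404, "chatgpt.com"),
    Visit("/old-page", 404, "google"),
]
for url, hits in chatgpt_404s(visits).most_common():
    print(f"{hits:>3}  {url}")
```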

Strong SEO plus proactive monitoring keeps your content working and your audience happy.