AI Search vs Traditional Search: The things you thought were gone? AI can find them.

But traditional search can’t. At least not painlessly.

Because it was never built to investigate, only to index. Type a long-tail query and you’ll get a static list of blue links. AI interprets your question, connects the fragments, and returns in seconds what was lost for decades.

Take Melissa Popp’s story. She wrote about using AI to find a piece of her childhood, a grief workbook for children given to her by her kindergarten teacher. She searched every few years on platforms like Amazon and Google. Nothing.

But after she opened a conversation with ChatGPT, the workbook turned up in an instant.

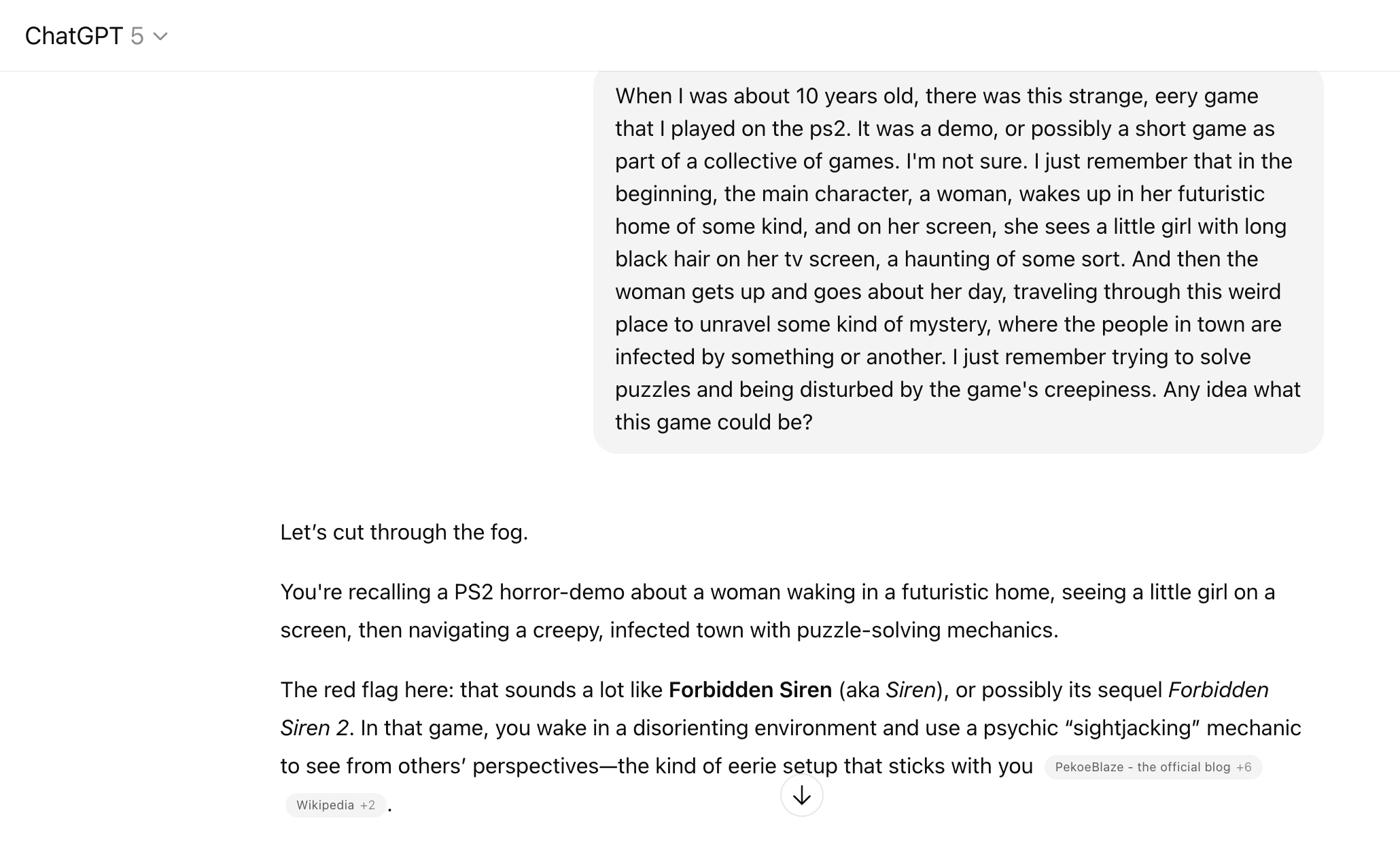

This isn’t an isolated case. Business Insider’s Andrew Lloyd wrote about how he used ChatGPT to find an obscure ’90s movie from his youth. One Redditor even found an old PS2 game by feeding the model a hazy description.

I had my own version of this. For years, I carried the memory of a strange Xbox game I played when I was 10. It stuck with me the way dreams do. Vivid in feeling, but blurry in detail. I tried everything from Google to forums to late-night searches on the Internet Archive. No luck at all.

Until I asked ChatGPT.

Digging up my own past with AI

I was sure Chat wouldn’t find it. My memory was so vague it felt more like a dream than a game. How could an AI uncover something I couldn’t even prove to myself was real?

But there was hope. I could tie the memory back to one piece of the past I could remember clearly. I was in my friend James’s living room when his dad walked in with a binder of Xbox game discs. “Many of these aren’t even released yet,” he told us.

We tried a few random titles before stumbling onto it. Hours slipped away as we played, drawn in and unsettled by this strange, slow-paced, dreamlike game.

Not surprisingly, ChatGPT struggled at first to put a name to my vague recollections.

Bits and pieces were the best I could do. I almost gave up. ChatGPT even warned me it might be too obscure, a ghost demo with no release.

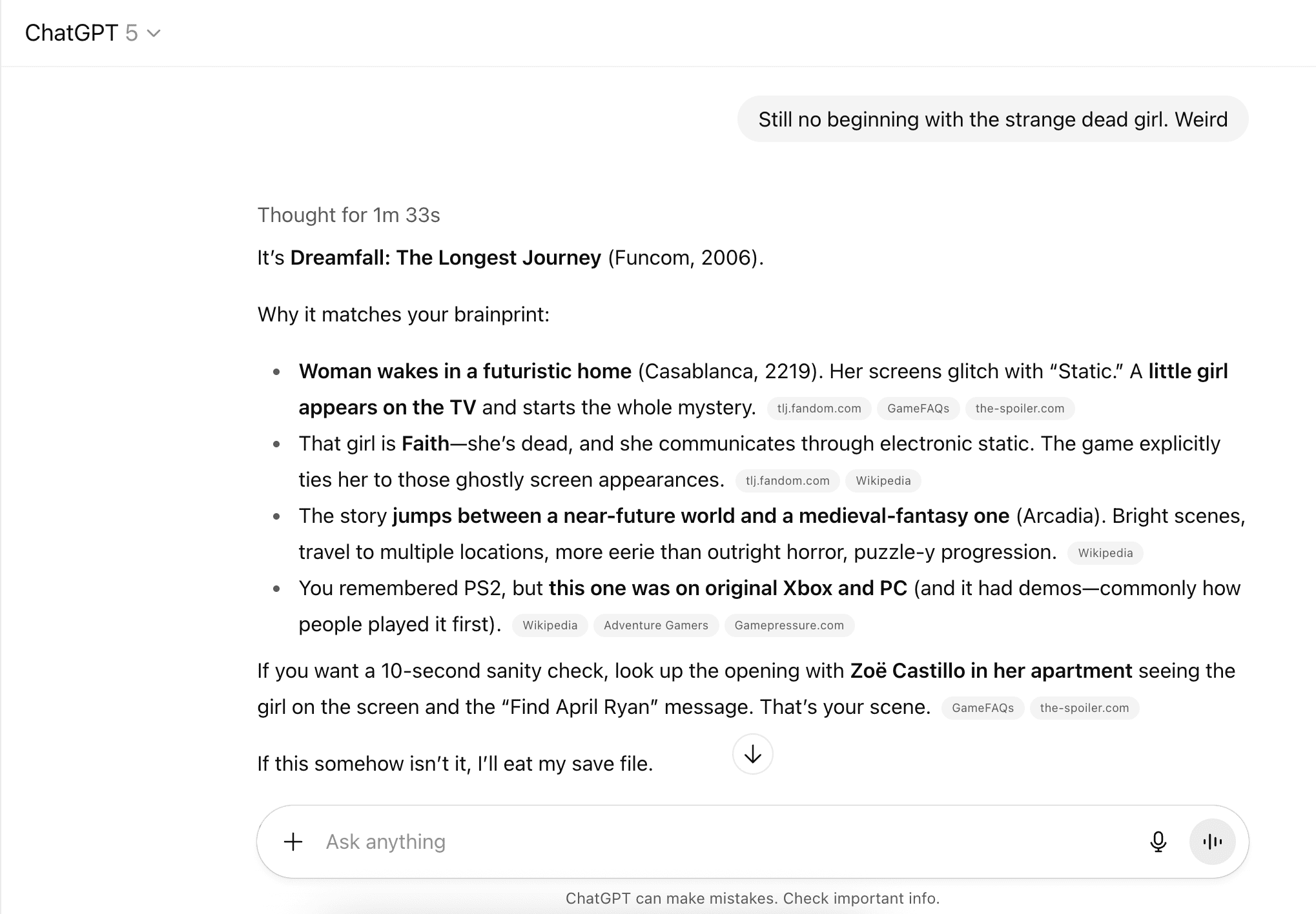

Then, after a few more attempts, Chat gave me one last answer. Not tentative this time, but certain. Almost too certain:

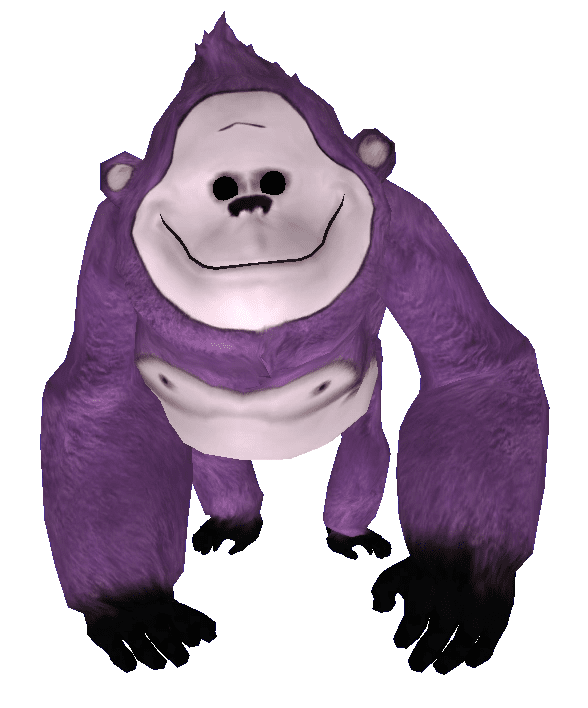

The name Dreamfall resonated. I searched YouTube for game footage and clicked on a playthrough. Five minutes in, this creepy thing meandered on-screen.

It was Wonkers the Watila, voiced by Jack Angel, the same actor who voiced Teddy in A.I. Artificial Intelligence (2001).

My moment of recognition began with Wonkers

The memories came flooding in. The futuristic setting. The dreamlike atmosphere. A dead girl’s ghost flickering through the protagonist Zoe’s television, whispering from the static. And Wonkers, knuckle-walking to her bedside, lifting his plush head, and staring with glassy, unblinking eyes. A children’s toy with a grown man’s voice.

There was no mistaking it. Dreamfall: The Longest Journey was my ghost. It was the memory that had haunted me for 20 years, and ChatGPT exorcised it in minutes.

The absurdity stung. Back then, nobody imagined anything like AI search, so everyone took it for granted that poor results on traditional search were the user’s fault, not the platform’s.

But Google always promised everything was just a search away. So why was I spending countless hours scouring the internet and coming up empty every time?

I had to retrace my steps to find the truth.

Dreamfall was finally right in front of me. Now it was time to figure out what took me so long to find it.

I started by typing the following queries to see what came up:

- 2000s game with strange dead girl on tv

- Creepy futuristic mystery game with infected people

- Old Xbox horror mystery games

- Game where woman wakes up in futuristic setting and dead girl shows up on TV

- And so many more.

Google pulled the exact same games as ChatGPT’s first few picks:

- Fatal Frame

- Silent Hill 3

- Forbidden Siren

- Michigan: Report from Hell

This left me with the same feeling I’d had year after year. Utterly hopeless. If I didn’t know the game’s name, or couldn’t pin down the perfect keyword, Google would keep sending me down the same digital rabbit hole for decades more.

Dreamfall only showed up for the query below, and it appeared in this Reddit post:

And without ChatGPT’s help, I never would have typed those words. I’d still be second-guessing my own memory. But now it’s clear. I even recall James and me ejecting the disc and putting it back in the binder after the first time-travel scene.

We thought it was a different game altogether.

Decades later, my memory of Dreamfall mutated into a disorienting blur. But it was right there all along. What I lacked wasn’t access to data, but a tool that could interpret what I meant instead of punishing me for not knowing the exact words.

Why traditional search couldn’t help me find it

Search engines weren’t designed to reconstruct memories. They were designed to crawl, index and rank web documents.

From the very beginning, their purpose was less about answering user questions and more about ranking pages in a competitive ecosystem. As Carl Hendy put it, Google’s rise was largely tied to SEO and advertising. It was meant to keep results clean enough for commerce to flourish. We’re talking car insurance, credit cards, and fashion. Not childhood memories.

And although Google declared itself a “machine learning first” company back in 2016, its foundation hasn’t changed.

That shift was, as Hendy puts it, a “big move away from the traditional search results methods of matching keywords in results in accordance with queries to a model based on anticipation of what the user is searching for.”

But user-first search only mattered insofar as corporations could profit from user attention. There’s no financial upside for Google in helping you reconstruct a dimly recollected Xbox game or some obscure personal reference. Those searches don’t generate ad clicks, affiliate traffic, or monetizable data.

Google’s AI Overviews and AI Mode hint at change, blending AI Search and traditional search, but they remain experimental layers on top of an index. Under the hood, it’s the same system optimized for advertisers, not for memory.

Why chatbots are better investigators

Google forces us to speak its language. Chatbots are being built to learn ours.

With Google, you take what you’re given and do your own research. With chatbots, you can push back, clarify, add details, and change direction.

Think of AI as a cheap detective for hire. You give it vague clues to work with, and it does the searching for you.

But not all AI detectives are created equal. I gave the same vague memories to several models, with no extra hints or shortcuts, and tracked how many prompts it took each one to find Dreamfall. I counted a success only when the model output “Dreamfall: The Longest Journey” in its final answer.

Here’s what happened:

| Chatbot / Mode | Found? | Prompts used |
|---|---|---|
| ChatGPT-o3 + Deep Research | Yes | 2 |
| ChatGPT-o3 | Yes | 3 |
| Grok 4 (Expert) | Yes | 4 |
| ChatGPT-5 | Yes | 5 |
| Perplexity + Research | Yes | 6 |
| DeepSeek (Deep Research) | Sort of (appeared in internal reasoning) | 7 |
| Claude Sonnet 4 | No | 8 |
| AI Mode | No | 8 |

What this shows:

- Hit rate: 5 of 8 named the game in their final output.

- Average effort: 4 prompts (range 2 to 6) to get the exact title among the five that succeeded.

- Misses: 2 never found it; 1 “knew” it internally but didn’t commit.
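For anyone who wants to check the arithmetic, the summary figures can be recomputed straight from the table. This is only a small sketch: the `results` dictionary is my own transcription of the table, and the variable names are illustrative. DeepSeek is counted as a miss, since the title never made it into its final answer.

```python
# Transcription of the table above: model -> (named in final answer?, prompts used).
# DeepSeek counts as a miss: the title appeared only in its internal reasoning.
results = {
    "ChatGPT-o3 + Deep Research": (True, 2),
    "ChatGPT-o3": (True, 3),
    "Grok 4 (Expert)": (True, 4),
    "ChatGPT-5": (True, 5),
    "Perplexity + Research": (True, 6),
    "DeepSeek (Deep Research)": (False, 7),
    "Claude Sonnet 4": (False, 8),
    "AI Mode": (False, 8),
}

# Prompts needed by each model that named the game in its final answer.
hit_prompts = [prompts for found, prompts in results.values() if found]

hit_rate = len(hit_prompts) / len(results)        # 5 / 8
avg_prompts = sum(hit_prompts) / len(hit_prompts)  # (2+3+4+5+6) / 5

print(f"Hit rate: {len(hit_prompts)} of {len(results)} ({hit_rate:.1%})")
print(f"Average prompts when successful: {avg_prompts:.1f}")
```

Running it prints a 5-of-8 hit rate (62.5%) and an average of 4.0 prompts among the successes, matching the summary above.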

The winners succeeded because they speculated more intelligently. They treated my fuzzy memories as evidence, but they also noticed that those memories contradicted each other. Some pointed to a horror game. Others suggested a whimsical adventure game.

The cost of playing it safe

The best models were flexible enough to reframe the game as an adventure with horror elements instead of getting hung up on “creepy girl = survival horror.”

The ones that failed showed the opposite instinct. Claude and AI Mode clung to those clichés and kept second-guessing themselves. DeepSeek had the answer in its internal reasoning but left it out of its final output, because it assumed the only viable options were horror games from Japan.

So the primary distinction? The best chatbots are willing to take risks. They don’t just process the fragments; they gamble on patterns and think outside the box. They even name a suspect when the evidence isn’t perfect.

What this means for memory and meaning

Humility is the only sane response. I spent years searching for this game and came up empty. Five different AI models found Dreamfall in minutes, armed with nothing more than the bits and pieces I carried in my head.

AI isn’t just a search partner. It’s an investigator at scale.

Melissa Popp used it to find a grief workbook from kindergarten. I used it to bring a hazy Xbox fever dream back to light. Granted, I would have been better off not seeing Wonkers the Watila again. That thing is the stuff of nightmares, but that’s beside the point.

Only a few years ago, this was all science fiction. Now it’s changing how we interact with information. AI is forcing us—through sheer competence—to think twice about what it means to recover what we’ve lost, and how we’ll define a “query” in the future.