I poked at AI Mode to test its limits. It found mine.

When Google gave me access to AI Mode back in April, I thought, Great, search is cosplaying as a chatbot again. It looked like Gemini in a new outfit. My first instinct? Gimmick.

But I was wrong. This isn’t just a UI experiment. Signs point to a turning point in interface design.

Here’s why.

By the way, SE Ranking is working on a new AI Mode Tracker, so make sure to check out the tool as it’ll allow you to check AI answers for your queries and track brand mentions and links.

What I thought AI Mode was (And what it really is)

Another toy for tech enthusiasts? That was my first take.

Search Labs was cranking out experiments left and right. AI Mode felt like another one. I was more interested in NotebookLM at the time. A tool that could narrate 30+ minute voice summaries like a podcast host? Now that felt futuristic.

Still, I decided to give AI Mode a shot, if only to confirm my suspicions. I started by throwing darts at it. Random queries like:

- monster hunter iPhone

- AI Mode

- best tailor in my area

- where is the best place in western new york to get cheesecake?

And you know what? AI Mode didn’t impress. It was buggy—and limited.

It still makes mistakes and hallucinates constantly. You’ll see plenty later on in this article.

But that’s not the point.

What matters is what it’s becoming, and how quietly Google is preparing to replace traditional search with something more predictive, more persistent. And…

More agentic.

No, it’s not alive. Not autonomous. But agentic.

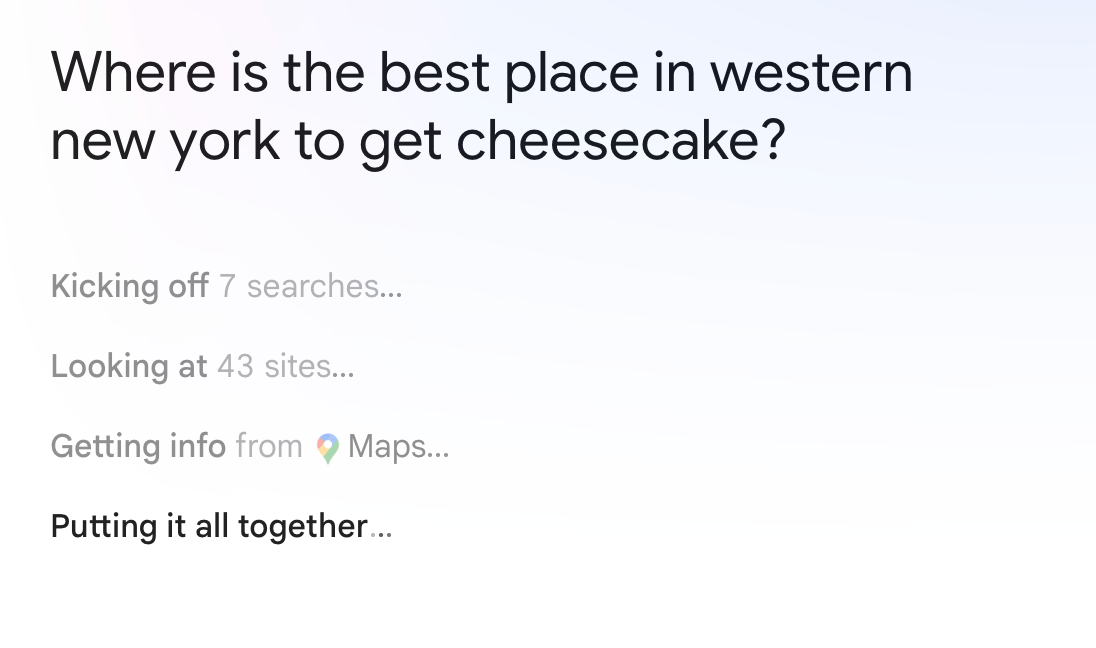

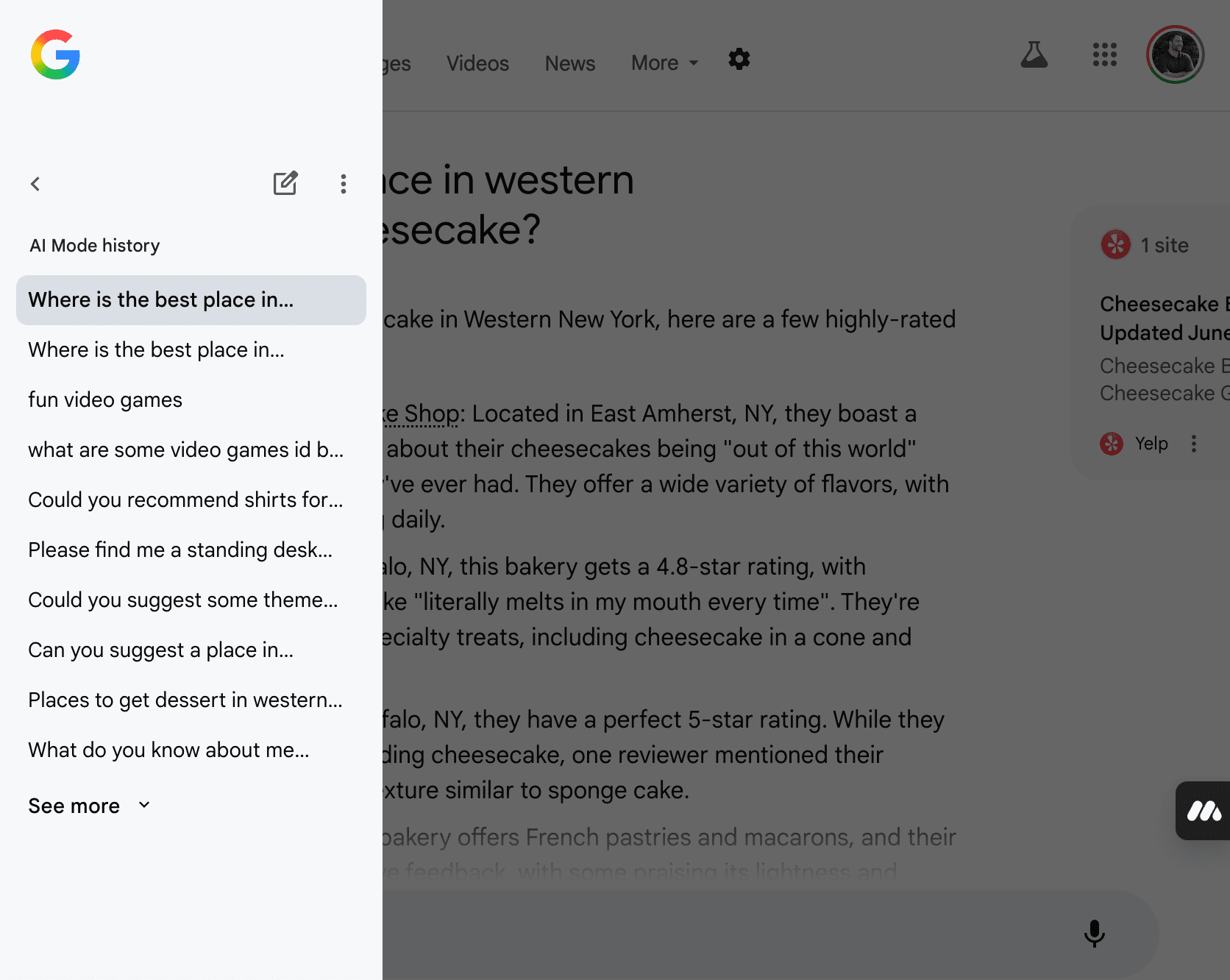

The first thing I noticed was how it operated. When I asked, “Where’s the best place in western New York to get cheesecake?”, AI Mode didn’t just spit out a list. It kicked off 7 searches, scanned 43 sites, pulled data from Maps, and stitched it all together in real time:

What makes this agentic?

My query didn’t trigger a search result. It launched a process.

If you’ve ever used tools like ChatGPT’s Operator, this will feel familiar. But AI Mode makes that flow native. It’s embedded directly into the search stack.

Instead of fetching static results, AI Mode initiated what’s called a query fan-out. Mike King breaks it down as a cascade of sub-searches, data pulls, and content synthesis.

One query can trigger dozens of background searches, drawing from Maps, news, forums, and more. No, you don’t see the steps. That opacity is exactly why SEOs are scrambling to reverse-engineer the patterns. And whoever cracks the logic first could leapfrog the rest.
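To make the fan-out idea concrete, here is a minimal Python sketch. It is not Google's implementation, which isn't public. The vertical names come from the sources mentioned above, while `fake_search` and `fan_out` are hypothetical stand-ins that return canned data. The only point is the shape of the process: one query in, several background searches, one merged answer set out.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical verticals, borrowed from the sources named in the article.
VERTICALS = ["maps", "news", "forums"]


def fake_search(vertical: str, query: str) -> list[dict]:
    """Stand-in for a real backend call; returns canned results."""
    return [
        {"url": f"https://example.com/{vertical}/1", "vertical": vertical},
        # Every vertical also returns this URL, so the merge step has work to do.
        {"url": "https://example.com/shared", "vertical": vertical},
    ]


def fan_out(query: str) -> list[dict]:
    """Run one sub-search per vertical concurrently, then merge by URL."""
    with ThreadPoolExecutor() as pool:
        batches = list(pool.map(lambda v: fake_search(v, query), VERTICALS))

    merged: dict[str, dict] = {}
    for batch in batches:
        for result in batch:
            # Keep the first occurrence of each URL and drop later duplicates.
            merged.setdefault(result["url"], result)
    return list(merged.values())


results = fan_out("best cheesecake in western new york")
print(len(results), "unique results from", len(VERTICALS), "background searches")
```

The user only ever sees the merged list. The individual sub-searches stay hidden, which is the opacity SEOs are trying to work around.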

AI Mode isn’t just agentic. It’s the beginning of a world where rankings are no longer king. Not obsolete. Not irrelevant. But contested.

Google wants you to start delegating your questions to an assistant, one that understands what you mean better than you do. Which means SEO is moving beyond ranking pages and toward surfacing answers. Here’s how to increase visibility in that kind of landscape.

It listens, sees, reads, and guesses what you mean before you say it. Your queries aren’t just being interpreted. Your input is being redefined—especially on mobile.

Multimodal is the new mobile

AI Mode taps into your device’s voice, camera, and screen data. Basically anything your phone can sense. Most of these features are buried in the flow, but when you find them, Google’s vision for the future of search becomes clear as day. It isn’t just trying to answer your query. It wants to interpret the world around you.

Live—Real-time voice assistant

Live appears below the search bar or next to the “ask anything” prompt in AI Mode, but only after asking a question. You tap the mic and start talking, and it keeps listening, even if the app is in the background.

I tested this by asking Live to give me a basic rundown of AI Mode while I was scrolling through Facebook. The response was stiff and corporate, as expected:

“You can think of Google AI as a large team of researchers and engineers working on various projects related to artificial intelligence…”

It wasn’t wrong, just lifeless.

But Live did get a fact wrong when I asked it to tell me which of its multimodal features are mobile-only. It claimed “AI Mode history is a mobile-only feature.” It’s not. See for yourself:

So I did more digging on my own. Here’s what’s actually mobile-only right now.

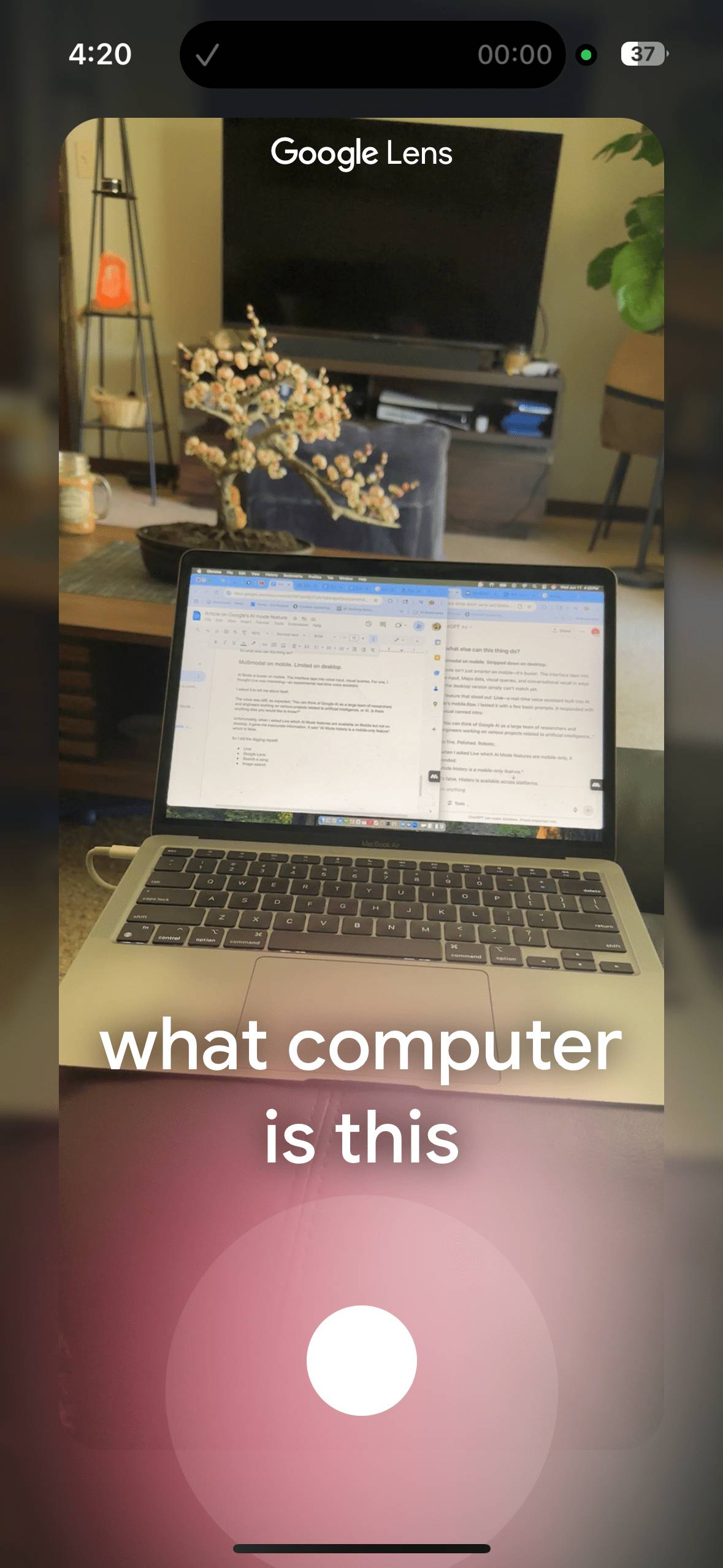

Google Lens integration

I pointed the camera at my MacBook and tapped the magnifying glass. It launched Lens inside AI Mode, letting me ask questions based on what the camera saw. It even wrote my question on screen:

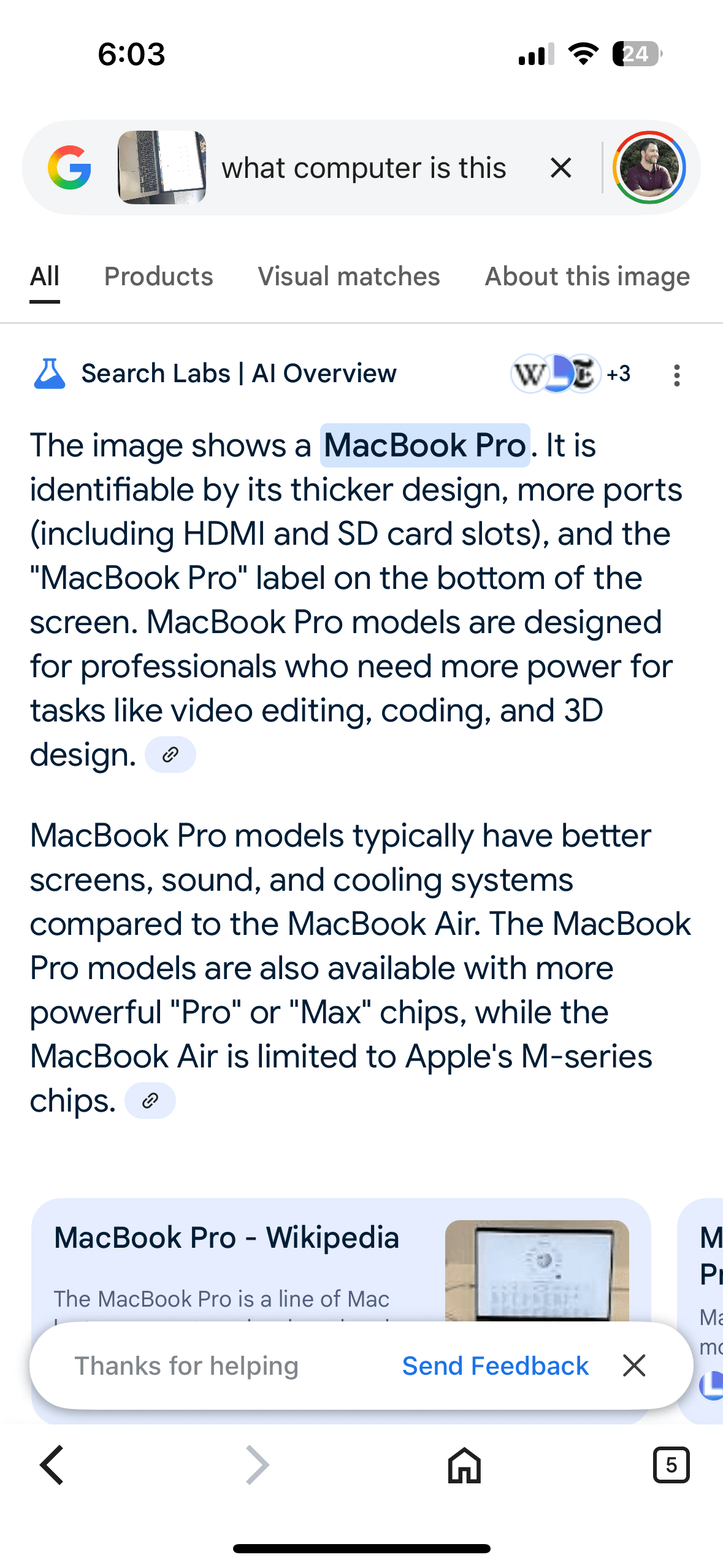

The response stitched together visual matches, product IDs, and similar items. AI Mode identified my laptop as a MacBook Pro, which is wrong. I have a MacBook Air. But that’s fine. Even Apple Geniuses can’t tell the difference at a glance.

It pulled in AI Overviews too, making Lens feel like the new front door to multimodal search:

What you’re seeing above is a fusion of image understanding and Gemini multimodal models. Together they interpret the scene, occasionally generate follow-up questions, and surface context-rich info.

As for static images?

You can upload any image from your phone. AI Mode analyzes it for product matches, landmarks, or text using Lens’s OCR (Optical Character Recognition). I tried this with a few screenshots and real-world photos. Everything from packaging labels to a random tree—even my cat.

See Harvey’s AI Mode debut below:

Everything feels seamless, but what’s going on in the background is anything but simple. Think back to query fan-out. It’s a layered process stitched together in real time.

“What type of cat is this?” is a seemingly straightforward query that unleashes a cascade of subqueries—that I sadly don’t have access to.

Here’s what the process would look like if we could see it:

- AI Mode scans the image →

- Then it runs visual matches through Google Lens →

- Next, it cross-references similar photos from pet forums and breed databases →

- It proceeds to pull in articles about cat breeds →

- Then surfaces a carousel of lookalikes with confidence scores →

- Finally, it answers my question.

That’s multimodality in action: combining image recognition, text analysis, and web search into a single fluid response. It’s not just fetching results. It’s synthesizing signals from multiple channels, all tied to a deceptively simple question.

What looks like a cute cat pic to you is an information matrix to AI Mode.

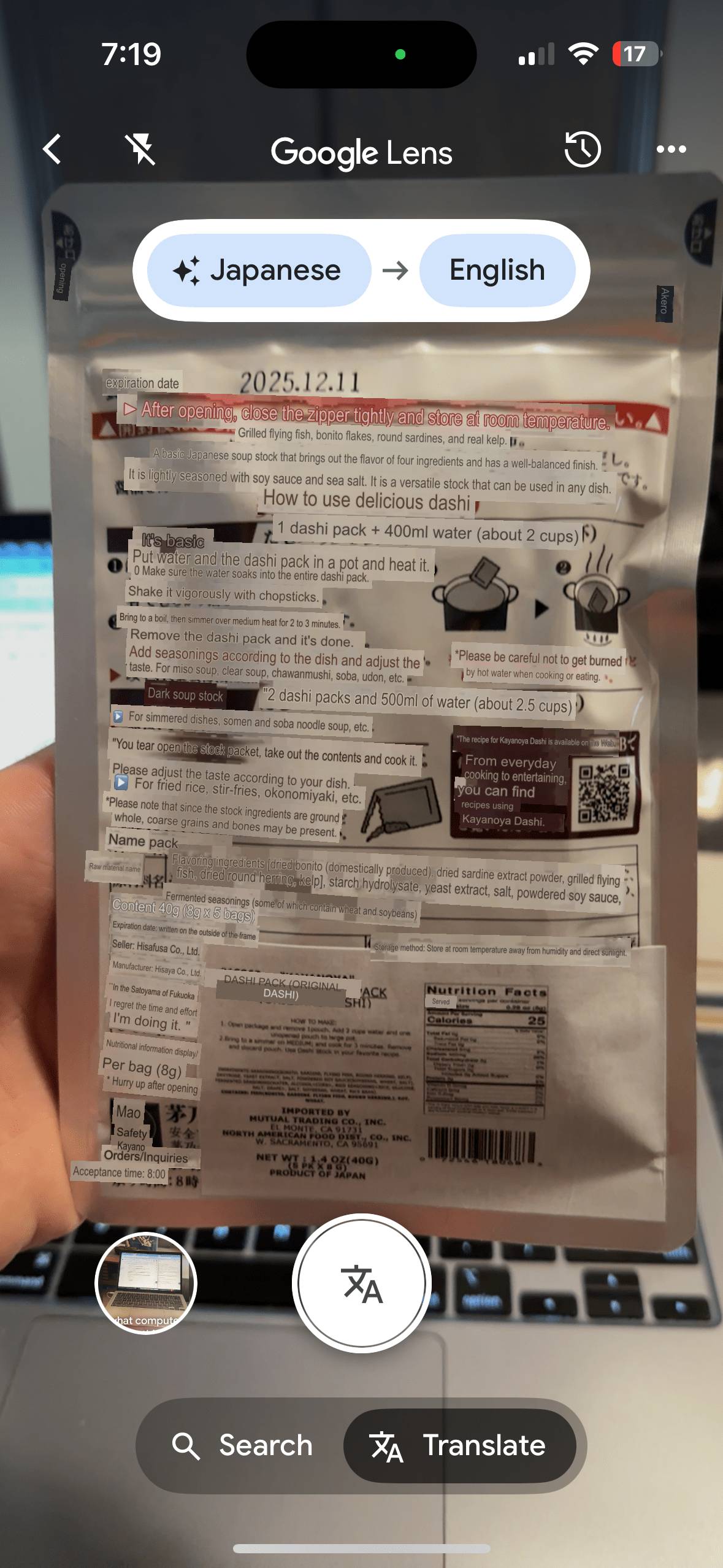

Translate (unofficial, but functional)

Translate isn’t officially integrated into AI Mode, but it shows up in practice. I took a picture of a Japanese food label for dashi broth and asked AI Mode to translate it. It did—roughly. Not as fluid as Lens Translate, but usable. See the result below:

Google’s standalone Translate feature is better built for this. Point your camera at foreign text and Lens overlays the translation in real time. I tested this on the dashi packet and got instant results:

This is another example of AI Mode pulling from multiple modalities to interpret rather than retrieve. AI Mode may not fully integrate with every experimental feature—not even on mobile—but Google’s quiet rollout could be signalling something bigger.

AI Mode doesn’t feel like a test. Is it becoming the default?

This whole layer of mobile-only features matters because it changes what Google considers a query. Sometimes it’s your words. Sometimes it’s what you see, where you are, or what you highlight.

That shift—from search as input to search as context—is why SEOs need to start paying attention now, not later.

You’re not just being indexed. You’re being remembered.

Every query launches a thread, not a one-off lookup. And the conversational memory is massive. It runs on a custom version of Google’s latest AI model, Gemini 2.5, with a 1M+ token context window. This means AI Mode can sustain sprawling, multi-step conversations without losing the thread.

That’s context stacking at scale. It means you’re not competing for a single keyword. You’re competing for ongoing influence inside an AI’s evolving understanding of a user’s journey.

And that understanding? It doesn’t reset between sessions.

AI Mode remembers you, across threads. Google’s Sundar Pichai calls it personal context:

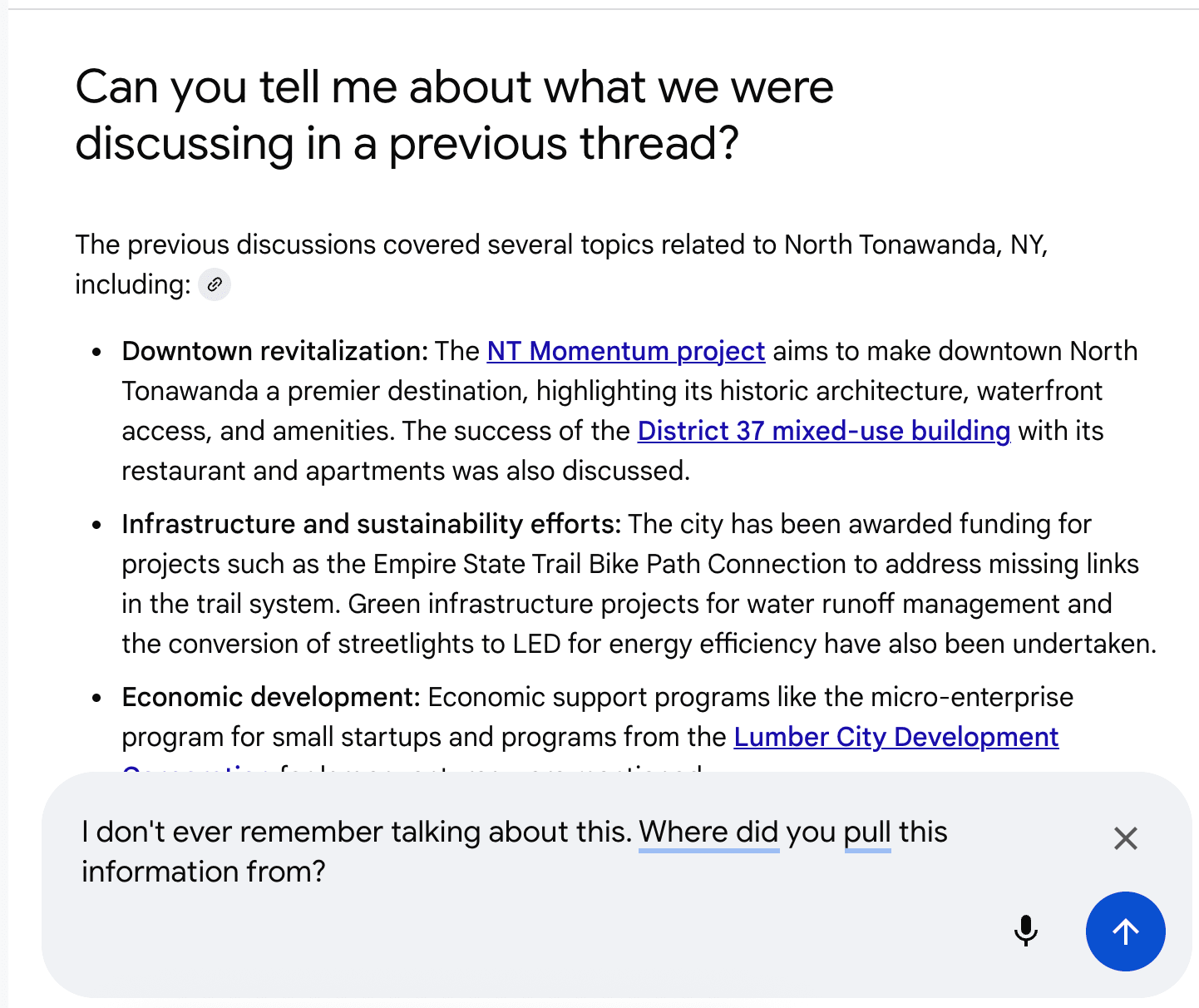

Small mistake. Big implications.

My experience with AI Mode’s memory feature revealed more about me than about the feature itself. I expected a saved chat log. What I got was a behavioral model. The screenshot below shows me taking the feature too literally.

AI Mode made a mistake, but it was mostly my fault:

It confidently referenced a past discussion that never happened. Urban planning? Not even close. I’m a tech nerd.

That’s when I realized: it wasn’t recalling me. It was reconstructing me.

I misunderstood what memory means.

AI Mode doesn’t replay your words. It learns your patterns. Instead of storing conversations for use across sessions, AI Mode extracts patterns and builds a personalized profile of you based on user embeddings. The Google team said it best:

So no, it’s not a history. It’s a model of you.
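Here is a toy Python sketch of the difference between a history and a model. Everything in it is an assumption made for illustration: real user embeddings are learned vectors, not word counts, and the `embed`, `build_profile`, and `cosine` helpers are hypothetical names. What it shows is that once queries are folded into a profile, the original wording is gone and only the pattern remains.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector (an illustrative stand-in)."""
    return Counter(text.lower().split())


def build_profile(queries: list[str]) -> Counter:
    """Fold many queries into one aggregate vector; the raw queries are not kept."""
    profile: Counter = Counter()
    for query in queries:
        profile.update(embed(query))
    return profile


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


profile = build_profile([
    "best gaming laptop",
    "laptop gpu benchmarks",
    "gaming monitor deals",
])

# The profile can score new topics, but it cannot replay any past conversation.
print(cosine(profile, embed("gaming laptop review")))
print(cosine(profile, embed("urban planning zoning")))
```

A profile like this can say how close a new topic is to your past behavior, but it has no record of what you actually said. That is why a system built this way can "remember" a discussion that never happened.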

But it’s not just modeling users. It’s modeling content too. In fact, we saw a preview of this shift before AI Mode even entered the picture. In our AI content experiment, AI-written articles performed just as well as human ones—not because of quality signals, but because they fit the context.

At the time, this was seen as a quirk of AI Overviews. In hindsight? It was a sign of where things were headed.

Inside AI Mode: What our 50-query test exposed

Field-testing alone would have been enough for me, but I was pleasantly surprised when the SEO team at SE Ranking shared a side-by-side study comparing AI Mode with AI Overviews (AIOs) across 50 queries:

This grounded my observations in data. Made the patterns visible:

| AI Mode | What it signals |
| --- | --- |
| 100% (50/50 queries) | Ubiquity: AI Mode is not a test feature |
| Yes (system-wide) | AI Mode is persistent, signaling a departure from opt-in |
| 12.26 avg | Deliberate curation over raw quantity |
| Google Maps links only (0 search) | Geolocation-focused action, not browsing |
| Low for domains (14.85%), lower for exact URLs (10.62%) | AI Mode uses a different source logic |
| 422 (74.4% of which are exclusive) | Independent ecosystem from AIO |
| google.com (40 times, all Maps) | Maps is the new front door |

What the data says

AI Mode responded to every single query. AIOs appeared for 56% of them. What kind of test does that?

The implications are clear: Google’s new AI search experience is starting to look less like an experiment. And more like a soft launch.

I get how bold that sounds, but AI Mode is already embedded in mobile search for opted-in users. That makes it sticky by design:

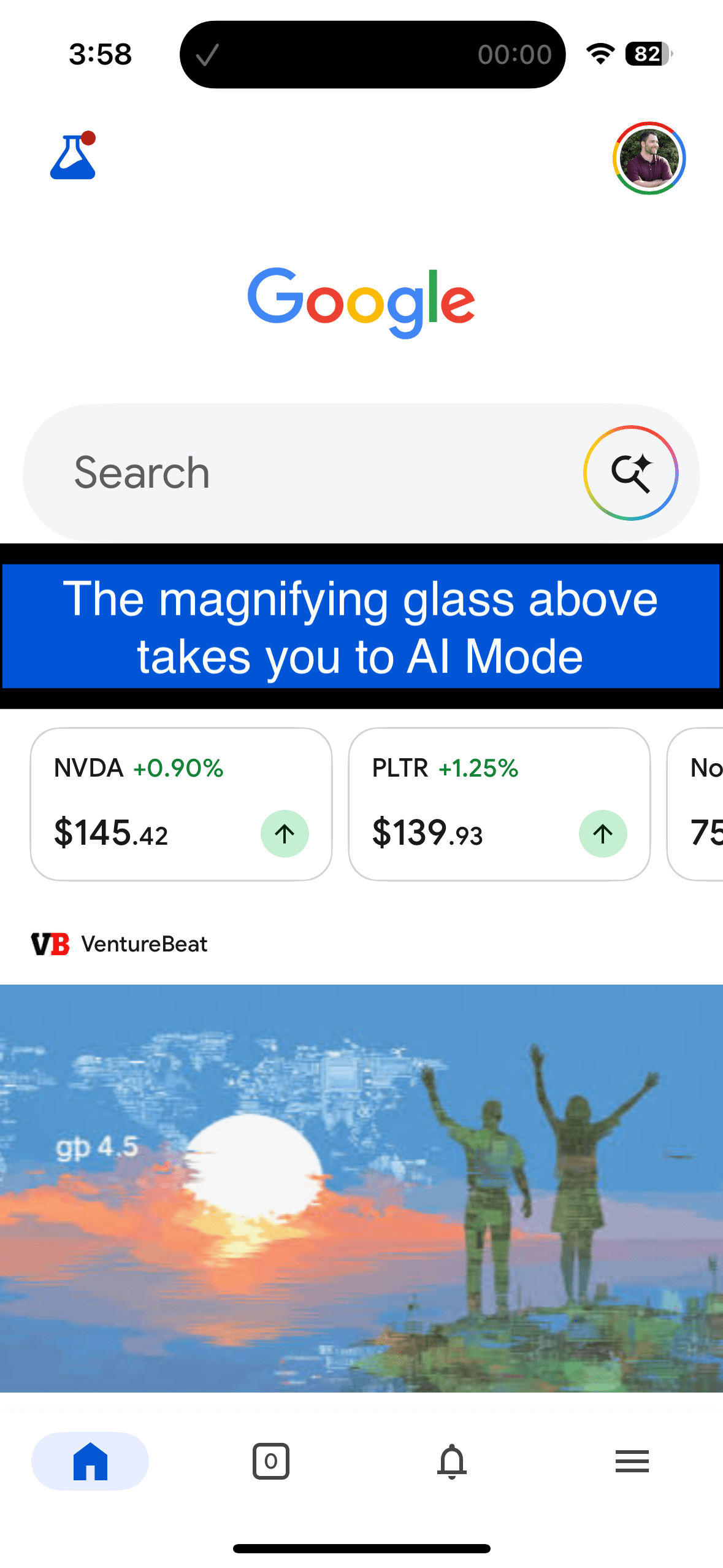

The image above shows Google’s mobile app in action. The magnifying glass inside the search box takes you straight to AI Mode.

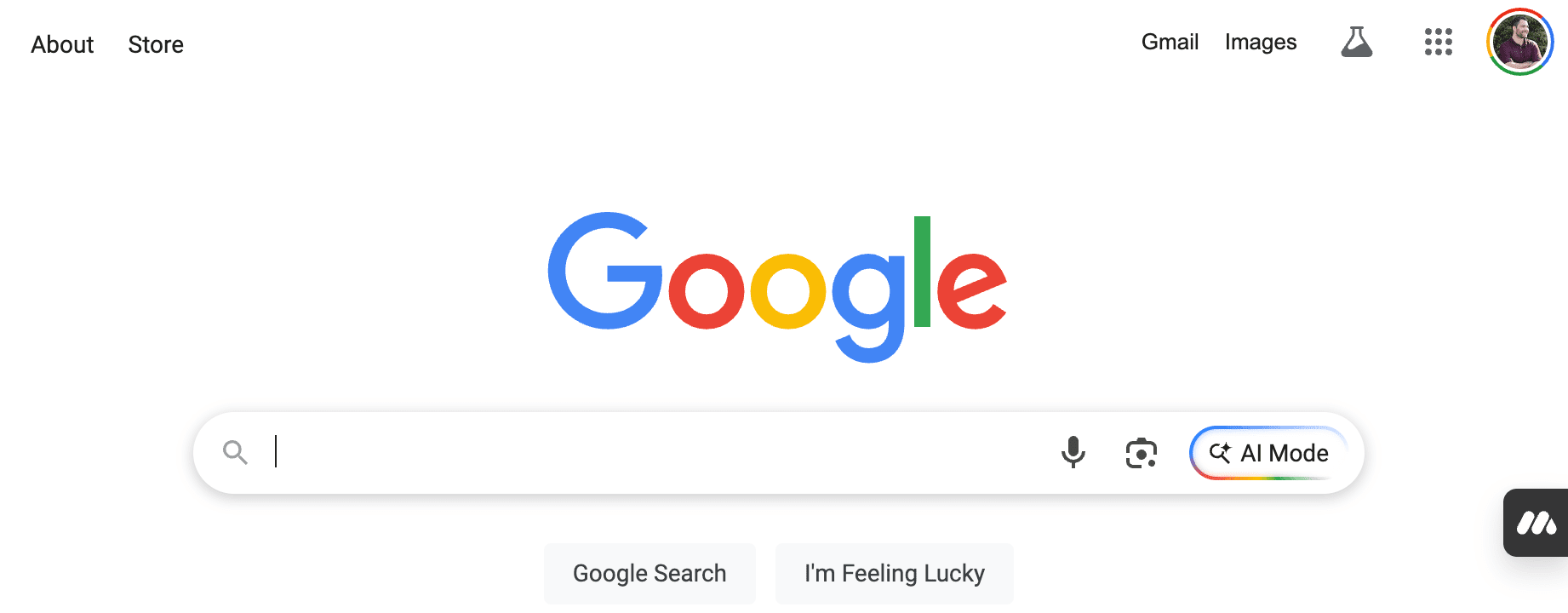

Desktop is murkier. The icon is there but the UX is different:

You have to manually enable AI Mode via Search Labs. And even then, it resets on refresh. Inconvenient? Yes, but not a flaw. Google is deliberately testing different UX paths while pushing the more complete experience through mobile:

This supports what I suspected earlier: Google is building a multimodal-first interface with AI Mode at the center.

AI Mode also used fewer sources than AIOs (12.26 vs 23.36 on average), and it did so consistently, with clear curation. That signals less noise. More intent.

But the clearest break between these systems? Source logic:

Exact URL overlap between the two systems for the same queries was just 10.6%.

This tells us two things:

- AI Mode is pulling from largely different sources.

- Optimizing for AIOs alone won’t carry you in AI Mode’s world.
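If you want to run the same kind of comparison on your own queries, here is a minimal Python sketch. The study's exact formula isn't stated here, so this uses one plausible definition: the share of AI Mode sources that also appear in the AIO for the same query. The function names and the sample URLs are made up for illustration.

```python
from urllib.parse import urlparse


def overlap_pct(ai_mode: set[str], aio: set[str]) -> float:
    """Percent of AI Mode items that also appear in the AIO set."""
    if not ai_mode:
        return 0.0
    return 100 * len(ai_mode & aio) / len(ai_mode)


def domains(urls: set[str]) -> set[str]:
    """Reduce full URLs to their host names."""
    return {urlparse(url).netloc for url in urls}


# Made-up source lists for a single query.
ai_mode_urls = {
    "https://a.com/x",
    "https://b.com/y",
    "https://c.com/z",
    "https://d.com/w",
}
aio_urls = {
    "https://a.com/x",
    "https://b.com/other",
    "https://e.com/q",
}

print(overlap_pct(ai_mode_urls, aio_urls))                    # exact-URL overlap
print(overlap_pct(domains(ai_mode_urls), domains(aio_urls)))  # domain overlap
```

In this sample, exact-URL overlap comes out lower than domain overlap, because two systems can cite the same site while linking to different pages. That matches the direction of the study's figures, where domain overlap was higher than exact-URL overlap.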

If AI Mode becomes the default search experience, SEO could shift away from traditional ranking. It may no longer be about appearing in AIOs, but about aligning with how generative systems synthesize information.

We’re not fully in that world yet, but Google says it’s building it anyway.

Will AI Mode become search?

Google made it clear at I/O 2025:

So even if AI Mode never becomes the new default UI, its design principles already are. The logic behind it is bleeding into every corner of Google Search.

Final thought: Not just a UI

I started this journey expecting a gimmick.

Towards the end? My mindset shifted from skepticism to wonder:

- Why is Google so intent on reinventing the search experience?

- What would an AI Mode-first future look like?

- And for SEOs: What happens when ranking is no longer the goal, but just one signal among many?

This doesn’t feel like just a new interface. Signs point to the quiet construction of a new interpreter. A new filter. A new layer between your content and your audience.

If it continues evolving in this direction, understanding how that system works could become an essential part of staying visible.