14 prompt hacks to get 5x better answers from ChatGPT-5

AI is changing marketing workflows whether we like it or not. For anyone using ChatGPT in SEO, content, or campaigns, one rule is clear: your prompt makes or breaks the output. A vague one? Expect fluff. A clear one? Expect results.

With the release of ChatGPT-5, expectations skyrocketed – only to be met with frustration. Marketers complained about bland answers, robotic tone, and outputs that felt less useful than before.

Yet hidden in OpenAI’s updates were valuable insights about how this new family of models processes prompts – and how to get better results from them. We tried them ourselves, added lessons from our own work, and distilled them into practical hacks you can apply right away.

Here they are:

#1 Use the prompt formula (golden rule)

The fastest way to cut hallucinations and keep answers consistent is to build every prompt around a simple formula:

Task + Context + Target Audience + Example + Format + Tone

Here’s what each part means:

- Task – What exactly do you want GPT to do?

- Context – Why are you doing this / what’s the situation?

- Target Audience – Who’s the final output for?

- Example – Give GPT a sample to mimic (a good output).

- Format – Lock the output’s shape (bullets, paragraph, table, etc.).

- Tone – Define the voice (professional, casual, concise, etc.).

👉 Pro tip: Don’t skimp on detail. The richer your input in each section, the less GPT guesses – and the stronger your result.

Quick example:

Instead of:

“Write me a LinkedIn post about our new product.”

Try:

- Task: Write a LinkedIn post.

- Context: We’re launching a new product aimed at small businesses.

- Audience: Busy founders and marketing managers.

- Example: Use the same flow as this [sample LinkedIn launch post].

- Format: 2 short paragraphs + 3 bullet points.

- Tone: Conversational, upbeat, and lightly persuasive.

The difference is night-and-day – vague prompts get you vague results, while detailed prompts give GPT less room to hallucinate.
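If you reuse the formula a lot, it can help to assemble prompts in code so that no part gets forgotten. Here is a minimal Python sketch of that idea. `PromptParts` and `build_prompt` are hypothetical names made up for this illustration and are not part of any OpenAI tooling.

```python
from dataclasses import dataclass, fields


@dataclass
class PromptParts:
    """The six parts of the formula (hypothetical helper, not an official API)."""
    task: str
    context: str
    audience: str
    example: str
    format: str
    tone: str


def build_prompt(parts: PromptParts) -> str:
    """Return one labelled line per part; refuse to build if any part is empty."""
    lines = []
    for field in fields(parts):
        value = getattr(parts, field.name).strip()
        if not value:
            raise ValueError(f"Missing prompt part: {field.name}")
        lines.append(f"{field.name.capitalize()}: {value}")
    return "\n".join(lines)


prompt = build_prompt(PromptParts(
    task="Write a LinkedIn post.",
    context="We're launching a new product aimed at small businesses.",
    audience="Busy founders and marketing managers.",
    example="Use the same flow as this [sample LinkedIn launch post].",
    format="2 short paragraphs + 3 bullet points.",
    tone="Conversational, upbeat, and lightly persuasive.",
))
print(prompt)
```

Raising an error on an empty part is a design choice for this sketch: it forces you to fill the gap yourself instead of leaving GPT to guess.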

#2 Optimize every prompt before running it

Even a decent prompt can be sharpened. Before you hit enter, take a minute to run your draft through OpenAI’s Prompt Optimizer, a tool built to help users get started on “improving existing prompts and migrating prompts for GPT-5 and other OpenAI models.”

Here’s what it does for you:

- Polishes messy language so GPT doesn’t misinterpret.

- Removes vague words that leave too much room for guessing.

- Restructures instructions into a format the model follows more reliably.

- Clarifies format requirements, so GPT knows exactly how to shape the output.

- Aligns examples with your ask, eliminating inconsistencies that often trigger hallucinations.

👉 The result: Fewer “what was that?” moments and much less back-and-forth fixing weak outputs.

#3 Lock the format

One of the biggest reasons GPT goes off the rails is freedom to improvise. If you don’t tell it exactly how you want the answer shaped, it fills the gaps with extra sections, side notes, or plain fluff.

The fix? Lock the format upfront. Be explicit about structure, length, and boundaries before GPT starts writing.

Here’s a quick example:

❌ Vague prompt:

“Write a product description.”

✅ Clear prompt with locked format:

“Return only a 3-sentence product description. No extra notes, no alternative versions, no bullet points. Just one clean paragraph, under 80 words.”

By setting these guardrails, you cut down hallucinations and save yourself from editing out things you never asked for in the first place.

👉 Pro tip: Treat format rules like a contract. The stricter and clearer they are, the less GPT drifts into “creative” territory you didn’t want.
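If you generate copy at scale, you can also check the "contract" on your side once the answer comes back. The sketch below tests a reply against the rules from the example prompt above (3 sentences, one paragraph, no bullets, under 80 words). `check_format` is a hypothetical helper, and the sentence count is a naive heuristic based on end punctuation, so treat it as a rough filter rather than a precise parser.

```python
import re


def check_format(text: str, sentences: int = 3, max_words: int = 80) -> list[str]:
    """Return a list of format problems; an empty list means the reply passes."""
    problems = []
    body = text.strip()
    if "\n" in body:
        problems.append("spans more than one line or paragraph")
    if re.search(r"^\s*[-*•]", body, flags=re.MULTILINE):
        problems.append("contains bullet points")
    # Naive heuristic: count runs of . ! ? followed by whitespace or end of text.
    found = len(re.findall(r"[.!?]+(?:\s|$)", body))
    if found != sentences:
        problems.append(f"expected {sentences} sentences, found {found}")
    words = len(body.split())
    if words >= max_words:
        problems.append(f"{words} words, limit is under {max_words}")
    return problems


good = "The lamp folds flat. It charges over USB-C. It lasts ten hours."
bad = "- Great lamp.\nNote: here is another version."
print(check_format(good))
print(check_format(bad))
```

An empty list means the reply respected the format; anything else tells you which rule was broken, so you can ask GPT to revise.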

#4 Use bracketed tags to structure instructions (best for Custom GPTs and Projects)

OpenAI’s guidance suggests that GPT-5 responds well to XML-style tags, so wrap each part of your instructions in tags to make intent crystal clear and stop the model from guessing. Tags also keep instructions portable across Custom GPTs and Projects, so you don’t have to rewrite the same rules again and again.

When instructions are left in a plain-text block, GPT may blur together context, task, and bans – which is where hallucinations start creeping in. Bracketed tags fix that by cleanly separating logic.

Why this works:

- Makes your intent unmissable.

- Stops GPT from mixing up context vs. task.

- Reusable structure for any workflow.

What to do: Use bracketed tags for all core instructions (<context>, <task>, <format>, <tone>, <caps>, <bans>, <sources>, <role>, etc.).

Example:

❌ Messy prompt:

“Act as a social media assistant. Write LinkedIn copy. Make it engaging but professional. Add a CTA.”

✅ Bracketed prompt:

<role>

Act as a professional social media assistant.

</role>

<task>

Write a LinkedIn post about a new marketing report release.

</task>

<tone>

Professional, approachable, and engaging. Keep sentences short and scannable.

</tone>

<format>

One short hook line + 2 short paragraphs + CTA at the end.

</format>

<bans>

Avoid clichés like “game-changing” or “in today’s fast-paced world.”

</bans>
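If you keep your rules in a spreadsheet or config file, a few lines of Python can turn them into a bracketed prompt like the one above. This is only a sketch: `build_tagged_prompt` is a hypothetical helper, and the restriction to lowercase tag names is an assumption made here for consistency, not a requirement from OpenAI.

```python
import re


def build_tagged_prompt(sections: dict[str, str]) -> str:
    """Wrap each section body in matching <name>...</name> tags, in order."""
    blocks = []
    for name, body in sections.items():
        # Assumption for this sketch: tag names are simple lowercase identifiers.
        if not re.fullmatch(r"[a-z][a-z0-9_]*", name):
            raise ValueError(f"Invalid tag name: {name!r}")
        blocks.append(f"<{name}>\n{body.strip()}\n</{name}>")
    return "\n\n".join(blocks)


prompt = build_tagged_prompt({
    "role": "Act as a professional social media assistant.",
    "task": "Write a LinkedIn post about a new marketing report release.",
    "tone": "Professional, approachable, and engaging. Keep sentences short and scannable.",
    "format": "One short hook line + 2 short paragraphs + CTA at the end.",
    "bans": "Avoid clichés like 'game-changing' or 'in today's fast-paced world.'",
})
print(prompt)
```

Because every tag is opened and closed by the same line of code, you can't end up with a missing or mismatched closing tag.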

#5 Control vocabulary with ban & prefer lists

GPT tends to fall back on generic, AI-templated wording. Giving it two lists – a ban list (words/phrases never to use) and a prefer list (phrases you want it to use) – helps steer the output closer to your brand voice.

What to do:

- Create a ban list → clichés, overused marketing buzzwords, or “AI-ish” wording you don’t want to see.

- Create a prefer list → vocabulary, phrases, or expressions that fit your brand style and tone.

- Add both lists into your instructions so GPT knows what to avoid and what to lean on.

Example:

<bans>

game-changing, cutting-edge, fast-paced world

</bans>

<prefer>

reliable, actionable, proven, time-saving

</prefer>

👉 Pro tip: Build team-wide ban/prefer lists and reuse them across prompts. It keeps your marketing consistent across every type of copy you create.
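A shared list is also easy to check automatically. The sketch below scans a draft for banned and preferred phrases using the lists from the example above. `find_phrases` and the sample draft are hypothetical, and the matching is a simple case-insensitive whole-phrase search, so it won't catch paraphrases.

```python
import re

BANS = ["game-changing", "cutting-edge", "fast-paced world"]
PREFER = ["reliable", "actionable", "proven", "time-saving"]


def find_phrases(text: str, phrases: list[str]) -> list[str]:
    """Return the phrases that appear in the text (case-insensitive, whole phrase)."""
    lowered = text.lower()
    return [
        phrase for phrase in phrases
        if re.search(rf"\b{re.escape(phrase.lower())}\b", lowered)
    ]


# Hypothetical draft, used only to show the check in action.
draft = "Our cutting-edge dashboard gives you reliable, time-saving reports."
banned_hits = find_phrases(draft, BANS)
preferred_hits = find_phrases(draft, PREFER)
print("Banned:", banned_hits)
print("Preferred:", preferred_hits)
```

If the banned list comes back non-empty, send the draft back to GPT with the offending phrases named.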

#6 Add acceptance tests & thinking mode (force self-QA)

To cut down hallucinations and make sure outputs really meet your needs, don’t let GPT jump straight into writing. Instead, make it plan first and self-check before finalizing.

What to do:

- Start with a checklist: Tell GPT to outline 3–7 steps it will follow before drafting (this is “thinking mode”).

- Require self-validation: GPT must check its own work against 3–5 quality tests before giving the final version.

- Force revision if it fails: If any check fails, GPT should revise until it passes.

Example self-checklist:

Before finalizing, GPT must confirm the output:

- Matches the requested format and structure

- Uses only verified or provided data (no made-up details)

- Avoids banned or restricted words/phrases

- Fits the requested tone, audience, and style

- Respects length or scope limits

- Is factually accurate, logically consistent, and natural in context

- Aligns with the supplied instructions, documents, or guidelines

Example instruction:

<validation>

Start with a checklist (3–7 bullets) of the steps you’ll take before providing the output.

Before finalizing, check that the output:

- Matches the requested format and structure

- Uses only verified or provided data (no made-up details)

- Avoids banned or restricted words/phrases

- Fits the requested tone, audience, and style

- Respects length or scope limits

If the output fails validation, revise until it passes. Then, generate the final version.

</validation>
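The same "check, then revise" loop can also run outside the model if you call GPT from a script. The sketch below is one possible shape for it, not OpenAI's method: `generate_with_checks` is a hypothetical function, and `fake_model` stands in for a real API call so the example runs on its own.

```python
from typing import Callable


def generate_with_checks(
    generate: Callable[[list[str]], str],
    checks: dict[str, Callable[[str], bool]],
    max_rounds: int = 3,
) -> str:
    """Ask for a draft, run every check, and retry with the failed check names."""
    failed: list[str] = []
    for _ in range(max_rounds):
        draft = generate(failed)  # failed checks are passed back as feedback
        failed = [name for name, passes in checks.items() if not passes(draft)]
        if not failed:
            return draft
    raise RuntimeError(f"Still failing after {max_rounds} rounds: {failed}")


checks = {
    "under 20 words": lambda text: len(text.split()) < 20,
    "no banned phrases": lambda text: "game-changing" not in text.lower(),
}

# Stand-in for a real model call: returns canned drafts in order.
drafts = iter([
    "Our game-changing report is out now.",
    "Our new marketing report is out now.",
])


def fake_model(failed: list[str]) -> str:
    return next(drafts)


final = generate_with_checks(fake_model, checks)
print(final)
```

Capping the number of rounds matters: without a limit, a check GPT can never pass would keep the loop running forever.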

#7 State “No external knowledge” when needed

One of the fastest ways to stop hallucinations about product features, pricing, or policies is to tell GPT explicitly not to invent anything beyond what you’ve given it.

What to do:

- Instruct GPT to use only provided materials (knowledge base, brand docs, or attached resources).

- If the info is missing, it should say: “Data not provided” instead of making something up.

- Add a clear line like: “Never invent features, claims, or numbers. Do not guess.”

- For brand safety, emphasize: “Use only verified details from official resources.”

Example:

<rules>

– Use only provided materials in the knowledge section.

– If info is missing: say “Data not provided.”

– Never invent features, claims, or numbers. Do not guess.

– Utilize only verified features and details from official [Brand] resources in the knowledge section.

</rules>
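If you build prompts in code, you can attach these rules to every request and watch for the fallback phrase in the reply. This is a minimal sketch under a few assumptions: `grounded_prompt` and `is_missing` are hypothetical helpers, the `<knowledge>` and `<task>` tag names are chosen for illustration, and the sample plan details are invented for the demo.

```python
MISSING = "Data not provided"

RULES = [
    "Use only provided materials in the knowledge section.",
    f'If info is missing: say "{MISSING}."',
    "Never invent features, claims, or numbers. Do not guess.",
]


def grounded_prompt(question: str, knowledge: str) -> str:
    """Bundle the rules, the allowed source text, and the question into one prompt."""
    rules = "\n".join(f"- {rule}" for rule in RULES)
    return (
        f"<rules>\n{rules}\n</rules>\n\n"
        f"<knowledge>\n{knowledge.strip()}\n</knowledge>\n\n"
        f"<task>\n{question.strip()}\n</task>"
    )


def is_missing(answer: str) -> bool:
    """True if the model used the agreed fallback instead of answering."""
    return MISSING.lower() in answer.lower()


# Invented sample data, only to show the shape of the prompt.
prompt = grounded_prompt(
    question="What does the Pro plan cost?",
    knowledge="The Pro plan includes 5 seats.",
)
print(prompt)
print(is_missing("Data not provided."))
```

Checking for the fallback phrase lets your script route the question to a human instead of publishing a gap.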

#8 Define clear boundaries

One of the simplest ways to keep GPT from running wild is to set explicit limits upfront. Boundaries can cover things like:

- Length (word count, bullets, paragraphs)

- Structure (tables, outlines, lists)

- Style (formal, casual, concise)

- Data sources (only official docs, no outside info)

- Actions (summarize vs. analyze vs. rewrite)

When you don’t define these constraints, GPT fills in the blanks with its own defaults, which often means overly long, vague, or off-track outputs.

Example boundary instructions:

<boundaries>

“Total length ≤300 words.”

“Summarize in 5 bullets.”

“Maximum 2 short paragraphs.”

</boundaries>

#9 Always teach GPT with examples – show what’s good, and what’s not

The fastest way to steer GPT into your brand’s voice is to show, not tell. Instead of hoping it “figures out” what good output looks like, give it crystal-clear examples and don’t be afraid to show bad ones too. When GPT sees both sides, it learns your boundaries much faster.

How to apply:

- Provide 1–2 good outputs that follow the brand voice, tone, and rules.

- Provide 1 bad output that breaks them (e.g., hallucinated features, banned phrases, awkward style).

- Tell GPT explicitly: “Follow the good example. Never imitate the bad one.”

Example:

<examples>

GOOD OUTPUT (approved style):

SE Ranking is a multi-tool SEO platform built for pros—agencies, in-house teams, and marketers who need to stay visible across search and AI-driven channels. Its Keyword Research tool is just one of 30+ features under the hood, with reliable insights on volume, intent, and difficulty. It integrates seamlessly with Rank Tracker and Content Editor, so you can move from data to action without the back and forth.

BAD OUTPUT (never use):

SE Ranking is a treasure chest of cutting-edge SEO tools. In today’s fast-paced digital landscape, it unleashes the potential of agencies and businesses by revolutionizing the way you do SEO.

Instruction: Follow the GOOD example. Never copy the BAD one.

</examples>

#10 Add your own samples

If you want GPT to sound like you, feed it your past work. The more you anchor GPT in your own examples, the more natural, on-brand, and reliable the outputs become. Over time, it starts treating your style as second nature.

How to apply:

- Collect strong samples from your best work – blog posts, LinkedIn updates, case studies, reports, client emails, product descriptions, campaign summaries, or even ad copy.

- Keep them in one spot (Knowledge section, shared doc, or inside a Project) so they’re easy to reuse.

- Reference them directly in your prompt:

“Write in the same style and tone as these samples.”

- Refresh regularly so GPT reflects your current brand voice, not an outdated one.

👉 This works especially well for long-term content strategies. Instead of explaining your style again and again, your samples do the heavy lifting.

#11 Add an assumption rule

One of the biggest reasons GPT hallucinates? It guesses when the instructions aren’t clear. That guess sneaks into the output as if it were fact, which is exactly what you don’t want.

The fix: make it spell out assumptions. This way, hidden guesses become visible, and you can decide whether they’re correct before the final output.

How to apply:

- Tell GPT: “If the context is unclear, stop and ask before continuing.”

- If it’s only partially clear, instruct it to add an Assumption note at the top:

Assumption: <short line>

- Review the assumption before moving forward. If it’s wrong, GPT revises.

Example:

– If the context is unclear, stop and ask.

– If partially clear, add at the top: Assumption: <short line>.
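When replies are handled by a script, the assumption note is easy to pull out for review. The sketch below assumes GPT followed the rule and put a single `Assumption:` line at the very top. `split_assumption` is a hypothetical helper, and the sample reply is made up.

```python
from typing import Optional


def split_assumption(response: str) -> tuple[Optional[str], str]:
    """Separate a leading 'Assumption: ...' line from the rest of the reply."""
    first_line, _, rest = response.lstrip().partition("\n")
    if first_line.lower().startswith("assumption:"):
        return first_line.split(":", 1)[1].strip(), rest.lstrip()
    return None, response


# Made-up reply, only to show the parsing.
reply = "Assumption: the audience is B2B marketers.\n\nHere is the post."
assumption, body = split_assumption(reply)
print("Review this first:", assumption)
print(body)
```

If `assumption` is not `None`, show it to a person before the body is used anywhere.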

#12 Lock context boundaries

One of the easiest ways GPT drifts into hallucinations is by pulling from its own memory instead of sticking to what you provided. That’s how you end up with made-up features, wrong pricing, or “facts” that were never in your docs.

The fix: lock its context boundaries. Tell GPT exactly where it can (and cannot) pull information from.

What to do:

- Always define the allowed info source(s).

- Add an explicit “no external knowledge” rule.

- If info is missing, require GPT to say: “Data not provided.”

Example instruction:

<instruction>

Use only the attached [brand] resources. Do not use your own knowledge, assumptions, or external data. If information is missing, respond with: “Data not provided.”

</instruction>

#13 Force stop conditions

Sometimes GPT tries to “be helpful” by filling gaps with generic fluff or invented details. The fix: tell it when to stop.

If your prompt doesn’t set boundaries, GPT assumes it should always produce something. That’s risky when key info is missing. By defining stop conditions, you force GPT to pause instead of making things up.

What to do:

- Explicitly say what to do if data is missing.

- Tell GPT: ask instead of guessing.

- Add a hard “do not generate” rule when context is incomplete.

Example instruction:

<instruction>

If article/section/product information is missing:

STOP. Do not generate text.

Instead, ask for clarification.

</instruction>
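In an automated workflow you can enforce the same stop condition before the prompt is even sent. This is a sketch of that idea rather than a built-in ChatGPT feature: `preflight` and the field names in the sample brief are hypothetical.

```python
from typing import Optional


def preflight(brief: dict[str, str], required: tuple[str, ...]) -> Optional[str]:
    """Return a clarification request if required info is missing, else None."""
    missing = [name for name in required if not brief.get(name, "").strip()]
    if missing:
        return "Please provide: " + ", ".join(missing)
    return None


# Hypothetical brief with one empty field.
brief = {"product": "SEO platform", "section": ""}
question = preflight(brief, required=("product", "section"))
print(question)
```

Only call the model when `preflight` returns `None`; otherwise send the question back to whoever wrote the brief.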

#14 Define GPT’s role

When GPT knows its job (and what it’s not supposed to do), outputs stay focused and relevant. Without role definition, responses often drift into vague or off-track territory.

Role-setting keeps GPT “in character.” It narrows the scope, cuts down on guesswork, and helps the model stick to your goals instead of wandering into unwanted directions.

What to do:

- Set the role at the very top of your instructions.

- Clarify the scope: what GPT should cover and what it should avoid.

- State the end-goal (e.g., write product insertions, draft LinkedIn posts, analyze SEO data).

Example instruction:

<Role>

Act as a social media strategist for a B2B SaaS brand. Your task is to draft LinkedIn posts that turn complex product insights into short, engaging updates for marketing professionals.

</Role>

<NotRole>

Do not create content for Instagram or TikTok. Do not add hashtags unless explicitly asked. Do not make up statistics or results.

</NotRole>

💡 Bonus 1: Built-in tools that make prompting easier

On top of the hacks we shared, ChatGPT already has a few built-in features that can make your life easier:

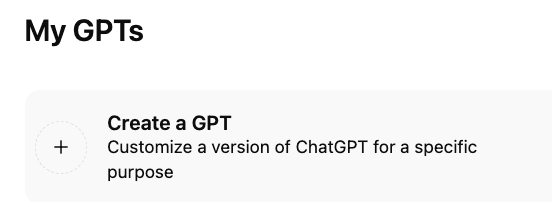

- Custom GPTs – Think of these as your reusable “mini-models.” You can save prompt rules, tone, and examples into one package so you don’t have to rewrite them every time. Great for consistent outputs across teams.

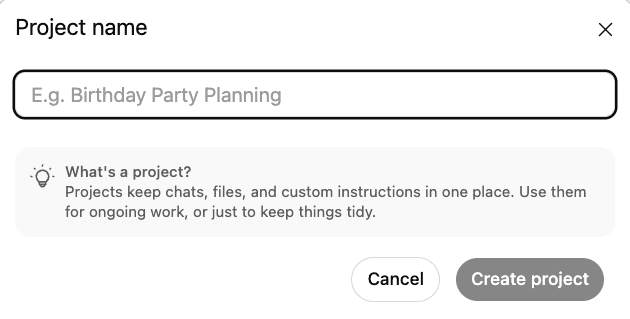

- Projects – Instead of juggling scattered chats, group your related prompts, docs, and instructions into a single project. That way, GPT always stays in the right context without losing track mid-workflow.

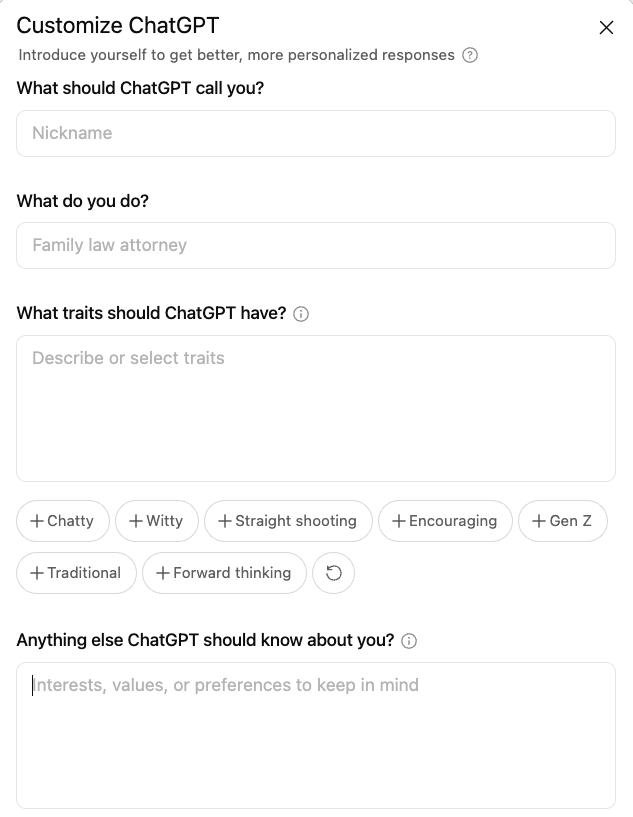

- Customize ChatGPT – Set your default brand tone, style, and preferences once, and GPT applies them automatically across every conversation.

💡Bonus 2: Plug real SEO data into ChatGPT via SE Ranking’s MCP server

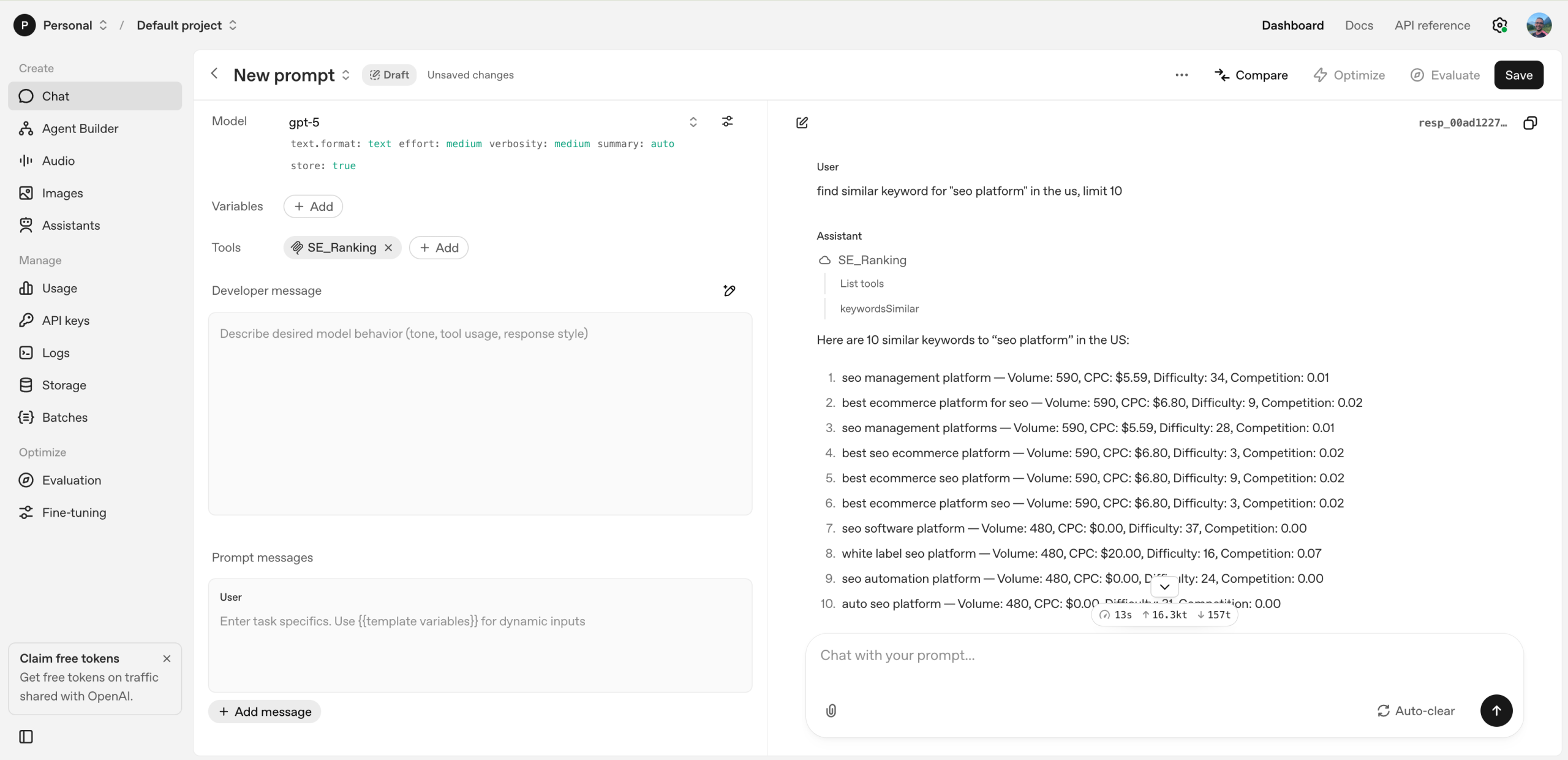

Want to skip the copy-paste routine and get live SEO insights directly in your AI assistant? SE Ranking now offers an MCP server via API that delivers real-time keyword, backlink, and competitor data into tools like ChatGPT, Claude, and Gemini.

Just type a prompt like:

“Pull the top 10 organic keywords for domain.com.”

Your assistant instantly connects to SE Ranking’s data and returns current results.

Explore the SEO API + MCP integration in this step-by-step guide.

Final thoughts

At the end of the day, prompts decide whether ChatGPT-5 works like a helpful teammate or a random text generator. The hacks we’ve covered give you a framework to cut hallucinations and steer outputs toward something you can actually use. And with useful built-in features, you’ve got extra support to make things faster and more consistent.

👉 Try one or two hacks in your next prompt, combine them with these built-in tools, and you’ll see a night-and-day difference in the quality of your results!