AI Mode volatility: Results from our local search test

In our first round of AI Mode research, we found that volatility was one of its defining features. This naturally raised another question: how does that volatility impact local searches?

For example: if you search “restaurants near me” in New York and repeat the same query a few minutes later, do you get the same results? If someone in Denver searches at the same time, will their results match yours? And what happens if the query specifies a city name instead of just “near me”?

What we learned gave us a clearer picture of how AI Mode behaves in real searches and also helped us improve the way we approach positions in the AI Mode Tracker.

Now, let’s move on to our findings.

- AIM results for general local queries are highly volatile, even in the same city. Repeating a local search like “restaurants near me” in the same city produces very different results: on average, only about 35% of domains repeat, so nearly two-thirds vanish between runs.

- General local queries look completely different across cities. If two people in different cities search for something like “gyms near me,” they’ll see almost entirely different results. Only about 24% of websites match, and the exact pages (URLs) are almost never the same.

- Location-specific queries make AIM results much more consistent. Adding a city name (e.g., “restaurants in Denver”) raises domain overlap by more than half, with about 50–55% of websites repeating across AIM responses.

- AIM volatility patterns are consistent across all cities. The volatility pattern was nearly identical across all five cities tested (New York, Los Angeles, Washington, Houston, and Denver). What mattered wasn’t geography but phrasing: queries with a location reference produced far more stable results.

- Domains featured in AIM results are more stable than URLs. Even when AIM repeats the same websites, it often rotates through different pages. For general local queries, about 35% of domains are repeated, but only 18–20% of URLs. This shows that AIM not only shifts between sources but also varies the specific content it surfaces.

- Geography matters only when the search query is vague (without an explicit location reference). For broad “near me” searches, shifting the user’s location increases domain-level volatility by 10–12 percentage points. But once a query names a specific place, the impact of geography shrinks dramatically, nudging volatility by only 2–6 percentage points.

Before examining how domains and URLs vary across AIM responses, let’s first take a look at the types of queries we used in this test:

1. General local queries: intent-driven searches using “near me” or “nearby,” without naming a location (e.g., gyms near me, urgent care nearby, bookstores close to me, late-night pizza near me).

2. Location-specific queries: searches with explicit geographic context (e.g., restaurants in New York, pet stores in Denver, Memorial City Mall in Houston, famous coffee shops in LA, Saturday farmers market in DC).

For more examples and details on these types of search queries, check the methodology section at the end of this post.

This distinction matters since AIM behaves very differently depending on whether a query is general or tied to a specific place.

Domain-level results

At the domain level (looking at whether the same websites were cited, regardless of the exact page), AIM’s behavior splits sharply depending on query phrasing.

General local queries

If you search for “restaurants near me” several times in New York, you might expect most of the same sites to appear each time. Instead, AIM reshuffled results almost every run. The same happened with “gas station nearby” in LA, “coffee shops near me” in Chicago, and other general local queries.

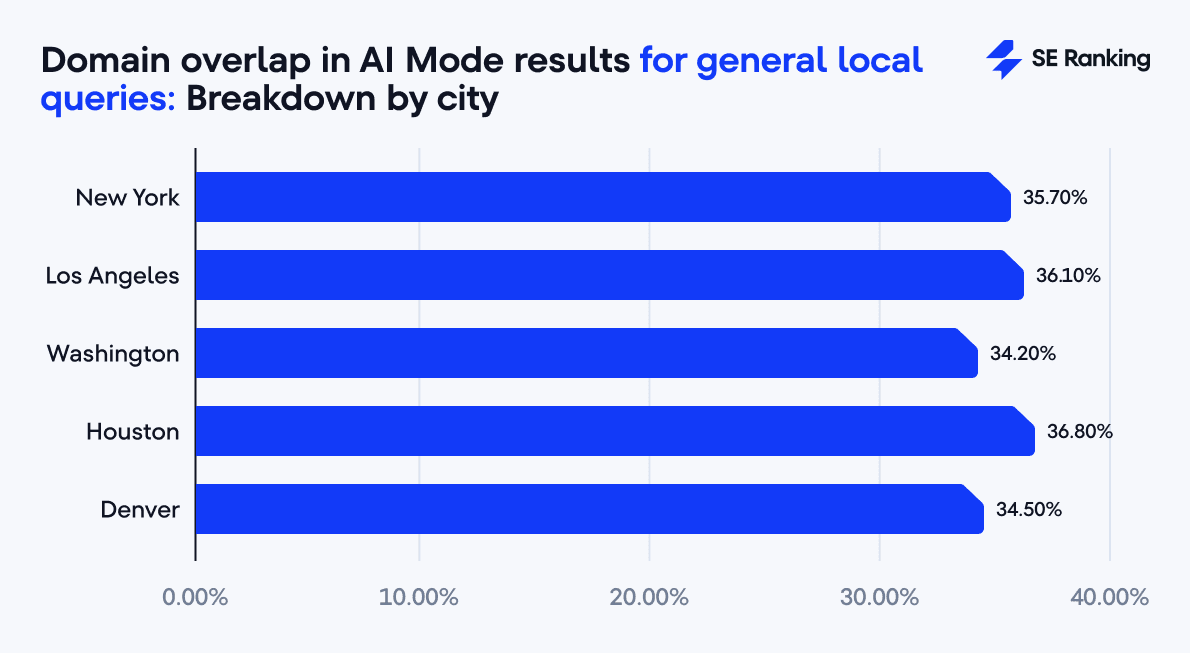

The numbers below show the average overlap (the share of domains that repeated across runs) and volatility (the share that changed, i.e., 100% minus overlap) for the five cities tested:

- New York: 35.7% overlap (64.3% volatility)

- Los Angeles: 36.1% overlap (63.9% volatility)

- Washington: 34.2% overlap (65.8% volatility)

- Houston: 36.8% overlap (63.2% volatility)

- Denver: 34.5% overlap (65.5% volatility)

That means more than 60% of the domains disappear between runs, even with the same user, city, and query.
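
To make the two metrics concrete, here is a minimal Python sketch of one way to compute them. The post does not spell out its exact formula, so this assumes that overlap is the share of all cited domains that appear in every run, and that volatility is 100 minus overlap (which matches the paired figures above). The function names and the example domains are hypothetical.

```python
def overlap_pct(runs: list[set[str]]) -> float:
    """Percentage of all cited items that appear in every run.

    Assumption: overlap = |intersection| / |union| across the runs.
    """
    if not runs:
        raise ValueError("at least one run is required")
    union = set().union(*runs)
    if not union:
        return 0.0
    common = set.intersection(*runs)
    return 100.0 * len(common) / len(union)


def volatility_pct(runs: list[set[str]]) -> float:
    """Volatility as the complement of overlap (100 - overlap)."""
    return 100.0 - overlap_pct(runs)


# Hypothetical domains cited in three runs of one query from one city.
runs = [
    {"site-a.example", "site-b.example", "site-c.example", "site-d.example"},
    {"site-a.example", "site-c.example", "site-e.example", "site-f.example"},
    {"site-a.example", "site-c.example", "site-d.example", "site-g.example"},
]

# Two of the seven distinct domains appear in all three runs.
print(f"overlap: {overlap_pct(runs):.1f}%")
print(f"volatility: {volatility_pct(runs):.1f}%")
```

Other definitions (for example, averaging pairwise comparisons between runs) would give different numbers, so treat this as an illustration of the idea rather than the exact method behind the figures.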

Volatility grew when we ran the same general local queries across multiple cities:

- New York–Los Angeles–Washington group: 23.7% overlap (76.3% volatility)

- Los Angeles–Denver–Houston group: 24.1% overlap (75.9% volatility)

Here, fewer than one in four domains carried over. A New Yorker and a Houstonian searching “restaurants near me” will likely see AIM results based on data from different websites.

This means the user’s geographic location has a significant impact on AIM results for general local queries.

Location-specific queries

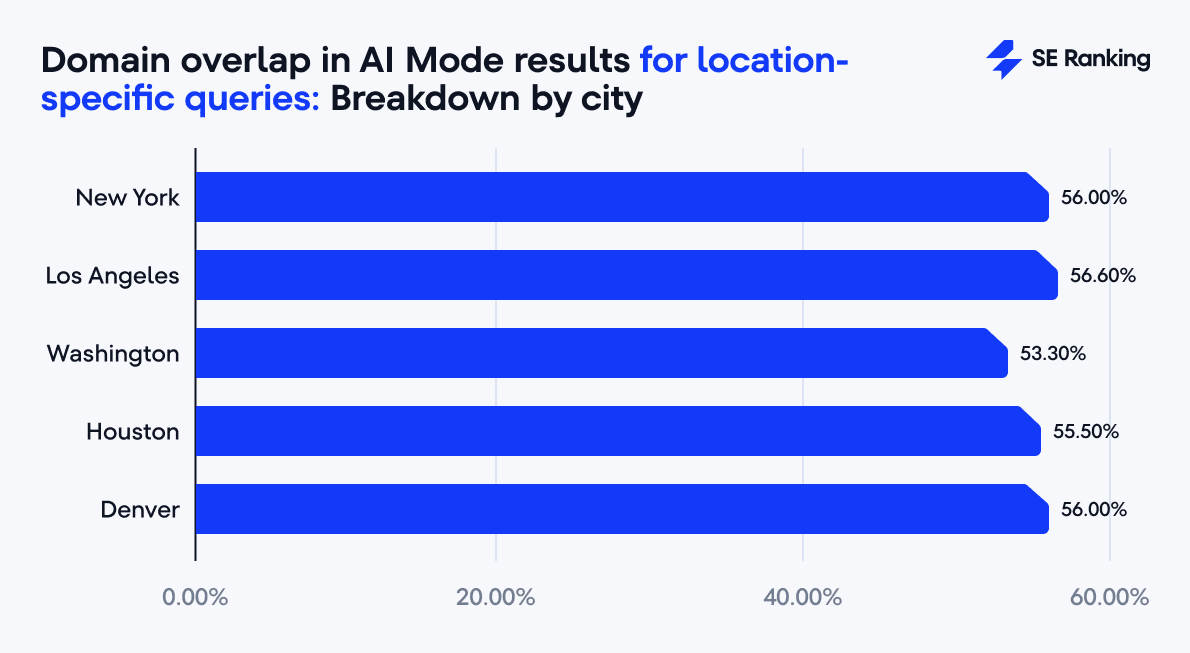

When we added a city name (e.g., “restaurants in Denver”), the pattern flipped. Domain overlap rose by more than half compared to general local queries, from roughly 35% to roughly 55%:

- New York: 56% overlap (44% volatility)

- Los Angeles: 56.6% overlap (43.4% volatility)

- Washington: 53.3% overlap (46.7% volatility)

- Houston: 55.5% overlap (44.5% volatility)

- Denver: 56% overlap (44% volatility)

So, by adding the city name to the query, you can expect AIM to show a more consistent set of domains in its responses.

Even across locations, city-specific phrasing held up well:

- New York–Los Angeles–Washington group: 48% overlap (52% volatility)

- Los Angeles–Denver–Houston group: 49.2% overlap (50.8% volatility)

So while AIM still adjusts its results based on the user’s location, the effect is much smaller once the query includes a location reference.

Distribution of domain overlap

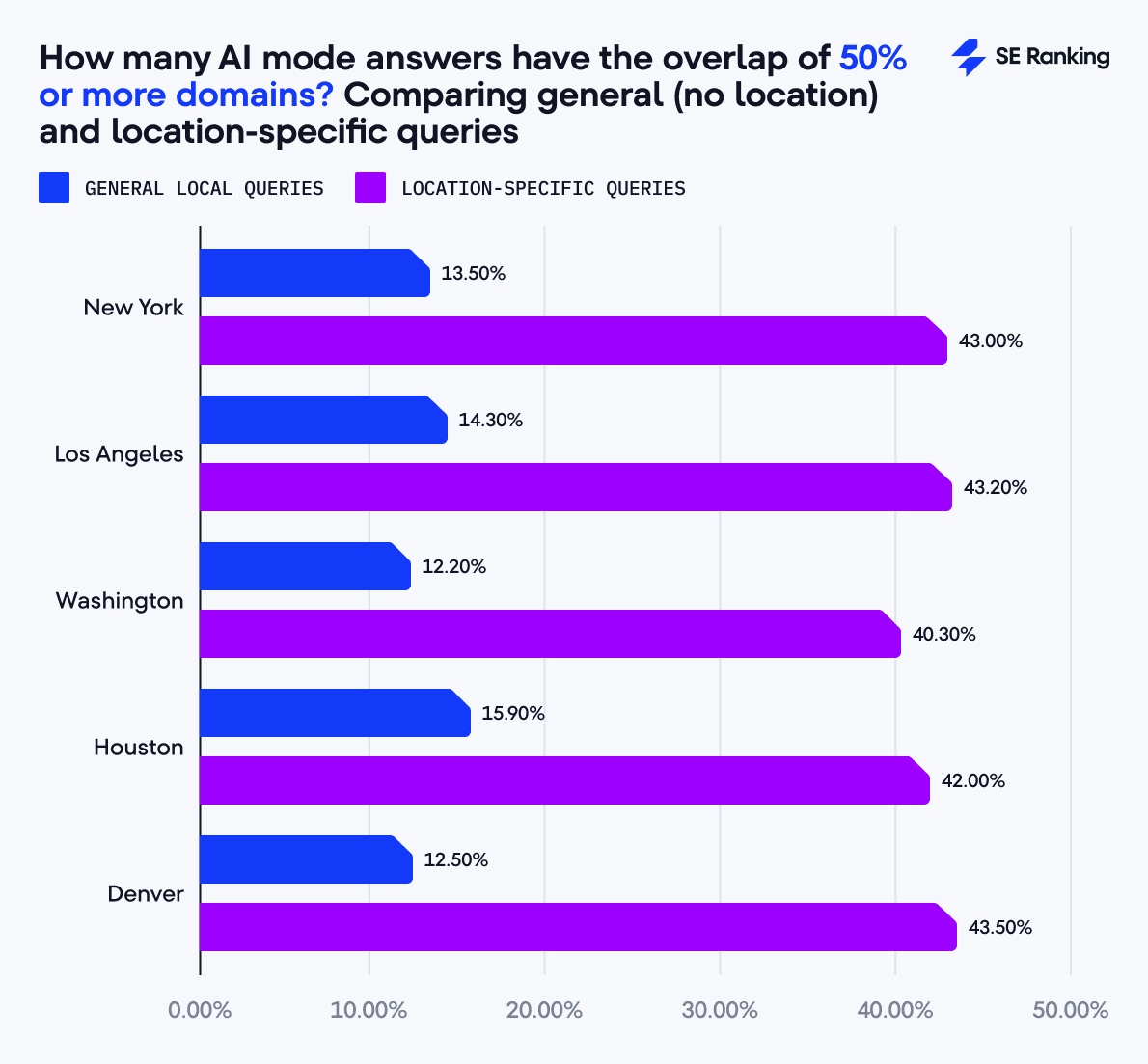

When we look at how domains are distributed across AIM responses, the difference is striking.

For general searches with local intent (say, “best coffee shops near me”), only about 12–16% of keywords return more than half of the same websites when the query is repeated in the same city. In other words, the results shift around quite a bit if the location isn’t spelled out.

But when the query is tied to a specific place (e.g., “best coffee shops in Denver”), that share climbs to 40–44%.

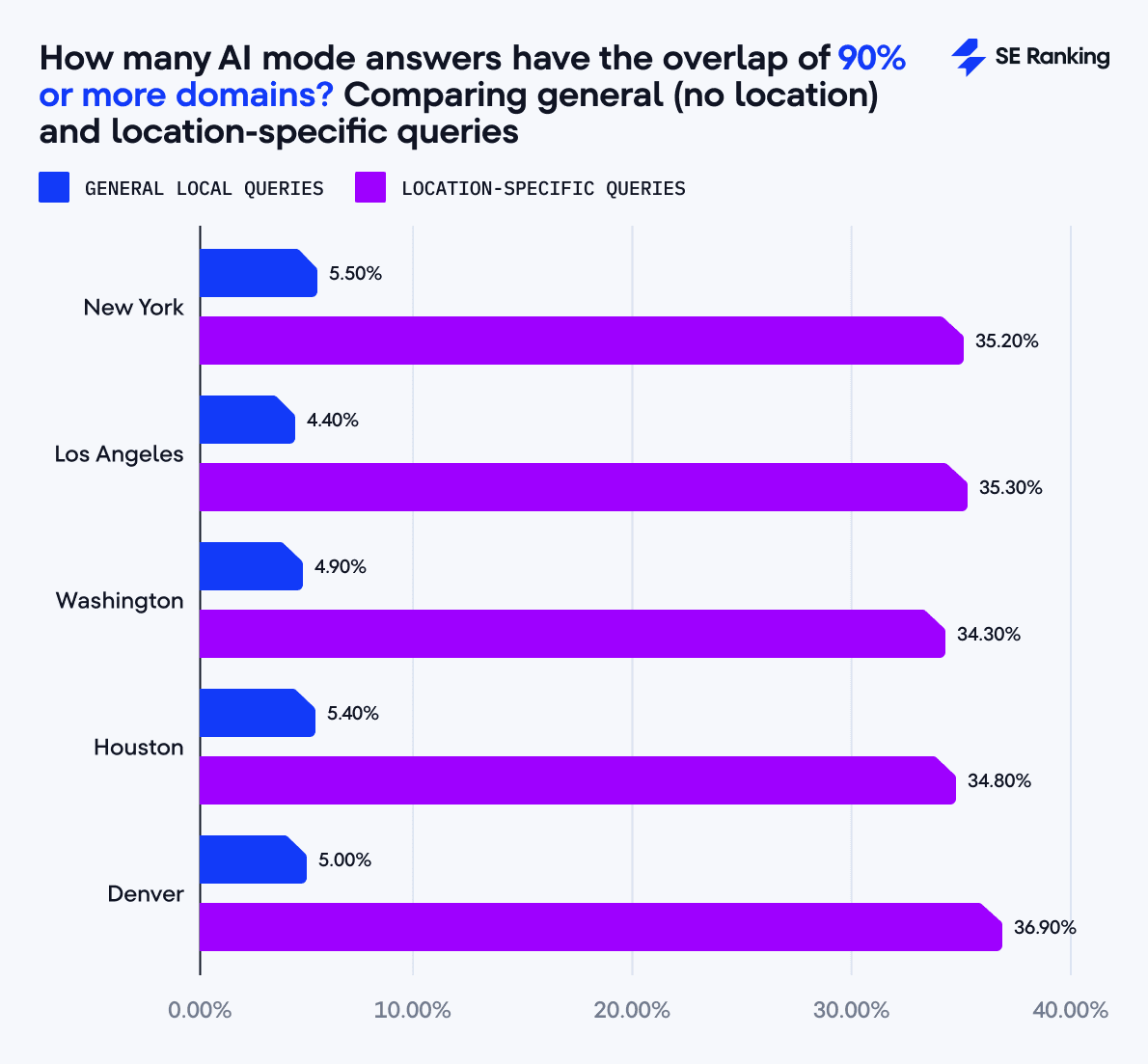

Even more remarkably, in more than a third of these city-specific searches (35–37%), domain overlap exceeds 90%. That means AIM is essentially giving you the same set of domains each time.

AIM can deliver consistent, stable results, but only when the query makes the location explicit. Users who name a place in their query (Denver, LA, Arizona, etc.) tend to see more consistent results than those who leave the location implicit. If the location isn’t specified, the system has more room for interpretation, and the domains it surfaces are much more likely to change from one run to the next.
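
The distribution figures in this section are shares of keywords whose overlap clears a threshold. A minimal Python sketch of that calculation follows, assuming a strict "greater than" comparison (the post says "over half" and "beyond 90%" here, but does not state whether the boundary is inclusive). The function name and the per-keyword values are hypothetical.

```python
def share_above(overlaps: list[float], threshold: float) -> float:
    """Percentage of keywords whose overlap is strictly above the threshold."""
    if not overlaps:
        raise ValueError("at least one keyword is required")
    hits = sum(1 for value in overlaps if value > threshold)
    return 100.0 * hits / len(overlaps)


# Hypothetical per-keyword domain overlap values, in percent.
per_keyword_overlap = [12.5, 40.0, 66.7, 95.0, 100.0, 28.6, 55.0, 91.0]

# Share of keywords returning more than half of the same domains.
print(share_above(per_keyword_overlap, 50.0))
# Share of keywords with overlap beyond 90%.
print(share_above(per_keyword_overlap, 90.0))
```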

URL-level results

At the URL level, volatility becomes even more visible. Here, AIM isn’t just swapping websites but entire pages.

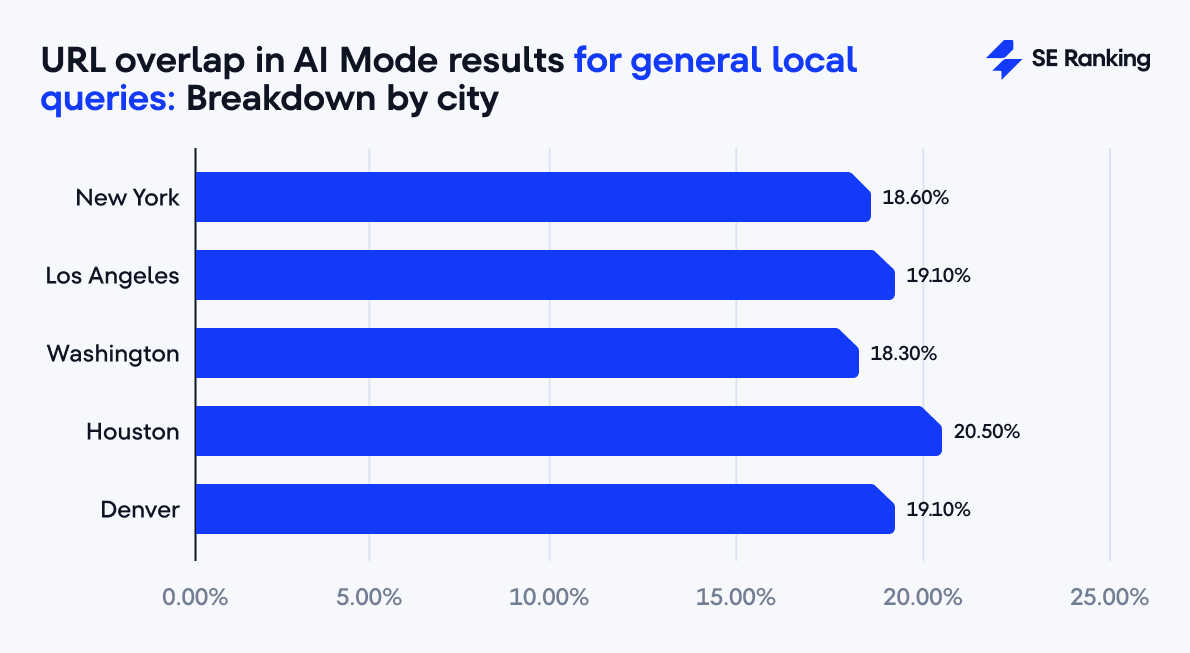

General local queries

Within the same city, URL overlap hovered around 18–20%:

- New York: 18.6% overlap (81.4% volatility)

- Los Angeles: 19.1% overlap (80.9% volatility)

- Washington: 18.3% overlap (81.7% volatility)

- Houston: 20.5% overlap (79.5% volatility)

- Denver: 19.1% overlap (80.9% volatility)

That translates to volatility rates of 80–82%. Simply put, four out of five URLs disappeared between runs for the same user, location, and search query.

Across cities, stability all but collapsed:

- New York–Los Angeles–Washington group: 1.6% overlap (98.4% volatility)

- Los Angeles–Denver–Houston group: 2% overlap (98% volatility)

In other words, AIM rebuilt its answer set from scratch. The chance of two people in different cities seeing the same URLs was close to zero.

This makes sense: if you’re in Los Angeles, you’d expect local suggestions, not tips from a Denver or Atlanta guide. AIM places local context at the center of its results, so near-zero URL overlap across cities is the expected outcome.

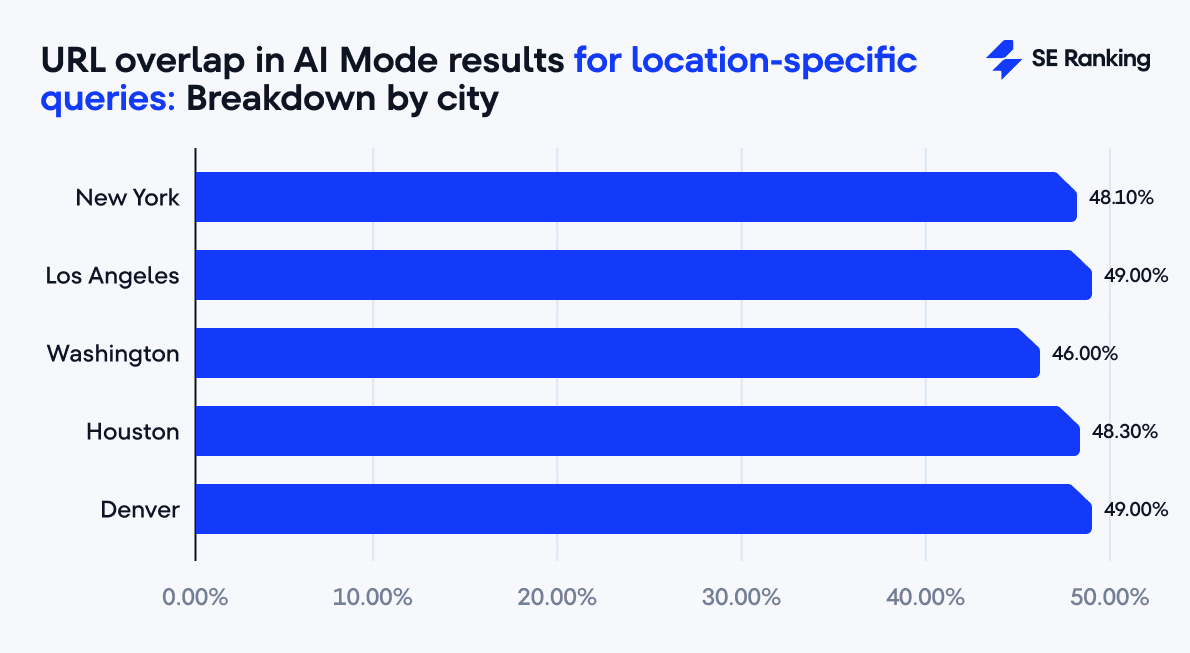

Location-specific queries

Once again, adding a city name improved stability, this time more than doubling URL overlap:

- New York: 48.1% overlap (51.9% volatility)

- Los Angeles: 49% overlap (51% volatility)

- Washington: 46% overlap (54% volatility)

- Houston: 48.3% overlap (51.7% volatility)

- Denver: 49% overlap (51% volatility)

Now, nearly half the URLs are repeated from run to run.

Even across user locations, city-specific queries retained relatively strong stability:

- New York–Los Angeles–Washington group: 41.9% overlap (58.1% volatility)

- Los Angeles–Denver–Houston group: 44% overlap (56% volatility)

This is far from the near-zero overlap of general local queries across cities.

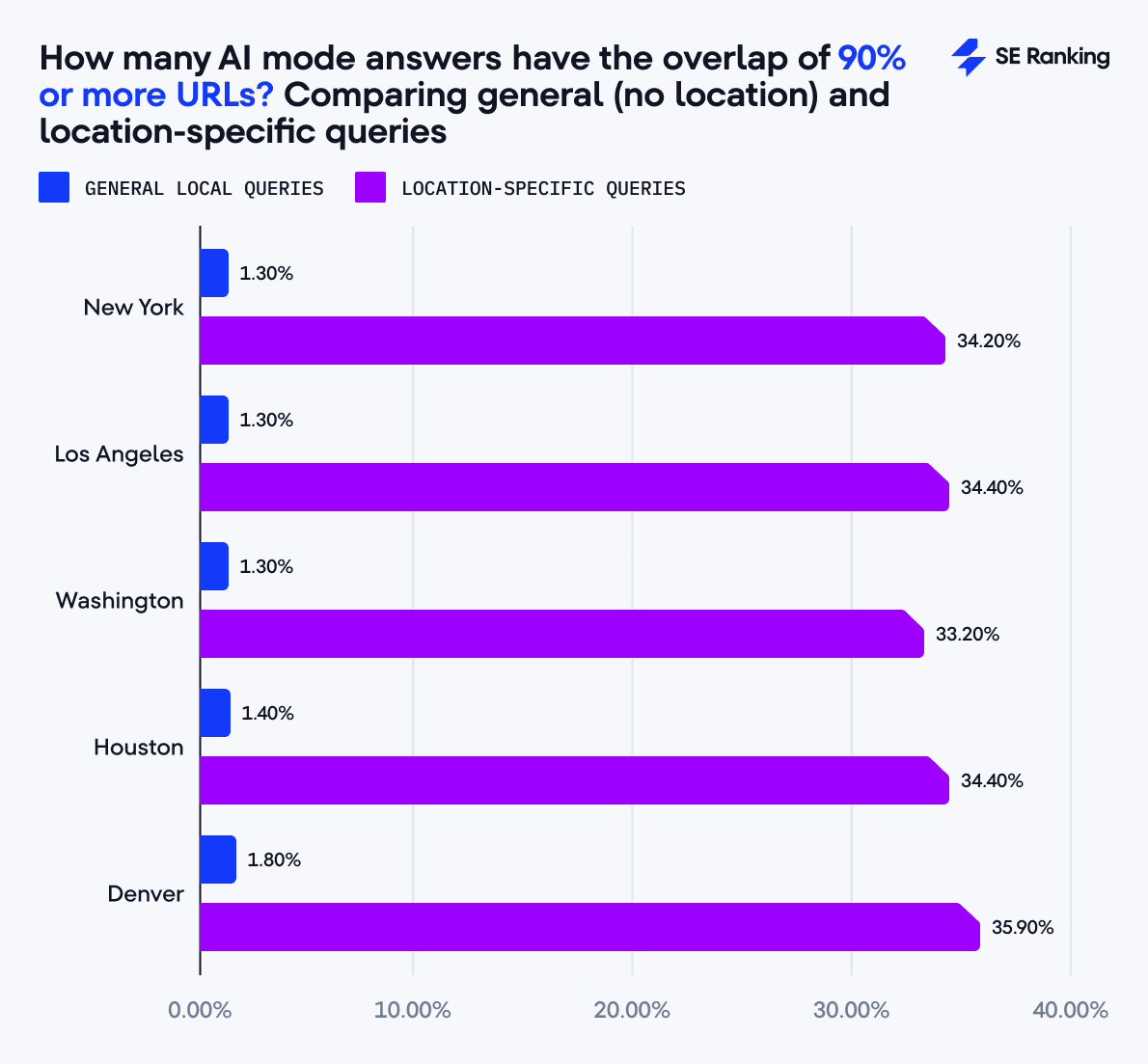

Distribution of URL overlap

At the URL level, the contrast between the two query types is just as sharp.

For general local queries, only about 1.3–1.8% of keywords reached 90% or higher overlap. In practice, that means the exact pages AIM surfaces usually shift almost completely between runs.

When the query references a specific location, however, stability improves dramatically. In these cases, about 34–36% of keywords hit the 90%+ overlap mark. This means AIM is far more likely to return the same set of pages consistently when the location is explicit.

AIM doesn’t just shuffle which domains you see. It often changes the exact pages as well. But once the city is part of the query, the results become far more predictable.

What drives AIM volatility (and what doesn’t)?

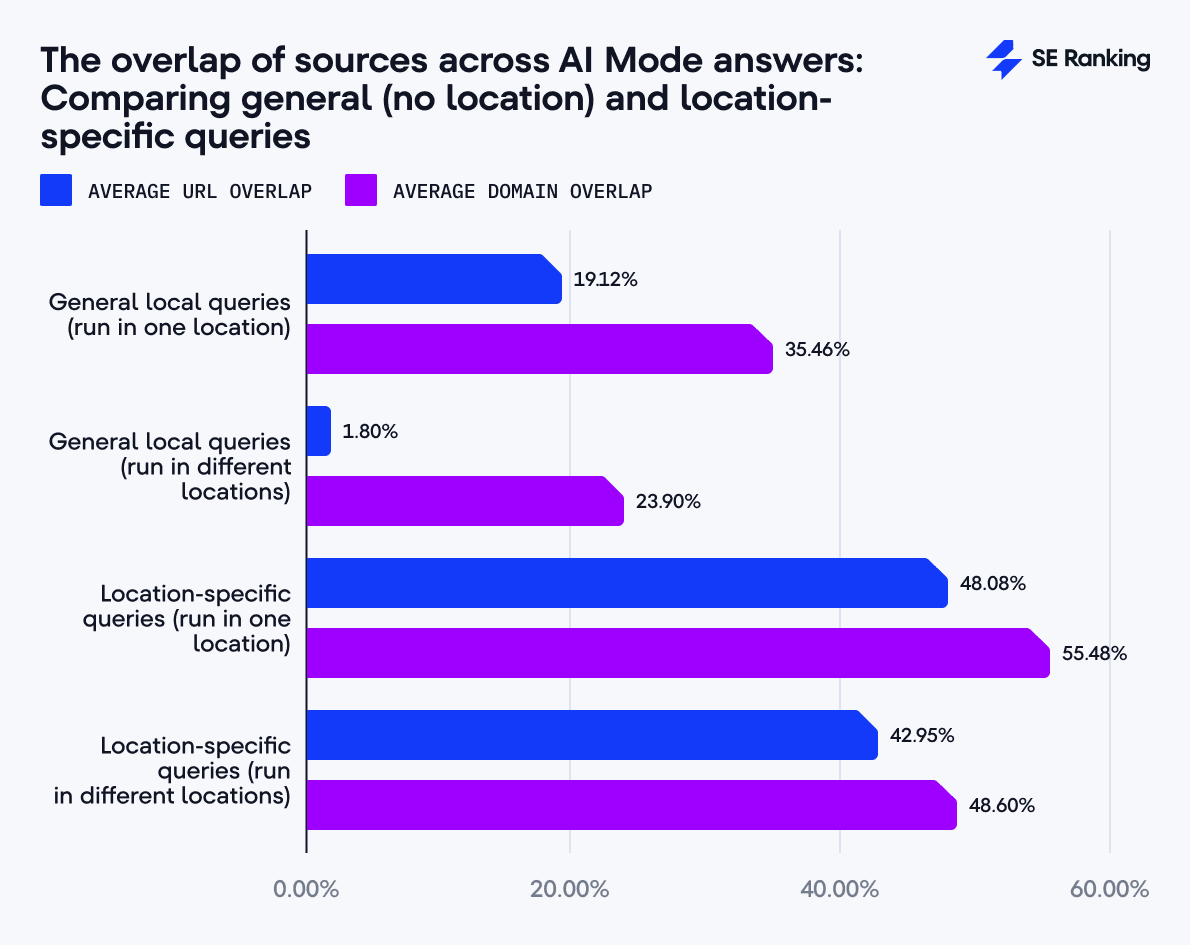

Let’s first take a look at the overlap of sources across AIM answers. The table shows how much results overlap at the URL and domain levels, depending on whether queries are general or location-specific, and whether they’re run in the same location or across different ones.

General local queries are highly volatile. Repeated in the same location, they share only about 19% of URLs and 35% of domains; across different locations, the overlap drops to just 2% of URLs and 24% of domains. Location-specific queries are far more stable. Within the same location, they share about 48% of URLs and 55% of domains, and even across locations, the overlap remains relatively high at 43% of URLs and 49% of domains.
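
These rounded averages can be reproduced from the per-city and per-group figures listed earlier in the post. The sketch below assumes simple unweighted means; the post does not state how its summary figures were aggregated, so small differences from the ranges quoted in the text are possible. The dictionary layout and the function name are illustrative.

```python
from statistics import fmean

# Overlap percentages quoted in this post: the five per-city figures ("same")
# and the two three-city groups ("cross") for each query type and level.
OVERLAP = {
    ("general", "domain"): {"same": [35.7, 36.1, 34.2, 36.8, 34.5], "cross": [23.7, 24.1]},
    ("general", "url"): {"same": [18.6, 19.1, 18.3, 20.5, 19.1], "cross": [1.6, 2.0]},
    ("specific", "domain"): {"same": [56.0, 56.6, 53.3, 55.5, 56.0], "cross": [48.0, 49.2]},
    ("specific", "url"): {"same": [48.1, 49.0, 46.0, 48.3, 49.0], "cross": [41.9, 44.0]},
}


def summarize(figures: dict[str, list[float]]) -> dict[str, float]:
    """Unweighted mean overlap for each condition, plus the drop in percentage points."""
    same = fmean(figures["same"])
    cross = fmean(figures["cross"])
    return {"same": same, "cross": cross, "drop_points": same - cross}


summary = {key: summarize(figures) for key, figures in OVERLAP.items()}

for (query_type, level), row in summary.items():
    print(
        f"{query_type:8} {level:6} same={row['same']:.1f}% "
        f"cross={row['cross']:.1f}% drop={row['drop_points']:.1f} points"
    )
```

Note that the drop is expressed in percentage points (a difference between two percentages), not as a relative change.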

Now, let’s look at the bigger picture: what these overlaps tell us about when AIM gives consistent results and when it doesn’t.

1. Query phrasing is the biggest driver.

Specifying the location makes AIM far more predictable. Broad prompts like “restaurants near me” produce unstable results, with domain overlap in the mid-30% range. Adding a location reference (whether it’s a city, a neighborhood, or even a specific place like “restaurants near the White House”) raises overlap sharply: by more than half at the domain level and more than twofold at the URL level.

2. User location only matters for general local queries.

Geography plays a role when the query is vague. For “near me” and similar searches, moving between cities reduces domain overlap by 10–12 percentage points (from about 35% to about 24%). But once the query includes a city name, the effect of user location shrinks dramatically, shifting overlap by only 2–6 percentage points.

3. Domains are steadier than URLs.

Even when AIM repeats the same websites, it often cycles through different pages. For general local queries, more than 80% of URLs change between runs, compared to roughly 60–65% turnover at the domain level. That’s only natural, since AIM shifts sources for local queries depending on local context (what’s nearby, region-specific content, or what’s most relevant in that area at the time).

4. Patterns are consistent across cities.

The results were strikingly similar in New York, Los Angeles, Washington, Houston, and Denver. No city proved more stable than another, which means the volatility patterns aren’t tied to local markets. They’re built into how AIM handles local queries.

5. Distribution of overlap reinforces the trend.

Looking at overlap distributions highlights just how different the two query types are. For general local queries (without a specific location mentioned), only 12–16% of keywords returned more than half the same domains, and just 1–2% reached 90%+ overlap at the URL level. In contrast, city-specific queries were far steadier: 40–44% of keywords crossed the 50% domain threshold, and 34–36% hit 90%+ overlap at the URL level.

In short: volatility depends more on how the query is phrased than on where it is run. General local queries leave AIM with a broad context to interpret, so the results vary widely. Once a city name is included, however, the search space narrows. This reduces ambiguity, anchors AIM to a consistent pool of sources, and minimizes the impact of user location.

Research methodology

The dataset of 5,000 keywords was divided into two equal groups:

- General local queries (e.g., restaurants near me, best barbershops nearby, restaurant nearby my location, office supply stores close to me, best place for chicken wings near me, water births in hospitals near me, bar near me open now).

- Location-specific queries with explicit locations (e.g., restaurants in New York, pet stores in Denver, Memorial City Mall in Houston TX, famous coffee shops in LA, public pools in NYC, NYC dog-friendly bars, Saturday farmers market in DC, subway in Washington DC, places to go out in Denver, and so on).

Each group contained 2,500 queries. For city-specific queries, we selected five cities and assigned 500 queries to each:

- New York, New York, US

- Los Angeles, California, US

- Washington, District of Columbia, US

- Houston, Texas, US

- Denver, Colorado, US

Each query was run 15 times (three runs in each of the five cities) to capture geographical and temporal variation.

We compared the results under four conditions:

- General local queries tested from the same location – e.g., “best pizza near me” run three times in New York.

- General local queries tested from different locations – e.g., “best pizza near me” run in New York, Denver, and LA.

- Location-specific queries tested from the same location – e.g., “best pizza in New York” run three times from New York.

- Location-specific queries tested from different locations – e.g., “best pizza in New York” run from LA, Washington, and Houston.
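
The run schedule and the comparison groups described above can be sketched in Python as follows. The city list, the three runs per city, and the two cross-city groups come from this post; the function names are hypothetical, and pairing cross-city runs by run number is an assumption, since the post does not say which runs were compared across cities.

```python
from itertools import product

CITIES = ["New York", "Los Angeles", "Washington", "Houston", "Denver"]
RUNS_PER_CITY = 3
# Cross-city groups reported in this post.
CITY_GROUPS = [
    ("New York", "Los Angeles", "Washington"),
    ("Los Angeles", "Denver", "Houston"),
]


def run_schedule(query: str) -> list[tuple[str, str, int]]:
    """All (query, city, run_number) combinations for one query."""
    run_numbers = range(1, RUNS_PER_CITY + 1)
    return [(query, city, run) for city, run in product(CITIES, run_numbers)]


def same_location_runs(schedule, city):
    """Repeated runs of the query from a single city."""
    return [entry for entry in schedule if entry[1] == city]


def cross_location_runs(schedule, group, run_number=1):
    """One run per city in the group (assumption: matched by run number)."""
    return [entry for entry in schedule if entry[1] in group and entry[2] == run_number]


schedule = run_schedule("best pizza near me")
print(len(schedule))  # 5 cities x 3 runs
print(same_location_runs(schedule, "New York"))
print(cross_location_runs(schedule, CITY_GROUPS[0]))
```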

Overlap was measured at two levels:

- Domain level: whether the same websites appeared in the AIM results.

- URL level: whether the exact same pages appeared in the AIM results.
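
The difference between the two levels can be illustrated with a short Python sketch. It assumes a domain is simply the URL’s hostname with any leading “www.” removed (a simplification that ignores other subdomains) and uses the same intersection-over-union overlap as an assumed metric. The URLs and function names are hypothetical.

```python
from urllib.parse import urlsplit


def to_domain(url: str) -> str:
    """Hostname of a URL without a leading 'www.' (simplified notion of a domain)."""
    host = (urlsplit(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def overlap_pct(runs: list[set[str]]) -> float:
    """Percentage of all items that appear in every run (assumed metric)."""
    union = set().union(*runs)
    if not union:
        return 0.0
    return 100.0 * len(set.intersection(*runs)) / len(union)


# Hypothetical URLs cited in two runs of the same query.
run_1 = ["https://www.site-a.example/best-pizza", "https://site-b.example/guide/pizza"]
run_2 = ["https://site-a.example/late-night-pizza", "https://site-b.example/guide/pizza"]

url_runs = [set(run_1), set(run_2)]
domain_runs = [{to_domain(url) for url in run} for run in (run_1, run_2)]

# Same two websites in both runs, but only one exact page is shared.
print(f"URL overlap: {overlap_pct(url_runs):.1f}%")
print(f"Domain overlap: {overlap_pct(domain_runs):.1f}%")
```

Because several pages map to one domain, domain overlap can never be lower than URL overlap under this definition, which is consistent with domains appearing steadier than URLs throughout the results.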

This approach allowed us to isolate the effects of query phrasing and user geography while controlling for timing and repetition.

Disclaimer: While we aim to provide the most objective interpretations, we recognize that other valid perspectives may also exist.

Conclusion

Our tests show that AIM volatility is real, but there are clear factors that shape these AI responses.

When queries are vague, the system has too much freedom, and the results shift dramatically. But when the query clearly names a location (and especially when that location matches the user’s own), the results settle into a stable pattern.