SE Ranking’s new Website Audit: a blazing-fast, customizable solution that checks every issue that matters

We are extremely excited to share some great news with you: SE Ranking’s new site auditing tool is finally live. Please welcome Website Audit 2.0. It crawls 1,000 pages in just 2 minutes, checks every page against 110 parameters, adjusts to your needs, and offers ready-made solutions for detected issues.

We’ve revamped the tool inside and out, so it also boasts a modern, minimalist design. You’ll see what I mean the moment you start crawling your website: those jumping figures are on my three-things-to-watch-forever list along with burning fire and flowing water 🙂

Website Audit has gone through several stages of technical trials, so you can rely on it when it comes to your website’s health. Now, how about opening the tool and launching an audit, then coming back here to continue reading? Our tool will most probably complete your website crawl by the time you get through the text. And if you’ve got a huge website, no big deal: the updated Website Audit will crawl it anyway, it just may take a tad longer.

If you are not yet an SE Ranking user, you can test out our new Website Audit under the free trial. Once you sign up, you’ll have 14 days to take every single tool the SE Ranking platform offers for a spin.

Meanwhile, let’s take a closer look at other Website Audit upgrades. We have plenty of things to share with you, since the new Website Audit is not just ten times faster, but it’s also way more accurate and fully customizable.

Dozens of new parameters that matter

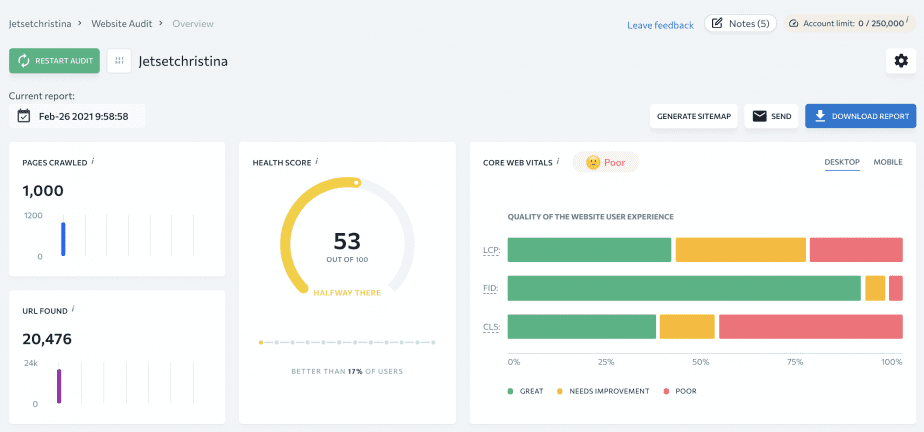

The new audit has 110 website parameters under its belt, and they are conveniently divided into 16 categories. You’ll find a graph with all of them on the main dashboard—it will help you quickly grasp which areas need to be improved ASAP, be it website security, website crawling or duplicate content. By clicking the category icon, you’ll immediately get to the respective section of the Issue Report.

Thanks to the dozens of new parameters, the new Website Audit will not simply tell you what kind of problem you have on your website but it will pinpoint what exactly needs to be mended.

For example:

- The previous version of Website Audit would only tell you that you have some issues with hreflang tags. Now the report shows exactly what kind of error you need to fix (for example, you may be using multiple language codes, or your hreflang page may not link to itself).

- Previously, we only recommended that you reduce your CSS and JavaScript code size. Now the audit notifies you when your CSS and JavaScript files are not minified, compressed, or cached.
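To make the hreflang example a bit more tangible, here is a minimal sketch of what a "page does not link to itself" check could look like. It is not how Website Audit is implemented: the class and function names are made up for illustration, and the URL comparison is a plain string match with no normalization.

```python
from html.parser import HTMLParser


class HreflangCollector(HTMLParser):
    """Collects (hreflang, href) pairs from <link rel="alternate"> tags."""

    def __init__(self):
        super().__init__()
        self.alternates = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rel = (attr.get("rel") or "").lower().split()
        if "alternate" in rel and attr.get("hreflang") and attr.get("href"):
            self.alternates.append((attr["hreflang"].lower(), attr["href"]))


def links_to_itself(page_url, html):
    """Return True if one of the page's hreflang annotations points back to page_url.

    Assumption: URLs are compared as exact strings (hypothetical simplification).
    """
    collector = HreflangCollector()
    collector.feed(html)
    return any(href == page_url for _, href in collector.alternates)


sample = """
<head>
  <link rel="alternate" hreflang="en" href="https://example.com/en/">
  <link rel="alternate" hreflang="de" href="https://example.com/de/">
</head>
"""

print(links_to_itself("https://example.com/en/", sample))  # True
print(links_to_itself("https://example.com/fr/", sample))  # False
```

A real crawler would also normalize URLs (trailing slashes, protocol, case) before comparing them.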

If you’re new to SEO and all the terms we mentioned sound like gibberish to you, don’t you worry. By simply clicking the parameter name, you’ll see a comprehensive issue description and a short how-to-fix guide. On the other hand, if you are an SEO pro, click the number of detected issues to immediately see the full list of affected pages.

Now, let me mention a few more parameters. The updated audit analyzes many SEO-critical issues in greater detail. For example:

- The tool not only checks if the website has an XML sitemap but also measures its size and goes to your robots.txt file to see if it contains a link to the sitemap. Moreover, the audit scans the XML sitemap file itself to find broken links, noindex and noncanonical pages, etc.

- Website Audit looks for noindex and nofollow directives not just in your website’s HTML code, but also in the X-Robots-Tag HTTP header. If you happen to set the same directives both in the page code and in the X-Robots-Tag header, the tool will spot it.

- The updated Website Audit not only reminds you that it’s high time to switch to HTTPS but also checks the quality of your SSL certificate: whether it is properly registered and whether it uses up-to-date encryption and an up-to-date protocol. If the tool finds out that your SSL certificate is about to expire, you’ll get notified as well.
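If you are curious what two of these checks boil down to, here is a rough sketch, assuming you already have the robots.txt body, the robots meta tag content, and the X-Robots-Tag header value as strings. The function names are hypothetical and the parsing is deliberately simplified (for instance, it ignores user-agent prefixes inside X-Robots-Tag); it only illustrates the idea, not the tool’s internals.

```python
def sitemaps_in_robots(robots_txt):
    """Return the URLs listed in 'Sitemap:' lines of a robots.txt body."""
    urls = []
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop trailing comments
        field, _, value = line.partition(":")
        if field.strip().lower() == "sitemap" and value.strip():
            urls.append(value.strip())
    return urls


def parse_directives(value):
    """Split a comma-separated robots value into a set of lowercase directives."""
    return {part.strip().lower() for part in value.split(",") if part.strip()}


def duplicated_directives(meta_content, header_value):
    """Directives that appear both in the robots meta tag and in X-Robots-Tag."""
    return parse_directives(meta_content) & parse_directives(header_value)


robots = "User-agent: *\nDisallow: /admin/\nSitemap: https://example.com/sitemap.xml\n"
print(sitemaps_in_robots(robots))
print(duplicated_directives("noindex, follow", "noindex, nofollow"))
```

An empty list from `sitemaps_in_robots` would mean robots.txt does not point to a sitemap, and a non-empty set from `duplicated_directives` would mean the same directive is declared in two places.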

More accurate health score and issue significance scale

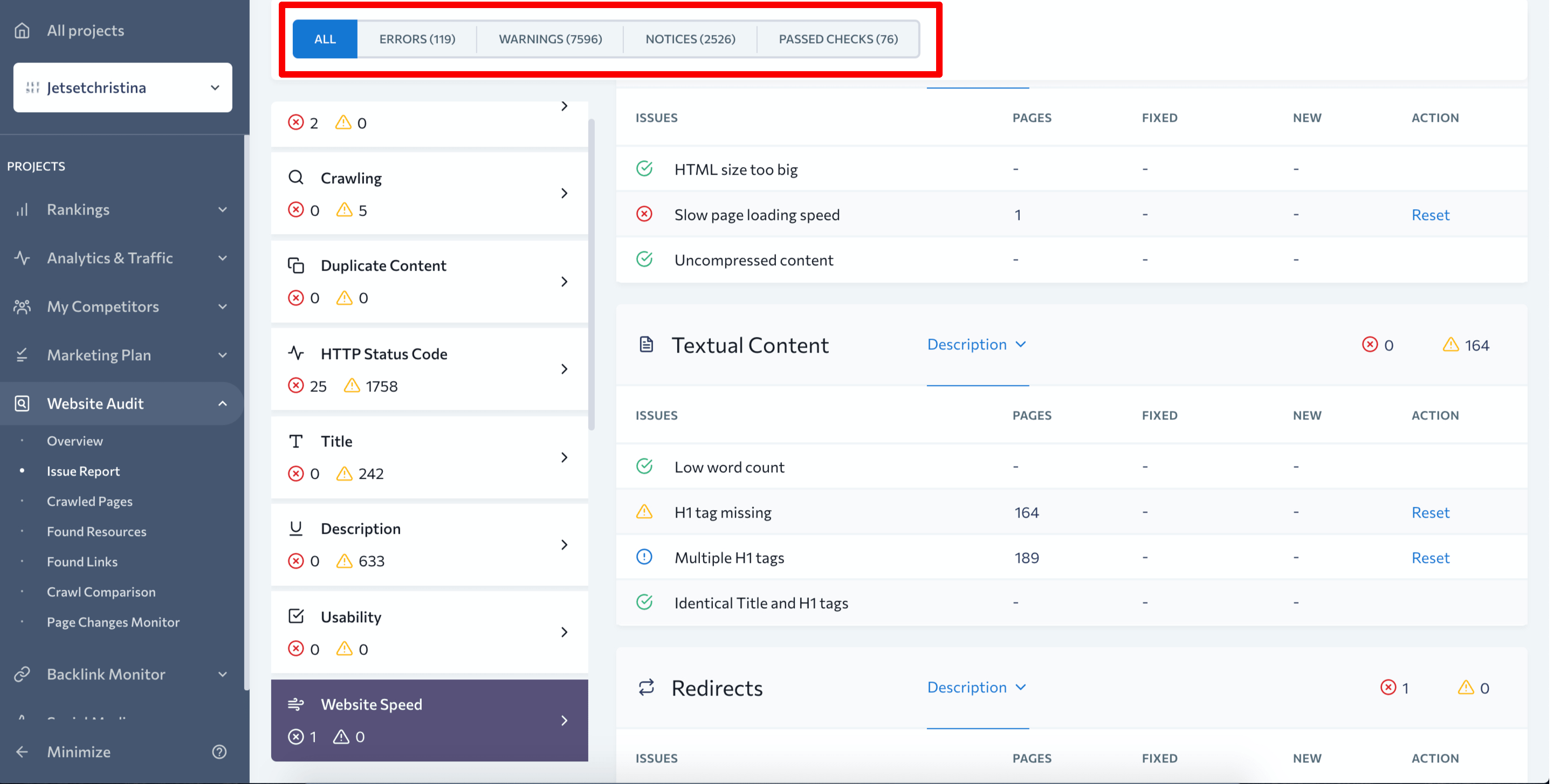

In the previous version of the Website Audit tool, we divided all the issues into important fixes and semi-important fixes. The new scale is more precise:

- Most severe issues are now called Errors—you need to fix those yesterday. For example, duplicate pages, mixed content, and critically low loading speed are all classified as errors.

- Less important issues are now called Warnings—they are not as critical as errors, but can still harm your website SEO. Issues with your XML sitemap, missing alt tags, or excessively long title tags are all marked as warnings.

- Notices are issues you need to pay attention to, but you may as well leave them as they are. For example, if some of your internal links have no anchor text or some of your website pages have short text content, this isn’t necessarily bad.

On the main dashboard, you’ll find a chart with all issues broken down into circles according to their significance. And if you go to the Issue Report, you’ll be able to filter out Errors, Warnings, and Notices in one click.

There’s a direct correlation between your website’s Health score and the type of issues that prevail on your site. If the audit has detected plenty of issues, but they are not really critical, you’ll get a higher score. If, on the other hand, there are only a few issues, but they are mostly errors, your website will get a lower score.
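To illustrate the idea (and only the idea), here is a toy model of a severity-weighted score. The actual formula behind the Health score is not described in this post, so the weights and the scaling below are pure assumptions chosen for the example.

```python
# Hypothetical weights: NOT the values Website Audit uses.
WEIGHTS = {"error": 5.0, "warning": 2.0, "notice": 0.5}


def toy_health_score(issue_counts, pages_crawled):
    """Return a 0-100 score where heavier issue types cost more points.

    issue_counts maps a severity name to the number of detected issues.
    """
    if pages_crawled <= 0:
        raise ValueError("pages_crawled must be positive")
    penalty = sum(WEIGHTS[severity] * count for severity, count in issue_counts.items())
    worst_case = max(WEIGHTS.values()) * pages_crawled
    return round(max(0.0, 100 - 100 * penalty / worst_case))


many_minor = toy_health_score({"notice": 40}, pages_crawled=100)
few_severe = toy_health_score({"error": 10}, pages_crawled=100)
print(many_minor, few_severe)
```

In this toy model, a site with 40 notices still scores higher than a site with only 10 errors, which mirrors the behavior described above.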

Easy to measure your progress

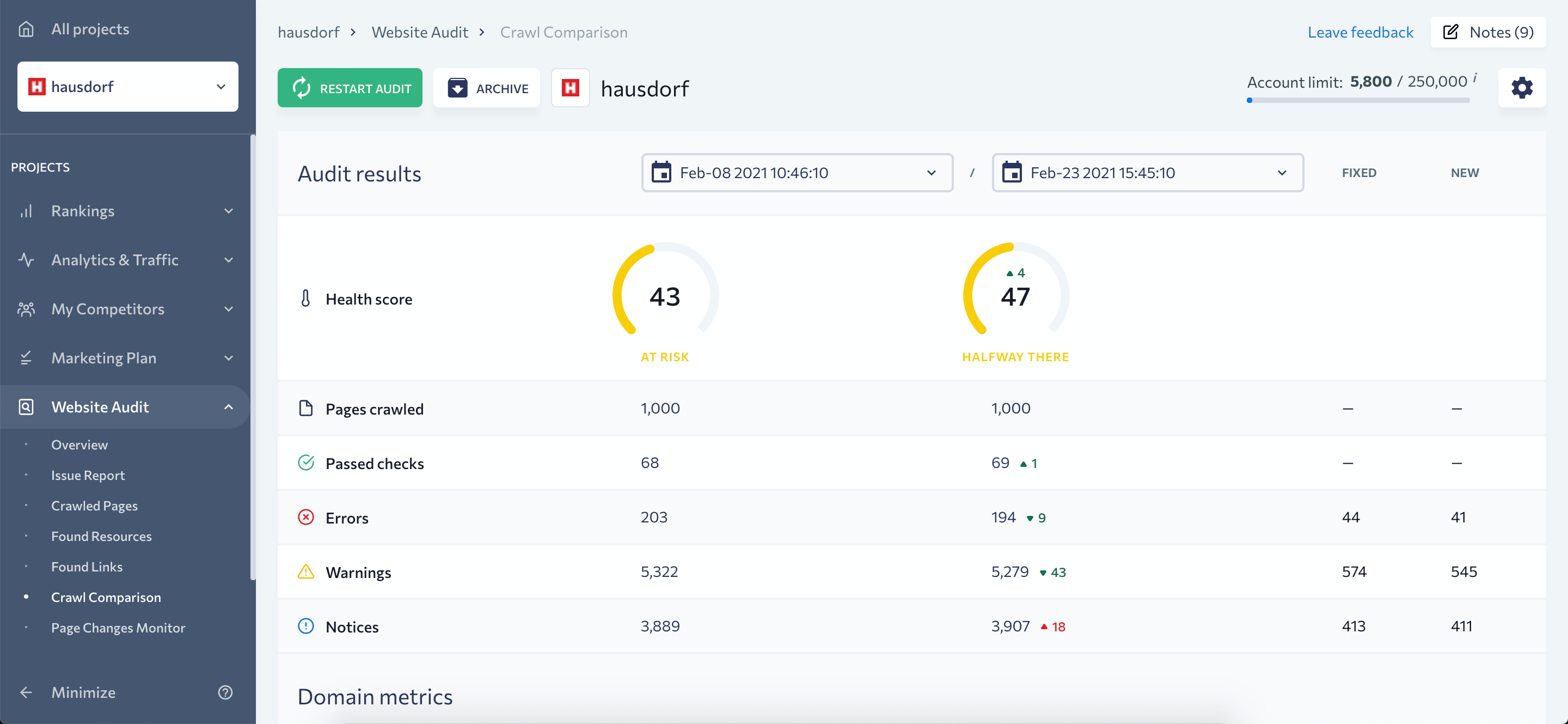

Can you picture yourself fixing some issues and still seeing them in the report after you restart an audit? This is when you start wondering whether your dev team has failed to fix some problems or if those detected issues are brand new.

With the new Website Audit, you won’t have to wonder: you’ll immediately see how many issues have been fixed along with the number of new problems. Moreover, once you fix an issue, you can make use of the reset option—parameters you reset won’t influence your health score. That way, if you only have a few things to fix, there’s no need to restart the audit to get an up-to-date report. This also conveniently saves your account limits.
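Conceptually, telling fixed issues from new ones is a set comparison between two crawls. Here is a minimal sketch, assuming each crawl is represented as a set of (issue name, URL) pairs; this representation is made up for the example and is not the tool’s data format.

```python
def compare_crawls(previous, current):
    """Split issues into fixed, new, and remaining between two crawls.

    Both arguments are sets of (issue_name, url) pairs (hypothetical format).
    """
    return {
        "fixed": previous - current,      # present before, gone now
        "new": current - previous,        # absent before, present now
        "remaining": previous & current,  # still unresolved
    }


previous_crawl = {("Missing alt text", "/about"), ("Broken link", "/blog")}
current_crawl = {("Broken link", "/blog"), ("Title too long", "/pricing")}

result = compare_crawls(previous_crawl, current_crawl)
for bucket, issues in result.items():
    print(bucket, sorted(issues))
```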

To compare the general results of two audits (your health score, the number of errors, warnings, and notices), go to the Crawl Comparison section. Here you’ll also find an Archive with your old website audits. As you must have already noticed, the updated Website Audit has changed a lot, so you can’t adequately compare old and new crawls. So don’t feel frustrated when you see the “We haven’t audited your website yet.” message on the Website Audit home screen. Your older crawls are still there; they’ve just been moved to the Archive section.

Data presented in several formats

When upgrading SE Ranking tools, we try to meet the needs of all our users. Managers, marketers, SEO pros and newbies all use Website Audit because they care about their websites’ technical health. Still, while they all want to know which issues our tool has found, they prefer getting data in different formats.

Managers will appreciate our main dashboard where all major metrics are presented as graphs and charts. Here you can check the website’s health score, Core Web Vitals metrics, the list of the most critical errors, data indexation, and other important parameters.

Marketers and SEO newbies should check out the Issue Report—it has all the necessary data to help you figure out why some issues are a problem and how they can be fixed. In addition, depending on whether an issue is classified as an error, a warning or a notice, you can easily prioritize the most important fixes.

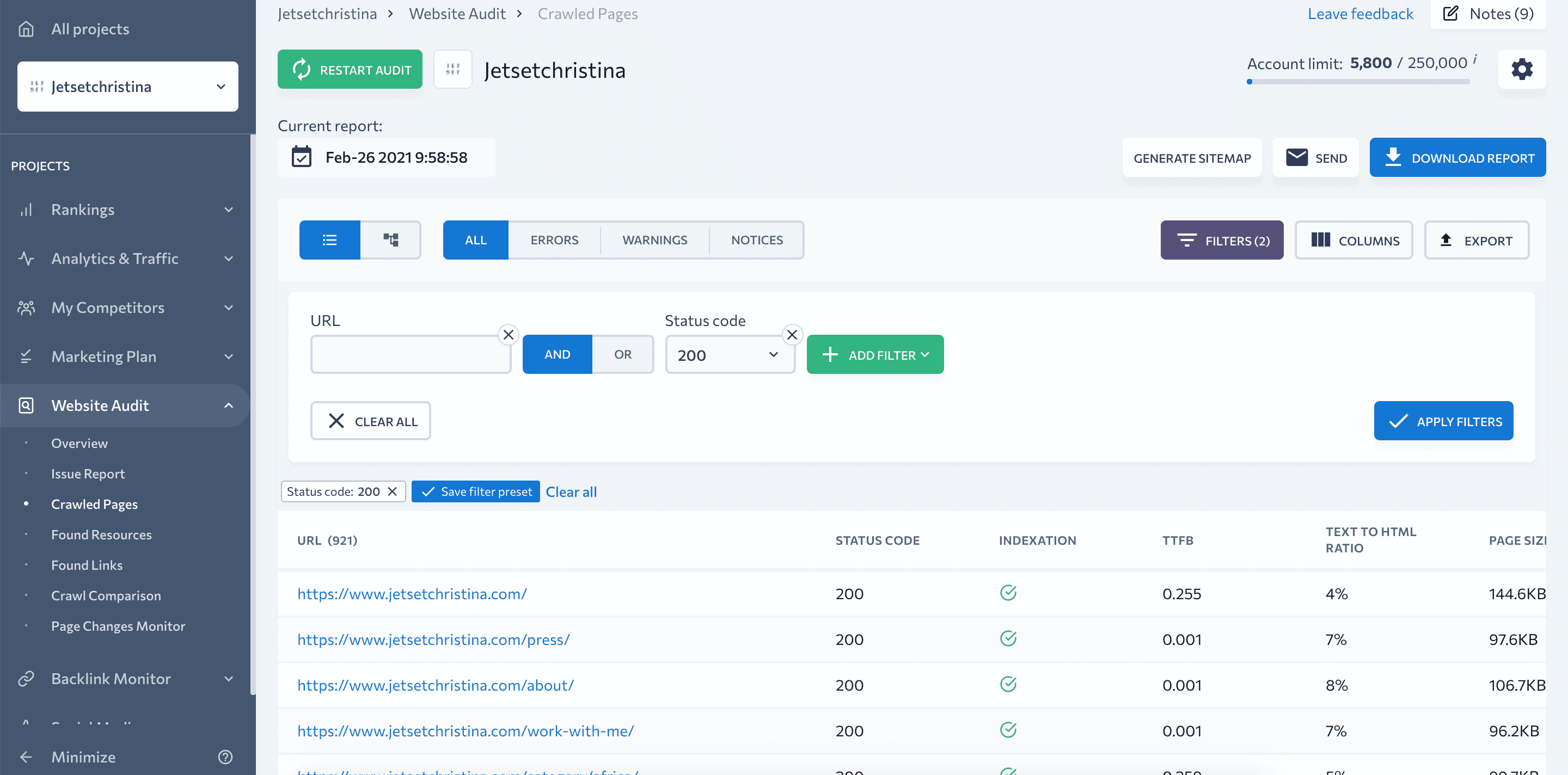

Seasoned SEO specialists may also be interested in the Crawled Pages, Found Links, and Found Resources sections in addition to studying the report in detail. Here you can find all the major metrics for every single page, link, or resource. For links, you can check their type, status code, and source URL. For resources, you can find data on their size, status code, type, and source URL. As for crawled pages, they come with dozens of metrics, including new parameters such as page speed, TTFB, refresh redirect time, content hash, and many others.

You can use columns and filters to set your own criteria and find the matching pages, links, and resources. For example, that way you can work on improving your website structure and internal linking. Or you can collect a list of slow pages that return a 200 status code and are open for indexing. By analyzing all relevant parameters, such as TTFB, page size and image size, text-to-HTML ratio, and CSS and JS size, you can understand which resources need to be optimized to improve page speed.
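If you prefer to think of such a filter in code, it could look roughly like this. The `Page` fields and the 600 ms threshold are assumptions made for the example; they do not describe the tool’s export format or its definition of "slow".

```python
from dataclasses import dataclass


@dataclass
class Page:
    # Hypothetical fields, loosely modeled on the metrics mentioned above.
    url: str
    status_code: int
    indexable: bool
    ttfb_ms: float


def slow_indexable_pages(pages, ttfb_threshold_ms=600):
    """Return 200-status, indexable pages whose TTFB exceeds the threshold, slowest first."""
    matches = [
        page for page in pages
        if page.status_code == 200 and page.indexable and page.ttfb_ms > ttfb_threshold_ms
    ]
    return sorted(matches, key=lambda page: page.ttfb_ms, reverse=True)


crawled = [
    Page("/", 200, True, 180),
    Page("/catalog", 200, True, 950),
    Page("/search", 200, False, 1200),  # slow, but closed for indexing
    Page("/old", 404, True, 700),       # slow, but not a 200 page
    Page("/blog", 200, True, 640),
]

for page in slow_indexable_pages(crawled):
    print(page.url, page.ttfb_ms)
```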

The tool adapts to your needs

Back in 2020, we made tool customization one of our top priorities. We want our platform to adapt to the individual needs of our users, so we added custom settings to SE Ranking tools. With this big update, Website Audit now also has custom settings: you can choose which issues you want to monitor and which ones should be dropped by simply toggling the switch. If you believe some issues are irrelevant to your website, just turn them off, and they won’t count toward your general health score. And in case you change your mind, you can easily update the settings accordingly.

We also want to remind you that we are always happy to hear back from you. If you want us to add some specific issues to Website Audit, use the feedback form that you’ll find on the dashboard.

In any case, we’ll keep upgrading Website Audit to make sure it checks every critical parameter. We’ll also keep increasing capacities so that the tool can crawl your website even faster. Our ambitious goal is to seamlessly crawl websites with a million pages.

We’ll keep you posted about all the new features on this blog, so stay tuned. Meanwhile, check what health score your website got, test the tool out, and share your thoughts in the comments section below.